Disclaimer: This is a translation of an article. All rights belong to the author of the original article and to Miro.

I'm a QA Engineer at Miro. I'd like to tell you about our experiment in partially transferring testing tasks to developers and transforming the Test Engineer role into QA (Quality Assurance).

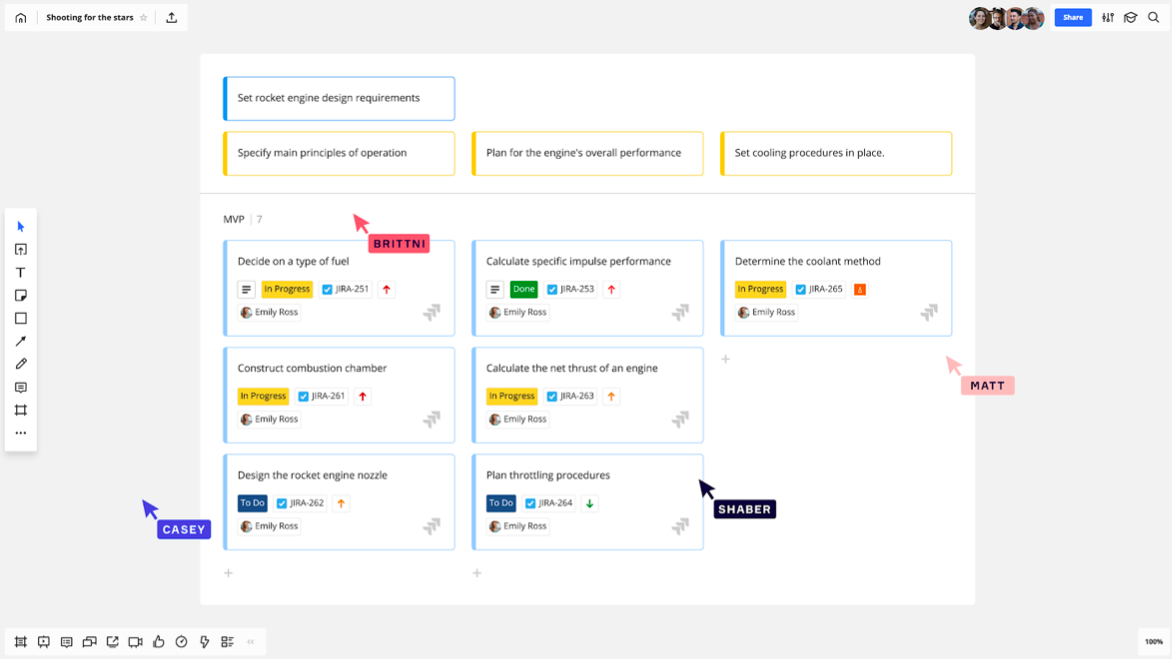

First, briefly about our development process. We release the client side daily and the server side 3 to 5 times a week. The team has 60+ people split into 10 functional Scrum teams.

I work in the Integration team. Our tasks are:

- Integration of our service into external products

- Integration of external products into our service

For example, we have integrated Jira. Jira Cards are a visual representation of Jira tasks, so you can work with tasks without opening Jira at all.

How the experiment started

It all started with a trivial issue. Whenever one of our Test Engineers went on sick leave, team performance degraded significantly. The team kept working on tasks, but as soon as the code reached the testing phase, the task was put on hold. As a result, new functionality did not reach production on time.

A Test Engineer's vacation is an even more complex story. He or she has to find another Test Engineer who is ready to take on extra tasks and then hand over the necessary knowledge. Two Test Engineers going on vacation at the same time is a luxury we cannot afford.

We started thinking about how to solve this problem and found that the root cause was that the testing phase had become a bottleneck. Here are a few examples.

Case 1: Task ping-pong

There is me, and there is a Developer. Each of us has our own tasks. The Developer finishes a task and sends it to me for testing. Since this new task has a higher priority, I switch to it. I find defects, log them in Jira, and return the task to the Developer for fixes.

I switch back to my previous tasks. The Developer switches from their current tasks to the returned one. After the fixes, the Developer sends the task back to me for retesting. I find defects again and return the task once more. This can go on for a long time.

As a result, the total time spent on a task grows severalfold, and so does time to market, which is critical for us as a product company. There are a few reasons for this growth in effort:

- The task constantly moves between the Test Engineer and the Developer.

- The task spends time waiting for the Test Engineer or the Developer.

- The Developer and the Test Engineer have to switch context between tasks frequently, which costs additional time and mental energy.

Case 2: The task queue keeps growing

There are several developers in our Scrum team. They regularly send me tasks for testing. This forms a queue, which I process by priority.

That's how we worked for about a year. During that time, however, the company grew. The number of projects and developers increased, and so did the number of tasks for testing. Meanwhile, I was still the only Test Engineer in our Scrum team, and I could not multiply my own throughput. As a result, more and more tasks got stuck in the testing queue, and the ping-pong process increased waiting times even further.

Then I suddenly got sick. Delivery of new features from our Scrum team to production stopped completely.

What should the team do in this situation? It's possible to ask a Test Engineer from another team for help. However, that Test Engineer lacks the context and has not received a knowledge transfer in advance, which hurts quality and schedules in both teams.

The conclusion from both cases is the same: teams depend too much on Test Engineers.

- The team's velocity is limited by the Test Engineer's velocity.

- The number of developers can't be increased without adding Test Engineers, because there is no point in speeding up development if all finished tasks get stuck in the testing queue.

- When a Test Engineer verifies a task after the Developer, the Developer's sense of responsibility for quality decreases.

- If the Test Engineer is unavailable, the delivery process suffers.

Why not add more Test Engineers?

The obvious idea is to increase the number of Test Engineers. That works up to a point: the number of tasks keeps growing, but it is impossible to keep adding Test Engineers indefinitely. It would be too costly and inefficient.

It is more efficient to maintain velocity and quality with the current resources. That's why we decided to launch an experiment that would help teams build functionality with quality built in, taking risks and corner cases into account. We called it "Transform tester to QA" because it is about transforming a role: from monkey testers hunting for bugs to QA engineers who consciously influence and ensure quality across all SDLC phases.

Let's improve development processes

Experiment objectives:

- Remove the team's dependency on Test Engineers without losing quality or timing.

- Improve the level of quality assurance provided by QA engineers and teams.

The first important step was to change the team's mindset. Everyone assumed that Test Engineers alone cared about and were responsible for quality.

Our idea was that extra effort in task preparation and verification would reduce the number of ping-pong iterations. That, in turn, would let us deliver new functionality to production while keeping velocity and quality at acceptable levels, or even improving them.

My Scrum team, together with Test Engineers from other teams, created a new process. We have been working according to it for half a year, fixing a few issues that came up along the way, and now the process looks like this:

- Presentation of the task statement.

- Technical solution and test scenario.

- Development and verification.

- Release.

Task statement

The Product Owner presents the task statement to the team. The team analyzes it to discover corner cases from both technical and product points of view. If questions arise that need clarification or investigation, they are tracked as a separate task with dedicated time in the sprint.

Technical solution

The outcome of the analysis is a Technical Solution written by one or more developers. All relevant teammates, including QA, must review and agree on it. The Technical Solution describes the purpose of the solution, the product's functional blocks that will be affected, and the planned code changes.

A Technical Solution like this helps the team find a lighter and more appropriate approach, drawing on the experience of everyone involved in the development process. With a detailed Technical Solution, it's easier to discover and avoid blocking issues and easier to conduct code review.

Here is an example of some blocks in a Technical Solution:

Task description

Are new entities or approaches being introduced into the system?

If yes, they are described along with an explanation of why the task can't be solved with existing approaches.

Are interactions between servers within the cluster changing? Are new cluster roles being introduced?

Is the data model changing?

For the server, this concerns objects and models.

If the data model is complex, it can be represented as a UML diagram or a text description.

Is client-server interaction changing?

A description of the changes. If it's an API, can we publish it? Don't forget about exception handling, i.e., logging the correct reasons.

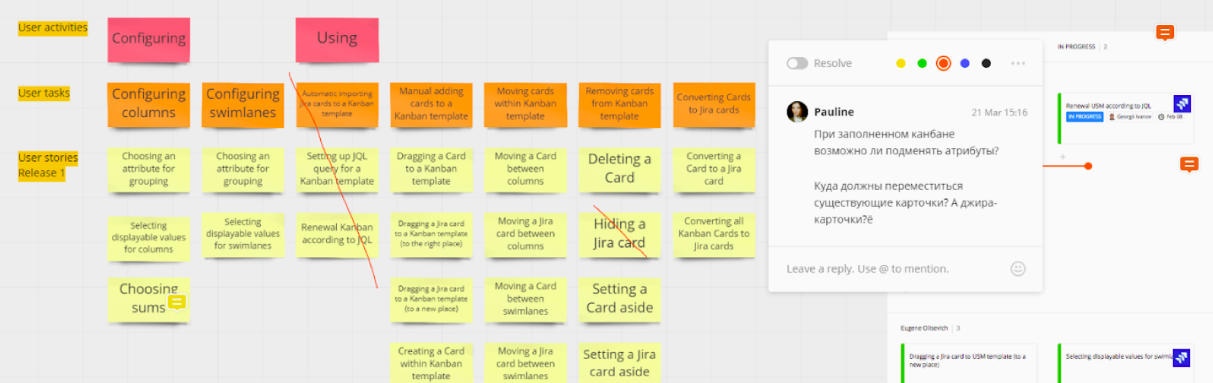

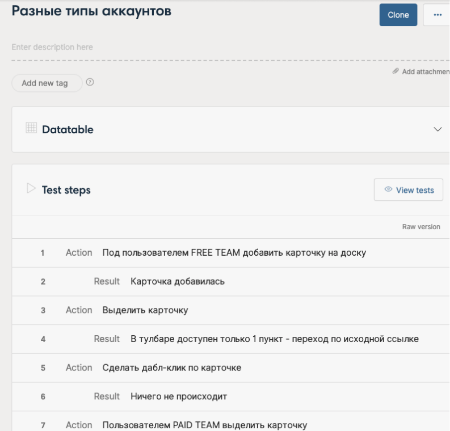

Test scenario

In parallel, Developers or QA write test scenarios that take corner cases and potential problem areas into account. If a Developer writes the test scenario, QA must review it for completeness. If QA writes it, the Developer needs to review it and confirm that it is clear how to implement each point. The team also checks test scenarios for correctness.

We use HipTest to create and store test scenarios.

Development and verification

The Developer writes the application code based on the Technical Solution, taking corner cases and test scenarios into account, gets it through code review, and verifies the feature against the test scenarios. Then they merge the branch into master.

At this stage, QA supports the Developer, for example by helping reproduce complex test cases, configuring test environments, conducting load testing, and advising on delivering big features to production.

Release of done functionality

This is the final stage. Here the team may need to perform pre- and post-release actions, for example, toggling on new functionality for beta users.

Documentation and tools

The new development process required Developers to be more deeply involved in testing.

Therefore, it was important that I, as QA, supplied them with all the necessary tools and taught them functional testing fundamentals. Together with QA engineers from other teams, we compiled a list of necessary documentation, wrote the missing testing instructions, and updated existing ones to cover things that are not obvious to Developers.

Then we gave Developers access to all testing tools and test environments, and we started testing together. At first, Developers didn't want to take responsibility for testing results because no one would check after them. It was unusual: each Developer had only the test scenario, the functionality they had built, and the Merge button. But they adapted quickly.

Results of the Experiment

Half a year has passed since the start of the experiment. Here is a graph of the number of bugs per week. All discovered bugs are shown in red, and fixed bugs in green. The yellow line marks the start of the experiment.

The red line stays at the same level, except for a spike at the beginning of the experiment.

The number of bugs did not increase, so we kept our current level of quality.

At the same time, the average time spent on a task decreased by 2%: 12 hours 40 minutes before the experiment vs. 12 hours 25 minutes after. This means we managed to maintain velocity.
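The 2% figure follows directly from the two averages quoted in the results. The short Python snippet below is only a sanity check of that arithmetic, converting both averages to minutes and computing the relative decrease; it is not part of Miro's tooling.

```python
# Average time per task reported in the article, converted to minutes.
before_min = 12 * 60 + 40  # 12 h 40 min = 760 min
after_min = 12 * 60 + 25   # 12 h 25 min = 745 min

# Relative decrease = (before - after) / before
decrease = (before_min - after_min) / before_min

print(f"{before_min - after_min} min saved, {decrease:.1%} decrease")
```

Running it prints `15 min saved, 2.0% decrease`: 15 minutes out of 760 is about 1.97%, which rounds to the 2% stated in the text.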

As a result, the team no longer has a hard dependency on QA. If I get sick or go on vacation, the team will continue development without losing velocity or quality.

Do we consider the experiment successful even though velocity and quality stayed the same? Yes, because at the same time we freed up QA capacity for deliberate work on the product and the QA strategy: for example, exploratory testing, increasing functional test coverage, and describing principles and rules for writing test documentation across all teams.

We have also spread the experiment's mindset to other teams. For example, in one team QA no longer tests what the Developer describes in a pull request, because the Developer can verify it themselves. In another team, QA reviews pull requests and tests only complex and non-obvious corner cases.

What to bear in mind before launching such an experiment

It's a long and complex path. It can't be implemented overnight. It requires preparation and patience, and it doesn't promise quick wins.

Be ready for resistance from the team. You can't just announce that from now on Developers will verify functionality. It's important to prepare them for it, work out approaches, and explain the benefits of the experiment for the team and the product.

Some velocity loss at the beginning is unavoidable. The time spent on knowledge transfer to Developers, creating missing documentation, and changing processes is an investment.

There is no silver bullet. Similar processes are used, for example, at Atlassian, but that doesn't mean you can implement them in your company as is. It's important to adapt the experiment to your company's culture and specifics.