What do you get when you cross an IT department, a failed sprint review, determination, and pizza? Greatness, that’s what.

Our first attempt to introduce sprint reviews at Dodo Pizza failed spectacularly. You would think, perhaps, that a pizza chain doesn’t need Scrum practices at all. However, strange as it may seem, one of the key advantages of Dodo Pizza is its own IT system that controls all the working processes of 430 pizzerias in 11 countries.

More than 60 programmers and analysts work on our system now, and we’re planning to increase that number to more than 200. Like any fast-growing start-up, we are aiming for maximum efficiency, so we use Agile frameworks a lot, including Scrum, LeSS, and extreme programming. But how do you employ Scrum without sprint reviews? That’s the question you might ask—and you would be right to.

As we know, a sprint review sets the work rhythm for a team and motivates it to finish the task by the end of the sprint. More importantly, a sprint review helps create a product that is valuable for the business, not just solve problems from the backlog. At least, that’s what the books say.

But somehow, this approach never worked for us. For instance, at one of our first sprint reviews, we presented a new website (dodopizza.kz) to our Kazakhstan franchisees. The feedback was inspiring, our partners told us that the website was looking great, and comparing to the competitors, it was practically a masterpiece. But after its launch, it turned out that it was seriously lacking. So we’d wasted time on a sprint review but hadn’t gotten any useful feedback.

Soon, we quietly stopped these sprint reviews altogether.

Several months passed, and I decided to try again. At that point, we had eight teams working on one backlog in the LeSS framework. We tried to adhere to all Large Scale Scrum rules, and the cancellation of sprint reviews was a violation.

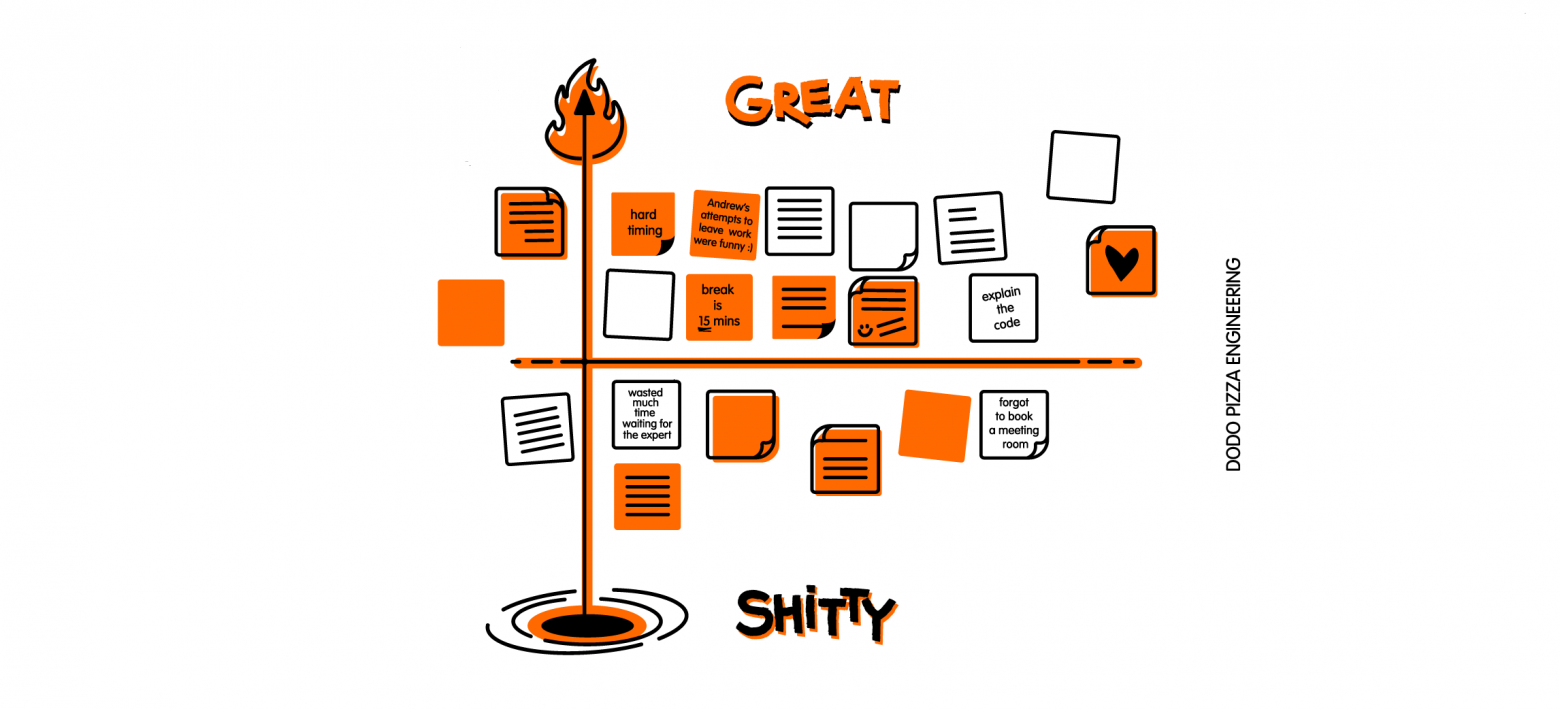

I braced myself for bad initial results—it was clear we would have to find the right format through trial and error. After every review, I asked the participants to assess it on a scale of 1 to 10. Initially, our grades were very low, but we didn’t give up and continued to experiment, so in a couple of months, the reviews began to move to the upper part of the scale.

This is what we did differently.

It’s become clear that the sprint review preparation demands no less time than the sprint review itself, or even more. A review takes two hours, and I spend three hours preparing for it. There are review goals to discuss and agree on with the team, partners, the parent company managers, employees, and other guests to talk to, conference rooms to book, a poster to make, facilitators to find and instruct, a timetable to put together, feedback flip charts to hang, etc. And without all that, chaos ensues.

At first, we showed half-ready features at sprint reviews. Then it occurred to us that this meant cheating our teams and, even worse, our clients. We showed the CEO a mapping service caching cartographic data once. At the next review, we showed it again, with bugs fixed. When we brought it to him the third time and showed it on the live website, he had a very legitimate question: why the hell does he have to look at the same thing over and over again? Now, at sprint reviews, we show only features that are on a live site already. If something is nearly done and we just need to fix bugs, test it, and roll it out, we simply don’t show it yet.

LeSS creators recommend conducting a sprint review as a “bazaar,” where all teams demonstrate their work in a large room, and people visit those stations they are interested in. We tried that several times, and it was a lot of noise and confusion.

Laptop screens are small and uncomfortable, you can’t concentrate very well because of the noise at other stations, and you get chaos when people are constantly wandering about. So now we all gather in a large conference room only at the beginning and the end of the review. The main action takes place in small conference rooms, where each team presents its work. It’s more comfortable, there is all the necessary equipment, big screens, and Internet access for remote participants, and you can gather feedback in peace.

At first, stakeholders walked freely between teams, but it was annoying. Imagine that you’re doing your presentation, have been speaking for ten minutes, and then somebody comes up and asks you questions about those things you’ve just covered.

If you answer, everybody else is bored. If you don’t, this person may resent it. So we’ve decided not to allow wandering between groups. If you’ve chosen a subject, please, sit in a conference room and wait 20 minutes till the next break.

We’ve realized that the guest list is very important. Nothing motivates a developer more than a company CEO visiting a sprint review, especially when you show some technical thingamabob, like hosting a microservice in Kubernetes or migrating an Auth component to .Net Core. Then you have to explain why you even did all this stuff in the first place. Fyodor Ovchinnikov, the Dodo Pizza CEO, can charge the audience with energy, lift their spirits in three minutes, and outline the broad prospects of the company’s development, but when demonstrating front-end features, like a build-your-own-pizza feature in our mobile app, we invite outside guests, mostly acquaintances and friends from Facebook.

It turns out that the presenter makes up half of the success of an interesting and exciting sprint review. Many people have played that role; I tried it first, with the assistance of our Scrum masters.

Then Sergey Gryazev, our mobile app product owner, became a presenter, and now we can’t imagine such events without his jokes. And recently, we acquired a ritual artifact, the Speaker’s Cap. The speaker should put a cap on. It doesn’t mean anything special, it’s just a ritual, but it cheers everybody up.

It’s easy to conduct a sprint review when everybody sits in the same room. But we have a lot of remote employees in Syktyvkar, Nizhny Novgorod, Kazan, and Goryachy Klyuch, and it’s important for them to be involved, too.

At first, they complained that they couldn’t hear or see practically anything, but we’ve learned to care about them as well as about our offline participants. In the sprint review preparation checklist, there are reminders that we need to check the Internet connection and adjust the equipment. We post event updates via Slack, and recently, we began to stream our meetings on the Dodo Pizza Youtube channel.

When we’d already started to think that everything was fine and we didn’t need to improve anything else, we asked ourselves if we’re actually doing the right thing. A sprint review is a rather expensive affair, taking into account the number of participants, their wages, and the time spent. Do we use these two hours with maximum efficiency? As a result, we’ve decided not to gather feedback at sprint reviews at all. In such conditions, feedback is never comprehensive or of good quality (the Kazakhstan website review is a case in point). Besides, we gather a lot of meaningful and useful feedback during the sprint itself, questioning all interested parties, from internal customers to users.

You’d be surprised, but even in the Scrum Guide, there is not a word about gathering feedback at a sprint review. “During the Sprint Review, the Scrum Team and stakeholders collaborate about what was done in the Sprint.” The Scrum Team and stakeholders, not users. And they collaborate, not gather feedback. It’s not about that at all.

Not all the stakeholders take an active part in the development process, but all of them want to be kept informed of what’s going on there. And that’s our sprint review goal now. We still show what we’ve done, but besides that, our teams talk about the genesis of new features. What was our goal? What was going on during the sprint? What distracted or impeded us? What measures did we take to reach our goal? And it helps; this way, managers can understand, for example, why concealing a client’s email address in a receipt with asterisks is not a trivial task at all and not just “half an hour of programming,” as they thought previously. And such dialogue helps our software developers think in terms of the clients’ problems, not getting carried away by the solution they’re working on.

These are the main things we’ve changed in our approach to sprint reviews. There is definitely progress, but there is also still room for improvement, so we continue our experiments.

Above all, we’ve understood one thing—you don’t need to obsess over the Scrum Guide recommendations too much. You should go by trial and error. There are no universal solutions; you should look for those working for you.

So, in conclusion, I just want to warn you. Don’t copy our format. It works for us, as it was born out of experimenting. Look for your own approach and you’ll succeed. What’s the worst that can happen?

Our first attempt to introduce sprint reviews at Dodo Pizza failed spectacularly. You might think that a pizza chain doesn’t need Scrum practices at all. Strange as it may seem, however, one of Dodo Pizza’s key advantages is its own IT system, which runs all the operations of 430 pizzerias in 11 countries.

More than 60 programmers and analysts work on our system now, and we’re planning to grow that number to more than 200. Like any fast-growing start-up, we aim for maximum efficiency, so we rely heavily on Agile frameworks and practices, including Scrum, LeSS, and Extreme Programming. But how do you do Scrum without sprint reviews? You might well ask, and you’d be right to.

As we know, a sprint review sets a team’s work rhythm and motivates it to finish its work by the end of the sprint. More importantly, a sprint review helps the team build a product that is valuable to the business rather than just close items in the backlog. At least, that’s what the books say.

But somehow, this approach never worked for us. At one of our first sprint reviews, for instance, we presented a new website (dodopizza.kz) to our franchisees in Kazakhstan. The feedback was inspiring: our partners told us that the website looked great and that, compared to the competitors’ sites, it was practically a masterpiece. After launch, however, it turned out to be seriously lacking. So we’d spent time on a sprint review without getting any useful feedback.

Soon, we quietly stopped these sprint reviews altogether.

Several months passed, and I decided to try again. By then, we had eight teams working from a single backlog in the LeSS (Large-Scale Scrum) framework. We tried to follow all the LeSS rules, and dropping sprint reviews violated them.

I braced myself for poor initial results: it was clear we would have to find the right format through trial and error. After every review, I asked the participants to rate it on a scale of 1 to 10. At first, the ratings were very low, but we didn’t give up and kept experimenting, and within a couple of months, the scores began to climb into the upper half of the scale.
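
The article doesn’t describe how the ratings were tallied. A minimal sketch, assuming each participant gives one whole number from 1 to 10 and a review’s score is simply the average, could look like this; the function names and the sample ratings are hypothetical.

```python
from typing import Dict, List


def average_ratings(ratings_by_review: Dict[str, List[int]]) -> Dict[str, float]:
    """Average the 1-10 ratings collected after each sprint review (hypothetical helper)."""
    averages: Dict[str, float] = {}
    for review, ratings in ratings_by_review.items():
        # Discard answers outside the 1-10 scale (typos, blank forms, and so on).
        valid = [r for r in ratings if 1 <= r <= 10]
        if valid:  # skip reviews with no usable answers
            averages[review] = round(sum(valid) / len(valid), 1)
    return averages


def in_upper_half(score: float) -> bool:
    """True if an average lies above the midpoint (5.5) of a 1-10 scale."""
    return score > 5.5


# Hypothetical ratings, for illustration only.
ratings = {
    "review 1": [3, 4, 2, 5],
    "review 2": [5, 6, 4, 5],
    "review 3": [8, 7, 9, 8],
}
for review, score in average_ratings(ratings).items():
    print(review, score, "upper half" if in_upper_half(score) else "lower half")
```

A plain average is the simplest choice; a median would be less sensitive to one or two unusually harsh or generous raters.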

This is what we did differently.

Doing Our Homework

It turned out that preparing a sprint review takes as much time as the review itself, or even more. A review lasts two hours, and I spend three hours preparing for it. I have to discuss and agree on the review goals with the team; talk to partners, managers from the parent company, employees, and other guests; book conference rooms; make a poster; find and brief facilitators; put together a timetable; hang flip charts for feedback; and so on. Without all that, chaos ensues.

If It’s Not Finished, Don’t Show It

At first, we showed half-finished features at sprint reviews. Then we realized that this meant misleading our teams and, even worse, our clients. Once, we showed the CEO a mapping service that cached cartographic data. At the next review, we showed it again, with the bugs fixed. When we brought it to him a third time, now running on the live website, he asked a perfectly legitimate question: why the hell did he have to look at the same thing over and over again? Now, at sprint reviews, we show only features that are already live. If something is nearly done and we just need to fix bugs, test it, and roll it out, we don’t show it yet.

Conference Rooms Instead of an Open Space

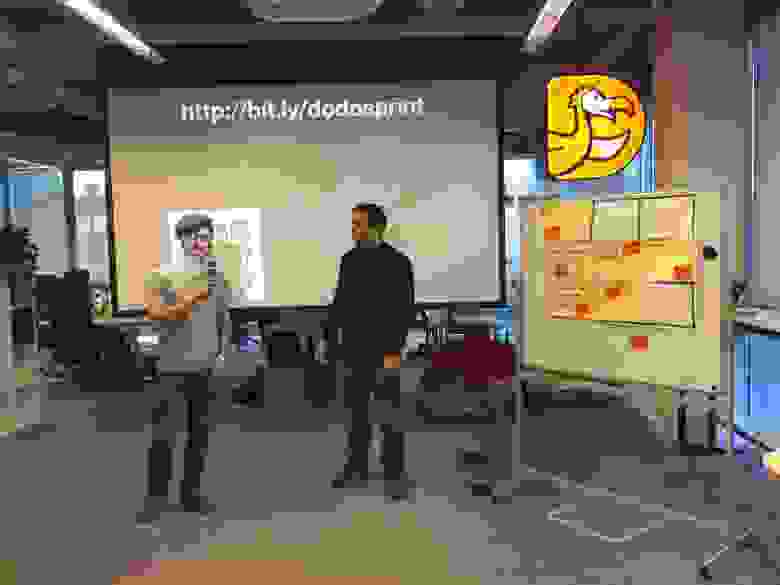

The creators of LeSS recommend running a sprint review as a “bazaar”: all teams demonstrate their work at stations in one large room, and people visit the stations they are interested in. We tried that several times, and it was nothing but noise and confusion.

Laptop screens are small and hard to see, the noise from other stations makes it difficult to concentrate, and people constantly wandering about create chaos. So now we all gather in a large conference room only at the beginning and the end of the review. The main action takes place in small conference rooms, where each team presents its work. It’s more comfortable: each room has all the necessary equipment, big screens, and Internet access for remote participants, and you can gather feedback in peace.

Wandering Is Not Allowed

At first, stakeholders moved freely between teams, but that turned out to be annoying. Imagine you’re giving a presentation, you’ve been speaking for ten minutes, and then somebody walks in and asks about the things you’ve just covered.

If you answer, everybody else gets bored. If you don’t, the latecomer may resent it. So we’ve decided not to allow wandering between groups: once you’ve chosen a topic, please stay in that conference room for the full 20 minutes until the next break.

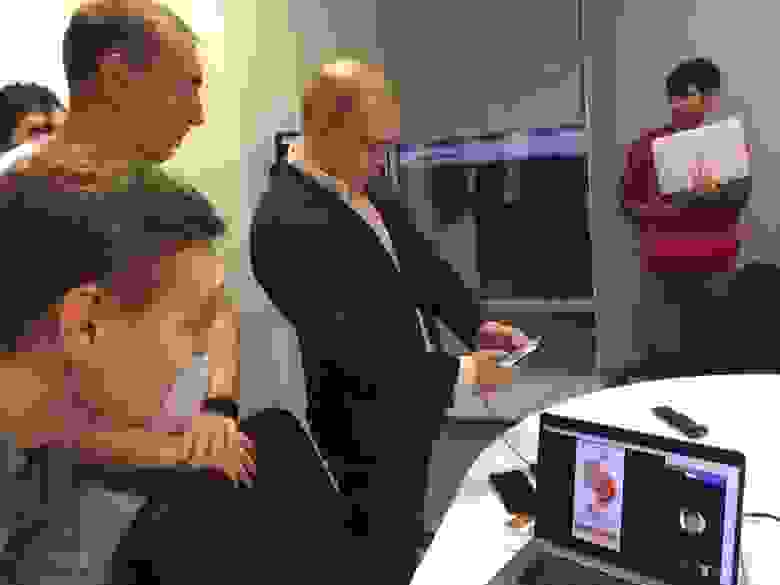

Dear Guests

We’ve realized that the guest list is very important. Nothing motivates a developer more than the company CEO visiting a sprint review, especially when you’re showing some technical thingamabob, like hosting a microservice in Kubernetes or migrating an Auth component to .NET Core. Then you have to explain why you did all this in the first place. Fyodor Ovchinnikov, the Dodo Pizza CEO, can energize the audience, lift everyone’s spirits in three minutes, and outline the broad prospects of the company’s development. When we demonstrate front-end features, however, such as the build-your-own-pizza feature in our mobile app, we invite outside guests, mostly acquaintances and friends from Facebook.

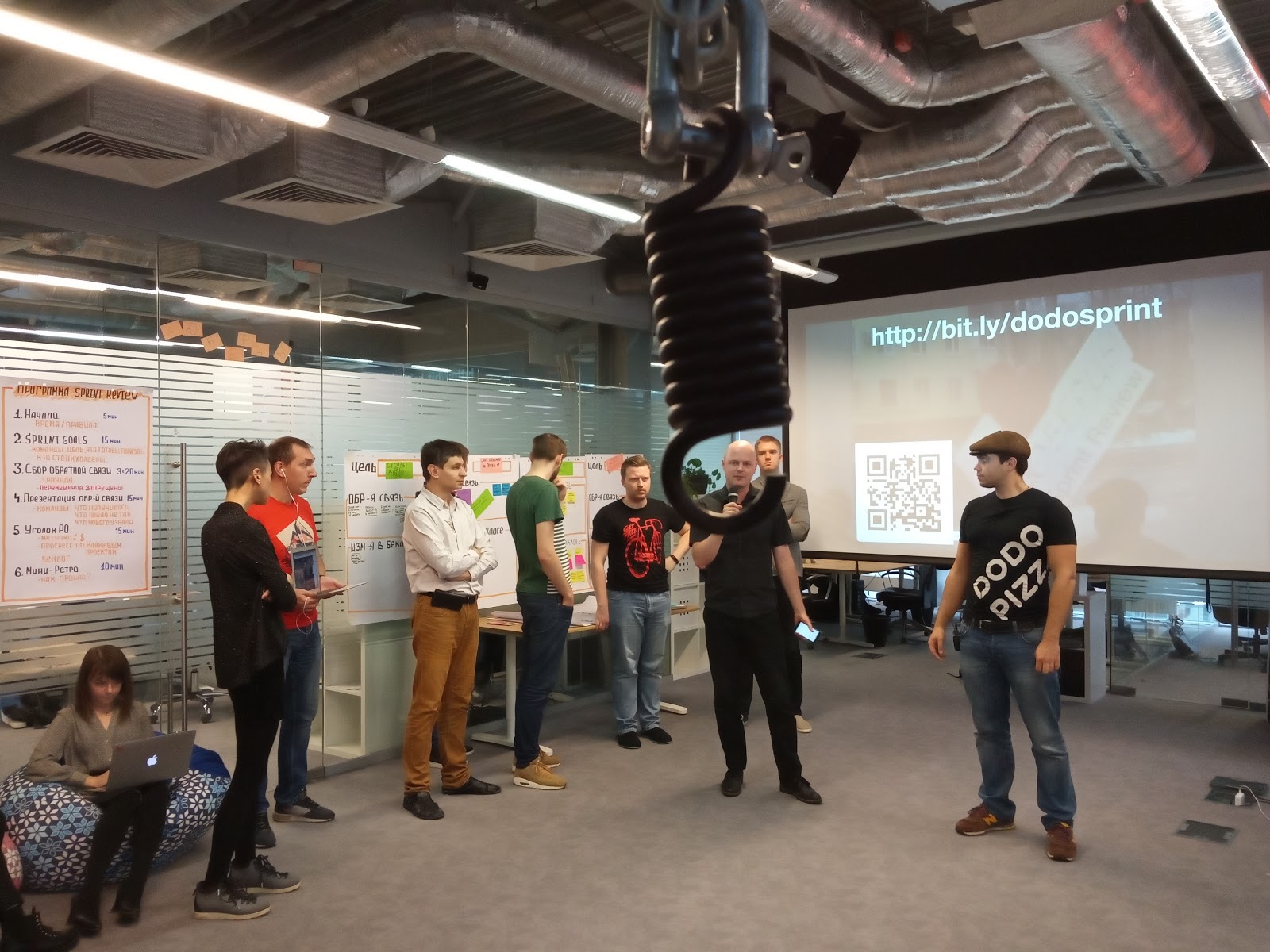

A Funny Presenter and a Cap

It turns out that the presenter accounts for half the success of an engaging sprint review. Many people have played that role: I tried it first, with help from our Scrum Masters.

Then Sergey Gryazev, our mobile app product owner, took over as presenter, and now we can’t imagine these events without his jokes. Recently, we also acquired a ritual artifact, the Speaker’s Cap: whoever is speaking puts it on. It doesn’t mean anything special; it’s just a ritual, but it cheers everybody up.

Remote Participants

It’s easy to conduct a sprint review when everybody sits in the same room. But we have a lot of remote employees in Syktyvkar, Nizhny Novgorod, Kazan, and Goryachy Klyuch, and it’s important for them to be involved, too.

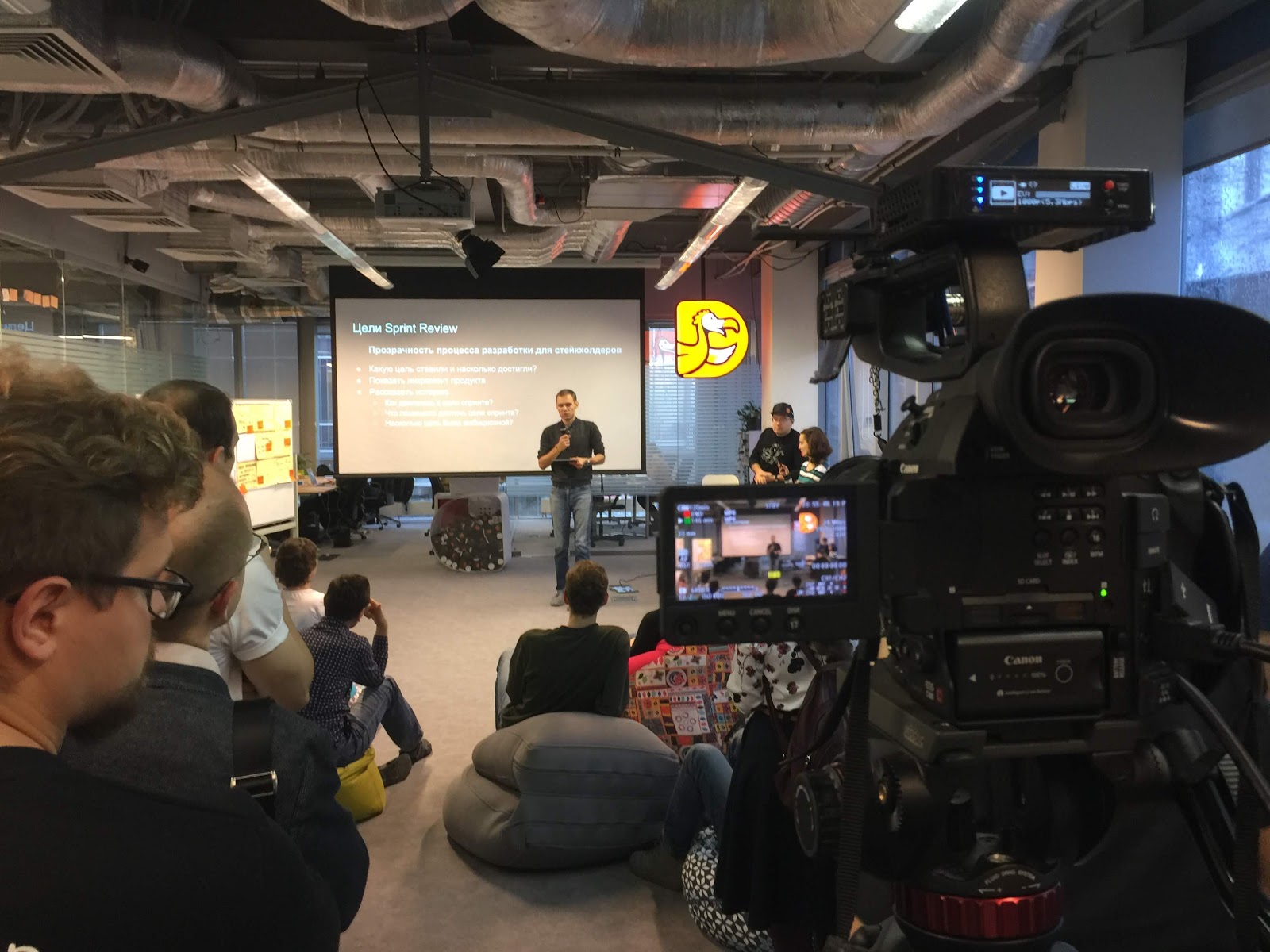

At first, they complained that they could barely hear or see anything, but we’ve learned to take care of them as well as our in-person participants. Our sprint review preparation checklist includes reminders to check the Internet connection and set up the equipment. We post event updates in Slack, and recently, we began streaming our reviews on the Dodo Pizza YouTube channel.

Don’t Gather Feedback

Just when we’d started to think that everything was fine and there was nothing left to improve, we asked ourselves whether we were actually doing the right thing. A sprint review is a rather expensive affair, considering the number of participants, their wages, and the time spent. Were we using those two hours as efficiently as possible? In the end, we decided not to gather feedback at sprint reviews at all. In that setting, feedback is never comprehensive or of high quality (the Kazakhstan website review is a case in point). Besides, we gather plenty of meaningful, useful feedback during the sprint itself by questioning all interested parties, from internal customers to end users.
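
To put a rough number on “rather expensive,” you can multiply each attendee’s hourly cost by the length of the review and add the organizer’s preparation time. The sketch below uses the two-hour review and three hours of preparation mentioned earlier; the function name, the hourly figures, and the head count are hypothetical, and it ignores the teams’ own preparation and any facilitators.

```python
from typing import Sequence


def review_cost(
    hourly_costs: Sequence[float],
    review_hours: float = 2.0,
    prep_hours: float = 3.0,
    organizer_hourly_cost: float = 0.0,
) -> float:
    """Rough cost of one sprint review: attendee time plus the organizer's preparation.

    hourly_costs holds one fully loaded hourly cost per attendee (hypothetical figures).
    """
    attendee_cost = sum(hourly_costs) * review_hours
    prep_cost = prep_hours * organizer_hourly_cost
    return attendee_cost + prep_cost


# Hypothetical example: 40 attendees at 25 currency units per hour,
# plus an organizer whose time costs 30 per hour.
cost = review_cost([25.0] * 40, organizer_hourly_cost=30.0)
print(f"Estimated cost of one review: {cost:.0f}")
```

Even with modest made-up rates, the attendee term dominates, which is why the time spent in the room deserves scrutiny.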

You’d be surprised, but even in the Scrum Guide, there is not a word about gathering feedback at a sprint review. “During the Sprint Review, the Scrum Team and stakeholders collaborate about what was done in the Sprint.” The Scrum Team and stakeholders, not users. And they collaborate, not gather feedback. It’s not about that at all.

Welcome to the Backstage

Not all stakeholders take an active part in the development process, but all of them want to be kept informed about what’s going on. Keeping them informed is now the goal of our sprint reviews. We still show what we’ve done, but our teams also talk about the backstory of new features. What was our goal? What happened during the sprint? What distracted or impeded us? What did we do to reach our goal? This helps: managers can understand, for example, why masking a client’s email address with asterisks on a receipt is not a trivial task at all, rather than “half an hour of programming,” as they previously thought. Such dialogue also helps our developers think in terms of clients’ problems instead of getting carried away by the solution they’re working on.
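
The article doesn’t say how Dodo Pizza’s receipts actually mask addresses. The hypothetical sketch below assumes a rule that keeps the first character of the part before the “@” and the whole domain, and it shows how many small decisions hide behind that “half an hour of programming.”

```python
def mask_email(email: str) -> str:
    """Mask the local part of an email address for printing on a receipt.

    Hypothetical rule, for illustration only: keep the first character of the
    local part and the whole domain, replace the rest with asterisks.
    """
    email = email.strip()
    # Split on the last "@": the domain itself can never contain one.
    local, sep, domain = email.rpartition("@")
    if not sep or not local or not domain:
        # Malformed input: mask everything rather than leak it on a receipt.
        return "*" * len(email)
    if len(local) == 1:
        # Showing the only character would reveal the whole local part.
        return "*@" + domain
    return local[0] + "*" * (len(local) - 1) + "@" + domain


for address in ["ivan.petrov@example.com", "a@b.com", "not-an-email"]:
    print(mask_email(address))
```

Each branch is a product decision someone has to make: whether the domain stays visible, what to do with one-letter local parts and malformed input, and whether the number of asterisks should reveal the original length (this sketch preserves it; a fixed-width mask would not).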

These are the main things we’ve changed in our approach to sprint reviews. There is definitely progress, but there is also still room for improvement, so we continue our experiments.

Above all, we’ve learned one thing: you don’t need to obsess over the Scrum Guide’s recommendations. Go by trial and error. There are no universal solutions; look for the ones that work for you.

So, in conclusion, a word of warning: don’t copy our format. It works for us because it grew out of our own experiments. Look for your own approach, and you’ll succeed. What’s the worst that can happen?