In Dune: Part Two, director Denis Villeneuve and cinematographer Greig Fraser made a curious decision: they filmed the scenes on the surface of the planet Giedi Prime in the infrared spectrum. The result has an interesting aesthetic, and there is some interesting physics behind it to discuss, including some speculation about how realistic the look is.

How was it filmed?

In this interview Greig Fraser talks about how they filmed these scenes in infrared. They didn’t have to use any special devices. They used regular digital motion picture cameras, but replaced the infrared blocking filter with a filter that blocks visible light and passes infrared light instead. The exact filter and its spectral characteristics are not disclosed, but this experiment showed an infrared filter transmitting at 720 nm.

Visible light is electromagnetic radiation with wavelengths in the range very roughly from 400 nm to 700 nm.

From 700 nm to 1 mm - infrared radiation (IR).

From 700 nm to 1400 nm - near infrared (NIR).

On the other side of the visible spectrum, from 10 nm to 400 nm - ultraviolet radiation (UV).

Source.

The ease of switching from filming in the visible spectrum to filming in the near infrared spectrum by simply replacing the optical filter is explained by the fact that there is no fundamental physical difference or clear boundary between visible light and near infrared light. In fact, all modern digital cameras can detect near infrared radiation by default. For everyday use the IR must be filtered out, or the images will be ruined by messed up brightness and colors. But for some specific applications, extending the detected spectrum is helpful.

Source.

The photosensor array is the detector of electromagnetic radiation in digital cameras. Each element of the array is a semiconductor device which transforms the energy of radiation into an electrical signal. There are two main types of photosensor arrays: charge-coupled devices (CCD) and active-pixel sensors (usually labeled as CMOS sensors after the complementary metal-oxide-semiconductor manufacturing technology). A CCD sensor element is basically a capacitor made of semiconductor, and a CMOS sensor element is basically a photodiode. Both types of sensors work thanks to the photoelectric effect in semiconductors. For its energy to be converted in a semiconductor, a particle of light (a photon) must carry more energy than some threshold, which is determined by the semiconductor band gap.

For silicon, the main semiconductor of the modern semiconductor industry and electronics, the band gap is roughly 1.14 eV. The wavelength of a photon with that much energy is about 1090 nm. So detectors made from silicon respond to light with wavelengths up to roughly the middle of the near infrared band.
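The conversion from band gap to cutoff wavelength is just λ = hc / E. Here is a minimal sketch of that arithmetic, using the 1.14 eV figure quoted above; the function names are made up for this illustration.

```python
# Physical constants (exact SI values).
PLANCK_J_S = 6.62607015e-34         # Planck constant, J*s
LIGHT_SPEED_M_S = 299_792_458.0     # speed of light, m/s
ELECTRON_VOLT_J = 1.602176634e-19   # one electronvolt in joules


def cutoff_wavelength_nm(band_gap_ev: float) -> float:
    """Longest wavelength (nm) whose photons still carry band_gap_ev."""
    energy_j = band_gap_ev * ELECTRON_VOLT_J
    return PLANCK_J_S * LIGHT_SPEED_M_S / energy_j * 1e9


def photon_energy_ev(wavelength_nm: float) -> float:
    """Energy (eV) of a single photon with the given wavelength."""
    wavelength_m = wavelength_nm * 1e-9
    return PLANCK_J_S * LIGHT_SPEED_M_S / wavelength_m / ELECTRON_VOLT_J


# Band gap value taken from the text; other sources quote slightly different numbers.
silicon_cutoff_nm = cutoff_wavelength_nm(1.14)
print(f"silicon cutoff: {silicon_cutoff_nm:.0f} nm")
print(f"720 nm photon:  {photon_energy_ev(720):.2f} eV")
```

A 720 nm photon carries about 1.7 eV, comfortably above the silicon band gap, which is why an ordinary sensor still responds to it once the IR blocking filter is removed.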

In cheap devices manufacturers can sacrifice image quality and install a weaker IR filter. With a simple digital camera it’s possible to do an experiment at home and detect IR light. TV and A/C remote controls use IR light emitting diodes to transmit data and commands. The IR light from the LED is invisible to the human eye, but when it is filmed with a digital camera while a button is pressed, the LED lights up purple. This happens because the IR filter does not fully block the IR radiation, which is then detected by the blue and red elements of the photosensor array.

Source.

How does a human look in the IR?

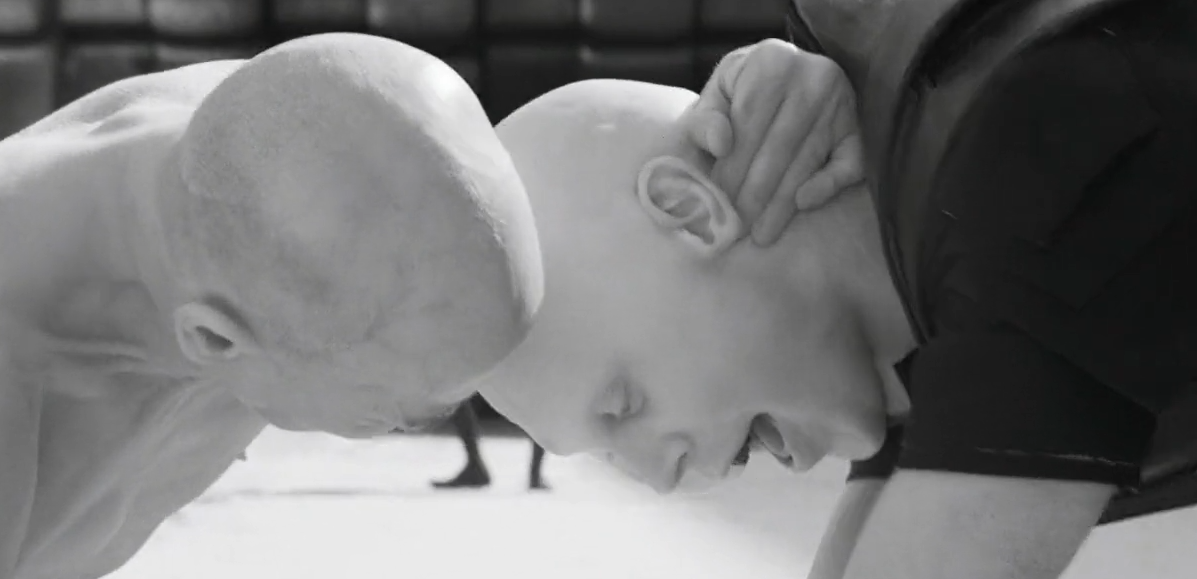

Let’s go back to the still shot from the movie with the heads of an Atreides soldier and Feyd-Rautha filmed in the IR spectrum.

The upper part of Feyd-Rautha’s head looks brighter and cleaner than both the lower part and the Atreides soldier’s head. The explanation is simple: the actor Austin Butler, who played Feyd-Rautha, didn’t shave his head for the role. He wore makeup and a special bald cap to hide his hair and eyebrows. In the still shot you can see the transition between the makeup and his own skin.

Another difference is the clearly visible vein on the Atreides soldier’s head, while no veins are visible on Feyd-Rautha’s head. Human tissues are obviously not transparent in the visible spectrum, but NIR light can penetrate them deeper due to lower absorption and scattering. Veins still show up well in IR, because blood contains hemoglobin, which absorbs NIR more strongly than the surrounding tissues.

Source.

This effect is used in medicine. For example, pulse oximeters measure the pulse and the oxygen content of the blood. The pulse is measured by monitoring the change in absorbed NIR light intensity as blood flows into the fingers after each heart contraction. The oxygen content can be estimated from measurements at several wavelengths, thanks to the difference between the absorption spectra of deoxygenated and oxygenated hemoglobin.
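To make the two-wavelength idea concrete, here is a rough sketch of the "ratio of ratios" approach commonly described for pulse oximetry, typically with one red and one near infrared channel. Everything here is an assumption for illustration: the sample values are synthetic, the function names are invented, and the linear formula 110 - 25 R is only a frequently quoted textbook approximation. Real devices rely on their own empirical calibration.

```python
def perfusion_ratio(samples: list[float]) -> float:
    """Pulsatile (AC) part of the signal divided by its steady (DC) part."""
    dc = sum(samples) / len(samples)
    ac = max(samples) - min(samples)
    return ac / dc


def ratio_of_ratios(red: list[float], infrared: list[float]) -> float:
    """Compare how strongly the pulse modulates the two wavelengths."""
    return perfusion_ratio(red) / perfusion_ratio(infrared)


def estimate_spo2_percent(red: list[float], infrared: list[float]) -> float:
    """Very rough oxygen saturation estimate.

    ASSUMPTION: linear textbook approximation, not a real device calibration.
    """
    return 110.0 - 25.0 * ratio_of_ratios(red, infrared)


# Synthetic detector readings over one heartbeat (arbitrary units).
red_samples = [1.00, 1.01, 1.02, 1.01]
infrared_samples = [2.00, 2.04, 2.08, 2.04]

print(f"R = {ratio_of_ratios(red_samples, infrared_samples):.2f}")
print(f"SpO2 estimate = {estimate_spo2_percent(red_samples, infrared_samples):.0f} %")
```

Dividing the AC part by the DC part removes the dependence on LED brightness and finger thickness, so only the relative pulsation at each wavelength matters.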

Other examples are devices which help nurses locate a patient’s veins when drawing blood or administering drugs intravenously. The IR illumination is invisible to human eyes and is not distracting, but in the IR images the veins are clearly visible and can be detected using image processing algorithms. The vein contours can then be projected onto the patient’s skin.

How realistic is the look of Giedi Prime?

Actually perceiving the world the way it is depicted in the movie is not very realistic. The human eye is not adapted to see in the infrared, only in the visible spectrum. That is the reason why the visible spectrum is treated separately from infrared and ultraviolet.

The light in the human eye is detected by the retina, and the sensitive elements in the retina are two types of photoreceptor cells: rods and cones. The rods have high sensitivity and they are responsible for the black and white night vision at low illumination. The cones work at high illumination and are responsible for color vision. There are 3 types of cones most sensitive respectively to red, green and blue light.

In good illumination the eye is best at detecting radiation with a wavelength of 555 nm, which corresponds to green. In low illumination the rods take over, and the peak of night vision sensitivity is at 507 nm, which is still green light.

As human eyes are most sensitive to green light, green objects are perceived as brighter than objects of other colors, even when they give off the same amount of light energy. Digital cameras have to account for that and collect more green light, otherwise green objects in pictures will look unnaturally dim. So photosensor arrays are designed to have more green sensitive elements than red and blue ones. Usually one pixel of the image is created by 4 elements of the array: one red, one blue and 2 green, which together make up a Bayer filter. This filter is visible on the photo of the array above. Each small square block of the array has 2 green elements on one diagonal and the red and blue elements on the other diagonal.
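The layout is easy to reproduce in a few lines. This sketch prints a small Bayer mosaic and counts the elements of each color. It assumes the RGGB ordering (red in the top-left corner of each 2×2 block), which is only one of several orderings used in practice, and the function names are hypothetical.

```python
from collections import Counter


def bayer_color(row: int, col: int) -> str:
    """Filter color over the sensor element at (row, col), RGGB ordering."""
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"


def bayer_mosaic(rows: int, cols: int) -> list[str]:
    """Rows of the mosaic as strings, one letter per sensor element."""
    return ["".join(bayer_color(r, c) for c in range(cols)) for r in range(rows)]


mosaic = bayer_mosaic(4, 4)
for line in mosaic:
    print(line)

# Half of all elements are green, a quarter red and a quarter blue.
counts = Counter("".join(mosaic))
print(dict(counts))
```

In every 2×2 block the two green elements sit on one diagonal, and red and blue share the other one.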

There is no hard cutoff in photoreceptor cell sensitivity. The human eye is in principle able to detect near infrared radiation, so to some extent a human can see in the near infrared. Some studies even show an increase in sensitivity to NIR at a wavelength of 1000 nm due to two-photon absorption.

Let’s speculate about possible scenarios that would produce the Giedi Prime visuals. If the Black Sun is a monochromatic light source with a wavelength of 720 nm, then at high enough intensity a human may see a dim gray image with a red tint. If the Black Sun radiates with a black-body spectrum like a normal star and the peak of emission is at 720 nm, then part of the intensity will fall in the red-orange part of the visible spectrum. A human will see everything in red-orange colors.
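The black-body scenario can be checked numerically. The sketch below integrates Planck's law over the 400 nm to 700 nm band and divides by the total emitted power from the Stefan-Boltzmann law. It assumes an ideal black body, picks the temperature from Wien's displacement law so that the peak sits at 720 nm, and uses function names invented for this example.

```python
import math

PLANCK_J_S = 6.62607015e-34          # Planck constant, J*s
LIGHT_SPEED_M_S = 299_792_458.0      # speed of light, m/s
BOLTZMANN_J_K = 1.380649e-23         # Boltzmann constant, J/K
STEFAN_BOLTZMANN = 5.670374419e-8    # W / (m^2 K^4)
WIEN_B_M_K = 2.897771955e-3          # Wien displacement constant, m*K


def planck_radiance(wavelength_m: float, temperature_k: float) -> float:
    """Black-body spectral radiance per unit wavelength (Planck's law)."""
    x = PLANCK_J_S * LIGHT_SPEED_M_S / (wavelength_m * BOLTZMANN_J_K * temperature_k)
    return 2.0 * PLANCK_J_S * LIGHT_SPEED_M_S**2 / wavelength_m**5 / math.expm1(x)


def band_fraction(lo_nm: float, hi_nm: float, temperature_k: float,
                  steps: int = 2000) -> float:
    """Share of the total black-body power emitted between lo_nm and hi_nm."""
    lo_m, hi_m = lo_nm * 1e-9, hi_nm * 1e-9
    width = (hi_m - lo_m) / steps
    # Midpoint rule over the band.
    band = sum(planck_radiance(lo_m + (i + 0.5) * width, temperature_k)
               for i in range(steps)) * width
    total = STEFAN_BOLTZMANN * temperature_k**4 / math.pi  # radiance over all wavelengths
    return band / total


black_sun_k = WIEN_B_M_K / 720e-9   # temperature whose emission peaks at 720 nm
print(f"Black Sun temperature: {black_sun_k:.0f} K")
print(f"visible share, Black Sun: {band_fraction(400, 700, black_sun_k):.2f}")
print(f"visible share, 5800 K:    {band_fraction(400, 700, 5800):.2f}")
```

Under these assumptions about a fifth of the output still lands between 400 nm and 700 nm, most of it near the red end, so such a star would look red-orange rather than black.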

All in all, it is not realistic for an unmodified human eye to perceive the same image as the one shown in the movie.

Other species on Earth have more advanced eyes. For example, birds have a fourth type of cone cell which allows them to see in the ultraviolet. Mantis shrimps have 16 types of photoreceptor cells in their eyes and can see in both UV and IR. I think it is impossible for a human to imagine how they perceive the world.

Another type of vision in the IR is thermal vision. Thermal vision works in the far infrared at wavelengths around 10 µm, which corresponds to the radiation from bodies at roughly room temperature, around 30 ℃. Some snake species can “see” in the far infrared, and humans can use thermographic cameras to see thermal radiation.

The high sensitivity of human eyes to green light is probably connected to our Sun. The surface temperature of the Sun is 5800 K, which means the Sun emits black-body radiation with a peak at a wavelength of 500 nm, hitting the green part of the visible spectrum and the peak of the rods’ sensitivity.

In the Dune universe Giedi Prime is assigned to a real life star, 36 Ophiuchi B, an orange dwarf of spectral class K2. 36 Ophiuchi B is slightly colder than the Sun: its surface temperature is around 5000 K, and the peak of its emission spectrum is around 580 nm, in the orange part of the visible spectrum. For life to evolve eyes with peak sensitivity in the near infrared at 720 nm, as in the movie, it would have to begin on a planet orbiting a much colder star, one with a surface temperature of about 4000 K.
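The temperatures and peak wavelengths quoted in this section all follow from Wien's displacement law, λ_peak = b / T. Here is a short sketch of that arithmetic, assuming ideal black-body emitters; the function names are chosen only for this example.

```python
WIEN_B_NM_K = 2.897771955e6   # Wien displacement constant in nm*K


def peak_wavelength_nm(temperature_k: float) -> float:
    """Wavelength (nm) at which a black body of this temperature emits most."""
    return WIEN_B_NM_K / temperature_k


def temperature_for_peak_k(wavelength_nm: float) -> float:
    """Black-body temperature (K) whose emission peaks at this wavelength."""
    return WIEN_B_NM_K / wavelength_nm


print(f"Sun, 5800 K:            {peak_wavelength_nm(5800):.0f} nm")
print(f"36 Ophiuchi B, 5000 K:  {peak_wavelength_nm(5000):.0f} nm")
print(f"body at 30 C (303 K):   {peak_wavelength_nm(303) / 1000:.1f} um")
print(f"peak at 720 nm needs:   {temperature_for_peak_k(720):.0f} K")
```

With these inputs the Sun peaks near 500 nm, a 5000 K star near 580 nm, a body at 30 ℃ near 10 µm, and a 720 nm peak corresponds to a surface temperature of roughly 4000 K.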