It is easy to try a static code analyzer. Introducing it into the development of a large, old project, however, takes skill. If the approach is wrong, the analyzer can add work, slow down development, and demotivate the team. Let's briefly discuss how to integrate static analysis into the development process properly and start using it as part of CI/CD.

Introduction

Recently the post "Getting Started With Static Analysis Without Overwhelming the Team" caught my attention. On the one hand, it is a decent article that is worth reading. On the other hand, it seems to me that it doesn't give a complete answer on how to safely adopt static analysis in a project with a large amount of legacy code. The article says that you can put up with technical debt and work only with new code, but it doesn't cover the question of what to do with this technical debt later.

Our PVS-Studio team offers its own view on this topic. Let's look at how the problem of introducing a static code analyzer arises in the first place, how to overcome it, and how to eliminate technical debt gradually and painlessly.

The problem

It is usually easy to start the analyzer and see how it works [1]. You can see some intriguing bugs, or even scary potential vulnerabilities in the code. You can even fix something, but after this many programmers give up.

All static analyzers issue false positives. This is inherent in the static code analysis methodology, and nothing can be done about it. In the general case the problem is unsolvable, as Rice's theorem confirms [2]. Machine learning algorithms will not help either [3]. If even a person can't always tell whether a particular piece of code is wrong, you shouldn't expect this from a program :).

False positives aren't a problem if the static analyzer is already configured:

- Irrelevant rule sets are disabled;

- Individual irrelevant diagnostics are disabled;

- If we are talking about C or C++, macros containing specific constructs (that cause useless warnings to appear in every place where such macros are used) are marked up;

- Custom functions that perform actions similar to system functions are marked up (custom analog of memcpy or printf) [4];

- False positives are selectively suppressed using special comments;

- And so on.

In this case, we can expect a low rate of false positives, on the order of 10-15% [5]. In other words, roughly 9 out of 10 analyzer warnings will point to a real problem in the code, or at least to «code with a strong smell». You must agree that this scenario is extremely pleasant, and the analyzer becomes a real friend of the programmer.
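To illustrate the kind of function markup mentioned in the list above, here is a comparable mechanism that compilers offer. This is only a sketch: it uses the GCC/Clang `format` attribute, which is a compiler extension and not PVS-Studio's own annotation syntax (for that, see [4]). The names `FormatLog` and `PRINTF_LIKE` are hypothetical and invented for this example.

```cpp
#include <cassert>
#include <cstdarg>
#include <cstdio>
#include <string>

// Assumption: GCC or Clang. On other compilers the macro expands to nothing,
// so the code still builds, just without format checking.
#if defined(__GNUC__) || defined(__clang__)
#define PRINTF_LIKE(fmt_index, first_arg) \
    __attribute__((format(printf, fmt_index, first_arg)))
#else
#define PRINTF_LIKE(fmt_index, first_arg)
#endif

// Hypothetical project-specific analog of printf. The markup says:
// argument 1 is a printf-style format string, variadic arguments start at 2.
std::string FormatLog(const char* format, ...) PRINTF_LIKE(1, 2);

std::string FormatLog(const char* format, ...) {
    assert(format != nullptr);
    char buffer[256];
    va_list args;
    va_start(args, format);
    // vsnprintf truncates instead of overflowing the buffer.
    std::vsnprintf(buffer, sizeof(buffer), format, args);
    va_end(args);
    return std::string(buffer);
}
```

Once a custom function is marked up this way, a tool can check calls to it as strictly as calls to printf itself. Without the markup, a mismatch such as passing an integer for `%s` goes unnoticed.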

In reality, in a large project, the initial picture will be quite different. The analyzer issues hundreds or thousands of warnings for legacy code. It is impossible to quickly figure out which of these warnings are relevant and which are not. It isn't rational to sit down and deal with all of them, since the main work would stop for days or weeks. As a rule, a team can't afford that. There would also be a huge number of diffs cluttering the change history. And a hasty mass edit of so many code fragments would inevitably introduce new typos and errors.

Most importantly, such a feat in the fight against warnings makes little sense. You have to admit that since the project has been running successfully for many years, most of the critical errors in it have already been fixed. Yes, these fixes were very expensive: the bugs had to be debugged, they earned negative user feedback, and so on. A static analyzer would have helped fix many of these errors at the code writing stage, quickly and cheaply. But at this point, these errors are fixed one way or another, and the analyzer mainly detects non-critical errors in the old code. This code may not be used at all, or may be used very rarely, and an error in it may not lead to noticeable consequences. Perhaps the shadow of a button is the wrong color somewhere, but this doesn't prevent anyone from using the product.

Of course, even minor mistakes are still mistakes. And sometimes an error can hide a real vulnerability. However, it seems a dubious idea to drop everything and spend days or weeks dealing with defects that don't obviously manifest themselves.

Programmers keep looking and looking at all these warnings on the old working code… And they think: let's do without static analysis. Let's go write a new useful feature.

In their own way, they are right. They think they have to get rid of all these warnings first, and only then will they be able to benefit from regular use of the code analyzer. Otherwise, the new warnings will simply drown among the old ones, and no one will pay attention to them.

The same is true of compiler warnings. It's no accident that it's recommended to keep the number of compiler warnings at 0. If there are 1000 warnings and they become 1001, no one will notice, and it is not clear where to look for this newest warning.

The worst part of this story is when someone in management forces the team to use static code analysis at this point. This only demotivates the team, since from their point of view it is an additional bureaucratic burden that only gets in the way. Nobody will look at the analyzer reports, and the tool will be used only «on paper». That is, formally the analysis is built into the DevOps process, but in practice it benefits no one. We have heard detailed stories like this from conference visitors chatting at our booths. Such an experience can discourage programmers from using static analysis tools for a long time, if not forever.

Analyzer introduction and elimination of technical debt

In fact, there is nothing difficult or scary about integrating static analysis even in a large old project.

CI/CD

Moreover, the analyzer can immediately be made part of the continuous development process. For example, the PVS-Studio distribution includes utilities for conveniently viewing the report in the format you need and for notifying the developers who wrote the problematic sections of code. For those who are more interested in running PVS-Studio from CI/CD systems, I recommend reading the corresponding section of the documentation and a series of articles:

- PVS-Studio in the Clouds: Azure DevOps

- PVS-Studio in the Clouds: Travis CI

- PVS-Studio in the Clouds: GitLab CI/CD

- PVS-Studio in the Clouds: CircleCI

But let's return to the issue of a large number of false positives in the first stages of implementing code analysis tools.

Freezing existing technical debt and working with new warnings

Modern commercial static analyzers allow you to review only new warnings that appear in new or modified code. The implementation of this mechanism differs, but the essence is the same. In the PVS-Studio static analyzer, this functionality is implemented as follows.

To quickly start using static analysis, we suggest that PVS-Studio users apply the mass warning suppression mechanism [6]. The general idea is the following. Imagine that the user has run the analyzer and received many warnings. Since the project has been developed for many years, is alive, still evolving, and making money, the report most likely won't contain many warnings that point to critical defects. In other words, critical bugs have already been fixed in more expensive ways or thanks to feedback from customers. Thus, everything the analyzer finds now can be considered technical debt, which is impractical to try to eliminate immediately.

You can tell PVS-Studio to consider all these warnings irrelevant for now (postponing the technical debt for later) and not to show them any more. The analyzer creates a special file where it stores information about the errors that are not yet of interest. From now on, PVS-Studio will issue warnings only for new or modified code. By the way, it's all implemented in a rather smart way. If an empty line is added at the beginning of a file, the analyzer will conclude that nothing has really changed and will remain quiet. You can put the markup file in the version control system. Even though the file is large, it's not a problem, since it doesn't need to be committed very often.
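The idea of a suppression baseline that survives an added empty line can be sketched as follows. This is a simplified illustration under stated assumptions, not PVS-Studio's actual file format or matching logic (those are described in [6]). The `Warning` struct, `Fingerprint`, `MakeBaseline`, and `FilterNew` are hypothetical names, and the diagnostic IDs in the usage note are just sample values. The key design choice is that the fingerprint uses the text of the source line rather than its number.

```cpp
#include <cassert>
#include <cstddef>
#include <set>
#include <string>
#include <vector>

// One analyzer warning (hypothetical structure for this sketch).
struct Warning {
    std::string file;
    int line;              // kept for display only, NOT used for matching
    std::string code;      // diagnostic ID
    std::string lineText;  // text of the source line the warning points at
};

// Strips leading and trailing whitespace so re-indentation is ignored.
std::string Trim(const std::string& s) {
    const char* ws = " \t\r\n";
    const std::size_t first = s.find_first_not_of(ws);
    if (first == std::string::npos) return "";
    const std::size_t last = s.find_last_not_of(ws);
    return s.substr(first, last - first + 1);
}

// The fingerprint ignores the line number, so shifting code up or down
// does not turn an old warning into a "new" one.
std::string Fingerprint(const Warning& w) {
    assert(!w.code.empty());
    return w.file + '|' + w.code + '|' + Trim(w.lineText);
}

using Baseline = std::multiset<std::string>;

// Freezes the current report as technical debt.
Baseline MakeBaseline(const std::vector<Warning>& warnings) {
    Baseline baseline;
    for (const Warning& w : warnings) baseline.insert(Fingerprint(w));
    return baseline;
}

// Returns only the warnings that are not covered by the baseline.
// The baseline is taken by value: each entry suppresses one warning.
std::vector<Warning> FilterNew(const std::vector<Warning>& current,
                               Baseline baseline) {
    std::vector<Warning> fresh;
    for (const Warning& w : current) {
        const auto it = baseline.find(Fingerprint(w));
        if (it != baseline.end()) {
            baseline.erase(it);  // consume exactly one matching entry
        } else {
            fresh.push_back(w);
        }
    }
    return fresh;
}
```

For example, if a warning on `if (x == x)` was frozen at line 10 and later shows up at line 11 because an empty line was added above it, `FilterNew` treats it as already known. A warning on a different line of code in the same file is reported as new.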

From this point, developers will see only warnings related to newly written or modified code. So you can start using the analyzer, as they say, from the next day. You can get back to technical debt later and gradually correct errors and tweak the analyzer.

So, the first problem with introducing the analyzer in a large old project is solved. Now let's figure out what to do with technical debt.

Error fixing and refactoring

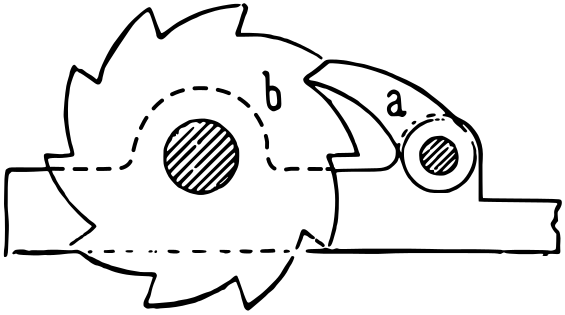

The simplest and most natural thing is to set aside some time to go through the mass-suppressed analyzer warnings and gradually deal with them. In some cases you should fix bugs in the code; in others, refactor to tell the analyzer that the code is not problematic. A simple example:

    if (a = b)

Most C++ compilers and analyzers complain about such code, since it is highly probable that the programmer actually meant to write (a == b). But there is an unspoken agreement, usually noted in the documentation, that an extra pair of parentheses means the programmer wrote the assignment deliberately, so there is no need to issue a warning. For example, the PVS-Studio documentation for the V559 (CWE-481) diagnostic clearly states that the following line is considered correct and safe:

    if ((a = b))

Another example: is break forgotten in this C++ code or not?

    case A:
      foo();
    case B:
      bar();
      break;

The PVS-Studio analyzer will issue the V796 (CWE-484) warning here. This may not be an error, in which case you should give the analyzer a hint by adding the [[fallthrough]] attribute or, for example, __attribute__((fallthrough)):

    case A:
      foo();
      [[fallthrough]];
    case B:
      bar();
      break;

You could say that code changes of this kind don't really fix errors. That is true, but there are still two useful consequences. First, the analyzer report gets rid of false positives. Second, the code becomes easier to understand for the people who maintain it, which is extremely important. For this alone, a small refactoring is already worth doing, so that the code becomes clearer and easier to maintain. If the analyzer can't tell whether «break» is needed, fellow programmers won't be able to tell either.
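Both hints discussed above can be put into a small compilable form. This is a minimal sketch assuming a C++17 compiler (for `[[fallthrough]]`). The `Command` enum and the functions `Run`, `AssignAndTest`, and `Example` are hypothetical names made up for the illustration.

```cpp
#include <cassert>
#include <string>

enum class Command { A, B };

// Command A intentionally continues into the handling of command B.
// The attribute documents that intent for both tools and people.
std::string Run(Command cmd) {
    std::string log;
    switch (cmd) {
    case Command::A:
        log += "foo;";
        [[fallthrough]];  // deliberate: A also does everything B does
    case Command::B:
        log += "bar;";
        break;
    }
    return log;
}

// Deliberate assignment inside a condition: the extra parentheses
// signal that '=' is not a typo for '=='.
bool AssignAndTest(int& a, int b) {
    if ((a = b)) {
        return true;  // the assigned value is non-zero
    }
    return false;
}

// Usage, written as assertions so the expected behavior is explicit.
void Example() {
    assert(Run(Command::A) == "foo;bar;");
    assert(Run(Command::B) == "bar;");
    int a = 0;
    assert(AssignAndTest(a, 5) && a == 5);
    assert(!AssignAndTest(a, 0) && a == 0);
}
```

Neither change alters what the program does. They only record the programmer's intent in the code itself.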

In addition to bug fixes and refactoring, you can selectively suppress false warnings of the analyzer. You can disable some irrelevant diagnostics. For example, some consider V550 warnings about comparing float/double values meaningless. And some consider them significant and worthy of study [7]. It is up to the development team to decide which warnings are relevant and which are not.
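To show why some teams do take warnings about exact floating-point comparison seriously, here is a minimal sketch of a tolerance-based comparison. The helper `NearlyEqual` and its default tolerance are assumptions chosen for illustration. The right tolerance depends on the calculations in a particular project, and in some code an exact comparison is exactly what is needed.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Compares two doubles using a tolerance scaled by their magnitude
// instead of operator==, which requires bit-exact equality.
bool NearlyEqual(double a, double b, double relTol = 1e-9) {
    assert(relTol >= 0.0);
    // The scale never drops below 1.0, so values near zero are compared
    // with an absolute tolerance of relTol.
    const double scale = std::max({1.0, std::fabs(a), std::fabs(b)});
    return std::fabs(a - b) <= relTol * scale;
}
```

On typical IEEE 754 platforms the sum `0.1 + 0.2` is not bit-for-bit equal to `0.3`, so a direct `==` check fails, while `NearlyEqual(0.1 + 0.2, 0.3)` returns true.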

There are other ways to suppress false warnings. For example, the macro markup which was mentioned earlier. All this is described in more detail in the documentation. The most vital thing is to understand that if you gradually and consistently work with false positives, there is nothing scary about them. The vast majority of uninteresting warnings disappear after configuration, leaving only places that really require careful study and some changes in the code.

Also, we always help our clients set up PVS-Studio if there are any difficulties. Moreover, there were cases when we ourselves eliminated false warnings and corrected errors [8]. Just in case, I decided to mention that this option of extended cooperation is also possible :).

Ratchet method

There is another interesting approach to gradually improving code quality by eliminating static analyzer warnings. The idea is that the number of warnings can only decrease.

The number of warnings issued by the static analyzer is counted. The quality gate is then configured so that you can only commit code that doesn't increase this number. As a result, the number of warnings steadily goes down as the analyzer is configured and errors are fixed.

Even if a person wants to cheat a little and decides to pass the quality gate not by eliminating warnings in their own new code, but by improving someone else's old code, it's not a big deal. All the same, the ratchet turns in one direction, and the number of defects will gradually decrease. Even if a person doesn't want to fix their own new defects, they will still have to improve something in the neighboring code. At some point, the easy ways to reduce the number of warnings run out, and the moment comes when real errors get fixed.

This methodology is described in more detail in a very interesting article by Ivan Ponomarev, "Introduce Static Analysis in the Process, Don't Just Search for Bugs with It", which I recommend to anyone interested in improving code quality.
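The ratchet rule itself fits in a few lines. The sketch below assumes that some earlier step has already counted the warnings. `GateResult` and `CheckRatchet` are hypothetical names, and how the stored count is persisted between runs is left out.

```cpp
#include <cassert>

struct GateResult {
    bool passed;      // may the commit go through?
    int newBaseline;  // warning count to store for the next run
};

// Ratchet rule: the warning count may stay the same or go down, never up.
// When it goes down, the lower value becomes the new limit.
GateResult CheckRatchet(int baselineCount, int currentCount) {
    assert(baselineCount >= 0 && currentCount >= 0);
    if (currentCount > baselineCount) {
        return {false, baselineCount};  // rejected, the limit is unchanged
    }
    return {true, currentCount};  // accepted, the ratchet clicks down
}
```

With a stored limit of 100, a commit that produces 101 warnings is rejected, while a commit that produces 97 passes and lowers the limit to 97 for everyone after it.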

Conclusion

I hope that after checking out this article, readers will be more friendly to static analysis tools and will want to introduce them in the development process. If you still have questions, we are always ready to consult users of our PVS-Studio static analyzer and help with its implementation.

There are other typical doubts about whether a static analyzer can really be convenient and useful. I tried to dispel most of these doubts in the publication «Why You Should Choose the PVS-Studio Static Analyzer to Integrate into Your Development Process» [9].

Thank you for your attention! Come download and try the PVS-Studio analyzer.

Additional links

1. Andrey Karpov. How to quickly check out interesting warnings given by the PVS-Studio analyzer for C and C++ code?

2. Wikipedia. Rice's theorem.

3. Andrey Karpov, Victoria Khanieva. Machine Learning in Static Analysis of Program Source Code.

4. PVS-Studio. Documentation. Additional diagnostics configuration.

5. Andrey Karpov. Characteristics of PVS-Studio Analyzer by the Example of EFL Core Libraries, 10-15% of False Positives.

6. PVS-Studio. Documentation. Mass suppression of analyzer warnings.

7. Ivan Andryashin. How We Tried Static Analysis on Our X-Ray Endovascular Surgery Training Simulator Project.

8. Paul Eremeev, Svyatoslav Razmyslov. How the PVS-Studio Team Improved Unreal Engine's Code.

9. Andrey Karpov. Why You Should Choose the PVS-Studio Static Analyzer to Integrate into Your Development Process.