The topic is as old as it is relevant. I want to share my concept for an architecture for provisioning test databases and get substantive comments on it: perhaps someone has already walked this road and knows a shorter or more convenient path.

A disclaimer up front: everything below is only an idea, and I am interested in constructive criticism for or against it. I am not urging anyone to do exactly this; my interest is purely academic. se-standby is mentioned below: it is not a vendor-supported solution, and any way of obtaining and using a DR environment that involves copying, synchronizing or mirroring the data of production databases running commercial Oracle editions must be licensed.

The use case is as follows. There is a company with a set of SE/SE2 databases. There are too many of them, and they are too large (several terabytes each), to maintain test databases from them by hand, for example from rman backups. Another important point: all production databases have the same name (the same SID). The information systems are built on stored procedures in the production databases.

Accordingly, there is a large body of PL/SQL developers split into groups. On the one hand, there are many groups, working on different projects at different stages. On the other hand, everything is crammed into the same databases: the groups work with practically the same database objects, for different purposes, including destructive tests.

This state of affairs means each group needs a test database dedicated to it, or even one per developer.

In other words, the business requirements for provisioning test databases are:

- Many test databases are needed, because there are many development groups (dozens) and even more projects.
- Test databases must be provisioned quickly: development has its own deadlines.
- Test databases must be provisioned cheaply, in both hardware resources and person-hours.
- It is not known in advance which production database a test database will have to be built from.
- Synthetic data will not do. The projects either build new features on top of existing functionality or rework it, so tables, data and stored procedures from the production database are needed. And since all the application logic lives in the database, where the object interdependencies are a maze, the request becomes: "we don't know what we need, so give us everything. And make it current."
- These are test databases for functional testing.

Hence the engineering question: how to do this. Building test databases from rman backups of the production databases, given the number and size of those databases and the business requirements above, would demand astronomical amounts of disk capacity and person-hours.

The container database option does not fit because of the SE edition and the version. With EE and >=12.2, up to 4k pluggable databases are possible, and starting with 18c the pluggable databases themselves can be built with snapshot clones.

The oracle-clonedb option does not fit either, again because of SE;

Upgrading everything to 12.2 and EE is an option, of course, in theory. In practice that is a question for other people, and not at all for the DBA.

For a simpler starting situation, there is a known approach to rapid, low-cost provisioning of test databases on the SE edition:

1. From the production SE database, a physical standby database is built by the well-known folk technique on a separate VM (see the se-standby disclaimer at the start of the article).
2. On that separate VM:
   - zfs is used, and the physical files of the standby database live in a dataset inside a zfs pool. Say, for the sake of argument, this is the path `/standbydb`.
   - Compression is enabled on the dataset, which greatly reduces the disk capacity needed for the physical files.
3. At moment T, the refresh (recovery) process of the standby database is stopped.
4. A COW snapshot (snapshot + clone in zfs terms) is made of the file system holding the standby's physical files. Say the COW snapshot is the path `/cowsnapshot`.
5. A database instance is started with a separate parameter file that names, as its control file, the control file visible in `/cowsnapshot`.
6. The started instance is mounted from that control file. The datafiles and logfiles are renamed from paths in `/standbydb` to paths in `/cowsnapshot`.
7. The `dbid` is changed to some new value, and the `dbname` to, say, `testdb1`.
8. The physical standby is started again and keeps refreshing, now effectively as a separate instance.
9. The new database `testdb1` is brought by scripts from a bit-for-bit copy of production to the status of a test database.
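The snapshot, clone and rename steps above can be sketched as a small command planner. This is only an illustration under assumptions: the pool and dataset names (`tank/standbydb`, `tank/cowsnapshot`), the snapshot name `t0` and the file names are hypothetical, and the functions only build the `zfs` command lines and the `ALTER DATABASE RENAME FILE` statements without running anything.

```python
import shlex
from pathlib import PurePosixPath


def zfs_clone_commands(dataset, snap_name, clone_dataset, clone_mountpoint):
    """Return the zfs commands that take a snapshot and create a writable clone of it."""
    snapshot = f"{dataset}@{snap_name}"
    return [
        ["zfs", "snapshot", snapshot],
        # The clone shares unchanged blocks with the snapshot (copy-on-write).
        ["zfs", "clone", "-o", f"mountpoint={clone_mountpoint}", snapshot, clone_dataset],
    ]


def rename_file_statements(file_paths, old_root, new_root):
    """Build the SQL that repoints datafiles/logfiles from old_root to new_root."""
    statements = []
    for path in file_paths:
        relative = PurePosixPath(path).relative_to(old_root)
        new_path = PurePosixPath(new_root) / relative
        statements.append(f"ALTER DATABASE RENAME FILE '{path}' TO '{new_path}';")
    return statements


if __name__ == "__main__":
    # Hypothetical names, for illustration only.
    for cmd in zfs_clone_commands("tank/standbydb", "t0", "tank/cowsnapshot", "/cowsnapshot"):
        print(shlex.join(cmd))
    files = ["/standbydb/system01.dbf", "/standbydb/redo01.log"]
    for stmt in rename_file_statements(files, "/standbydb", "/cowsnapshot"):
        print(stmt)
```

The generated statements would be run in the mounted clone instance. The `dbid`/`dbname` change itself is not shown here; Oracle's DBNEWID utility (`nid`) is the usual tool for it.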

Here everything is great: fast, economical in disk capacity, simple. That holds as long as this VM has enough hardware resources (above all memory and CPU) to build and run additional test databases by the same scenario, i.e. testdb2, testdb3, ... testdbN;
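To make the disk-capacity argument concrete, here is a back-of-the-envelope sketch. All numbers in it are hypothetical, not measurements: it assumes every clone rewrites some fixed fraction of the base image and ignores compression and metadata overhead.

```python
def full_copy_storage_tb(db_size_tb, n_test_dbs):
    """Capacity if every test database is a full physical copy (e.g. restored from backup)."""
    return db_size_tb * n_test_dbs


def cow_clone_storage_tb(db_size_tb, n_test_dbs, changed_fraction):
    """Capacity for one shared base image plus only the blocks each clone has rewritten."""
    return db_size_tb + db_size_tb * changed_fraction * n_test_dbs


if __name__ == "__main__":
    # Hypothetical inputs: a 3 TB production database, 20 test databases,
    # each of which rewrites about 5% of its blocks during its lifetime.
    print("full copies, TB:", full_copy_storage_tb(3.0, 20))
    print("COW clones,  TB:", round(cow_clone_storage_tb(3.0, 20, 0.05), 2))
```

Under these assumed inputs the full-copy approach needs an order of magnitude more capacity than the clone approach; the real ratio depends entirely on how much each test database changes.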

On EE there would be a way out within this scheme even when hardware resources run short and N has to grow further: a cdb (there is a talk on this topic from an HL conference). That is, install a container database on this VM and, after building the next testdbN, do not leave it running as a standalone instance but plug it into the container database as a pdb. Then, by and large, only two databases run permanently on this VM: the physical standby, as a self-refreshing, consistent and current copy of the production database, and the cdb. And, briefly, testdbN, during the period when it has already been created but not yet plugged into the cdb as a pdb;

But even this stops being enough from the moment the dev groups start demanding "we needed a database from this prod yesterday, from another one today, from a third one tomorrow". Everything here rests on having and using a physical standby, and that sets up a one-to-one link between a given production database and a given server site for building test databases from it. So if you want test databases from another production database, build another, separate site of the same kind, with a physical standby of that production database, a cdb, and so on. Building several standby databases on one site will not work: resources run out quickly, it is a single point of failure, and, per the premises above, all production databases in this company have the same name.

So this does not meet the business requirements of this use case.

And here is my idea.

Take some distributed file system, such as glusterfs or ceph. Let it be ceph: in this context I like it more because it can present its resources to client VMs (clients of the ceph cluster) as a block device.

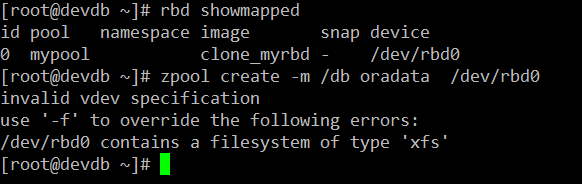

By whatever means, the images of the production databases are loaded into this ceph cluster. Off the top of my head: create a pool in ceph and, in that pool, an rbd image of the required size for each production database. Map the rbd image as a disk device onto some VM (or VMs) separate from the production environment. There, set up the same folk se-standby of the given production database, placing the standby's physical files on this ceph-backed block device. For definiteness, this block device is called `/dev/rbd0` from here on. Of course, on this client VM we first create a file system on `/dev/rbd0` and mount it somewhere, and only then build the se-standby with its physical files in that file system. From here on, this VM is called `db`.

In this way ceph ends up holding current, consistent and, importantly and pleasantly, self-refreshing images of the set of production databases. It could be done some other way, for example with incrementally updated image-copy backups. How exactly is not essential here; assume such database images exist in ceph.
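The preparation of the `db` VM described above could look roughly like the following command plan. It is a sketch under assumptions: the pool is assumed to exist already, the pool/image names, size, file system type and mount point are hypothetical, and the device is assumed to come up as `/dev/rbd0` (in practice `rbd map` prints the device it actually created). The function only builds the command lines.

```python
import shlex


def rbd_provision_commands(pool, image, size_mb, device, mountpoint, fs_type="xfs"):
    """Return commands that create an rbd image, map it, and put a file system on it."""
    spec = f"{pool}/{image}"
    return [
        ["rbd", "create", "--size", str(size_mb), spec],  # size in megabytes
        ["rbd", "map", spec],                             # exposes the image as a block device
        [f"mkfs.{fs_type}", device],                      # one-time: create the file system
        ["mount", device, mountpoint],
    ]


if __name__ == "__main__":
    # Hypothetical names: pool "oradb", image "prod1", a 4 TB image mounted at /u02.
    for cmd in rbd_provision_commands("oradb", "prod1", 4 * 1024 * 1024, "/dev/rbd0", "/u02"):
        print(shlex.join(cmd))
```

After these steps the se-standby would be built with its datafiles under the mount point, so every change applied to the standby lands in the rbd image.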

Next, a separate VM (from here on: `devdb`) is prepared for a test database of the given production database. In ceph, a clone is made of the pool/rbd image that holds the physical files of that production database's standby. The clone is mapped onto `devdb` and appears there as a block device. That block device already carries a file system, as a result of the steps on the db VM, so on the devdb side we simply mount it, preferably at the same mount point as on the db VM that feeds the standby's physical files into this rbd image. Then the datafile paths of the test database on `devdb` are the same as the datafile paths on the db VM where the standby lives.

That is essentially all: activate the standby image on the devdb VM and use scripts to bring it from a bit-for-bit copy of production to the status of a test database: data scrambling, resetting passwords and database operating modes, handling db links, jobs and ACLs, and so on.
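The cloning step can be sketched the same way. In ceph an rbd clone is made from a snapshot, and the classic workflow protects the snapshot before cloning it. The names below (`oradb`, `prod1`, `t0`, `testdb1`, `/u02`) are hypothetical, the clone is assumed to come up as `/dev/rbd0` on `devdb`, and the sketch does not address how to make the snapshot consistent (for example, stopping standby recovery first). It only builds the command lines.

```python
import shlex


def rbd_clone_commands(pool, image, snap, clone, device, mountpoint):
    """Return commands that snapshot an rbd image, clone it, and mount the clone."""
    parent = f"{pool}/{image}@{snap}"
    child = f"{pool}/{clone}"
    return [
        ["rbd", "snap", "create", parent],
        ["rbd", "snap", "protect", parent],  # classic clones require a protected snapshot
        ["rbd", "clone", parent, child],     # copy-on-write child image
        ["rbd", "map", child],               # run on the devdb VM
        ["mount", device, mountpoint],       # the file system already exists in the image
    ]


if __name__ == "__main__":
    for cmd in rbd_clone_commands("oradb", "prod1", "t0", "testdb1", "/dev/rbd0", "/u02"):
        print(shlex.join(cmd))
```

Using the same mount point as on the `db` VM keeps the datafile paths in the control file valid, so no file renaming is needed on `devdb`.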

In fact, I have already done a proof of concept, on oracle-xe databases. I did not build a standby database: on the db VM I simply placed the database's physical files on a block device served from ceph and shut the database down. I created a clone of the rbd image in ceph and presented it as a block device to the devdb VM. There I mounted it at the same mount point and started an xe database working against the database image visible through this block device.

It started, and I ran tests with the SLOB utility. It works.

Yes, it is slow, because the work goes not against an honest local disk device but against what is de facto a network resource masquerading as a block device.

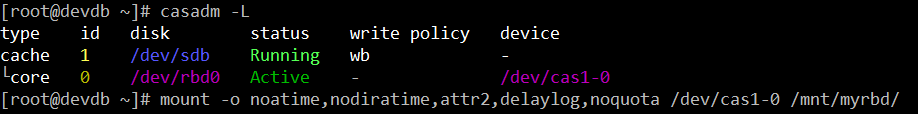

But here too an option turned up: Open CAS improves the situation somewhat. That is, one can do something like this:

Here /dev/sdb is an honest local disk device of the devdb VM acting as the cache device for I/O to and from the backend device (the core device, in Open CAS terms) /dev/rbd0; wb is writeback mode. I tested it, all else being equal, against a run of the xe database with the database image directly on /dev/rbd0;
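For reference, a cache layout like the one described could be set up with Open CAS's `casadm` roughly as follows. This is a sketch, not the exact commands used in the test: cache id 1 is an assumption, and some Open CAS versions expect `/dev/disk/by-id/...` paths instead of short device names. The function only builds the command lines.

```python
import shlex


def opencas_commands(cache_dev, core_dev, cache_id=1, mode="wb"):
    """Return casadm commands that start a cache and attach a core (backend) device."""
    return [
        # Start a cache instance on the fast local device in the given mode (wb = write-back).
        ["casadm", "--start-cache", "--cache-device", cache_dev,
         "--cache-id", str(cache_id), "--cache-mode", mode],
        # Attach the slow backend device as a core of that cache.
        ["casadm", "--add-core", "--cache-id", str(cache_id), "--core-device", core_dev],
    ]


if __name__ == "__main__":
    for cmd in opencas_commands("/dev/sdb", "/dev/rbd0"):
        print(shlex.join(cmd))
```

After the core is added, Open CAS exposes a new cached block device, and it is that device, rather than /dev/rbd0 itself, that gets mounted for the database files.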

The Statspack report for this test.

STATSPACK report for

Database DB Id Instance Inst Num Startup Time Release RAC

~~~~~~~~ ----------- ------------ -------- --------------- ----------- ---

2945358617 XE 1 12-Feb-21 18:26 11.2.0.2.0 NO

Host Name Platform CPUs Cores Sockets Memory (G)

~~~~ ---------------- ---------------------- ----- ----- ------- ------------

devdb Linux x86 64-bit 4 2 1 7.6

Snapshot Snap Id Snap Time Sessions Curs/Sess Comment

~~~~~~~~ ---------- ------------------ -------- --------- ------------------

Begin Snap: 13 12-Feb-21 19:08:41 34 1.8

End Snap: 14 12-Feb-21 19:13:45 24 1.3

Elapsed: 5.07 (mins) Av Act Sess: 8.8

DB time: 44.80 (mins) DB CPU: 0.31 (mins)

Cache Sizes Begin End

~~~~~~~~~~~ ---------- ----------

Buffer Cache: 424M Std Block Size: 8K

Shared Pool: 168M Log Buffer: 5,148K

Load Profile Per Second Per Transaction Per Exec Per Call

~~~~~~~~~~~~ ------------------ ----------------- ----------- -----------

DB time(s): 8.8 17.5 0.66 53.76

DB CPU(s): 0.1 0.1 0.00 0.37

Redo size: 32,571.0 64,296.1

Logical reads: 13,168.2 25,994.4

Block changes: 74.0 146.1

Physical reads: 13,039.7 25,740.8

Physical writes: 28.7 56.7

User calls: 0.2 0.3

Parses: 0.3 0.6

Hard parses: 0.0 0.0

W/A MB processed: 0.1 0.1

Logons: 0.0 0.0

Executes: 13.5 26.6

Rollbacks: 0.0 0.0

Transactions: 0.5

Instance Efficiency Indicators

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

Buffer Nowait %: 100.00 Redo NoWait %: 100.00

Buffer Hit %: 26.55 Optimal W/A Exec %: 100.00

Library Hit %: 100.00 Soft Parse %: 100.00

Execute to Parse %: 97.60 Latch Hit %: 99.99

Parse CPU to Parse Elapsd %: 0.00 % Non-Parse CPU: 100.00

Shared Pool Statistics Begin End

------ ------

Memory Usage %: 86.27 85.77

% SQL with executions>1: 76.71 75.29

% Memory for SQL w/exec>1: 81.30 80.15

Top 5 Timed Events Avg %Total

~~~~~~~~~~~~~~~~~~ wait Call

Event Waits Time (s) (ms) Time

----------------------------------------- ------------ ----------- ------ ------

db file sequential read 91,396 1,605 18 59.4

direct path read 60,032 1,031 17 38.2

db file parallel read 650 19 29 .7

CPU time 18 .7

resmgr:cpu quantum 5,969 12 2 .4

-------------------------------------------------------------

Host CPU (CPUs: 4 Cores: 2 Sockets: 1)

~~~~~~~~ Load Average

Begin End User System Idle WIO WCPU

------- ------- ------- ------- ------- ------- --------

3.09 3.51 0.58 3.29 95.85 68.88

Instance CPU

~~~~~~~~~~~~ % Time (seconds)

-------- --------------

Host: Total time (s): 1,170.2

Host: Busy CPU time (s): 48.5

% of time Host is Busy: 4.1

Instance: Total CPU time (s): 18.7

% of Busy CPU used for Instance: 38.6

Instance: Total Database time (s): 2,704.2

%DB time waiting for CPU (Resource Mgr): 0.0

Virtual Memory Paging

~~~~~~~~~~~~~~~~~~~~~

KB paged out per sec: 264.0

KB paged in per sec: 103,847.6

Memory Statistics Begin End

~~~~~~~~~~~~~~~~~ ------------ ------------

Host Mem (MB): 7,812.1 7,812.1

SGA use (MB): 1,019.4 1,019.4

PGA use (MB): 109.2 60.7

% Host Mem used for SGA+PGA: 14.4 13.8

-------------------------------------------------------------

Time Model System Stats DB/Inst: XE/XE Snaps: 13-14

-> Ordered by % of DB time desc, Statistic name

Statistic Time (s) % DB time

----------------------------------- -------------------- ---------

sql execute elapsed time 2,687.8 100.0

DB CPU 18.3 .7

PL/SQL execution elapsed time 0.1 .0

connection management call elapsed 0.0 .0

parse time elapsed 0.0 .0

DB time 2,687.9

background elapsed time 16.4

background cpu time 0.4

-------------------------------------------------------------

Foreground Wait Events DB/Inst: XE/XE Snaps: 13-14

-> Only events with Total Wait Time (s) >= .001 are shown

-> ordered by Total Wait Time desc, Waits desc (idle events last)

Avg %Total

%Tim Total Wait wait Waits Call

Event Waits out Time (s) (ms) /txn Time

---------------------------- ------------ ---- ---------- ------ -------- ------

db file sequential read 91,396 0 1,605 18 593.5 59.4

direct path read 60,032 0 1,031 17 389.8 38.2

db file parallel read 650 0 19 29 4.2 .7

resmgr:cpu quantum 5,969 0 12 2 38.8 .4

control file sequential read 340 0 1 2 2.2 .0

log file sync 2 0 0 18 0.0 .0

asynch descriptor resize 2,339 100 0 0 15.2 .0

SQL*Net message from client 33 0 0 0 0.2

-------------------------------------------------------------

Background Wait Events DB/Inst: XE/XE Snaps: 13-14

-> Only events with Total Wait Time (s) >= .001 are shown

-> ordered by Total Wait Time desc, Waits desc (idle events last)

Avg %Total

%Tim Total Wait wait Waits Call

Event Waits out Time (s) (ms) /txn Time

---------------------------- ------------ ---- ---------- ------ -------- ------

db file parallel write 777 0 6 7 5.0 .2

log file parallel write 188 0 4 19 1.2 .1

control file sequential read 172 0 3 20 1.1 .1

control file parallel write 99 0 2 22 0.6 .1

rdbms ipc message 1,385 87 3,012 2174 9.0

shared server idle wait 40 100 1,200 30009 0.3

Space Manager: slave idle wa 122 100 610 5000 0.8

DIAG idle wait 606 100 606 1000 3.9

Streams AQ: qmn coordinator 22 50 308 14005 0.1

Streams AQ: qmn slave idle w 11 0 308 28009 0.1

pmon timer 99 101 300 3033 0.6

dispatcher timer 5 100 300 60011 0.0

smon timer 1 100 300 ###### 0.0

SQL*Net message from client 14 0 0 0 0.1

-------------------------------------------------------------

Wait Events (fg and bg) DB/Inst: XE/XE Snaps: 13-14

-> s - second, cs - centisecond, ms - millisecond, us - microsecond

-> %Timeouts: value of 0 indicates value was < .5%. Value of null is truly 0

-> Only events with Total Wait Time (s) >= .001 are shown

-> ordered by Total Wait Time desc, Waits desc (idle events last)

Avg %Total

%Tim Total Wait wait Waits Call

Event Waits out Time (s) (ms) /txn Time

---------------------------- ------------ ---- ---------- ------ -------- ------

db file sequential read 91,396 0 1,605 18 593.5 59.4

direct path read 60,032 0 1,031 17 389.8 38.2

db file parallel read 650 0 19 29 4.2 .7

resmgr:cpu quantum 5,969 0 12 2 38.8 .4

db file parallel write 777 0 6 7 5.0 .2

control file sequential read 512 0 4 8 3.3 .1

log file parallel write 188 0 4 19 1.2 .1

control file parallel write 99 0 2 22 0.6 .1

log file sync 2 0 0 18 0.0 .0

asynch descriptor resize 2,340 100 0 0 15.2 .0

rdbms ipc message 1,385 87 3,012 2174 9.0

shared server idle wait 40 100 1,200 30009 0.3

Space Manager: slave idle wa 122 100 610 5000 0.8

DIAG idle wait 606 100 606 1000 3.9

Streams AQ: qmn coordinator 22 50 308 14005 0.1

Streams AQ: qmn slave idle w 11 0 308 28009 0.1

pmon timer 99 101 300 3033 0.6

dispatcher timer 5 100 300 60011 0.0

smon timer 1 100 300 ###### 0.0

SQL*Net message from client 47 0 0 0 0.3

-------------------------------------------------------------

Wait Event Histogram DB/Inst: XE/XE Snaps: 13-14

-> Total Waits - units: K is 1000, M is 1000000, G is 1000000000

-> % of Waits - column heading: <=1s is truly <1024ms, >1s is truly >=1024ms

-> % of Waits - value: .0 indicates value was <.05%, null is truly 0

-> Ordered by Event (idle events last)

Total ----------------- % of Waits ------------------

Event Waits <1ms <2ms <4ms <8ms <16ms <32ms <=1s >1s

-------------------------- ----- ----- ----- ----- ----- ----- ----- ----- -----

Disk file operations I/O 4 100.0

LGWR wait for redo copy 1 100.0

asynch descriptor resize 2340 100.0

control file parallel writ 99 35.4 6.1 12.1 27.3 19.2

control file sequential re 512 74.6 2.3 .4 .2 3.7 8.2 10.5

db file parallel read 650 .5 1.1 1.4 3.1 13.5 42.2 38.3

db file parallel write 775 33.7 7.6 12.1 14.8 16.9 11.2 3.6

db file sequential read 91K 10.1 22.3 3.0 2.9 14.8 27.5 19.3

direct path read 60K 1.1 .2 .8 12.8 43.9 28.9 12.3

latch: In memory undo latc 3 100.0

log file parallel write 188 14.9 31.9 15.4 16.5 21.3

log file sync 2 50.0 50.0

resmgr:cpu quantum 5965 69.9 3.1 7.4 14.6 4.0 .5 .4

DIAG idle wait 606 100.0

SQL*Net message from clien 47 95.7 4.3

SQL*Net message to client 46 100.0

Space Manager: slave idle 122 100.0

Streams AQ: qmn coordinato 22 50.0 50.0

Streams AQ: qmn slave idle 11 100.0

dispatcher timer 5 100.0

pmon timer 100 100.0

rdbms ipc message 1385 .6 .1 .1 .1 .6 32.9 65.5

shared server idle wait 40 100.0

smon timer 1 100.0

-------------------------------------------------------------

SQL ordered by CPU DB/Inst: XE/XE Snaps: 13-14

-> Total DB CPU (s): 18

-> Captured SQL accounts for 196.0% of Total DB CPU

-> SQL reported below exceeded 1.0% of Total DB CPU

CPU CPU per Elapsd Old

Time (s) Executions Exec (s) %Total Time (s) Buffer Gets Hash Value

---------- ------------ ---------- ------ ---------- --------------- ----------

9.53 1,872 0.01 52.0 1047.76 3,847,673 2922983071

Module: SQL*Plus

SELECT COUNT(C2) FROM CF2

7.32 1,906 0.00 39.9 1511.20 124,514 1900182548

Module: SQL*Plus

SELECT COUNT(C2) FROM CF1 WHERE ( CUSTID > ( :B1 - :B2 ) ) AND (

CUSTID < :B1 )

2.81 1 2.81 15.3 268.97 873,550 3562924056

Module: SQL*Plus

DECLARE v_default_schema_number PLS_INTEGER := '1'; v_update_pct

PLS_INTEGER := '4'; v_max_loop_iterations PLS_INTEGER := '0'; v

_seconds_to_run PLS_INTEGER := '300'; v_scale PLS_INTEGER := '32

768'; v_work_unit PLS_INTEGER := '64' ; v_redo_stress VARCHAR2(

1.80 1 1.80 9.8 268.95 371,759 2294594620

Module: SQL*Plus

DECLARE v_default_schema_number PLS_INTEGER := '5'; v_update_pct

PLS_INTEGER := '4'; v_max_loop_iterations PLS_INTEGER := '0'; v

_seconds_to_run PLS_INTEGER := '300'; v_scale PLS_INTEGER := '32

768'; v_work_unit PLS_INTEGER := '64' ; v_redo_stress VARCHAR2(

1.76 1 1.76 9.6 268.86 371,614 1815383075

Module: SQL*Plus

DECLARE v_default_schema_number PLS_INTEGER := '9'; v_update_pct

PLS_INTEGER := '4'; v_max_loop_iterations PLS_INTEGER := '0'; v

_seconds_to_run PLS_INTEGER := '300'; v_scale PLS_INTEGER := '32

768'; v_work_unit PLS_INTEGER := '64' ; v_redo_stress VARCHAR2(

1.74 1 1.74 9.5 268.42 363,476 1410152490

Module: SQL*Plus

DECLARE v_default_schema_number PLS_INTEGER := '4'; v_update_pct

PLS_INTEGER := '4'; v_max_loop_iterations PLS_INTEGER := '0'; v

_seconds_to_run PLS_INTEGER := '300'; v_scale PLS_INTEGER := '32

768'; v_work_unit PLS_INTEGER := '64' ; v_redo_stress VARCHAR2(

1.70 1 1.70 9.2 269.01 357,436 3704050312

Module: SQL*Plus

DECLARE v_default_schema_number PLS_INTEGER := '8'; v_update_pct

PLS_INTEGER := '4'; v_max_loop_iterations PLS_INTEGER := '0'; v

_seconds_to_run PLS_INTEGER := '300'; v_scale PLS_INTEGER := '32

768'; v_work_unit PLS_INTEGER := '64' ; v_redo_stress VARCHAR2(

1.68 1 1.68 9.1 268.07 352,430 203624297

Module: SQL*Plus

DECLARE v_default_schema_number PLS_INTEGER := '7'; v_update_pct

PLS_INTEGER := '4'; v_max_loop_iterations PLS_INTEGER := '0'; v

_seconds_to_run PLS_INTEGER := '300'; v_scale PLS_INTEGER := '32

768'; v_work_unit PLS_INTEGER := '64' ; v_redo_stress VARCHAR2(

1.65 1 1.65 9.0 267.82 337,416 2698804698

Module: SQL*Plus

DECLARE v_default_schema_number PLS_INTEGER := '10'; v_update_pc

t PLS_INTEGER := '4'; v_max_loop_iterations PLS_INTEGER := '0';

v_seconds_to_run PLS_INTEGER := '300'; v_scale PLS_INTEGER := '3

2768'; v_work_unit PLS_INTEGER := '64' ; v_redo_stress VARCHAR2

1.63 1 1.63 8.9 268.16 331,738 3879266389

Module: SQL*Plus

DECLARE v_default_schema_number PLS_INTEGER := '6'; v_update_pct

PLS_INTEGER := '4'; v_max_loop_iterations PLS_INTEGER := '0'; v

_seconds_to_run PLS_INTEGER := '300'; v_scale PLS_INTEGER := '32

768'; v_work_unit PLS_INTEGER := '64' ; v_redo_stress VARCHAR2(

1.60 1 1.60 8.7 269.00 327,016 3125669980

Module: SQL*Plus

DECLARE v_default_schema_number PLS_INTEGER := '2'; v_update_pct

PLS_INTEGER := '4'; v_max_loop_iterations PLS_INTEGER := '0'; v

_seconds_to_run PLS_INTEGER := '300'; v_scale PLS_INTEGER := '32

768'; v_work_unit PLS_INTEGER := '64' ; v_redo_stress VARCHAR2(

1.57 1 1.57 8.6 268.11 317,989 72207027

Module: SQL*Plus

DECLARE v_default_schema_number PLS_INTEGER := '3'; v_update_pct

PLS_INTEGER := '4'; v_max_loop_iterations PLS_INTEGER := '0'; v

_seconds_to_run PLS_INTEGER := '300'; v_scale PLS_INTEGER := '32

768'; v_work_unit PLS_INTEGER := '64' ; v_redo_stress VARCHAR2(

0.78 152 0.01 4.2 118.58 11,041 789159957

Module: SQL*Plus

UPDATE CF1 SET C2 = :B3 , C20 = :B3 WHERE ( CUSTID > ( :B1 - :B2

)) AND (CUSTID < :B1 )

0.38 1 0.38 2.1 2.42 3,171 588252891

Module: SQL*Plus

BEGIN :SNAP := STATSPACK.SNAP; END;

-------------------------------------------------------------

SQL ordered by Elapsed time for DB: XE Instance: XE Snaps: 13 -14

-> Total DB Time (s): 2,688

-> Captured SQL accounts for 199.6% of Total DB Time

-> SQL reported below exceeded 1.0% of Total DB Time

Elapsed Elap per CPU Old

Time (s) Executions Exec (s) %Total Time (s) Physical Reads Hash Value

---------- ------------ ---------- ------ ---------- --------------- ----------

1511.20 1,906 0.79 56.2 7.32 98,887 1900182548

Module: SQL*Plus

SELECT COUNT(C2) FROM CF1 WHERE ( CUSTID > ( :B1 - :B2 ) ) AND (

CUSTID < :B1 )

1047.76 1,872 0.56 39.0 9.53 3,842,048 2922983071

Module: SQL*Plus

SELECT COUNT(C2) FROM CF2

269.01 1 269.01 10.0 1.70 354,796 3704050312

Module: SQL*Plus

DECLARE v_default_schema_number PLS_INTEGER := '8'; v_update_pct

PLS_INTEGER := '4'; v_max_loop_iterations PLS_INTEGER := '0'; v

_seconds_to_run PLS_INTEGER := '300'; v_scale PLS_INTEGER := '32

768'; v_work_unit PLS_INTEGER := '64' ; v_redo_stress VARCHAR2(

269.00 1 269.00 10.0 1.60 324,997 3125669980

Module: SQL*Plus

DECLARE v_default_schema_number PLS_INTEGER := '2'; v_update_pct

PLS_INTEGER := '4'; v_max_loop_iterations PLS_INTEGER := '0'; v

_seconds_to_run PLS_INTEGER := '300'; v_scale PLS_INTEGER := '32

768'; v_work_unit PLS_INTEGER := '64' ; v_redo_stress VARCHAR2(

268.97 1 268.97 10.0 2.81 859,964 3562924056

Module: SQL*Plus

DECLARE v_default_schema_number PLS_INTEGER := '1'; v_update_pct

PLS_INTEGER := '4'; v_max_loop_iterations PLS_INTEGER := '0'; v

_seconds_to_run PLS_INTEGER := '300'; v_scale PLS_INTEGER := '32

768'; v_work_unit PLS_INTEGER := '64' ; v_redo_stress VARCHAR2(

268.95 1 268.95 10.0 1.80 368,875 2294594620

Module: SQL*Plus

DECLARE v_default_schema_number PLS_INTEGER := '5'; v_update_pct

PLS_INTEGER := '4'; v_max_loop_iterations PLS_INTEGER := '0'; v

_seconds_to_run PLS_INTEGER := '300'; v_scale PLS_INTEGER := '32

768'; v_work_unit PLS_INTEGER := '64' ; v_redo_stress VARCHAR2(

268.86 1 268.86 10.0 1.76 368,679 1815383075

Module: SQL*Plus

DECLARE v_default_schema_number PLS_INTEGER := '9'; v_update_pct

PLS_INTEGER := '4'; v_max_loop_iterations PLS_INTEGER := '0'; v

_seconds_to_run PLS_INTEGER := '300'; v_scale PLS_INTEGER := '32

768'; v_work_unit PLS_INTEGER := '64' ; v_redo_stress VARCHAR2(

268.42 1 268.42 10.0 1.74 360,329 1410152490

Module: SQL*Plus

DECLARE v_default_schema_number PLS_INTEGER := '4'; v_update_pct

PLS_INTEGER := '4'; v_max_loop_iterations PLS_INTEGER := '0'; v

_seconds_to_run PLS_INTEGER := '300'; v_scale PLS_INTEGER := '32

768'; v_work_unit PLS_INTEGER := '64' ; v_redo_stress VARCHAR2(

268.16 1 268.16 10.0 1.63 329,464 3879266389

Module: SQL*Plus

DECLARE v_default_schema_number PLS_INTEGER := '6'; v_update_pct

PLS_INTEGER := '4'; v_max_loop_iterations PLS_INTEGER := '0'; v

_seconds_to_run PLS_INTEGER := '300'; v_scale PLS_INTEGER := '32

768'; v_work_unit PLS_INTEGER := '64' ; v_redo_stress VARCHAR2(

268.11 1 268.11 10.0 1.57 316,193 72207027

Module: SQL*Plus

DECLARE v_default_schema_number PLS_INTEGER := '3'; v_update_pct

PLS_INTEGER := '4'; v_max_loop_iterations PLS_INTEGER := '0'; v

_seconds_to_run PLS_INTEGER := '300'; v_scale PLS_INTEGER := '32

768'; v_work_unit PLS_INTEGER := '64' ; v_redo_stress VARCHAR2(

268.07 1 268.07 10.0 1.68 349,745 203624297

Module: SQL*Plus

DECLARE v_default_schema_number PLS_INTEGER := '7'; v_update_pct

PLS_INTEGER := '4'; v_max_loop_iterations PLS_INTEGER := '0'; v

_seconds_to_run PLS_INTEGER := '300'; v_scale PLS_INTEGER := '32

768'; v_work_unit PLS_INTEGER := '64' ; v_redo_stress VARCHAR2(

267.82 1 267.82 10.0 1.65 335,483 2698804698

Module: SQL*Plus

DECLARE v_default_schema_number PLS_INTEGER := '10'; v_update_pc

t PLS_INTEGER := '4'; v_max_loop_iterations PLS_INTEGER := '0';

v_seconds_to_run PLS_INTEGER := '300'; v_scale PLS_INTEGER := '3

2768'; v_work_unit PLS_INTEGER := '64' ; v_redo_stress VARCHAR2

118.58 152 0.78 4.4 0.78 7,419 789159957

Module: SQL*Plus

UPDATE CF1 SET C2 = :B3 , C20 = :B3 WHERE ( CUSTID > ( :B1 - :B2

)) AND (CUSTID < :B1 )

-------------------------------------------------------------

SQL ordered by Gets DB/Inst: XE/XE Snaps: 13-14

-> End Buffer Gets Threshold: 10000 Total Buffer Gets: 4,003,140

-> Captured SQL accounts for 99.5% of Total Buffer Gets

-> SQL reported below exceeded 1.0% of Total Buffer Gets

CPU Elapsd Old

Buffer Gets Executions Gets per Exec %Total Time (s) Time (s) Hash Value

--------------- ------------ -------------- ------ -------- --------- ----------

3,847,673 1,872 2,055.4 ###### 9.53 1047.76 2922983071

Module: SQL*Plus

SELECT COUNT(C2) FROM CF2

873,550 1 873,550.0 601.1 2.81 268.97 3562924056

Module: SQL*Plus

DECLARE v_default_schema_number PLS_INTEGER := '1'; v_update_pct

PLS_INTEGER := '4'; v_max_loop_iterations PLS_INTEGER := '0'; v

_seconds_to_run PLS_INTEGER := '300'; v_scale PLS_INTEGER := '32

768'; v_work_unit PLS_INTEGER := '64' ; v_redo_stress VARCHAR2(

371,759 1 371,759.0 255.8 1.80 268.95 2294594620

Module: SQL*Plus

DECLARE v_default_schema_number PLS_INTEGER := '5'; v_update_pct

PLS_INTEGER := '4'; v_max_loop_iterations PLS_INTEGER := '0'; v

_seconds_to_run PLS_INTEGER := '300'; v_scale PLS_INTEGER := '32

768'; v_work_unit PLS_INTEGER := '64' ; v_redo_stress VARCHAR2(

371,614 1 371,614.0 255.7 1.76 268.86 1815383075

Module: SQL*Plus

DECLARE v_default_schema_number PLS_INTEGER := '9'; v_update_pct

PLS_INTEGER := '4'; v_max_loop_iterations PLS_INTEGER := '0'; v

_seconds_to_run PLS_INTEGER := '300'; v_scale PLS_INTEGER := '32

768'; v_work_unit PLS_INTEGER := '64' ; v_redo_stress VARCHAR2(

363,476 1 363,476.0 250.1 1.74 268.42 1410152490

Module: SQL*Plus

DECLARE v_default_schema_number PLS_INTEGER := '4'; v_update_pct

PLS_INTEGER := '4'; v_max_loop_iterations PLS_INTEGER := '0'; v

_seconds_to_run PLS_INTEGER := '300'; v_scale PLS_INTEGER := '32

768'; v_work_unit PLS_INTEGER := '64' ; v_redo_stress VARCHAR2(

357,436 1 357,436.0 246.0 1.70 269.01 3704050312

Module: SQL*Plus

DECLARE v_default_schema_number PLS_INTEGER := '8'; v_update_pct

PLS_INTEGER := '4'; v_max_loop_iterations PLS_INTEGER := '0'; v

_seconds_to_run PLS_INTEGER := '300'; v_scale PLS_INTEGER := '32

768'; v_work_unit PLS_INTEGER := '64' ; v_redo_stress VARCHAR2(

352,430 1 352,430.0 242.5 1.68 268.07 203624297

Module: SQL*Plus

DECLARE v_default_schema_number PLS_INTEGER := '7'; v_update_pct

PLS_INTEGER := '4'; v_max_loop_iterations PLS_INTEGER := '0'; v

_seconds_to_run PLS_INTEGER := '300'; v_scale PLS_INTEGER := '32

768'; v_work_unit PLS_INTEGER := '64' ; v_redo_stress VARCHAR2(

337,416 1 337,416.0 232.2 1.65 267.82 2698804698

Module: SQL*Plus

DECLARE v_default_schema_number PLS_INTEGER := '10'; v_update_pc

t PLS_INTEGER := '4'; v_max_loop_iterations PLS_INTEGER := '0';

v_seconds_to_run PLS_INTEGER := '300'; v_scale PLS_INTEGER := '3

2768'; v_work_unit PLS_INTEGER := '64' ; v_redo_stress VARCHAR2

331,738 1 331,738.0 228.3 1.63 268.16 3879266389

Module: SQL*Plus

DECLARE v_default_schema_number PLS_INTEGER := '6'; v_update_pct

PLS_INTEGER := '4'; v_max_loop_iterations PLS_INTEGER := '0'; v

_seconds_to_run PLS_INTEGER := '300'; v_scale PLS_INTEGER := '32

768'; v_work_unit PLS_INTEGER := '64' ; v_redo_stress VARCHAR2(

327,016 1 327,016.0 225.0 1.60 269.00 3125669980

Module: SQL*Plus

DECLARE v_default_schema_number PLS_INTEGER := '2'; v_update_pct

PLS_INTEGER := '4'; v_max_loop_iterations PLS_INTEGER := '0'; v

_seconds_to_run PLS_INTEGER := '300'; v_scale PLS_INTEGER := '32

768'; v_work_unit PLS_INTEGER := '64' ; v_redo_stress VARCHAR2(

317,989 1 317,989.0 218.8 1.57 268.11 72207027

Module: SQL*Plus

DECLARE v_default_schema_number PLS_INTEGER := '3'; v_update_pct

PLS_INTEGER := '4'; v_max_loop_iterations PLS_INTEGER := '0'; v

_seconds_to_run PLS_INTEGER := '300'; v_scale PLS_INTEGER := '32

768'; v_work_unit PLS_INTEGER := '64' ; v_redo_stress VARCHAR2(

124,514 1,906 65.3 85.7 7.32 1511.20 1900182548

Module: SQL*Plus

SELECT COUNT(C2) FROM CF1 WHERE ( CUSTID > ( :B1 - :B2 ) ) AND (

CUSTID < :B1 )

-------------------------------------------------------------

SQL ordered by Reads DB/Inst: XE/XE Snaps: 13-14

-> End Disk Reads Threshold: 1000 Total Disk Reads: 3,964,080

-> Captured SQL accounts for 99.6% of Total Disk Reads

-> SQL reported below exceeded 1.0% of Total Disk Reads

CPU Elapsd

Physical Rds Executions Rds per Exec %Total Time (s) Time (s) Hash Value

--------------- ------------ -------------- ------ -------- --------- ----------

3,842,048 1,872 2,052.4 96.9 9.53 1047.76 2922983071

Module: SQL*Plus

SELECT COUNT(C2) FROM CF2

859,964 1 859,964.0 21.7 2.81 268.97 3562924056

Module: SQL*Plus

DECLARE v_default_schema_number PLS_INTEGER := '1'; v_update_pct

PLS_INTEGER := '4'; v_max_loop_iterations PLS_INTEGER := '0'; v

_seconds_to_run PLS_INTEGER := '300'; v_scale PLS_INTEGER := '32

768'; v_work_unit PLS_INTEGER := '64' ; v_redo_stress VARCHAR2(

368,875 1 368,875.0 9.3 1.80 268.95 2294594620

Module: SQL*Plus

DECLARE v_default_schema_number PLS_INTEGER := '5'; v_update_pct

PLS_INTEGER := '4'; v_max_loop_iterations PLS_INTEGER := '0'; v

_seconds_to_run PLS_INTEGER := '300'; v_scale PLS_INTEGER := '32

768'; v_work_unit PLS_INTEGER := '64' ; v_redo_stress VARCHAR2(

368,679 1 368,679.0 9.3 1.76 268.86 1815383075

Module: SQL*Plus

DECLARE v_default_schema_number PLS_INTEGER := '9'; v_update_pct

PLS_INTEGER := '4'; v_max_loop_iterations PLS_INTEGER := '0'; v

_seconds_to_run PLS_INTEGER := '300'; v_scale PLS_INTEGER := '32

768'; v_work_unit PLS_INTEGER := '64' ; v_redo_stress VARCHAR2(

360,329 1 360,329.0 9.1 1.74 268.42 1410152490

Module: SQL*Plus

DECLARE v_default_schema_number PLS_INTEGER := '4'; v_update_pct

PLS_INTEGER := '4'; v_max_loop_iterations PLS_INTEGER := '0'; v

_seconds_to_run PLS_INTEGER := '300'; v_scale PLS_INTEGER := '32

768'; v_work_unit PLS_INTEGER := '64' ; v_redo_stress VARCHAR2(

354,796 1 354,796.0 9.0 1.70 269.01 3704050312

Module: SQL*Plus

DECLARE v_default_schema_number PLS_INTEGER := '8'; v_update_pct

PLS_INTEGER := '4'; v_max_loop_iterations PLS_INTEGER := '0'; v

_seconds_to_run PLS_INTEGER := '300'; v_scale PLS_INTEGER := '32

768'; v_work_unit PLS_INTEGER := '64' ; v_redo_stress VARCHAR2(

349,745 1 349,745.0 8.8 1.68 268.07 203624297

Module: SQL*Plus

DECLARE v_default_schema_number PLS_INTEGER := '7'; v_update_pct

PLS_INTEGER := '4'; v_max_loop_iterations PLS_INTEGER := '0'; v

_seconds_to_run PLS_INTEGER := '300'; v_scale PLS_INTEGER := '32

768'; v_work_unit PLS_INTEGER := '64' ; v_redo_stress VARCHAR2(

335,483 1 335,483.0 8.5 1.65 267.82 2698804698

Module: SQL*Plus

DECLARE v_default_schema_number PLS_INTEGER := '10'; v_update_pc

t PLS_INTEGER := '4'; v_max_loop_iterations PLS_INTEGER := '0';

v_seconds_to_run PLS_INTEGER := '300'; v_scale PLS_INTEGER := '3

2768'; v_work_unit PLS_INTEGER := '64' ; v_redo_stress VARCHAR2

329,464 1 329,464.0 8.3 1.63 268.16 3879266389

Module: SQL*Plus

DECLARE v_default_schema_number PLS_INTEGER := '6'; v_update_pct

PLS_INTEGER := '4'; v_max_loop_iterations PLS_INTEGER := '0'; v

_seconds_to_run PLS_INTEGER := '300'; v_scale PLS_INTEGER := '32

768'; v_work_unit PLS_INTEGER := '64' ; v_redo_stress VARCHAR2(

324,997 1 324,997.0 8.2 1.60 269.00 3125669980

Module: SQL*Plus

DECLARE v_default_schema_number PLS_INTEGER := '2'; v_update_pct

PLS_INTEGER := '4'; v_max_loop_iterations PLS_INTEGER := '0'; v

_seconds_to_run PLS_INTEGER := '300'; v_scale PLS_INTEGER := '32

768'; v_work_unit PLS_INTEGER := '64' ; v_redo_stress VARCHAR2(

316,193 1 316,193.0 8.0 1.57 268.11 72207027

Module: SQL*Plus

DECLARE v_default_schema_number PLS_INTEGER := '3'; v_update_pct

PLS_INTEGER := '4'; v_max_loop_iterations PLS_INTEGER := '0'; v

_seconds_to_run PLS_INTEGER := '300'; v_scale PLS_INTEGER := '32

768'; v_work_unit PLS_INTEGER := '64' ; v_redo_stress VARCHAR2(

98,887 1,906 51.9 2.5 7.32 1511.20 1900182548

Module: SQL*Plus

SELECT COUNT(C2) FROM CF1 WHERE ( CUSTID > ( :B1 - :B2 ) ) AND (

CUSTID < :B1 )

-------------------------------------------------------------

SQL ordered by Executions DB/Inst: XE/XE Snaps: 13-14

-> End Executions Threshold: 100 Total Executions: 4,091

-> Captured SQL accounts for 96.6% of Total Executions

-> SQL reported below exceeded 1.0% of Total Executions

CPU per Elap per Old

Executions Rows Processed Rows per Exec Exec (s) Exec (s) Hash Value

------------ --------------- ---------------- ----------- ---------- ----------

1,906 1,912 1.0 0.00 0.79 1900182548

Module: SQL*Plus

SELECT COUNT(C2) FROM CF1 WHERE ( CUSTID > ( :B1 - :B2 ) ) AND (

CUSTID < :B1 )

1,872 1,876 1.0 0.01 0.56 2922983071

Module: SQL*Plus

SELECT COUNT(C2) FROM CF2

152 9,576 63.0 0.01 0.78 789159957

Module: SQL*Plus

UPDATE CF1 SET C2 = :B3 , C20 = :B3 WHERE ( CUSTID > ( :B1 - :B2

)) AND (CUSTID < :B1 )

-------------------------------------------------------------

SQL ordered by Parse Calls DB/Inst: XE/XE Snaps: 13-14

-> End Parse Calls Threshold: 1000 Total Parse Calls: 98

-> Captured SQL accounts for 13.3% of Total Parse Calls

-> SQL reported below exceeded 1.0% of Total Parse Calls

% Total Old

Parse Calls Executions Parses Hash Value

------------ ------------ -------- ----------

10 10 10.20 1307714173

Module: SQL*Plus

BEGIN DBMS_OUTPUT.GET_LINES(:LINES, :NUMLINES); END;

2 2 2.04 2275360153

select /*+ connect_by_filtering */ privilege#,level from sysauth

$ connect by grantee#=prior privilege# and privilege#>0 start wi

th grantee#=:1 and privilege#>0

1 1 1.02 588252891

Module: SQL*Plus

BEGIN :SNAP := STATSPACK.SNAP; END;

-------------------------------------------------------------

Instance Activity Stats DB/Inst: XE/XE Snaps: 13-14

Statistic Total per Second per Trans

--------------------------------- ------------------ -------------- ------------

Batched IO (bound) vector count 0 0.0 0.0

Batched IO (full) vector count 0 0.0 0.0

Batched IO block miss count 0 0.0 0.0

Batched IO buffer defrag count 0 0.0 0.0

Batched IO double miss count 0 0.0 0.0

Batched IO same unit count 0 0.0 0.0

Batched IO vector block count 0 0.0 0.0

Batched IO vector read count 0 0.0 0.0

Block Cleanout Optim referenced 14 0.1 0.1

CCursor + sql area evicted 0 0.0 0.0

CPU used by this session 1,844 6.1 12.0

CPU used when call started 2,088 6.9 13.6

CR blocks created 0 0.0 0.0

Cached Commit SCN referenced 3,861,311 12,701.7 25,073.5

Commit SCN cached 0 0.0 0.0

DB time 300,602 988.8 1,952.0

DBWR checkpoint buffers written 0 0.0 0.0

DBWR checkpoints 0 0.0 0.0

DBWR tablespace checkpoint buffer 0 0.0 0.0

DBWR thread checkpoint buffers wr 0 0.0 0.0

DBWR transaction table writes 0 0.0 0.0

DBWR undo block writes 582 1.9 3.8

Effective IO time 1,030,603,929 3,390,144.5 6,692,233.3

HSC Heap Segment Block Changes 9,775 32.2 63.5

Heap Segment Array Inserts 134 0.4 0.9

Heap Segment Array Updates 0 0.0 0.0

IMU Flushes 143 0.5 0.9

IMU Redo allocation size 1,487,020 4,891.5 9,656.0

IMU commits 11 0.0 0.1

IMU contention 142 0.5 0.9

IMU ktichg flush 0 0.0 0.0

IMU recursive-transaction flush 1 0.0 0.0

IMU undo allocation size 2,068,656 6,804.8 13,432.8

Number of read IOs issued 62,141 204.4 403.5

RowCR - row contention 0 0.0 0.0

RowCR attempts 1 0.0 0.0

RowCR hits 1 0.0 0.0

SQL*Net roundtrips to/from client 33 0.1 0.2

active txn count during cleanout 109 0.4 0.7

application wait time 0 0.0 0.0

background checkpoints completed 0 0.0 0.0

background checkpoints started 0 0.0 0.0

background timeouts 1,206 4.0 7.8

buffer is not pinned count 125,027 411.3 811.9

buffer is pinned count 119,205 392.1 774.1

bytes received via SQL*Net from c 7,694 25.3 50.0

bytes sent via SQL*Net to client 9,280 30.5 60.3

calls to get snapshot scn: kcmgss 4,290 14.1 27.9

calls to kcmgas 209 0.7 1.4

calls to kcmgcs 5,909 19.4 38.4

cell physical IO interconnect byt 32,569,245,184 107,135,675.0 ############

change write time 9 0.0 0.1

cleanout - number of ktugct calls 112 0.4 0.7

cleanouts only - consistent read 1 0.0 0.0

cluster key scan block gets 0 0.0 0.0

cluster key scans 0 0.0 0.0

commit cleanout failures: block l 0 0.0 0.0

commit cleanout failures: callbac 9 0.0 0.1

commit cleanouts 9,909 32.6 64.3

commit cleanouts successfully com 9,900 32.6 64.3

commit txn count during cleanout 48 0.2 0.3

concurrency wait time 0 0.0 0.0

consistent changes 0 0.0 0.0

consistent gets 3,989,523 13,123.4 25,906.0

consistent gets - examination 2,367 7.8 15.4

consistent gets direct 3,857,814 12,690.2 25,050.7

consistent gets from cache 131,709 433.3 855.3

consistent gets from cache (fastp 45,515 149.7 295.6

cursor authentications 0 0.0 0.0

data blocks consistent reads - un 0 0.0 0.0

db block changes 22,496 74.0 146.1

db block gets 13,616 44.8 88.4

db block gets from cache 13,616 44.8 88.4

db block gets from cache (fastpat 928 3.1 6.0

deferred (CURRENT) block cleanout 9,334 30.7 60.6

dirty buffers inspected 8,129 26.7 52.8

enqueue conversions 0 0.0 0.0

enqueue releases 567 1.9 3.7

enqueue requests 557 1.8 3.6

enqueue timeouts 0 0.0 0.0

enqueue waits 0 0.0 0.0

execute count 4,091 13.5 26.6

file io wait time 1,916,918,899 6,305,654.3 ############

free buffer inspected 112,825 371.1 732.6

free buffer requested 107,530 353.7 698.3

heap block compress 0 0.0 0.0

hot buffers moved to head of LRU 12,620 41.5 82.0

immediate (CR) block cleanout app 1 0.0 0.0

immediate (CURRENT) block cleanou 122 0.4 0.8

index crx upgrade (positioned) 0 0.0 0.0

index fast full scans (full) 1 0.0 0.0

index fetch by key 159 0.5 1.0

index scans kdiixs1 2,098 6.9 13.6

leaf node 90-10 splits 1 0.0 0.0

leaf node splits 47 0.2 0.3

lob reads 0 0.0 0.0

lob writes 0 0.0 0.0

lob writes unaligned 0 0.0 0.0

logons cumulative 1 0.0 0.0

max cf enq hold time 0 0.0 0.0

messages received 946 3.1 6.1

messages sent 946 3.1 6.1

min active SCN optimization appli 21 0.1 0.1

no work - consistent read gets 3,979,853 13,091.6 25,843.2

non-idle wait count 167,913 552.4 1,090.3

non-idle wait time 268,075 881.8 1,740.8

opened cursors cumulative 4,203 13.8 27.3

parse count (failures) 0 0.0 0.0

parse count (hard) 0 0.0 0.0

parse count (total) 98 0.3 0.6

parse time cpu 0 0.0 0.0

parse time elapsed 1 0.0 0.0

physical read IO requests 168,897 555.6 1,096.7

physical read bytes 32,473,743,360 106,821,524.2 ############

physical read total IO requests 169,417 557.3 1,100.1

physical read total bytes 32,486,031,360 106,861,945.3 ############

physical read total multi block r 62,163 204.5 403.7

physical reads 3,964,080 13,039.7 25,740.8

physical reads cache 106,742 351.1 693.1

physical reads cache prefetch 14,721 48.4 95.6

physical reads direct 3,857,338 12,688.6 25,047.7

physical reads direct temporary t 0 0.0 0.0

physical reads prefetch warmup 0 0.0 0.0

physical write IO requests 7,821 25.7 50.8

physical write bytes 71,491,584 235,169.7 464,231.1

physical write total IO requests 8,110 26.7 52.7

physical write total bytes 83,213,824 273,729.7 540,349.5

physical write total multi block 25 0.1 0.2

physical writes 8,727 28.7 56.7

physical writes direct 0 0.0 0.0

physical writes from cache 8,727 28.7 56.7

physical writes non checkpoint 8,664 28.5 56.3

pinned buffers inspected 1 0.0 0.0

prefetch warmup blocks aged out b 0 0.0 0.0

prefetched blocks aged out before 0 0.0 0.0

process last non-idle time 304 1.0 2.0

recursive calls 5,842 19.2 37.9

recursive cpu usage 1,821 6.0 11.8

redo blocks checksummed by FG (ex 11,095 36.5 72.1

redo blocks written 19,727 64.9 128.1

redo entries 9,748 32.1 63.3

redo size 9,901,596 32,571.0 64,296.1

redo synch long waits 2 0.0 0.0

redo synch time 3 0.0 0.0

redo synch time (usec) 35,960 118.3 233.5

redo synch writes 11 0.0 0.1

redo wastage 43,772 144.0 284.2

redo write time 357 1.2 2.3

redo writes 188 0.6 1.2

rollback changes - undo records a 0 0.0 0.0

rollbacks only - consistent read 0 0.0 0.0

rows fetched via callback 12 0.0 0.1

scheduler wait time 1,163 3.8 7.6

session connect time 334 1.1 2.2

session cursor cache hits 4,118 13.6 26.7

session logical reads 4,003,140 13,168.2 25,994.4

session uga memory max 12,482,920 41,062.2 81,057.9

shared hash latch upgrades - no w 17 0.1 0.1

sorts (memory) 82 0.3 0.5

sorts (rows) 5,576 18.3 36.2

sql area evicted 0 0.0 0.0

sql area purged 0 0.0 0.0

summed dirty queue length 54,161 178.2 351.7

switch current to new buffer 1 0.0 0.0

table fetch by rowid 121,024 398.1 785.9

table fetch continued row 0 0.0 0.0

table scan blocks gotten 3,856,291 12,685.2 25,040.9

table scan rows gotten 3,856,423 12,685.6 25,041.7

table scans (cache partitions) 0 0.0 0.0

table scans (direct read) 1,882 6.2 12.2

table scans (long tables) 1,882 6.2 12.2

table scans (short tables) 20 0.1 0.1

total cf enq hold time 0 0.0 0.0

total number of cf enq holders 0 0.0 0.0

total number of times SMON posted 0 0.0 0.0

undo change vector size 4,105,512 13,505.0 26,659.2

user I/O wait time 265,366 872.9 1,723.2

user calls 50 0.2 0.3

user commits 154 0.5 1.0

workarea executions - optimal 86 0.3 0.6

write clones created in foregroun 2 0.0 0.0

-------------------------------------------------------------

Instance Activity Stats DB/Inst: XE/XE Snaps: 13-14

-> Statistics with absolute values (should not be diffed)

Statistic Begin Value End Value

--------------------------------- --------------- ---------------

logons current 34 24

opened cursors current 61 31

session cursor cache count 1,770 1,820

-------------------------------------------------------------

Instance Activity Stats DB/Inst: XE/XE Snaps: 13-14

-> Statistics identified by '(derived)' come from sources other than SYSSTAT

Statistic Total per Hour

--------------------------------- ------------------ ---------

log switches (derived) 0 .00

-------------------------------------------------------------

OS Statistics DB/Inst: XE/XE Snaps: 13-14

-> ordered by statistic type (CPU use, Virtual Memory, Hardware Config), Name

Statistic Total

------------------------- ----------------------

BUSY_TIME 4,851

IDLE_TIME 112,172

IOWAIT_TIME 80,601

SYS_TIME 3,848

USER_TIME 676

RSRC_MGR_CPU_WAIT_TIME 982

VM_IN_BYTES 32,327,352,320

VM_OUT_BYTES 82,188,288

PHYSICAL_MEMORY_BYTES 8,191,578,112

NUM_CPUS 4

NUM_CPU_CORES 2

NUM_CPU_SOCKETS 1

GLOBAL_RECEIVE_SIZE_MAX 212,992

GLOBAL_SEND_SIZE_MAX 212,992

TCP_RECEIVE_SIZE_DEFAULT 87,380

TCP_RECEIVE_SIZE_MAX 6,291,456

TCP_RECEIVE_SIZE_MIN 4,096

TCP_SEND_SIZE_DEFAULT 16,384

TCP_SEND_SIZE_MAX 4,194,304

TCP_SEND_SIZE_MIN 4,096

-------------------------------------------------------------

OS Statistics - detail DB/Inst: XE/XE Snaps: 13-14

Snap Snapshot

Id Day Time Load %Busy %User %System %WIO %WCPU

------ --------------- ------ ------ ------ ------- ------ ------

13 Fri 12 19:08:41 3.1

14 Fri 12 19:13:45 3.5 4.1 .6 3.3 68.9

-------------------------------------------------------------

IO Stat by Function - summary DB/Inst: XE/XE Snaps: 13-14

->Data Volume values suffixed with M,G,T,P are in multiples of 1024,

other values suffixed with K,M,G,T,P are in multiples of 1000

->ordered by Data Volume (Read+Write) desc

---------- Read --------- --------- Write -------- --- Wait ----

Data Requests Data Data Requests Data Avg

Function Volume /sec Vol/sec Volume /sec Vol/sec Count Tm(ms)

--------------- ------ -------- -------- ------ -------- -------- ------ ------

Direct Reads 30G 205.6 99.7M 0.0

Buffer Cache Re 839M 353.0 2.8M 92K 0.0

Others 8M 1.7 .0M 2M .3 .0M 611 0.0

-------------------------------------------------------------

IO Stat by Function - detail DB/Inst: XE/XE Snaps: 13-14

->ordered by Data Volume (Read+Write) desc

----------- Read ---------- ----------- Write ---------

Small Large Small Large Small Large Small Large

Read Read Data Data Write Write Data Data

Function Reqs Reqs Read Read Reqs Reqs Writn Writn

------------------ ------ ------ ------ ------ ------ ------ ------ ------

Direct Reads 63K 30G

Buffer Cache Reads 107K 839M

Others 512 8M 99 2M

-------------------------------------------------------------

Tablespace IO Stats DB/Inst: XE/XE Snaps: 13-14

->ordered by IOs (Reads + Writes) desc

Tablespace

------------------------------

Av Av Av Av Buffer Av Buf

Reads Reads/s Rd(ms) Blks/Rd Writes Writes/s Waits Wt(ms)

-------------- ------- ------ ------- ------------ -------- ---------- ------

USERS

169,586 558 9.5 23.5 8,091 27 0 0.0

STATSPACK

131 0 11.1 1.0 106 0 0 0.0

UNDOTBS1

15 0 19.3 1.0 68 0 0 0.0

-------------------------------------------------------------

File IO Stats DB/Inst: XE/XE Snaps: 13-14

->Mx Rd Bkt: Max bucket time for single block read

->ordered by Tablespace, File

Tablespace Filename

------------------------ ----------------------------------------------------

Av Mx Av

Av Rd Rd Av Av Buffer BufWt

Reads Reads/s (ms) Bkt Blks/Rd Writes Writes/s Waits (ms)

-------------- ------- ----- --- ------- ------------ -------- ---------- ------

STATSPACK /mnt/myrbd/XE/statspack.dbf

131 0 11.1 64 1.0 106 0 0

UNDOTBS1 /mnt/myrbd/XE/undotbs1.dbf

15 0 19.3 64 1.0 68 0 0

USERS /mnt/myrbd/XE/users.dbf

169,586 558 9.5 ### 23.5 8,091 27 0

-------------------------------------------------------------

File Read Histogram Stats DB/Inst: XE/XE Snaps: 13-14

->Number of single block reads in each time range

->Tempfiles are not included

->ordered by Tablespace, File

Tablespace Filename

------------------------ ----------------------------------------------------

0 - 2 ms 2 - 4 ms 4 - 8 ms 8 - 16 ms 16 - 32 ms 32+ ms

------------ ------------ ------------ ------------ ------------ ------------

UNDOTBS1 /mnt/myrbd/XE/undotbs1.dbf

4 1 0 1 6 3

STATSPACK /mnt/myrbd/XE/statspack.dbf

66 4 5 17 25 14

USERS /mnt/myrbd/XE/users.dbf

45,130 2,737 2,647 13,602 25,254 17,671

-------------------------------------------------------------

Instance Recovery Stats DB/Inst: XE/XE Snaps: 13-14

-> B: Begin snapshot, E: End snapshot

Targt Estd Log File Log Ckpt Log Ckpt

MTTR MTTR Recovery Actual Target Size Timeout Interval

(s) (s) Estd IOs Redo Blks Redo Blks Redo Blks Redo Blks Redo Blks

- ----- ----- ---------- --------- --------- ---------- --------- ------------

B 0 0 1713 5924 82944 82944

E 0 0 3512 24343 9877 82944 9877

-------------------------------------------------------------

Memory Target Advice DB/Inst: XE/XE Snaps: 13-14

-> Advice Reset: if this is null, the data shown has been diffed between

the Begin and End snapshots. If this is 'Y', the advisor has been

reset during this interval due to memory resize operations, and

the data shown is since the reset operation.

Memory Size Est. Advice

Memory Size (M) Factor DB time (s) Reset

--------------- ----------- ------------ ------

512 .5 2,698

768 .8 2,698

1,024 1.0 2,698

1,280 1.3 2,698

1,536 1.5 2,698

1,792 1.8 2,698

2,048 2.0 2,698

-------------------------------------------------------------

Memory Dynamic Components DB/Inst: XE/XE Snaps: 13-14

-> Op - memory resize Operation

-> Cache: D: Default, K: Keep, R: Recycle

-> Mode: DEF: DEFerred mode, IMM: IMMediate mode

Begin Snap End Snap Op Last Op

Cache Size (M) Size (M) Count Type/Mode Last Op Time

---------------------- ---------- -------- ------- ---------- ---------------

D:buffer cache 424 0 INITIA/

PGA Target 412 0 STATIC

SGA Target 612 0 STATIC

java pool 4 0 STATIC

large pool 4 0 STATIC

shared pool 168 0 STATIC

-------------------------------------------------------------

Buffer Pool Statistics DB/Inst: XE/XE Snaps: 13-14

-> Standard block size Pools D: default, K: keep, R: recycle

-> Default Pools for other block sizes: 2k, 4k, 8k, 16k, 32k

-> Buffers: the number of buffers. Units of K, M, G are divided by 1000

Free Writ Buffer

Pool Buffer Physical Physical Buffer Comp Busy

P Buffers Hit% Gets Reads Writes Waits Wait Waits

--- ------- ---- -------------- ------------ ----------- ------- ---- ----------

D 52K 27 145,694 107,012 8,951 0 0 0

-------------------------------------------------------------

PGA Aggr Target Stats DB/Inst: XE/XE Snaps: 13-14

-> B: Begin snap E: End snap (rows identified with B or E contain data

which is absolute i.e. not diffed over the interval)

-> PGA cache hit % - percentage of W/A (WorkArea) data processed only in-memory

-> Auto PGA Target - actual workarea memory target

-> W/A PGA Used - amount of memory used for all WorkAreas (manual + auto)

-> %PGA W/A Mem - percentage of PGA memory allocated to WorkAreas

-> %Auto W/A Mem - percentage of WorkArea memory controlled by Auto Mem Mgmt

-> %Man W/A Mem - percentage of WorkArea memory under Manual control

PGA Cache Hit % W/A MB Processed Extra W/A MB Read/Written

--------------- ---------------- -------------------------

100.0 22 0

PGA Aggr Target Histogram DB/Inst: XE/XE Snaps: 13-14

-> Optimal Executions are purely in-memory operations

Low High

Optimal Optimal Total Execs Optimal Execs 1-Pass Execs M-Pass Execs

------- ------- -------------- ------------- ------------ ------------

2K 4K 59 59 0 0

64K 128K 2 2 0 0

128K 256K 1 1 0 0

256K 512K 2 2 0 0

512K 1024K 14 14 0 0

4M 8M 2 2 0 0

-------------------------------------------------------------

PGA Memory Advisory DB/Inst: XE/XE End Snap: 14

-> When using Auto Memory Mgmt, minimally choose a pga_aggregate_target value

where Estd PGA Overalloc Count is 0

Estd Extra Estd

PGA Aggr W/A MB Estd Time PGA Estd PGA

Target Size W/A MB Read/Written to Process Cache Overalloc

Est (MB) Factr Processed to Disk Bytes (s) Hit % Count

---------- ------ -------------- -------------- ---------- ------ ----------

52 0.1 306 0 0.1 100.0 2

103 0.3 306 0 0.1 100.0 0

206 0.5 306 0 0.1 100.0 0

309 0.8 306 0 0.1 100.0 0

412 1.0 306 0 0.1 100.0 0

494 1.2 306 0 0.1 100.0 0

577 1.4 306 0 0.1 100.0 0

659 1.6 306 0 0.1 100.0 0

742 1.8 306 0 0.1 100.0 0

824 2.0 306 0 0.1 100.0 0

1,236 3.0 306 0 0.1 100.0 0

1,648 4.0 306 0 0.1 100.0 0

2,472 6.0 306 0 0.1 100.0 0

3,296 8.0 306 0 0.1 100.0 0

-------------------------------------------------------------

Process Memory Summary Stats DB/Inst: XE/XE Snaps: 13-14

-> B: Begin snap E: End snap

-> All rows below contain absolute values (i.e. not diffed over the interval)

-> Max Alloc is Maximum PGA Allocation size at snapshot time

Hist Max Alloc is the Historical Max Allocation for still-connected processes

-> Num Procs or Allocs: For Begin/End snapshot lines, it is the number of

processes. For Category lines, it is the number of allocations

-> ordered by Begin/End snapshot, Alloc (MB) desc

Hist Num

Avg Std Dev Max Max Procs

Alloc Used Freeabl Alloc Alloc Alloc Alloc or

Category (MB) (MB) (MB) (MB) (MB) (MB) (MB) Allocs

- -------- --------- --------- -------- -------- ------- ------- ------ ------

B -------- 109.2 64.1 18.5 2.8 2.6 12 12 39

Other 84.0 2.2 2.2 12 12 39

Freeable 18.5 .0 2.1 1.9 6 9

SQL 5.0 4.5 .2 .4 1 6 18

PL/SQL 1.7 1.6 .0 .1 0 0 34

E -------- 60.7 40.7 13.9 2.1 2.7 12 12 29

Other 46.0 1.6 2.2 12 12 29

Freeable 13.9 .0 2.0 2.3 6 7

PL/SQL .5 .5 .0 .1 0 0 24

SQL .3 .1 .0 .0 0 6 8

-------------------------------------------------------------

Top Process Memory (by component) DB/Inst: XE/XE Snaps: 13-14

-> ordered by Begin/End snapshot, Alloc (MB) desc

Alloc Used Freeabl Max Hist Max

PId Category (MB) (MB) (MB) Alloc (MB) Alloc (MB)

- ------ ------------- ------- ------- -------- ---------- ----------

B 11 LGWR -------- 11.7 10.8 .2 11.7 11.7

Other 11.5 11.5 11.5

Freeable .2 .0 .2

PL/SQL .0 .0 .0 .0

40 ------------ 8.1 1.5 6.2 8.1 8.1

Freeable 6.2 .0 6.2

Other 1.6 1.6 1.6

PL/SQL .2 .2 .2 .2

SQL .1 .1 .1 6.2

10 DBW0 -------- 7.3 6.8 .3 7.3 7.3

Other 7.0 7.0 7.0

Freeable .3 .0 .3

PL/SQL .0 .0 .0 .0

28 ------------ 5.3 2.7 .0 5.3 5.3

Other 5.1 5.1 5.1

PL/SQL .1 .1 .1 .1

SQL .0 .0 .0 1.8

32 ------------ 5.3 2.7 .0 5.3 5.3

Other 5.1 5.1 5.1

PL/SQL .1 .1 .1 .1

SQL .0 .0 .0 1.8

13 SMON -------- 4.8 1.0 3.6 4.8 4.8

Freeable 3.6 .0 3.6

Other 1.2 1.2 1.2

PL/SQL .0 .0 .0 .0

SQL .0 .0 .0 2.1

29 ------------ 4.8 2.1 2.4 4.8 4.8

Freeable 2.4 .0 2.4

Other 2.2 2.2 2.2

PL/SQL .1 .1 .1 .1

SQL .0 .0 .0 1.8

33 ------------ 4.8 2.3 .0 4.8 4.8

Other 4.6 4.6 4.6

PL/SQL .1 .1 .1 .1

SQL .0 .0 .0 1.8

34 ------------ 4.8 2.3 .0 4.8 4.8

Other 3.5 3.5 3.5

SQL 1.1 1.1 1.1 1.8

PL/SQL .1 .1 .1 .1

35 ------------ 4.8 2.3 .0 4.8 4.8

Other 4.6 4.6 4.6

PL/SQL .1 .1 .1 .1

SQL .0 .0 .0 1.8

36 ------------ 4.8 2.1 2.4 4.8 4.8

Freeable 2.4 .0 2.4

Other 2.2 2.2 2.2

PL/SQL .1 .1 .1 .1

SQL .0 .0 .0 1.8

39 ------------ 4.8 2.3 .0 4.8 4.8

Other 3.5 3.5 3.5

SQL 1.1 1.1 1.1 1.8

PL/SQL .1 .1 .1 .1

30 ------------ 4.7 2.3 .0 4.7 4.7

Other 3.5 3.5 3.5

SQL 1.1 1.1 1.1 1.7

PL/SQL .1 .1 .1 .1

31 ------------ 4.7 2.3 .0 4.7 4.7

Other 3.5 3.5 3.5

SQL 1.1 1.1 1.1 1.7

PL/SQL .1 .1 .1 .1

15 MMON -------- 4.5 1.9 2.3 4.5 4.5

Freeable 2.3 .0 2.3

Other 2.1 2.1 2.1

PL/SQL .1 .1 .1 .1

SQL .0 .0 .0 1.1

22 TNS V1-V3 --- 2.9 1.6 .9 2.9 6.8

Other 1.8 1.8 3.4

Freeable .9 .0 .9

SQL .1 .0 .1 2.5

PL/SQL .0 .0 .0 .0

25 Q000 -------- 1.6 1.1 .3 1.6 1.6

Other 1.0 1.0 1.0

Freeable .3 .0 .3

PL/SQL .2 .2 .2 .2

SQL .0 .0 .0 .2

12 CKPT -------- 1.5 .8 .0 1.5 1.5

Other 1.5 1.5 1.5

PL/SQL .0 .0 .0 .0

17 D000 -------- 1.3 1.2 .0 1.3 1.3

Other 1.3 1.3 1.3

8 DIA0 -------- 1.2 1.0 .0 1.2 1.2

Other 1.2 1.2 1.2

PL/SQL .0 .0 .0 .0

E 11 LGWR -------- 11.7 10.8 .2 11.7 11.7

Other 11.5 11.5 11.5

Freeable .2 .0 .2

PL/SQL .0 .0 .0 .0

28 ------------ 8.1 1.5 6.3 8.1 8.1

Freeable 6.3 .0 6.3

Other 1.6 1.6 1.6

PL/SQL .2 .2 .2 .2

SQL .1 .1 .1 6.2

10 DBW0 -------- 7.3 6.8 .3 7.3 7.3

Other 7.0 7.0 7.0

Freeable .3 .0 .3

PL/SQL .0 .0 .0 .0

13 SMON -------- 4.8 1.0 3.6 4.8 4.8

Freeable 3.6 .0 3.6

Other 1.1 1.1 1.1

PL/SQL .0 .0 .0 .0

SQL .0 .0 .0 2.1

15 MMON -------- 4.5 1.9 2.3 4.5 4.5

Freeable 2.3 .0 2.3

Other 2.1 2.1 2.1

PL/SQL .1 .1 .1 .1

SQL .0 .0 .0 1.1

22 TNS V1-V3 --- 2.9 1.6 .9 2.9 6.8

Other 1.8 1.8 3.4

Freeable .9 .0 .9

SQL .1 .0 .1 2.5

PL/SQL .0 .0 .0 .0

25 Q000 -------- 1.6 1.1 .3 1.6 1.6

Other 1.0 1.0 1.0

Freeable .3 .0 .3

PL/SQL .2 .2 .2 .2

SQL .0 .0 .0 .2

12 CKPT -------- 1.5 .8 .0 1.5 1.5

Other 1.5 1.5 1.5

PL/SQL .0 .0 .0 .0

17 D000 -------- 1.3 1.2 .0 1.3 1.3

Other 1.3 1.3 1.3

8 DIA0 -------- 1.2 1.0 .0 1.2 1.2

Other 1.2 1.2 1.2

PL/SQL .0 .0 .0 .0

14 RECO -------- 1.1 .8 .0 1.1 1.1

Other 1.1 1.1 1.1

PL/SQL .0 .0 .0 .0

SQL .0 .0 .0 .1

27 W001 -------- 1.1 .8 .0 1.1 1.1

Other 1.1 1.1 1.1

SQL .0 .0 .0 .0

PL/SQL .0 .0 .0 .0

7 DBRM -------- 1.0 .8 .0 1.0 1.0

Other 1.0 1.0 1.0

PL/SQL .0 .0 .0 .0

SQL .0 .0 .0 .1

-------------------------------------------------------------

Latch Activity DB/Inst: XE/XE Snaps: 13-14

->"Get Requests", "Pct Get Miss" and "Avg Slps/Miss" are statistics for

willing-to-wait latch get requests

->"NoWait Requests", "Pct NoWait Miss" are for no-wait latch get requests

->"Pct Misses" for both should be very close to 0.0

Pct Avg Wait Pct

Get Get Slps Time NoWait NoWait

Latch Requests Miss /Miss (s) Requests Miss

------------------------ -------------- ------ ------ ------ ------------ ------

AQ deq hash table latch 1 0.0 0 0

ASM db client latch 198 0.0 0 0

ASM map operation hash t 1 0.0 0 0

ASM network state latch 5 0.0 0 0

Change Notification Hash 101 0.0 0 0

Consistent RBA 188 0.0 0 0

DML lock allocation 446 0.0 0 0

Event Group Locks 2 0.0 0 0

FOB s.o list latch 38 0.0 0 0

File State Object Pool P 1 0.0 0 0

I/O Staticstics latch 1 0.0 0 0

IPC stats buffer allocat 1 0.0 0 0

In memory undo latch 36,893 0.0 1.0 0 154 0.0

JS Sh mem access 1 0.0 0 0

JS queue access latch 1 0.0 0 0

JS slv state obj latch 1 0.0 0 0

KFC FX Hash Latch 1 0.0 0 0

KFC Hash Latch 1 0.0 0 0

KFCL LE Freelist 1 0.0 0 0

KGNFS-NFS:SHM structure 1 0.0 0 0

KGNFS-NFS:SVR LIST 1 0.0 0 0

KJC message pool free li 1 0.0 0 0

KJCT flow control latch 1 0.0 0 0

KMG MMAN ready and start 100 0.0 0 0

KTF sga latch 1 0.0 0 87 0.0

KWQP Prop Status 1 0.0 0 0

Locator state objects po 1 0.0 0 0

Lsod array latch 1 0.0 0 0

MQL Tracking Latch 0 0 6 0.0

Memory Management Latch 1 0.0 0 100 0.0

Memory Queue 1 0.0 0 0

Memory Queue Message Sub 1 0.0 0 0

Memory Queue Message Sub 1 0.0 0 0

Memory Queue Message Sub 1 0.0 0 0

Memory Queue Message Sub 1 0.0 0 0

Memory Queue Subscriber 1 0.0 0 0

MinActiveScn Latch 2 0.0 0 0

Mutex 1 0.0 0 0

Mutex Stats 1 0.0 0 0

OS process 34 0.0 0 0

OS process allocation 614 0.0 0 0

OS process: request allo 12 0.0 0 0

PL/SQL warning settings 14 0.0 0 0

PX hash array latch 1 0.0 0 0

QMT 1 0.0 0 0

SGA IO buffer pool latch 6 0.0 0 6 0.0

SGA blob parent 1 0.0 0 0

SGA bucket locks 1 0.0 0 0

SGA heap locks 1 0.0 0 0

SGA pool locks 1 0.0 0 0

SQL memory manager latch 12 0.0 0 99 0.0

SQL memory manager worka 6,765 0.0 0 0

Shared B-Tree 15 0.0 0 0

Streams Generic 1 0.0 0 0

Testing 1 0.0 0 0

Token Manager 1 0.0 0 0

WCR: sync 1 0.0 0 0

Write State Object Pool 1 0.0 0 0

XDB NFS Security Latch 1 0.0 0 0

XDB unused session pool 1 0.0 0 0

XDB used session pool 1 0.0 0 0

active checkpoint queue 888 0.0 0 0

active service list 140 0.0 0 250 0.0

buffer pool 1 0.0 0 0

business card 1 0.0 0 0

cache buffer handles 358 0.0 0 0

cache buffers chains 477,546 0.0 0 132,590 0.0

cache buffers lru chain 10,477 0.0 0.0 0 125,954 0.2

call allocation 25 0.0 0 0

cas latch 1 0.0 0 0

change notification clie 1 0.0 0 0

channel handle pool latc 13 0.0 0 0

channel operations paren 1,614 0.0 0 0

checkpoint queue latch 22,169 0.0 0.0 0 10,320 0.0

client/application info 12 0.0 0 0

compile environment latc 1 0.0 0 0

cp cmon/server latch 1 0.0 0 0

cp pool latch 1 0.0 0 0

cp server hash latch 1 0.0 0 0

cp sga latch 5 0.0 0 0

cvmap freelist lock 1 0.0 0 0

deferred cleanup latch 5 0.0 0 0

dml lock allocation 5 0.0 0 0

done queue latch 1 0.0 0 0

dummy allocation 13 0.0 0 0

enqueue hash chains 1,117 0.0 0 0

enqueues 282 0.0 0 0

fifth spare latch 1 0.0 0 0

flashback copy 1 0.0 0 0

fourth Audit Vault latch 1 0.0 0 0

gc element 1 0.0 0 0

gcs commit scn state 1 0.0 0 0

gcs partitioned table ha 1 0.0 0 0

gcs pcm hashed value buc 1 0.0 0 0

gcs resource freelist 1 0.0 0 0

gcs resource hash 1 0.0 0 0

gcs resource scan list 1 0.0 0 0

gcs shadows freelist 1 0.0 0 0

ges domain table 1 0.0 0 0

ges enqueue table freeli 1 0.0 0 0

ges group table 1 0.0 0 0

ges process hash list 1 0.0 0 0

ges process parent latch 1 0.0 0 0

ges resource hash list 1 0.0 0 0

ges resource scan list 1 0.0 0 0

ges resource table freel 1 0.0 0 0

ges value block free lis 1 0.0 0 0

global KZLD latch for me 1 0.0 0 0

global tx hash mapping 1 0.0 0 0

granule operation 1 0.0 0 0

heartbeat check 1 0.0 0 0

intra txn parallel recov 1 0.0 0 0

io pool granule metadata 1 0.0 0 0

job workq parent latch 1 0.0 0 0

k2q lock allocation 1 0.0 0 0

kdlx hb parent latch 1 0.0 0 0

kgb parent 1 0.0 0 0

kgnfs mount latch 1 0.0 0 0

ksfv messages 1 0.0 0 0

ksim group membership ca 1 0.0 0 0

kss move lock 1 0.0 0 0

ksuosstats global area 23 0.0 0 0

ksv allocation latch 5 0.0 0 0

ksv class latch 1 0.0 0 0

ksv msg queue latch 1 0.0 0 0

ksz_so allocation latch 12 0.0 0 0

ktm global data 3 0.0 0 0

kwqbsn:qsga 14 0.0 0 0

lgwr LWN SCN 207 0.0 0 0

list of block allocation 650 0.0 0 0

loader state object free 3,766 0.0 0 0

lob segment dispenser la 1 0.0 0 0

lob segment hash table l 1 0.0 0 0

lob segment query latch 1 0.0 0 0

lock DBA buffer during m 1 0.0 0 0

logical standby cache 1 0.0 0 0

logminer context allocat 1 0.0 0 0

logminer work area 1 0.0 0 0

longop free list parent 1 0.0 0 0

mapped buffers lru chain 1 0.0 0 0

message pool operations 1 0.0 0 0

messages 4,494 0.0 0 0

mostly latch-free SCN 211 0.0 0 0

msg queue latch 1 0.0 0 0

multiblock read objects 1,648 0.0 0 0

name-service namespace b 1 0.0 0 0

ncodef allocation latch 5 0.0 0 0

object queue header heap 3,562 0.0 0 476 0.0

object queue header oper 261,485 0.0 0.0 0 0

object stats modificatio 11 0.0 0 0

parallel query alloc buf 41 0.0 0 0

parallel query stats 1 0.0 0 0

parameter list 15 0.0 0 0

parameter table manageme 12 0.0 0 0

peshm 1 0.0 0 0

pesom_free_list 1 0.0 0 0

pesom_hash_node 1 0.0 0 0

post/wait queue 6 0.0 0 2 0.0

process allocation 12 0.0 0 1 0.0

process group creation 12 0.0 0 0

process queue 1 0.0 0 0

process queue reference 1 0.0 0 0

qmn task queue latch 44 0.0 0 0

query server freelists 1 0.0 0 0

queued dump request 1 0.0 0 0

queuing load statistics 1 0.0 0 0

recovery domain hash lis 1 0.0 0 0

redo allocation 10,461 0.1 0.0 0 0

redo copy 1 0.0 0 9,757 0.1

redo writing 1,651 0.0 0 0

resmgr group change latc 3 0.0 0 0

resmgr:active threads 13 0.0 0 111 0.0

resmgr:actses change gro 2 0.0 0 0

resmgr:actses change sta 1 0.0 0 0

resmgr:free threads list 12 0.0 0 0

resmgr:plan CPU method 1 0.0 0 0

resmgr:resource group CP 12,068 0.2 0.0 0 0

resmgr:schema config 1 0.0 0 5 0.0

resmgr:session queuing 1 0.0 0 0

rm cas latch 1 0.0 0 0

row cache objects 1,411 0.0 0 0

rules engine rule set st 100 0.0 0 0

second Audit Vault latch 1 0.0 0 0

second spare latch 1 0.0 0 0

sequence cache 6 0.0 0 0

session allocation 194 0.0 0 171 0.0

session idle bit 281 0.0 0 0

session queue latch 1 0.0 0 0

session state list latch 14 0.0 0 0

session switching 61 0.0 0 0

session timer 100 0.0 0 0

shared pool 741 0.0 0 0

shared pool sim alloc 1 0.0 0 0

shared pool simulator 84 0.0 0 0

sim partition latch 1 0.0 0 0

simulator hash latch 1 0.0 0 0

simulator lru latch 1 0.0 0 0

sort extent pool 6 0.0 0 0

space background task la 345 0.6 0.0 0 202 0.0

tablespace key chain 1 0.0 0 0

test excl. parent l0 1 0.0 0 0

test excl. parent2 l0 1 0.0 0 0

third spare latch 1 0.0 0 0

threshold alerts latch 6 0.0 0 0

transaction allocation 16 0.0 0 0

undo global data 1,647 0.0 0 0

virtual circuit buffers 1 0.0 0 0

virtual circuit holder 1 0.0 0 0

virtual circuit queues 1 0.0 0 0

-------------------------------------------------------------

Latch Sleep breakdown DB/Inst: XE/XE Snaps: 13-14

-> ordered by misses desc

                                       Get                                 Spin
Latch Name                        Requests       Misses      Sleeps        Gets
-------------------------- --------------- ------------ ----------- -----------
In memory undo latch                36,893            3           3           0

-------------------------------------------------------------

Latch Miss Sources DB/Inst: XE/XE Snaps: 13-14

-> only latches with sleeps are shown

-> ordered by name, sleeps desc

                                                     NoWait              Waiter
Latch Name               Where                       Misses     Sleeps   Sleeps
------------------------ -------------------------- ------- ---------- --------
In memory undo latch     ktiFlush: child                  0          3        0

-------------------------------------------------------------

Dictionary Cache Stats DB/Inst: XE/XE Snaps: 13-14

->"Pct Misses" should be very low (< 2% in most cases)

->"Final Usage" is the number of cache entries being used in End Snapshot

                                   Get    Pct    Scan   Pct      Mod      Final
Cache                         Requests   Miss    Reqs  Miss     Reqs      Usage
------------------------- ------------ ------ ------- ----- -------- ----------
dc_awr_control                       5    0.0       0              0          1
dc_global_oids                      30    0.0       0              0         34
dc_objects                          31    0.0       0              0      1,590
dc_profiles                          1    0.0       0              0          1
dc_rollback_segments                43    0.0       0              0         22
dc_tablespaces                      33    0.0       0              0          7
dc_users                           137    0.0       0              0         59
global database name               203    0.0       0              0          1

-------------------------------------------------------------

Library Cache Activity DB/Inst: XE/XE Snaps: 13-14

->"Pct Misses" should be very low

                         Get    Pct            Pin    Pct             Invali-
Namespace           Requests   Miss       Requests   Miss    Reloads  dations
--------------- ------------ ------ -------------- ------ ---------- --------
ACCOUNT_STATUS             2    0.0              0                 0        0
BODY                       7    0.0             18    0.0          0        0
DBLINK                     2    0.0              0                 0        0
EDITION                    1    0.0              2    0.0          0        0
SCHEMA                     1    0.0              0                 0        0
SQL AREA                  23    0.0          4,105    0.0          0        0
TABLE/PROCEDURE           14    0.0             35    0.0          0        0

-------------------------------------------------------------

Rule Sets DB/Inst: XE/XE Snaps: 13-14

-> * indicates Rule Set activity (re)started between Begin/End snaps

-> Top 25 ordered by Evaluations desc

                                                               No-SQL    SQL
Rule                                *     Eval/sec Reloads/sec Eval % Eval %
----------------------------------- - ------------ ----------- ------ ------
SYS.ALERT_QUE_R                                  0           0      0      0

-------------------------------------------------------------

Shared Pool Advisory DB/Inst: XE/XE End Snap: 14

-> SP: Shared Pool     Est LC: Estimated Library Cache   Factr: Factor
-> Note there is often a 1:Many correlation between a single logical object
   in the Library Cache, and the physical number of memory objects associated
   with it. Therefore comparing the number of Lib Cache objects (e.g. in
   v$librarycache), with the number of Lib Cache Memory Objects is invalid

                                        Est LC Est LC  Est LC Est LC
    Shared    SP   Est LC                 Time   Time    Load   Load      Est LC
      Pool  Size     Size       Est LC   Saved  Saved    Time   Time         Mem
  Size (M) Factr      (M)      Mem Obj     (s)  Factr     (s)  Factr    Obj Hits
---------- ----- -------- ------------ ------- ------ ------- ------ -----------
       128    .8        5          413   3,134    1.0      43    1.7      14,398
       148    .9       25        1,914   3,139    1.0      38    1.5      54,776
       168   1.0       43        3,083   3,151    1.0      26    1.0      55,144
       188   1.1       54        3,644   3,151    1.0      26    1.0      55,150
       208   1.2       54        3,644   3,151    1.0      26    1.0      55,150
       228   1.4       54        3,644   3,151    1.0      26    1.0      55,150
       248   1.5       54        3,644   3,151    1.0      26    1.0      55,150
       268   1.6       54        3,644   3,151    1.0      26    1.0      55,150
       288   1.7       54        3,644   3,151    1.0      26    1.0      55,150
       308   1.8       54        3,644   3,151    1.0      26    1.0      55,150
       328   2.0       54        3,644   3,151    1.0      26    1.0      55,150
       348   2.1       54        3,644   3,151    1.0      26    1.0      55,150

-------------------------------------------------------------