This article compares the performance of several web servers for a Laravel-based application. What follows is a lot of graphs, configuration settings, and my personal conclusions, which in no way claim to be universal truth.

I have been working with NGINX Unit (+ Lumen) for a while, but I still see PHP-FPM used in new projects quite often. When I suggest switching to NGINX Unit, the natural question is: "How exactly is it better?" A search for relevant articles yields little: mostly old, uninformative articles on obscure websites, plus a 2019 article on Habr that runs a similar comparison of several web servers for a Symfony-based application. But, first, PHP and NGINX Unit have moved far ahead since it was published; second, I was interested in performance for Laravel and Lumen specifically. So I cobbled together a simple test bench with several web servers and ran some load tests, and I'm sharing the results here.

About the test bench

Test bench specs:

CPU: AMD Ryzen 9 5900X 12-Core

RAM: DDR4 4000 MHz 32GiB x2

SSD: Samsung SSD 980 PRO 500GB nvme

OS: Xubuntu 20.04

Testing applications:

Laravel 8.69.0

Laravel is one of the most popular PHP frameworks. Out of the box, it provides plenty of functionality that covers almost any business need in reasonable time. At the same time, it is flexible: almost any task can be implemented in many ways, starting with the design of the application itself (there is a downside here, too: some approaches can cause lots of problems, from having to maintain abysmal code to performance degradation and memory leaks).

Lumen 8.3.1

A lightweight version of Laravel, intended mainly for implementing APIs. It keeps Laravel's most important components and trims what isn't critical (for example, facades). It performs better than Laravel but doesn't handle every task equally well. As usual, each task has its tool of choice: for some, Laravel does better; for others, Lumen; and for some, PHP is not an option at all.

Web servers:

PHP-FPM

The standard FastCGI process manager for PHP. Each request requires framework initialization.

NGINX Unit

An application web server developed by the nginx team. Each request requires framework initialization.

Laravel Octane

Strictly speaking, this is not a web server but an application management package from the Laravel team. Under the hood, it runs on either the Swoole or the RoadRunner server. The framework is initialized once per worker, then kept in memory and not re-initialized on subsequent requests.
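To illustrate the difference between the two models, here is a toy Python sketch. It is not PHP and not how any of these servers is actually implemented: `boot_framework` is a hypothetical stand-in for the framework bootstrap (config, service providers, routes), and the sketch simply counts how often it runs. In the per-request model described for PHP-FPM and NGINX Unit it runs on every request; in the Octane-style model it runs once per worker and the booted application is reused.

```python
class BootCounter:
    """Counts how many times the (hypothetical) framework bootstrap runs."""

    def __init__(self) -> None:
        self.boots = 0

    def boot_framework(self) -> dict:
        # Stand-in for the expensive part: loading config, providers, routes...
        self.boots += 1
        return {"config": {"app_env": "prod"}}


def handle(app: dict, request_id: int) -> dict:
    # The endpoint itself is cheap: it only reads from the already booted app.
    return {"app_env": app["config"]["app_env"], "request": request_id}


def per_request_model(counter: BootCounter, requests: int) -> None:
    """PHP-FPM / NGINX Unit style: every request bootstraps the framework again."""
    for i in range(requests):
        app = counter.boot_framework()
        handle(app, i)


def persistent_worker_model(counter: BootCounter, requests: int, workers: int) -> None:
    """Octane style: each worker boots once, then serves requests from memory."""
    apps = [counter.boot_framework() for _ in range(workers)]
    for i in range(requests):
        handle(apps[i % workers], i)  # round-robin over the booted workers


if __name__ == "__main__":
    fpm_like, octane_like = BootCounter(), BootCounter()
    per_request_model(fpm_like, requests=1000)
    persistent_worker_model(octane_like, requests=1000, workers=16)
    print(fpm_like.boots, octane_like.boots)  # 1000 16
```

The sketch ignores worker recycling: all three setups in this article restart a worker after 1000 requests (`pm.max_requests`, Unit's `limits.requests`, Octane's `--max-requests`), which in the persistent model adds one boot per worker per 1000 requests.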

PHP version in all builds: 8.0.12

Testing tools:

Yandex.Tank for load testing. The most recent Docker image available is used.

Telegraf (included in the Yandex.Tank toolset) to collect resource usage statistics for applications.

Overload (also from the Yandex.Tank kit) for plotting.

Configuration

Applications

All applications use a standard configuration with error logging disabled. Caches are built on first access, and only for Laravel/Lumen components (the router, etc.); controllers are not cached. The application has a single endpoint that returns a JSON response containing a random number, a configuration value, a static string, and the request headers.

Example service response:

{
  "app_env": "prod",
  "type": "unit-lumen",
  "number": 1527706674,
  "headers": {
    "accept-language": [
      "ru-RU,ru;q=0.9,en-US;q=0.8,en;q=0.7"
    ],
    "accept-encoding": [
      "gzip, deflate"
    ],
    "accept": [
      "text\/html,application\/xhtml+xml,application\/xml;q=0.9,image\/avif,image\/webp,image\/apng,*\/*;q=0.8,application\/signed-exchange;v=b3;q=0.9"
    ],
    "user-agent": [
      "Mozilla\/5.0 (X11; Linux x86_64) AppleWebKit\/537.36 (KHTML, like Gecko) Chrome\/93.0.4577.99 Safari\/537.36"
    ],
    "upgrade-insecure-requests": [
      "1"
    ],
    "connection": [
      "keep-alive"
    ],
    "host": [
      "10.100.9.3"
    ],
    "content-length": [
      ""
    ],
    "content-type": [
      ""
    ]
  }
}

All applications run in Docker containers. All applications use a custom php.ini with the following settings:

upload_max_filesize = 50M
post_max_size = 50M
opcache.enable=1
opcache.interned_strings_buffer=64
opcache.max_accelerated_files=100000
opcache.memory_consumption=128
opcache.save_comments=1
opcache.revalidate_freq=0
opcache.validate_timestamps=0
opcache.max_wasted_percentage=10
apc.enable_cli=1
memory_limit=256M

Individual changes, if any, are noted for each application.

To test PHP-FPM, an additional nginx container is used, since PHP-FPM speaks FastCGI rather than HTTP. I used the official nginx:1.19.6-alpine image.

Nginx Configuration

nginx.conf

user nginx;
worker_processes auto;
error_log /var/log/nginx/error.log warn;
pid /var/run/nginx.pid;

events {
    worker_connections 2048;
}

http {
    include /etc/nginx/mime.types;
    default_type application/octet-stream;

    log_format main '$remote_addr - $remote_user [$time_local] "$request" '
                    '$status $body_bytes_sent "$http_referer" '
                    '"$http_user_agent" "$http_x_forwarded_for"';

    access_log /var/log/nginx/access.log main;

    sendfile on;
    tcp_nopush on;
    resolver_timeout 10s;
    server_tokens off;
    keepalive_timeout 3;
    reset_timedout_connection on;
    client_body_timeout 2;
    send_timeout 1;
    server_names_hash_bucket_size 128;
    client_max_body_size 32m;
    proxy_buffers 4 512k;
    proxy_buffer_size 256k;
    proxy_busy_buffers_size 512k;

    gzip on;
    gzip_comp_level 9;
    gzip_disable "msie6";
    gzip_types text/plain text/css application/json application/x-javascript text/xml application/xml application/xml+rss text/javascript application/javascript image/svg+xml;

    include /etc/nginx/conf.d/*.conf;
}

default.conf

server {
    listen 80;
    server_name localhost;

    error_page 500 502 503 504 /50x.html;
    location = /50x.html {
        root /usr/share/nginx/html;
    }

    location / {
        try_files $uri /index.php$is_args$args;
    }

    location ~ ^/index\.php(/|$) {
        fastcgi_pass 10.100.9.100:9000;
        fastcgi_split_path_info ^(.+\.php)(/.*)$;
        include fastcgi_params;
        fastcgi_param SCRIPT_FILENAME /var/www/public/$fastcgi_script_name;
    }
}

Guinea pigs a.k.a. containers I've built

1. nginx + PHP-FPM + Laravel

Configuration

PHP-FPM: the official php:8.0.12-fpm image is used.

Changes in php.ini and in the PHP-FPM pool configuration:

memory_limit=512M
pm = static
pm.max_children = 100
pm.max_requests = 1000

Worth mentioning right away: I initially planned to give each web server 16 workers, matching the number of logical cores allocated to the application container (more on this below), but PHP-FPM ran into problems during testing, so I had to give it more workers.

2. nginx + PHP-FPM + Lumen

The configuration is completely identical to nginx + PHP-FPM + Laravel

3. NGINX Unit + Laravel

As it happens, I had already built an NGINX Unit container for PHP 8 a little earlier, since there were no ready-made images that met my production needs. Without much hesitation, I used it as the basis for the test, with slight modifications. The build is based on ubuntu:hirsute; admittedly, I'm not on very friendly terms with Alpine, and there was no time to deal with it then. I needed a build here and now, and I had no plans for it to reach production in its original form. Although, given the test results, that may now happen.

Configuration

NGINX Unit config:

{
  "listeners": {
    "*:80": {
      "pass": "routes"
    }
  },
  "routes": [
    {
      "match": {
        "uri": [
          "*.manifest",
          "*.appcache",
          "*.html",
          "*.json",
          "*.rss",
          "*.atom",
          "*.jpg",
          "*.jpeg",
          "*.gif",
          "*.png",
          "*.ico",
          "*.cur",
          "*.gz",
          "*.svg",
          "*.svgz",
          "*.mp4",
          "*.ogg",
          "*.ogv",
          "*.webm",
          "*.htc",
          "*.css",
          "*.js",
          "*.ttf",
          "*.ttc",
          "*.otf",
          "*.eot",
          "*.woff",
          "*.woff2",
          "/robots.txt"
        ]
      },
      "action": {
        "share": "/var/www/public"
      }
    },
    {
      "action": {
        "pass": "applications/php"
      }
    }
  ],
  "applications": {
    "php": {
      "type": "php 8.0",
      "limits": {
        "requests": 1000,
        "timeout": 60
      },
      "processes": {
        "max": 16,
        "spare": 16,
        "idle_timeout": 30
      },
      "user": "www-data",
      "group": "www-data",
      "working_directory": "/var/www/",
      "root": "/var/www/public",
      "script": "index.php",
      "index": "index.php"
    }
  },
  "access_log": "/dev/stdout"
}

4. NGINX Unit + Lumen

The configuration is completely identical to NGINX Unit + Laravel

5. Laravel Octane (Swoole) + Laravel

I was prompted to add Octane to the test suite by a tweet from Taylor Otwell (the creator of Laravel). I was puzzled by his claim that Octane is faster than Lumen, and by the slight speed increase he mentioned. So I decided to put together a container with Swoole-based Laravel Octane for the tests.

Besides Swoole, Laravel Octane supports RoadRunner, and initially I planned to test it as well. But strangely enough, I couldn't get RoadRunner to work properly inside Docker even with workarounds (to be precise, I did get it running, but only by starting it manually; it wouldn't survive auto-deploy), so I dropped it. Swoole was enough for my purposes anyway. In addition, Laravel Octane + Swoole provides extra tools that may be useful for a number of my tasks.

Configuration

.env

OCTANE_SERVER=swoole

All other settings are passed directly to the server startup command:

php artisan octane:start --port=80 --workers=16 --max-requests=1000 --host=host.docker.internal

Yandex.Tank

For testing, I used two different load profiles reflecting two situations: "standard" for typical everyday load, and "stress" for finding the limits of system performance.

"Standard" load profile

The goal of this test is to see how well each application copes with the regular load I deal with all the time.

phantom:
  address: 10.100.9.101
  uris:
    - /
  load_profile:
    load_type: rps
    schedule: step(5, 100, 5, 10s) const(100, 2m30s)
  timeout: 2s
console:
  enabled: true
telegraf:
  config: 'monitoring-nginx-unit-laravel.xml'
  enabled: true
  kill_old: false
  package: yandextank.plugins.Telegraf
  ssh_timeout: 30s
overload:
  enabled: true
  package: yandextank.plugins.DataUploader
  token_file: 'overload_token.txt'

"Stress" load profile

The idea of this test is to find out what each web server is capable of and how many users it can reliably sustain without failures.

Load profile

phantom:
  address: 10.100.9.101
  uris:
    - /
  load_profile:
    load_type: rps # schedule load by defining requests per second
    schedule: line(1, 1000, 10m)
  timeout: 2s
console:
  enabled: true
telegraf:
  config: 'monitoring-nginx-unit-laravel.xml'
  enabled: true
  kill_old: false
  package: yandextank.plugins.Telegraf
  ssh_timeout: 30s
overload:
  enabled: true
  package: yandextank.plugins.DataUploader
  token_file: 'overload_token.txt'

The 2-second timeout was not chosen at random. First, in API applications, an endpoint that takes too long to respond is usually fatal to the application's performance. Within a microservice architecture I regularly use an API gateway and always set strict request timeouts: from 1 to 3 seconds depending on the task, but never more; if an endpoint implements genuinely heavy logic, I always move it into jobs. Second, when testing PHP-FPM with the default timeout of 11 seconds, Yandex.Tank ran out of load capacity because it waited too long for responses (more on this later).
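The schedule in the "standard" profile is easier to read once expanded. Below is a minimal Python sketch that models only the intended request rate, assuming Yandex.Tank's documented semantics: `step(a, b, step, dur)` goes from a to b rps (inclusive) in increments of `step`, holding each level for `dur`, and `const(rps, dur)` holds a fixed rate. Durations are in seconds; the helper names mirror the schedule syntax but are otherwise made up, and this is not how the tank itself generates load.

```python
from __future__ import annotations


def step(start: int, end: int, inc: int, dur_s: int) -> list[tuple[int, int]]:
    """(rps, seconds) segments for a stepped ramp from start to end, inclusive."""
    if inc <= 0 or dur_s <= 0:
        raise ValueError("inc and dur_s must be positive")
    return [(rps, dur_s) for rps in range(start, end + 1, inc)]


def const(rps: int, dur_s: int) -> list[tuple[int, int]]:
    """A single segment holding a constant rate."""
    return [(rps, dur_s)]


def summarize(segments: list[tuple[int, int]]) -> tuple[int, int]:
    """Total duration (s) and total number of requests for a list of segments."""
    duration = sum(sec for _, sec in segments)
    requests = sum(rps * sec for rps, sec in segments)
    return duration, requests


# step(5, 100, 5, 10s) const(100, 2m30s)
standard = step(5, 100, 5, 10) + const(100, 150)
print(summarize(standard))  # (350, 25500)
```

So, under these assumptions, the "standard" run takes 5 minutes 50 seconds: twenty 10-second steps up to 100 rps (10,500 requests), then 150 seconds at a flat 100 rps (15,000 requests).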

Telegraf

Everything is simple here; I created a minimal configuration to collect data on resource consumption.

Configuration

<Monitoring>
  <Host address="10.100.9.101" interval="1" username="root">
    <CPU/> <Kernel/> <Net/> <System/> <Memory/> <Disk/> <Netstat /> <Nstat/>
  </Host>
</Monitoring>

Load testing and results

For testing, each participant was strictly limited to a fixed number of logical CPU cores:

Container with the application: 16 cores

Nginx (for PHP-FPM tests): 2 cores

Yandex.Tank: 4 cores (2 cores weren't enough to run with PHP-FPM even with a reduced timeout)

The extremely important and valuable process of piping /dev/zero to /dev/null (no questions, please; it's my personal hack): strictly 1 fully loaded core

All OS processes: the remaining 1 core, effectively free, held in reserve
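The allocation above adds up exactly to the 24 logical CPUs of the Ryzen 9 5900X (12 cores with two SMT threads each). The article doesn't say how the limits were enforced; pinning containers with Docker's `--cpuset-cpus` option is one common way. As a hypothetical illustration, this Python sketch checks the total and derives non-overlapping CPU ranges in the format that option accepts (the entry names are made up):

```python
from __future__ import annotations

# Ryzen 9 5900X: 12 physical cores x 2 SMT threads = 24 logical CPUs.
TOTAL_LOGICAL_CPUS = 12 * 2

# Logical CPUs per participant, taken from the list above (names are made up).
ALLOCATION = {
    "app": 16,
    "nginx": 2,
    "yandex-tank": 4,
    "dev-zero-burner": 1,
    "os-reserve": 1,  # not a container: the CPU left to the host OS
}


def cpusets(allocation: dict[str, int], total: int) -> dict[str, str]:
    """Assign contiguous, non-overlapping CPU ranges such as "0-15" or "22"."""
    if any(count < 1 for count in allocation.values()):
        raise ValueError("every participant needs at least one CPU")
    if sum(allocation.values()) > total:
        raise ValueError("allocation exceeds the available logical CPUs")
    ranges: dict[str, str] = {}
    next_cpu = 0
    for name, count in allocation.items():  # dicts keep insertion order
        last = next_cpu + count - 1
        ranges[name] = str(next_cpu) if count == 1 else f"{next_cpu}-{last}"
        next_cpu = last + 1
    return ranges


if __name__ == "__main__":
    print(sum(ALLOCATION.values()))  # 24
    for name, cpus in cpusets(ALLOCATION, TOTAL_LOGICAL_CPUS).items():
        print(f"{name}: {cpus}")
```

For the application container, the resulting range would correspond to something like `docker run --cpuset-cpus=0-15 ...`.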

All applications were cold-started, i.e. the endpoint was not warmed up and caches were not built before the test started.

Below are the test graphs. If there is no error graph, there were no errors.

I did not include memory graphs to avoid bloating the post indefinitely. In synthetic tests, memory consumption is negligible and the differences between builds are minimal. For roughly the same reason, the containers had no memory limits, since there is always plenty of RAM.

"Standard" load profile

1. nginx + PHP-FPM + Laravel

2. nginx + PHP-FPM + Lumen

3. NGINX Unit + Laravel

4. NGINX Unit + Lumen

5. Octane (Swoole) + Laravel

Response time percentiles (ms)

| Build | 99% | 98% | 95% | 90% | 85% | 80% | 75% | 50% | HTTP OK % |
|---|---|---|---|---|---|---|---|---|---|
| nginx + php-fpm + laravel | 60 | 59 | 56 | 52 | 48 | 46 | 45 | 44 | 100 |
| nginx + php-fpm + lumen | 18 | 18 | 17 | 16 | 16 | 15 | 15 | 14 | 100 |
| nginx-unit + laravel | 7.6 | 7 | 6.5 | 5.8 | 5.3 | 5.2 | 5.2 | 4.059 | 100 |
| nginx-unit + lumen | 1.930 | 1.870 | 1.640 | 1.520 | 1.460 | 1.410 | 1.320 | 1.070 | 100 |
| octane (swoole) + laravel | 1.230 | 1.200 | 1.160 | 1.110 | 1.050 | 1.010 | 0.980 | 0.800 | 100 |

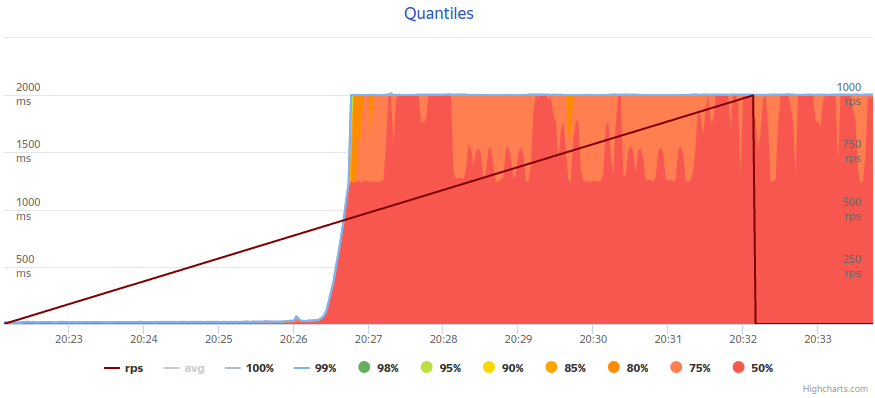

"Stress" load profile

1. nginx + PHP-FPM + Laravel

Testing details of this build

I had to test this build several times. The first time, PHP-FPM was allocated 16 workers and nginx had its default settings, so the whole setup collapsed into a black hole: at about 120 rps it started yelling about errors and about an insufficient number of workers (both at once). After that, I brought nginx to an adequate configuration and ran two tests with PHP-FPM, one with 100 workers and one with 250; I settled on 100, since there were not enough logical cores for 250. Because I tested this load profile first, this configuration was then used for all the other tests. I also found that at around 120 rps PHP-FPM abruptly stops coping with the load, requests start hanging, and Yandex.Tank, with its default request timeout of 11 seconds, starts collapsing because it simply doesn't have enough resources to keep that many requests in flight. So I allocated 4 cores to the tank (originally 2) and lowered the timeout to 2 seconds. The graph clearly shows the 2-second cutoff where PHP-FPM goes sideways. But more on the conclusions later.

2. nginx + PHP-FPM + Lumen

3. NGINX Unit + Laravel

4. NGINX Unit + Lumen

Error summary

During testing, the application itself returned one HTTP 500 error. Since logging was disabled, it is impossible to tell exactly what happened, and the error did not reproduce in later runs. However, given that only one request failed against 300,299 successful ones, I decided it could be safely ignored.
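The request count is consistent with the stress profile. Assuming `line(1, 1000, 10m)` ramps the rate linearly, the expected number of requests is the area under the ramp, i.e. the average rate times the duration: (1 + 1000) / 2 × 600 = 300,300, which matches 300,299 successes plus the one error. A small Python check of that arithmetic and the resulting error rate (`Fraction` keeps it exact):

```python
from fractions import Fraction


def line_total_requests(start_rps: int, end_rps: int, duration_s: int) -> Fraction:
    """Requests sent by a linear ramp: average rate times duration."""
    return Fraction(start_rps + end_rps, 2) * duration_s


total = line_total_requests(1, 1000, 10 * 60)  # line(1, 1000, 10m)
successful = 300_299
errors = total - successful
error_rate = errors / total

print(total, errors)               # 300300 1
print(f"{float(error_rate):.6%}")  # 0.000333%
```

That is roughly 3.3 failures per million requests.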

5. Octane (Swoole) + Laravel

Response time percentiles (ms)

An asterisk (*) in the table marks the PHP-FPM builds that could not withstand the load at all, along with the rps at which they began to collapse. Their percentiles are computed only for the part of the test below that cutoff. I decided to include them in the table anyway, even in this form.

The Laravel Octane build is listed twice: once with stats for the full run, including the part where the server could no longer withstand the load, and once with data only up to the point of collapse. As with PHP-FPM, the asterisk indicates the threshold rps.

| Build | 99% | 98% | 95% | 90% | 85% | 80% | 75% | 50% | HTTP OK % |
|---|---|---|---|---|---|---|---|---|---|
| * nginx + PHP-FPM + Laravel ~120 rps | 62 | 59 | 55 | 52 | 49 | 47 | 45 | 43 | - |
| * nginx + PHP-FPM + Lumen ~400 rps | 34 | 25 | 19 | 17 | 16 | 16 | 15 | 14 | - |
| NGINX Unit + Laravel | 6.6 | 6 | 5.5 | 5.2 | 4.96 | 4.7 | 4.5 | 3.79 | 100 |
| NGINX Unit + Lumen | 1.77 | 1.56 | 1.4 | 1.25 | 1.17 | 1.13 | 1.08 | 0.91 | 100 |
| Octane (Swoole) + Laravel | 18 | 8.3 | 4.85 | 3.88 | 3.45 | 3.1 | 2.85 | 1.87 | 82.807 |
| * Octane (Swoole) + Laravel ~600 rps | 3.23 | 3.01 | 2.85 | 2.55 | 2.2 | 2.029 | 1.92 | 0.78 | - |
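For the asterisked rows, the percentiles cover only the requests sent before the load reached the cutoff. A minimal Python sketch of that idea, assuming the nearest-rank percentile definition and per-request samples of (rps at send time, latency); Overload's own aggregation may well differ, and the data in the example is made up:

```python
from __future__ import annotations

import math


def percentile(values: list[float], p: float) -> float:
    """Nearest-rank percentile: smallest value with at least p% of samples at or below it."""
    if not values or not 0 < p <= 100:
        raise ValueError("need a non-empty list and 0 < p <= 100")
    ordered = sorted(values)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


def percentiles_below_cutoff(
    samples: list[tuple[float, float]], cutoff_rps: float, ps=(99, 95, 50)
) -> dict[float, float]:
    """samples are (rps at send time, latency in ms); keep only requests sent below the cutoff."""
    kept = [latency for rps, latency in samples if rps < cutoff_rps]
    return {p: percentile(kept, p) for p in ps}


if __name__ == "__main__":
    # Made-up data: a ramp from 1 to 200 rps where latency grows with load.
    samples = [(rps, 2 * rps) for rps in range(1, 201)]
    print(percentiles_below_cutoff(samples, cutoff_rps=120))  # {99: 236, 95: 228, 50: 120}
```

Dropping everything past the cutoff is what keeps these rows comparable to the others: including the hung requests would push every percentile to the 2-second timeout.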

Conclusions

I must admit the results quite surprised me. I expected PHP-FPM to be slower than NGINX Unit, of course, but not by this much: the difference in response time is roughly tenfold. I was also surprised that PHP-FPM was the only one to choke completely in the stress test. Perhaps this is because I'm not an expert in configuring nginx with PHP, but the other servers, running on the same configuration, passed the stress test, albeit with losses. All in all, I concluded that my switch to NGINX Unit a long time ago was for the better.
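A quick check of that tenfold figure against the "standard" profile table: the Python snippet below divides PHP-FPM's response times by NGINX Unit's at p99 and at the median. The values are copied from the table; nothing else is assumed.

```python
from __future__ import annotations

# (p99, p50) response times in ms, copied from the "standard" profile table.
STANDARD = {
    "nginx + php-fpm + laravel": (60, 44),
    "nginx-unit + laravel": (7.6, 4.059),
    "nginx + php-fpm + lumen": (18, 14),
    "nginx-unit + lumen": (1.930, 1.070),
}


def speedup(slow: str, fast: str) -> tuple[float, float]:
    """How many times lower `fast`'s latency is than `slow`'s, at p99 and at p50."""
    (slow_p99, slow_p50), (fast_p99, fast_p50) = STANDARD[slow], STANDARD[fast]
    return round(slow_p99 / fast_p99, 1), round(slow_p50 / fast_p50, 1)


print(speedup("nginx + php-fpm + laravel", "nginx-unit + laravel"))  # (7.9, 10.8)
print(speedup("nginx + php-fpm + lumen", "nginx-unit + lumen"))      # (9.3, 13.1)
```

At the median Unit comes out roughly 11 to 13 times faster, and at p99 roughly 8 to 9 times, which is consistent with a roughly tenfold difference.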

As for the performance comparison, I'll leave PHP-FPM out from here on. Of the rest, NGINX Unit is the only server that passed the stress test without losses, although I had high hopes for Octane.

Spikes in response time at the 100th percentile (the maximum) can be seen for both NGINX Unit and Octane + Swoole. In both cases they aren't catastrophic, and the cause may be that the testing was done in an environment that, to put it mildly, wasn't built for this task. Again, all the tests were purely synthetic; in real conditions, when the application also runs business logic, queries the database, and churns the cache, the picture will be different.

In principle, Octane should be faster in real conditions, because the application state and all connections are preserved between requests. In addition, Swoole has its own high-performance cache implementations and other features that help speed up heavy business logic. But there is a price: first, Octane needs more memory (I understand that RAM in data centers is cheap for large businesses, but it's worth keeping in mind); second, and more importantly, Octane is extremely unfriendly to bad code and simply doesn't forgive mistakes. The official website even has a section with examples of code that works on other servers but breaks the application under Octane.

On the other hand, NGINX Unit proved more stable under high load. Meanwhile, Unit paired with Lumen concedes almost nothing to Octane in synthetic tests, so my doubts about Taylor's claim were not unfounded. Lumen will live on and keep surprising us (I certainly did not expect it to be so much faster than Laravel).

My personal conclusion: NGINX Unit is the go-to choice for an extremely stable service that keeps going where others can't survive. And for it to run really well in an API context, it still makes sense to pair it with Lumen. However, if you need a superfast service that runs complex business logic on the fly, the choice is Octane, if resources allow (both in terms of memory and in the sense that, when high loads are expected, load balancing and horizontal scaling must be taken care of well in advance). Here Octane is the proverbial "weapon of mass destruction" that should not be used indiscriminately, and on this Taylor is absolutely right. Each task has its own tool.

That's it; I hope this will help you choose a tool that solves your problems.

Thanks to @aliensstolemycow and the rest of the NGINX Unit team for the translation.