Introduction

First thing first, I should note that modern ML technologies still rely on human evaluation. Humans transcribe audio into text to adjust voice recognition algorithms; humans assess the relevance of reference documents to search queries, so that the search ranking formulas will be guided by them; humans categorize images so that, having trained on these examples, the neural network will be able to do it further without people and better than people.

Toloka can do all of the specified things. So, it’s time to tell you more about the platform.

The tasks I’ve talked about above are usually solved by Yandex with the help of trained specialists - assessors. The assessors look at how well the search results match the query, find spam among the found web pages, classify it, and solve similar problems for other services.

The irony is that the newer technologies are developed, the greater the need for human evaluation grows. It's not enough to simply determine the relevance of a page to a search query. It's important to understand whether the page is littered with malicious ads? Does the page contain adult content? And if it does, does the user's query imply that this is the content they were looking for? In order to automatically account for all of these factors, you need to gather enough examples to train the search engine. And since everything on the Internet is constantly changing, training sets need to be constantly updated and kept up to date. In general, for search engine tasks alone, the need for human evaluations has been measured in the millions per month, and that number is only growing every year.

And not only that, finding more assessors in different countries is a hard process. But, for some tasks, you don’t even need professional training - that’s when we rely on crowdsourcing.

Toloka

There is nothing complicated about the fundamental logic of any crowdsourcing platform, and Toloka in particular. On the one hand, Toloka works with users, distributes tasks, makes payments, and on the other hand, Toloka helps clients get results with minimal effort.

Some companies need users to classify pictures, some need users to choose a specified object in the picture. Potentially, tasks could be anything in terms of input data, interface, and expected answers. For example, you can check this post (in Russian), where the author asked tolokers (users of Toloka) to take pictures of their water meters to make a dataset for a future neural network that inputs the measurements automatically.

How can everyone earn with Toloka?

Actually, it’s very simple! If you need people to evaluate data even with extra training, then Toloka has a lot of guides and ready-to-go solutions for setting up your tasks. You can choose every aspect of the task: price, quality of evaluation, ratings of the users, etc. But if you want to earn money with Toloka - here’s a quick guide for you. The best thing is, that you get paid almost instantly and can easily withdraw your earned money.

How do you ensure the quality of evaluation?

This question is a major concern for any crowdsourcing platform. In Toloka, there are diligent and attentive people, and there are lazy, unscrupulous people who can write scripts. The main task is to reward honest people and ban script users. To do this, Toloka has been trained to analyze the behavior of users. Clients now have the ability to automatically identify and limit those tolokers, who, for example, respond too quickly, or whose answers are inconsistent with those of others. Also, Toloka has the ability to use control tasks and mandatory acceptance before payment. And the acceptance can be simplified, too. Give tasks to some users, and the evaluation of their results to others.

The Improvements

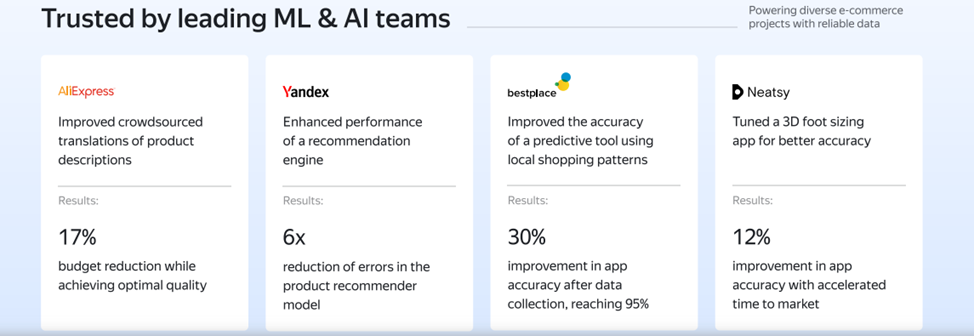

So, it’s time for me to tell you about the improvements to Yandex.Search and how Toloka has already helped a lot of companies to make their product better.

With the help of Toloka, Yandex created a new search algorithm called YATI (Yet Another Transformer with Improvements). Unlike previous algorithms, YATI is based on neural networks that have undergone serious training on real keyword phrases of users and the pages they open. In addition to self-training, the results are checked and supplemented by assessors - specialists who conduct expert evaluation of the quality of text ranking. The algorithm learns to divide the text document into zones that differ in their importance in the context of the user's entered query. Text fragments from the most important areas are also selected for ranking, while areas of least importance are ignored and have no influence on the positioning of the site in search results. If the page contains a small amount of text, then it will all affect the ranking of the document. According to the statements of Yandex representatives, YATI initially "pays attention" to the titles of the document - they must be relevant to certain user queries. Only after this is confirmed, the entire document begins to participate in the ranking.

What is a YATI Transformer?

Transformers are complex and large neural networks, whose work is aimed at solving problems of text processing and generation. This is a new round of neural network development, opening up huge opportunities for various spheres, in particular for the construction of search engine ranking algorithms. Now a search algorithm can segment text elements into parts based on various features, and process them separately. The element is a word, punctuation marks and other character sequences. As noted above, YATI has an attention mechanism, thanks to which fragments of the input text are separated and processed separately. For example, this will make it possible to understand which part of the text is really important for users and include it in the ranking factors, while excluding other, unimportant parts. This will allow you to significantly purify search results from documents with low-quality content.

Sequence of learning algorithm

Based on the tasks and features of the ranking, the network learns the rules of the language according to the principle of the masked language modeling. The input data is the user query and the title of the document. The purpose of the approach is to teach the algorithm to predict the probability of a document from the search results for a given keyword.

The next step is additional training of the algorithm with the help of assessors. First, the data is studied by users of the service Toloka. As you can understand, it is a low-quality assessment of the relevance of the query to the document. To improve these indicators, after tolokers, the data is rechecked by specialists of Yandex itself. As a result of these actions, the data get certain relevance scores.

Afterwards, the resulting analytics and the data themselves are sent for processing, in order to combine them into segments based on certain characteristics. Thanks to the collection of final metrics, the algorithm evaluates the level of relevance of the document and user query.

Results

According to Yandex, the quality of ranking has increased significantly since the algorithm was introduced and YATI became the most significant innovation of the last decade. The algorithm has learned to correctly search not only for short key phrases, but also for entire text fragments. It takes into account not only the order and shape of words, but also the context of the entered query, which is compared with the document under study. Such improvements will allow the algorithm to "understand" the naturalness of the language, to find the semantic links between words, etc. Therefore, if we look from the point of view of users of the Yandex search engine, YATI will greatly improve the quality of results. Now sites will be ranked with the maximum overlap in meaning. Now, SEO-optimizers will have to take into account all the subtleties of the algorithm, otherwise a big share of search traffic will be missed.

Summary

Toloka is a crowdsourcing platform that is very simple to use, has integrated python libraries and can enhance your AI with a great variety of tasks to create a perfect dataset. Toloka is multilingual and can gather data in a blink of an eye.

Thank you for reading and commenting on my post! I will be answering all related questions, so go ahead!