There are many articles on the Kalman filter, but it is hard to find one that explains where all the filtering formulas come from. I think that without understanding this, the whole subject becomes incomprehensible. In this article I will try to explain everything in a simple way.

The Kalman filter is a very powerful tool for filtering different kinds of data. The main idea behind it is that one should use information about the physical process. For example, if you are filtering data from a car's speedometer, the car's inertia gives you the right to treat a big jump in speed as a measurement error. The Kalman filter is also interesting because, in a certain sense, it is the best filter. We will discuss precisely what that means. At the end of the article I will show how the formulas can be simplified.

Preliminaries

First, let's recall some definitions and facts from probability theory.

Random variable

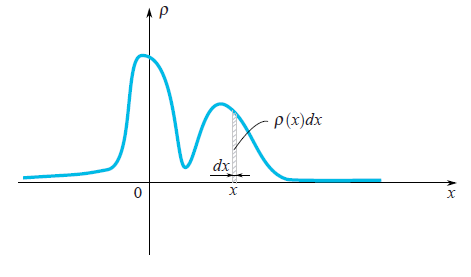

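When one says that a random variable $\xi$ is given, it means that it may take random values, and different values come with different probabilities. If the set of values is continuous, the random variable is characterized by its probability density function $\rho(x)$: the probability that the variable falls into a small neighbourhood $dx$ of the point $x$ is $\rho(x)\,dx$.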

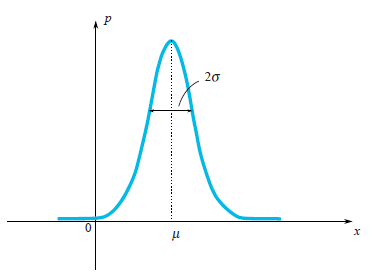

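Quite often in real life, random variables have a Gaussian distribution, whose probability density is

$$\rho(x) \sim e^{-\frac{(x-\mu)^2}{2\sigma^2}}.$$

We can see that the bell-shaped function $\rho(x)$ is centered at the point $\mu$ and that its characteristic width is about $\sigma$.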

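Since we are talking about the Gaussian distribution, it would be a sin not to mention where it comes from. Just as the numbers $e$ and $\pi$ turn up in the most unexpected places in mathematics, the Gaussian distribution has deep roots in probability theory. The following statement (the central limit theorem) partly explains why it appears in so many processes.

Let a random variable $\xi$ have an arbitrary distribution (there are some restrictions, but they are mild). Perform $n$ experiments and compute the sum $\xi_1 + \dots + \xi_n$ of the values obtained. This sum is itself a random variable with its own distribution law. It turns out that for sufficiently large $n$ the distribution of this sum tends to a Gaussian distribution, and the characteristic width of the bell grows like $\sqrt{n}$.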
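As a small numerical illustration of the statement about sums of random values, the following MATLAB sketch sums $n$ independent values and measures the width of the resulting distribution. The choice of a uniform distribution on $[0, 1]$, the number of trials, and all variable names are assumptions made for this illustration only.

```matlab
% Illustration of the central limit theorem (a sketch, not a proof).
% Assumption: each summed value is uniform on [0,1], so its variance is 1/12.
nTrials = 20000;            % number of repeated experiments (assumed)
nList   = [1 4 16 64];      % how many values are summed in one experiment
widths  = zeros(size(nList));
for i = 1:numel(nList)
    n = nList(i);
    s = sum(rand(n, nTrials), 1);   % one sum per experiment (row vector)
    widths(i) = std(s);             % measured characteristic width
end
expected = sqrt(nList / 12);        % theory: the width grows like sqrt(n)
disp([nList; widths; expected]);    % rows: n, measured width, expected width
```

A histogram of `s` for the largest `n` should look bell-shaped, even though a single uniform value does not.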

Mean Value

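By definition, the mean value of a random variable is the value we get in the limit if we perform more and more experiments and compute the mean of the values obtained. The mean value is denoted in different ways: mathematicians write $E\xi$ (expectation), while physicists write $\overline{\xi}$ or $\langle \xi \rangle$. We will use the mathematicians' notation.

For instance, the mean value of the Gaussian distribution $\rho(x) \sim e^{-\frac{(x-\mu)^2}{2\sigma^2}}$ is equal to $\mu$.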

Variance

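For the Gaussian distribution, we clearly see that the random variable tends to fall within a certain region around its mean value $\mu$.

In the picture, one can see that the characteristic width of the region where values mostly fall is $\sigma$. To estimate this width for an arbitrary random variable, one option is the mean absolute deviation from the mean, $E|\xi - E\xi|$, but absolute values are awkward to handle in formulas.

A simpler approach (simpler from the computational point of view) is to calculate

$$E(\xi - E\xi)^2.$$

This value is called the variance and is denoted by $\sigma_\xi^2$. Its square root $\sigma_\xi$ is called the standard deviation and is a good estimate of the characteristic deviation of the random variable.

For instance, one can compute that for the Gaussian distribution $\rho(x) \sim e^{-\frac{(x-\mu)^2}{2\sigma^2}}$ the variance is equal to $\sigma^2$, so the standard deviation is $\sigma$.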
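The definitions of the mean and the variance can be tried out on samples. The sketch below draws Gaussian samples and estimates both quantities directly from their definitions. The values of `mu`, `sigma`, and `N` are assumptions chosen for the illustration.

```matlab
% Estimate the mean and the variance from samples (a sketch).
% Assumed values: mu = 3, sigma = 2, N = 1e6 samples.
mu = 3; sigma = 2; N = 1e6;
xi = mu + sigma * randn(N, 1);          % Gaussian samples: mean mu, std sigma
meanEst = sum(xi) / N;                  % approximates E(xi)
varEst  = sum((xi - meanEst).^2) / N;   % approximates E(xi - E(xi))^2
stdEst  = sqrt(varEst);                 % standard deviation
fprintf('mean %.3f, variance %.3f, std %.3f\n', meanEst, varEst, stdEst);
```

With this many samples the printed values should be close to `mu`, `sigma^2`, and `sigma`, which matches the statement that the variance of the Gaussian distribution equals $\sigma^2$.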

Independent random variables

Random variables may or may not depend on each other. Imagine that you throw a needle on the floor and measure the coordinates of both its ends. These two coordinates are random variables, but they depend on each other, since the distance between them must always equal the length of the needle. Random variables are independent if the outcome of the first does not depend on the outcome of the second. For two independent variables $\xi_1$ and $\xi_2$, the mean of their product equals the product of their means:

$$E(\xi_1 \cdot \xi_2) = E\xi_1 \cdot E\xi_2.$$

Proof

For instance, having blue eyes and graduating from school with high honors are independent random variables. Say that a fraction $p_1$ of people have blue eyes and a fraction $p_2$ of people graduate with high honors. Then a fraction $p_1 \cdot p_2$ of people have both blue eyes and high honors. This example helps us to understand the following. For two independent random variables $\xi_1$ and $\xi_2$ given by their probability densities $\rho_1(x)$ and $\rho_2(y)$, the joint probability density $\rho(x, y)$ (the first variable falls at $x$ and the second at $y$) can be found by the formula

$$\rho(x, y) = \rho_1(x) \cdot \rho_2(y).$$

It means that

$$E(\xi_1 \cdot \xi_2) = \iint x y \, \rho(x, y) \, dx \, dy = \iint x y \, \rho_1(x) \rho_2(y) \, dx \, dy = \int x \rho_1(x) \, dx \int y \rho_2(y) \, dy = E\xi_1 \cdot E\xi_2.$$

As you see, the proof is done for random variables which have a continuous spectrum of values and are given by their probability density functions. The proof is similar in the general case.
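The product rule for independent variables can also be checked numerically. The following sketch compares the mean of a product with the product of the means, first for an independent pair and then for a fully dependent pair. The two distributions are assumptions picked for the illustration.

```matlab
% Numerical check of E(xi1*xi2) = E(xi1)*E(xi2) for independent variables.
% Assumed distributions: xi1 is Gaussian with mean 2 and variance 1,
% xi2 is uniform on [0,5].
N   = 1e6;
xi1 = 2 + randn(N, 1);
xi2 = 5 * rand(N, 1);                 % generated independently of xi1
lhsIndep = mean(xi1 .* xi2);          % estimate of E(xi1*xi2)
rhsIndep = mean(xi1) * mean(xi2);     % estimate of E(xi1)*E(xi2)

% A dependent pair for contrast: xi3 is fully determined by xi1.
xi3 = xi1;
lhsDep = mean(xi1 .* xi3);            % close to 5 = variance + mean^2
rhsDep = mean(xi1) * mean(xi3);       % close to 4 = mean^2
fprintf('independent: %.3f vs %.3f\n', lhsIndep, rhsIndep);
fprintf('dependent:   %.3f vs %.3f\n', lhsDep, rhsDep);
```

For the independent pair the two numbers agree up to sampling noise. For the dependent pair they differ by the variance of `xi1`, so the product rule fails there.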

Kalman filter

Problem statement

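Let us denote by $x_k$ the value at step $k$ that we intend to measure and then filter.

Let us start with a simple example, which will lead us to the formulation of the general problem. Imagine that you have a radio-controlled toy car which can move only forward and backward. Knowing its mass, shape, and other parameters of the system, we have computed how the controlling joystick acts on the car's velocity $v_k$.

Then the coordinate of the car would follow the formula

$$x_{k+1} = x_k + v_k \, dt.$$

In real life we can't have a precise formula for the coordinate, since small disturbances act on the car (wind, bumps, stones on the road), so the real speed of the car will differ from the calculated one. So we add a random variable $\xi_k$ to the right-hand side of the last equation:

$$x_{k+1} = x_k + v_k \, dt + \xi_k.$$

We also have a GPS sensor on the car which tries to measure the coordinate $x_k$ of the car. Of course there is an error in this measurement, which is a random variable $\eta_k$, so the sensor gives us inaccurate data:

$$z_k = x_k + \eta_k.$$

Our aim is to find a good estimate of the true coordinate $x_k$, knowing only the inaccurate sensor data $z_k$. We will denote this estimate by $x^{opt}_k$.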

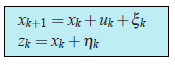

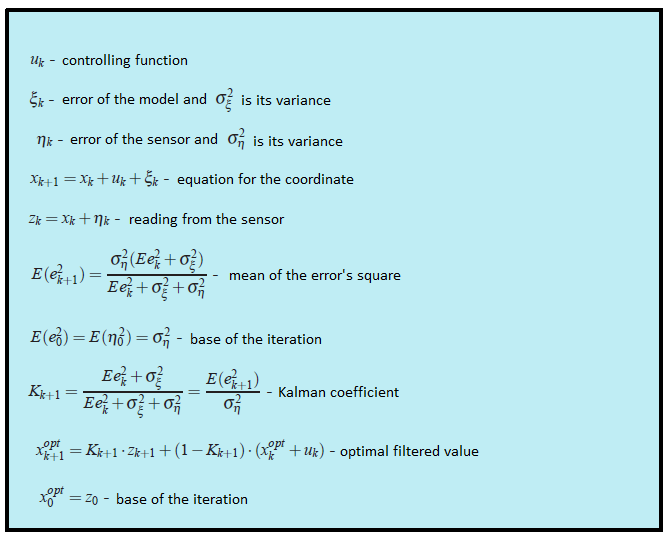

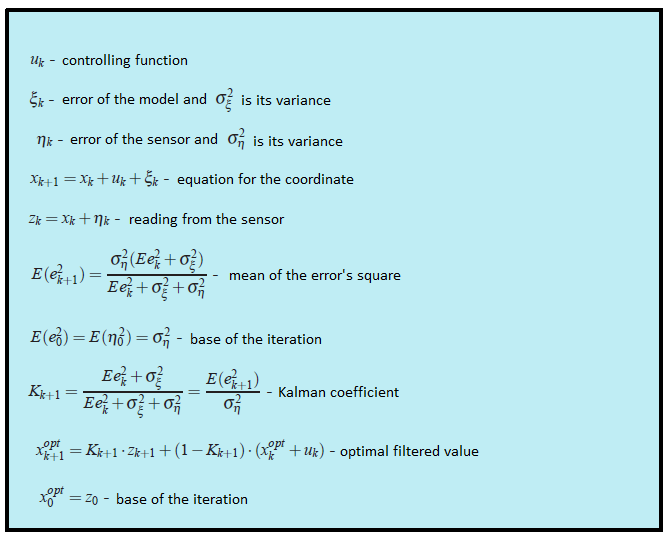

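In general, the coordinate $x_k$ may stand for any quantity (temperature, humidity, and so on), and we denote the control term by $u_k$ (in the car example, $u_k = v_k \, dt$). The equations for the coordinate and for the sensor measurements are then:

$$x_{k+1} = x_k + u_k + \xi_k, \qquad z_k = x_k + \eta_k. \tag{1}$$

Let us discuss what we know in these equations.

- $u_k$ is a known value which controls the evolution of the system. We know it from the model of the system.
- The random variable $\xi_k$ represents the error in the model of the system and the random variable $\eta_k$ is the sensor's error. Their distribution laws don't depend on time (on the iteration index $k$).
- The means of the errors are equal to zero: $E\eta_k = E\xi_k = 0$.
- We might not know the distribution laws of the random variables, but we do know their variances $\sigma_\xi^2$ and $\sigma_\eta^2$. Note that the variances don't depend on time (on $k$), since the corresponding distribution laws don't either.
- We suppose that all random errors are independent of each other: the error at time $k$ doesn't depend on the error at any other time $k'$.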

Note that a filtering problem is not a smoothing problem. Our aim is not to smooth the sensor's data; we just want to get the value which is as close as possible to the real coordinate $x_k$.
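To make the problem statement concrete, here is a minimal simulation sketch of the model: a true coordinate driven by a known control term plus model noise, and a sensor that adds its own noise. The constant control term, the noise levels, the Gaussian noise, and the variable names are assumptions for the illustration. The model itself is the one used in the MATLAB example later in this article.

```matlab
% Simulate x(k+1) = x(k) + u(k) + xi(k) and z(k) = x(k) + eta(k).
% Assumed values: constant control term 0.5, sigmaXi = 1, sigmaEta = 5,
% Gaussian errors (the theory only needs zero mean and known variances).
N = 1000;
u = 0.5 * ones(1, N);         % known control term
sigmaXi  = 1;                 % standard deviation of the model error
sigmaEta = 5;                 % standard deviation of the sensor error
x = zeros(1, N);              % true coordinate (unknown to the filter)
z = zeros(1, N);              % sensor readings (the only data we observe)
z(1) = x(1) + sigmaEta * randn();
for k = 1:(N-1)
    x(k+1) = x(k) + u(k) + sigmaXi * randn();
    z(k+1) = x(k+1) + sigmaEta * randn();
end
sensorError = z - x;
fprintf('sensor error: mean %.2f, std %.2f\n', mean(sensorError), std(sensorError));
```

The printed mean should be near zero and the standard deviation near `sigmaEta`, which are exactly the two facts the filter is allowed to know about the sensor.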

Kalman algorithm

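We will use induction. Imagine that at step $k$ we have already found the filtered value $x^{opt}_k$, which is a good estimate of the real coordinate $x_k$. Recall that we know the equation which governs the real coordinate:

$$x_{k+1} = x_k + u_k + \xi_k.$$

Therefore, before getting the sensor's value, we can state that it will show a value close to $x^{opt}_k + u_k$. Unfortunately, so far we can't say anything more precise. But at step $k+1$ we will also have an imprecise reading $z_{k+1}$ from the sensor.

The idea of Kalman is the following. To get the best estimate of the real coordinate $x_{k+1}$, we should take a golden mean between the imprecise sensor reading $z_{k+1}$ and our prediction $x^{opt}_k + u_k$ of what we expected to see. We give a weight $K$ to the sensor's value and a weight $(1-K)$ to the predicted value:

$$x^{opt}_{k+1} = K \cdot z_{k+1} + (1-K) \cdot (x^{opt}_k + u_k).$$

The coefficient $K$ is called the Kalman coefficient. It depends on the iteration index, so strictly speaking we should write $K_{k+1}$, but to keep the formulas readable we omit the index.

We should choose the Kalman coefficient in such a way that the estimated coordinate $x^{opt}_{k+1}$ is as close as possible to the real coordinate $x_{k+1}$.

For instance, if we know that our sensor is very precise, then we trust its reading and give it a big weight ($K$ close to one). If, on the contrary, the sensor is not precise at all, then we rely on the theoretically predicted value $x^{opt}_k + u_k$.

In the general situation, we should minimize the error of our estimate:

$$e_{k+1} = x_{k+1} - x^{opt}_{k+1}.$$

We use equations (1) to rewrite the equation for the error:

$$e_{k+1} = (1-K)(e_k + \xi_k) - K \eta_{k+1}.$$

Proof

$$e_{k+1} = x_{k+1} - K z_{k+1} - (1-K)(x^{opt}_k + u_k) = x_{k+1} - K(x_{k+1} + \eta_{k+1}) - (1-K)(x^{opt}_k + u_k)$$

$$= (1-K)(x_{k+1} - x^{opt}_k - u_k) - K\eta_{k+1} = (1-K)(x_k + u_k + \xi_k - x^{opt}_k - u_k) - K\eta_{k+1} = (1-K)(e_k + \xi_k) - K\eta_{k+1}.$$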

Now it is time to discuss what the expression "to minimize the error" means. We know that the error is a random variable, so each time it takes a different value. Actually, there is no unique answer to this question. The situation was similar with the variance of a random variable, when we were trying to estimate the characteristic width of its probability density function. So we choose a simple criterion: we minimize the mean of the squared error,

$$E(e^2_{k+1}) \to \min.$$

Let us rewrite the last expression:

$$E(e^2_{k+1}) = (1-K)^2 (E e_k^2 + \sigma_\xi^2) + K^2 \sigma_\eta^2.$$

Key to proof

From the fact that all random variables in the equation for $e_{k+1}$ are independent of each other and that the mean values $E\eta_{k+1} = E\xi_k = 0$, it follows that all cross terms in $E(e^2_{k+1})$ become zero:

$$E(\xi_k \eta_{k+1}) = E(e_k \xi_k) = E(e_k \eta_{k+1}) = 0.$$

Indeed, for instance, $E(e_k \xi_k) = E(e_k) E(\xi_k) = 0$.

Also note that the formulas for the variances look much simpler: $\sigma_\eta^2 = E\eta_k^2$ and $\sigma_\xi^2 = E\xi_k^2$ (since $E\eta_k = E\xi_k = 0$).

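The last expression takes its minimal value when its derivative with respect to $K$ is zero, that is, when:

$$K_{k+1} = \frac{E e_k^2 + \sigma_\xi^2}{E e_k^2 + \sigma_\xi^2 + \sigma_\eta^2}.$$

Here we write the Kalman coefficient with its subscript to emphasize that it depends on the iteration step. We substitute the Kalman coefficient $K_{k+1}$ that minimizes the mean square error into the expression for $E(e^2_{k+1})$:

$$E(e^2_{k+1}) = \frac{\sigma_\eta^2 (E e_k^2 + \sigma_\xi^2)}{E e_k^2 + \sigma_\xi^2 + \sigma_\eta^2}.$$

So we have solved our problem: we got an iterative formula for computing the Kalman coefficient.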
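One step of the recursion can be written out and checked by brute force. The sketch below computes the Kalman coefficient, the updated mean square error, and the new estimate for one step, and then confirms on a grid of candidate weights that no other weight gives a smaller mean square error. The update formulas are the ones used in the MATLAB example later in this article. All numeric inputs and variable names are hypothetical.

```matlab
% One step of the scalar filter, plus a brute-force check of the minimum.
% Hypothetical inputs chosen for the illustration:
sigmaXi = 1; sigmaEta = 2;   % model and sensor standard deviations
p    = sigmaEta^2;           % current mean square error E(e_k^2) (assumed)
xOpt = 0; u = 1; z = 2;      % previous estimate, control term, new reading

A        = p + sigmaXi^2;                    % error of the prediction xOpt + u
K        = A / (A + sigmaEta^2);             % Kalman coefficient K_{k+1}
pNext    = sigmaEta^2 * A / (A + sigmaEta^2);% updated E(e_{k+1}^2)
xOptNext = K * z + (1 - K) * (xOpt + u);     % updated estimate

% Brute force: mean square error as a function of the weight on a fine grid.
Kgrid = linspace(0, 1, 100001);
mse   = (1 - Kgrid).^2 * A + Kgrid.^2 * sigmaEta^2;
[mseMin, idx] = min(mse);
fprintf('K = %.5f (grid minimum at %.5f)\n', K, Kgrid(idx));
fprintf('E(e^2) = %.5f (grid minimum %.5f)\n', pNext, mseMin);
```

With these inputs the step gives `K = 5/9`, `pNext = 20/9`, and `xOptNext = 14/9`, and the grid minimum lands on the same weight.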

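All formulas in one place

$$K_{k+1} = \frac{E e_k^2 + \sigma_\xi^2}{E e_k^2 + \sigma_\xi^2 + \sigma_\eta^2}$$

$$E(e^2_{k+1}) = \frac{\sigma_\eta^2 (E e_k^2 + \sigma_\xi^2)}{E e_k^2 + \sigma_\xi^2 + \sigma_\eta^2}$$

$$x^{opt}_{k+1} = K_{k+1} \cdot z_{k+1} + (1 - K_{k+1}) \cdot (x^{opt}_k + u_k)$$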

Example

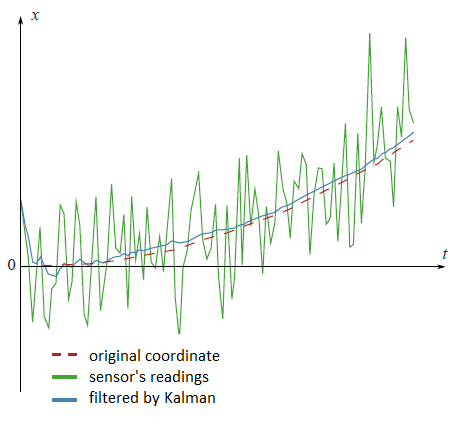

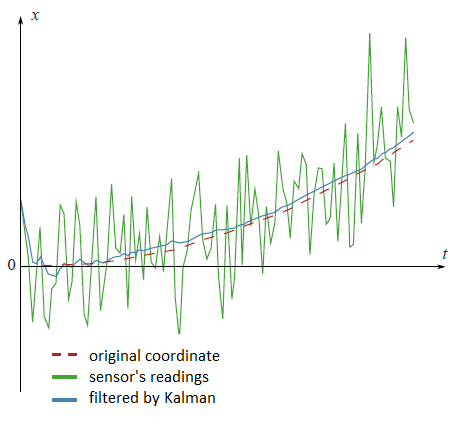

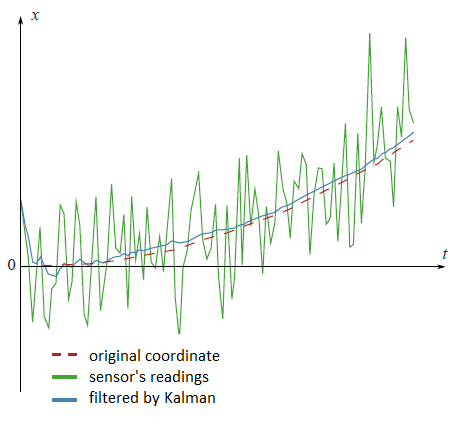

The plot at the beginning of this article shows filtered data from a fictional GPS sensor mounted on a fictional car which moves with constant acceleration $a$:

$$x_{t+1} = x_t + a t \cdot dt + \xi_t.$$

Look at the filtered results once again:

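Code in MATLAB

```matlab
clear all;

N = 100;         % number of samples
a = 0.1;         % acceleration
sigmaPsi = 1;    % standard deviation of the model error
sigmaEta = 50;   % standard deviation of the sensor error

% Simulate the true coordinate x and the noisy sensor readings z (dt = 1).
% normrnd requires the Statistics and Machine Learning Toolbox.
k = 1:N;
x = zeros(1, N);
z = zeros(1, N);
x(1) = 0;
z(1) = x(1) + normrnd(0, sigmaEta);
for t = 1:(N-1)
    x(t+1) = x(t) + a*t + normrnd(0, sigmaPsi);
    z(t+1) = x(t+1) + normrnd(0, sigmaEta);
end

% Kalman filter
xOpt = zeros(1, N);
eOpt = zeros(1, N);
K = zeros(1, N);
xOpt(1) = z(1);
eOpt(1) = sigmaEta;  % eOpt(t) is the square root of the error variance.
                     % It is not a random variable.
for t = 1:(N-1)
    eOpt(t+1) = sqrt((sigmaEta^2)*(eOpt(t)^2 + sigmaPsi^2) / ...
                     (sigmaEta^2 + eOpt(t)^2 + sigmaPsi^2));
    K(t+1) = (eOpt(t+1))^2 / sigmaEta^2;
    xOpt(t+1) = (xOpt(t) + a*t)*(1 - K(t+1)) + K(t+1)*z(t+1);
end

plot(k, xOpt, k, z, k, x);
legend('filtered', 'sensor', 'true');
```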

Analysis

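If one looks at how the Kalman coefficient $K_k$ changes with the iteration step $k$, one can see that it always stabilizes to a certain value $K_{stab}$.

In the next example we will discuss how this can simplify our life.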
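The stabilization of the Kalman coefficient can be observed by iterating the recursion on its own, without any data. The sketch below does this and compares the result with the fixed point of the recursion. The ratio of ten to one between the sensor and model standard deviations is an assumed example. The closed-form expression for the fixed point is derived here by setting $K_{k+1} = K_k$ in the recursion; it is not taken from the article.

```matlab
% Iterate the recursion for the Kalman coefficient and watch it stabilize.
% Assumed example: sigmaEta / sigmaXi = 10.
sigmaXi = 1; sigmaEta = 10;
nSteps = 200;
K = zeros(1, nSteps);
p = sigmaEta^2;                 % initial E(e^2), as when xOpt(1) = z(1)
for k = 1:nSteps
    A    = p + sigmaXi^2;
    K(k) = A / (A + sigmaEta^2);
    p    = sigmaEta^2 * K(k);   % equals sigmaEta^2 * A / (A + sigmaEta^2)
end

% Fixed point: with r = sigmaXi^2 / sigmaEta^2, setting K(k+1) = K(k)
% gives K^2 + r*K - r = 0; take the positive root.
r = sigmaXi^2 / sigmaEta^2;
Kstab = (-r + sqrt(r^2 + 4*r)) / 2;
fprintf('K after %d steps: %.6f, fixed point: %.6f\n', nSteps, K(end), Kstab);
```

Plotting `K` against the step number (for example with `plot(1:nSteps, K)`) shows a fast drop from about 0.5 followed by a flat tail at `Kstab`.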

Second example

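In practice it often happens that we know almost nothing about the physical model of what we are filtering. Imagine you have decided to filter the measurements from your favourite accelerometer. You don't know in advance how the accelerometer will be moved. The only thing you might know is the variance of the sensor's error, $\sigma_\eta^2$. In this difficult situation we can put all the unknown information about the physical model into the random variable $\xi_k$:

$$x_{k+1} = x_k + \xi_k.$$

Strictly speaking, this kind of system doesn't satisfy the conditions that we imposed on the random variable $\xi_k$, since it now carries the unknown physics of the motion. We can't say that the errors at different moments of time are independent of each other or that their means are zero. In other words, the Kalman theory is not applicable here. Still, we can try to use its machinery by choosing some reasonable values for $\sigma_\xi^2$ and $\sigma_\eta^2$, just to get a nice graph of the filtered data.

But there is a much simpler way. We saw that as the step $k$ increases, the Kalman coefficient always stabilizes to a certain value $K_{stab}$. So instead of guessing the values of $\sigma_\xi^2$ and $\sigma_\eta^2$ and computing $K_k$ from the formulas, we can assume that this coefficient is constant and simply select this constant. Then we only need to pick a reasonable value $K_{stab}$ and insert it into the iterative formula

$$x^{opt}_{k+1} = K_{stab} \cdot z_{k+1} + (1 - K_{stab}) \cdot x^{opt}_k.$$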

The next graph shows measurements from an imaginary sensor filtered in two different ways. The first method is the honest one, with all the formulas from the Kalman theory. The second method is the simplified one.

We see that there is not much difference between these two methods. There is a small discrepancy only at the beginning, when the Kalman coefficient has not yet stabilized.
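The comparison of the two methods can be reproduced with a short sketch. It filters the same noisy readings twice: once with the full recursion for the Kalman coefficient and once with a constant coefficient. The sinusoidal test signal, the noise levels, and the variable names are assumptions for the illustration. The constant coefficient is set to the fixed point of the recursion, which is a choice made here; in practice one would tune it by hand.

```matlab
% Full recursion versus constant coefficient on the same data (a sketch).
N = 200;
t = 1:N;
xTrue = 10 * sin(2*pi*t/100);          % slowly varying real value (assumed)
sigmaXi = 1; sigmaEta = 5;             % assumed tuning values
z = xTrue + sigmaEta * randn(1, N);    % noisy sensor readings

% Method 1: coefficient recomputed at every step, no control term (u = 0).
xFull = zeros(1, N); xFull(1) = z(1);
p = sigmaEta^2;
for k = 1:(N-1)
    A = p + sigmaXi^2;
    K = A / (A + sigmaEta^2);
    p = sigmaEta^2 * K;
    xFull(k+1) = K * z(k+1) + (1 - K) * xFull(k);
end

% Method 2: constant coefficient (fixed point of the recursion above).
r = sigmaXi^2 / sigmaEta^2;
Kstab = (-r + sqrt(r^2 + 4*r)) / 2;
xSimple = zeros(1, N); xSimple(1) = z(1);
for k = 1:(N-1)
    xSimple(k+1) = Kstab * z(k+1) + (1 - Kstab) * xSimple(k);
end

maxLateDiff = max(abs(xFull(N/2:end) - xSimple(N/2:end)));
fprintf('largest difference in the second half: %.2e\n', maxLateDiff);
```

The two outputs differ visibly only during the first few steps. Once the coefficient has settled, the simplified filter is an ordinary exponential smoothing of the readings and tracks the full recursion closely.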

Discussion

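We have seen that the main idea of the Kalman filter is to choose the coefficient $K_{k+1}$ in such a way that the filtered value

$$x^{opt}_{k+1} = K_{k+1} \cdot z_{k+1} + (1 - K_{k+1}) \cdot (x^{opt}_k + u_k)$$

on average is as close as possible to the real coordinate $x_{k+1}$. The filtered value $x^{opt}_{k+1}$ is a linear function of the sensor's measurement $z_{k+1}$ and the previous filtered value $x^{opt}_k$, which in turn is a linear function of $z_k$ and $x^{opt}_{k-1}$, and so on down the chain. So the filtered value depends linearly on all previous sensor readings:

$$x^{opt}_{k+1} = \lambda + \lambda_0 z_0 + \dots + \lambda_{k+1} z_{k+1}.$$

That is the reason why the Kalman filter is called a linear filter. It is possible to prove that the Kalman filter is the best of all linear filters, in the sense that it minimizes the mean square of the error.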

Multidimensional case

It is possible to generalise the whole Kalman theory to the multidimensional case. The formulas there look a bit more elaborate, but the idea behind their derivation remains the same as in one dimension. For an example, see this nice video.

Literature

The original article by Kalman can be downloaded here:

http://www.cs.unc.edu/~welch/kalman/media/pdf/Kalman1960.pdf