As we wrap up another year, it's time to look back at what our department has accomplished. 2025 brought us 42 published papers spanning fundamental ML theory, applied AI systems, and cutting-edge optimization methods—from transformer Hessians and generative models to hallucination detection and matrix-oriented optimizers.

Beyond publications, our students won competitions, defended their theses (14 Bachelor's, 9 Master's, 3 PhD and 1 DSc) and launched ambitious group research projects. Three of our faculty members and alumni received the prestigious Yandex ML Prize, and our head, Konstantin Vorontsov, was inducted into the Hall of Fame. If you read our summer overview of thesis defences or last winter's year-in-review for 2024, this post continues that story.

In this year-in-review, we dive into the research highlights, share stories from our educational programs, and celebrate the community that makes it all possible.

Research

Fundamental ML

Machine Learning Systems Dynamics and Theoretical Analysis

At the fundamental level, our researchers study both the global behaviour of learning systems and the local geometry of modern models.

In the paper A mathematical model of the hidden feedback loop effect in machine learning systems by our student Andrey Veprikov and his colleagues, large-scale ML systems are modelled as dynamical processes in which the environment gradually becomes dependent on the learner itself, breaking standard i.i.d. assumptions. Within this unified framework they describe phenomena such as error amplification, concept drift and echo chambers, and characterise limiting regimes for positive and negative feedback, offering a tool to reason about long-term risks of deployed models.

Zooming in from system dynamics to model internals, the paper Closing the Curvature Gap: Full Transformer Hessians and Their Implications for Scaling Laws by our students Egor Petrov, Nikita Kiselev, Vladislav Meshkov and their colleagues completes the Hessian analysis of Transformer blocks by deriving second‑order expressions for LayerNorm and feed‑forward sublayers. This allows them to trace how curvature propagates through the architecture and to connect these geometric properties to convergence behaviour and empirical scaling laws in large models.

Moving from artificial systems to human ones, the paper Coalescing Force of Group Pressure: Consensus in Nonlinear Opinion Dynamics by our student Iryna Zabarianska and her colleague extends a recent model of opinion dynamics with group pressure. They generalise it to time-varying, opinion-dependent interaction weights and multidimensional opinions, going well beyond classical bounded-confidence models. The work shows that under uniformly positive conformity, group pressure robustly drives consensus and provides tighter convergence guarantees. At the same time, the final public opinion can take any value within the convex hull of initial views, which captures realistic scenarios such as polling with random participants.

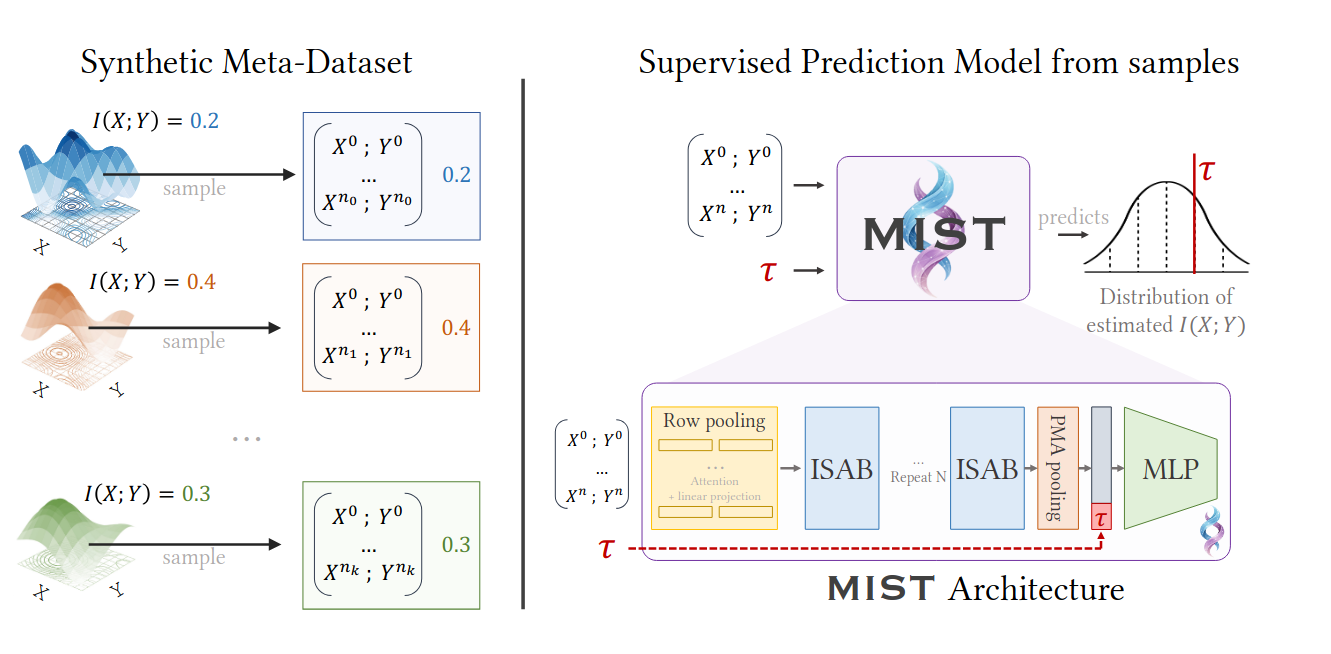

In parallel, our students are rethinking core information-theoretic tools used across ML. In the paper MIST: Mutual Information Via Supervised Training by our student German Gritsai and his colleagues, mutual information is estimated not via handcrafted objectives, but via a neural network meta-estimator trained on a massive synthetic corpus of distributions with known MI. Thanks to a permutation-invariant attention architecture and a quantile-regression training objective, their estimator provides both point estimates and calibrated uncertainty intervals, and empirically outperforms classical baselines across dimensions, sample sizes and even on unseen distribution families—all while being orders of magnitude faster at inference time.
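A meta-estimator like MIST needs training distributions whose MI is known in closed form. A classic example is the bivariate Gaussian with correlation ρ, where I(X;Y) = -½ log(1 - ρ²) nats. This is our illustration, not necessarily a family the authors use. The quantile-regression objective mentioned above is the pinball loss.

The plain-Python sketch below shows both pieces. The function names are hypothetical, and the attention-based network itself is omitted.

```python
import math
import random


def gaussian_mi(rho: float) -> float:
    """Closed-form mutual information (in nats) of a bivariate Gaussian with correlation rho."""
    return -0.5 * math.log(1.0 - rho * rho)


def sample_gaussian_pair(rho: float, n: int, rng: random.Random):
    """Draw n samples (x, y) with unit variances and correlation rho."""
    pairs = []
    for _ in range(n):
        x = rng.gauss(0.0, 1.0)
        noise = rng.gauss(0.0, 1.0)
        y = rho * x + math.sqrt(1.0 - rho * rho) * noise
        pairs.append((x, y))
    return pairs


def pinball_loss(target: float, predicted_quantile: float, tau: float) -> float:
    """Quantile (pinball) loss: its expectation is minimised by the tau-quantile of the target."""
    diff = target - predicted_quantile
    return max(tau * diff, (tau - 1.0) * diff)


# A tiny synthetic "corpus": each entry is a sample set plus its ground-truth MI label.
rng = random.Random(0)
corpus = [(sample_gaussian_pair(rho, 256, rng), gaussian_mi(rho)) for rho in (0.0, 0.5, 0.9)]
```

Training one network on many such (samples, label) pairs, with one pinball loss per target quantile, is what yields interval estimates rather than a single number.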

Finally, at the model-selection level, the paper Selection of Optimal Autoencoder Structure Using Bayesian Optimization Methods by Anton Bishuk and his colleague looks at how to automatically design effective autoencoder architectures. They treat the structure of the encoder–decoder model as a hyperparameter space and propose a two-stage Bayesian optimisation scheme: it first filters promising candidates and then refines the choice based on learning dynamics. Experiments on CIFAR and Fashion-MNIST show that this principled search can reliably discover high-quality architectures without manual tuning.

Generative Models and Optimal Transport

Another fundamental direction of our research connects generative modeling with the theory of optimal transport and score-based methods.

In the paper Universal Inverse Distillation for Matching Models with Real-Data Supervision (No GANs) by our student Nikita Kornilov and his colleagues, the authors address the slow inference of diffusion, flow and other matching models by proposing RealUID—a universal, one‑step distillation framework that works across architectures. RealUID incorporates real data directly into the distillation process without adversarial training and unifies several previous approaches to flow and diffusion distillation under a single theoretical view.

Complementing this algorithmic view, the paper Generalization error bound for denoising score matching under relaxed manifold assumption by our student Konstantin Yakovlev and his colleague examines the theoretical properties of denoising score matching. They model the data distribution with a nonparametric Gaussian mixture and relax the standard manifold assumption, allowing samples to deviate from a low-dimensional manifold while still exploiting structure. The authors derive non-asymptotic bounds on approximation and generalisation errors, with rates governed by intrinsic dimension and remaining valid even when the ambient dimension grows polynomially with the sample size.

To make such models measurable and comparable, the paper Entering the Era of Discrete Diffusion Models: A Benchmark for Schrodinger Bridges and Entropic Optimal Transport by our student Grigoriy Ksenofontov and his colleagues introduces the first systematic benchmark for Schrödinger bridges on discrete spaces. Their construction provides pairs of distributions with analytically known SB solutions. This enables rigorous evaluation of existing and new algorithms, including their own DLightSB and DLightSB-M methods and an extended -CSBM algorithm. The benchmark lays the groundwork for reproducible comparisons of EOT/SB solvers in high-dimensional discrete settings.

Finally, in the note On the Equivalence of Optimal Transport Problem and Action Matching with Optimal Vector Fields by our student Nikita Kornilov and his colleague, the authors clarify the relationship between Flow Matching, Action Matching and Optimal Transport. They show that if one restricts attention to optimal vector fields, Action Matching can recover OT solutions while learning vector fields for entire sequences of distributions, rather than for a manually chosen interpolation. Together, these works connect practical generative methods with the underlying theory of optimal transport and stochastic bridges.

Applied ML

LLM Reasoning, Interpretability, and Text Processing

Within applied machine learning, a major focus of our group is on understanding and improving language models and NLP systems.

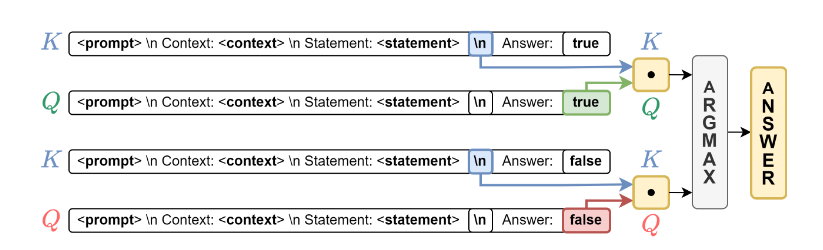

In the paper Quantifying Logical Consistency in Transformers via Query-Key Alignment by our student Anastasia Voznyuk and her colleagues, the authors introduce the “QK‑score” — a lightweight metric based on query–key alignments that predicts whether a model’s chain‑of‑thought is logically valid. They show that a small set of attention heads selected by this metric can predict logical correctness significantly better than raw model probabilities, revealing latent reasoning structure in transformers and offering an efficient alternative to ablation‑based analyses.

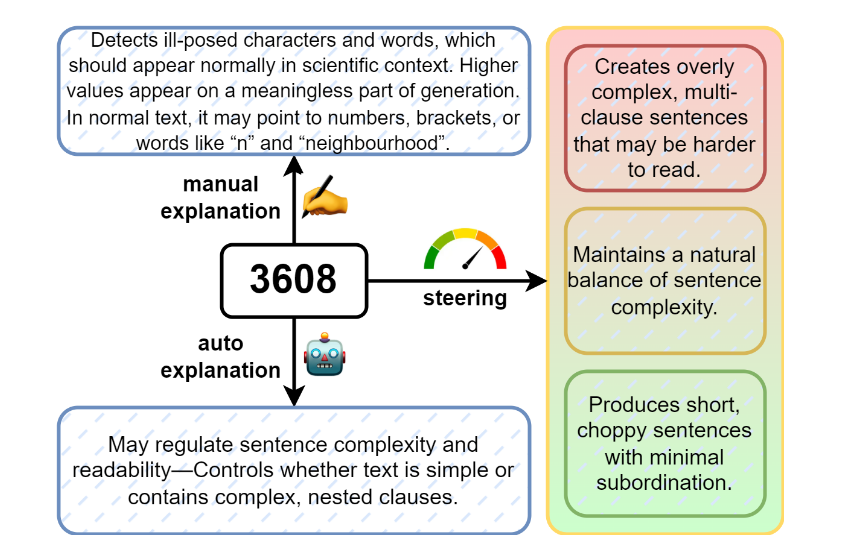

While QK-score focuses on internal attention patterns, the paper Interpreting transformer-based classifiers via clustering by our student German Gritsai and his colleague proposes a complementary interpretation pipeline based on clustering hidden representations. They translate model decisions into natural-language explanations by clustering layer activations, selecting representative examples, and using large language models to extract recurring textual patterns that support a prediction. In a case study on machine-generated text detection, this method reveals how classifiers rely on stylistic and structural cues, offering a more human-readable view of model behaviour.

Beyond model internals, several works tackle the structure and quality of linguistic data itself. The paper Text Tree Edit Distance: A Language Model-Based Metric for Text Hierarchies by our student Fedor Sobolevsky and his colleague introduces a new metric—text tree edit distance (TTED)—for comparing hierarchical text structures such as summaries or mind maps. Building on classical tree edit distance enriched with LLM-based semantic similarity, TTED better reflects both structure and meaning than previous baselines, as confirmed by extensive experiments.
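To illustrate "tree edit distance with a pluggable label similarity", here is a deliberately simplified, top-down variant. In it, nodes can only be matched if their parents are matched, and deleting or inserting a child costs one unit per node of its subtree.

`token_overlap_cost` is a hypothetical stand-in for the LLM-based semantic similarity that TTED uses. The exact TTED algorithm may differ.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Node:
    label: str
    children: List["Node"] = field(default_factory=list)


def size(node: Node) -> int:
    """Number of nodes in the subtree rooted at `node`."""
    return 1 + sum(size(c) for c in node.children)


def token_overlap_cost(a: str, b: str) -> float:
    """Hypothetical relabel cost: 1 - Jaccard overlap of lowercase word sets."""
    wa, wb = set(a.lower().split()), set(b.lower().split())
    if not wa and not wb:
        return 0.0
    return 1.0 - len(wa & wb) / len(wa | wb)


def top_down_distance(t1: Node, t2: Node, relabel: Callable[[str, str], float]) -> float:
    """Relabel the roots, then align the two child sequences with edit-distance DP."""
    kids1, kids2 = t1.children, t2.children
    m, n = len(kids1), len(kids2)
    # dp[i][j] = cost of aligning the first i children of t1 with the first j children of t2
    dp = [[0.0] * (n + 1) for _ in range(m + 1)]
    for i in range(1, m + 1):
        dp[i][0] = dp[i - 1][0] + size(kids1[i - 1])
    for j in range(1, n + 1):
        dp[0][j] = dp[0][j - 1] + size(kids2[j - 1])
    for i in range(1, m + 1):
        for j in range(1, n + 1):
            dp[i][j] = min(
                dp[i - 1][j] + size(kids1[i - 1]),  # delete a whole child subtree
                dp[i][j - 1] + size(kids2[j - 1]),  # insert a whole child subtree
                dp[i - 1][j - 1] + top_down_distance(kids1[i - 1], kids2[j - 1], relabel),
            )
    return relabel(t1.label, t2.label) + dp[m][n]


outline_a = Node("Year in review", [Node("Research"), Node("Teaching")])
outline_b = Node("Year in review", [Node("Research highlights"), Node("Teaching")])
distance = top_down_distance(outline_a, outline_b, token_overlap_cost)  # only the partial relabel costs
```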

Two papers by our student Ildar Khabutdinov and his colleagues focus on evaluation and correction of text. In The methodology of multi-criteria evaluation of text markup models based on inconsistent expert markup, they propose a richer markup formalism and a multi-criteria evaluation framework that remains meaningful even when expert annotations disagree, going beyond simple F1-based metrics. In Subword-Level Grammatical Error Correction: A Universal Approach, they design a fully automatic pipeline for generating training data and learning subword-level correction rules that are language-agnostic; applied to GECToR, this yields competitive performance on English benchmarks without manual rule design, suggesting a practical alternative to grammar-specific systems.

Hallucination and Artificial Content Detection

Another cluster of applied results is devoted to detecting hallucinations and artificial content produced by modern generative models.

In the paper Feature-level insights into artificial text detection with sparse autoencoders by our student Anastasia Voznyuk and her colleagues, Sparse Autoencoders are used to probe the residual stream of a modern LLM and extract interpretable features for artificial‑text detection. The authors show that these features capture systematic stylistic differences between human and machine‑generated texts and provide a more transparent basis for robust detectors.
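For readers new to sparse autoencoders, a common SAE formulation works as follows:

- **Encode:** a residual-stream vector x becomes a non-negative, mostly-zero feature vector f = ReLU(W_enc (x - b_dec) + b_enc).
- **Decode:** the reconstruction is x̂ = W_dec f + b_dec.
- **Train:** the loss is the reconstruction error plus an L1 sparsity penalty on f.

The plain-Python sketch below shows only that forward pass and loss, with hypothetical toy weights. The paper's exact SAE variant, sizes and training setup are not reproduced.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Vector) -> Vector:
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def sae_forward(x: Vector, w_enc: Matrix, b_enc: Vector, w_dec: Matrix, b_dec: Vector):
    """Return (features, reconstruction) for one input vector."""
    centered = [xi - bi for xi, bi in zip(x, b_dec)]
    pre = [p + b for p, b in zip(matvec(w_enc, centered), b_enc)]
    features = [max(0.0, p) for p in pre]  # ReLU: features are non-negative, many exactly zero
    recon = [r + b for r, b in zip(matvec(w_dec, features), b_dec)]
    return features, recon


def sae_loss(x: Vector, features: Vector, recon: Vector, l1_coeff: float) -> float:
    """Squared reconstruction error plus an L1 penalty encouraging sparse features."""
    mse = sum((xi - ri) ** 2 for xi, ri in zip(x, recon))
    return mse + l1_coeff * sum(abs(f) for f in features)


# Toy SAE: 2-dimensional "residual stream", 3 dictionary features (hypothetical weights).
w_enc = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]
b_enc = [0.0, 0.0, 0.0]
w_dec = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
b_dec = [0.0, 0.0]
x = [2.0, 0.5]
features, recon = sae_forward(x, w_enc, b_enc, w_dec, b_dec)
loss = sae_loss(x, features, recon, l1_coeff=0.1)
```

In the detection setting, individual coordinates of `features` are the candidate interpretable signals that can be inspected or fed to a classifier.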

Two works by Anastasia Voznyuk, German Gritsai and their colleagues focus on hallucinations and machine-generated content:

- Advacheck at SemEval-2025 Task 3: Combining NER and RAG to Spot Hallucinations in LLM Answers presents a competition-winning system. It combines NER, retrieval-augmented generation and LLMs to identify hallucinated spans in LLM outputs, including subtle errors such as misspelled names.
- Team advacheck at PAN: multitasking does all the magic describes a multitask transformer-based detector that separates domains via auxiliary classification heads. It achieves near-perfect validation performance and top ranks in a generative AI detection workshop.

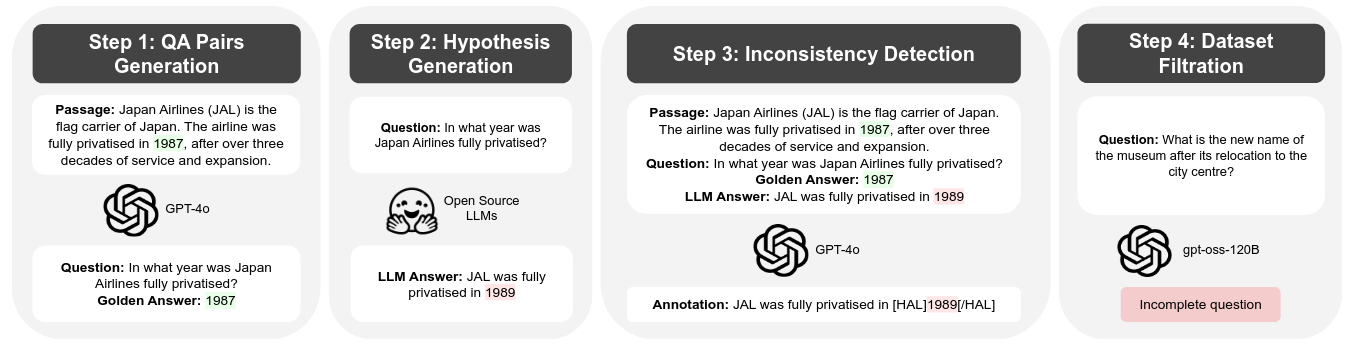

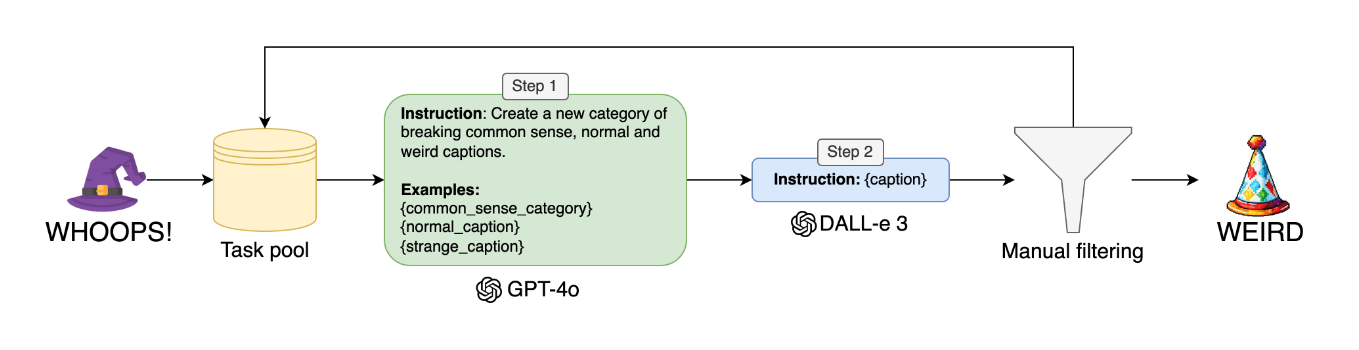

At the benchmark and dataset level, the paper When Models Lie, We Learn: Multilingual Span-Level Hallucination Detection with PsiloQA by our student Kseniia Petrushina and her colleagues introduces PsiloQA, a large-scale multilingual dataset with span-level hallucination annotations in 14 languages. Built via an automated pipeline that generates QA pairs, elicits potentially hallucinated answers, and labels hallucinated spans using GPT-4o and retrieved context, PsiloQA enables systematic comparison of hallucination detection methods. The authors show that encoder-based detectors achieve strong cross-lingual generalisation and transfer well to other benchmarks, at a fraction of the cost of manual annotation.
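Span-level hallucination detectors are typically scored by comparing predicted and gold character spans. A simple and widely used choice is intersection-over-union over the sets of flagged character positions. Whether PsiloQA's evaluation uses exactly this metric is an assumption here, and the helpers below are hypothetical.

```python
from typing import Iterable, Set, Tuple

Span = Tuple[int, int]  # half-open character interval [start, end)


def to_positions(spans: Iterable[Span]) -> Set[int]:
    """Expand half-open spans into the set of character indices they cover."""
    positions: Set[int] = set()
    for start, end in spans:
        positions.update(range(start, end))
    return positions


def span_iou(predicted: Iterable[Span], gold: Iterable[Span]) -> float:
    """Character-level IoU; two empty span sets count as a perfect match by convention."""
    p, g = to_positions(predicted), to_positions(gold)
    if not p and not g:
        return 1.0
    return len(p & g) / len(p | g)


answer = "The Eiffel Tower was completed in 1899."
gold = [(34, 38)]  # the hallucinated year "1899" (the tower was completed in 1889)
pred = [(31, 38)]  # a detector that flags "in 1899"
score = span_iou(pred, gold)  # 4 shared characters out of 7 flagged in total
```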

Image Generation and Detection

Another series of works investigates the generation and evaluation of visual content.

In the paper Kandinsky 5.0: A Family of Foundation Models for Image and Video Generation by our student Nikita Kiselev and his colleagues, the authors present Kandinsky 5.0, a line-up of foundation models for high-resolution image and short-video generation. They describe the data curation pipeline, architectural choices and optimisation tricks that allow their 6B–19B parameter models to achieve strong quality and fast inference across a wide range of generative tasks.

On the detection side, the paper Beyond familiar domains: a study of the generalization capability of machine-generated image detectors by our student Daniil Dorin and his colleagues systematically evaluates how well current detectors of AI-generated images cope with unseen generators and domains. Studying popular architectures such as CLIP-based classifiers and mixture-of-experts models, they find that generalisation beyond the training domain is still very limited, highlighting the need for more robust visual detectors as generated content spreads into real-world workflows.

Two works by Kseniia Petrushina and her colleagues propose novel ways to quantify image realism and common-sense consistency. Through the Looking Glass: Common Sense Consistency Evaluation of Weird Images uses large vision–language models (LVLMs) to extract atomic facts from images and then trains a compact classifier on these facts to detect common-sense violations, achieving state-of-the-art results on WHOOPS! and WEIRD. Don't Fight Hallucinations, Use Them: Estimating Image Realism using NLI over Atomic Facts goes further by explicitly leveraging LVLM hallucinations: by computing entailment relations between true and hallucinated atomic facts via NLI, the method produces a single “reality score” that sharply separates realistic and implausible images in a zero-shot setting.

In the paper Pairwise image matching for plagiarism detection by our student Daniil Dorin and his colleagues, the authors address plagiarism detection, a critical task in academic publishing, journalism, e-commerce and media verification. While most attention goes to textual plagiarism, image plagiarism remains a significant concern, particularly in biology and medicine.

Automated retrieval systems often surface many potential candidates, but a high rate of false positives (pairs incorrectly flagged as plagiarism) makes highly accurate pairwise matching essential for verification. Plagiarized images are often disguised by manual alterations such as rotation, mirroring, conversion to grayscale and color distortion. This work therefore focuses on minimizing the false positive rate (FPR) in pairwise image plagiarism detection through a rigorous analysis of similarity scoring models.

The proposed approach is a siamese network with three key components:

- a weight-shared encoder;
- a symmetric fusion module that combines the two embeddings in an order-invariant way;
- a similarity classification head.

Training uses a hybrid self-supervised strategy with plagiarism-mimicking augmentations, combining a cross-entropy loss with contrastive regularization. Experiments on images from multiple domains show that end-to-end trained models consistently outperform approaches built on frozen state-of-the-art representations.
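The symmetric fusion module must give the same output whichever image of the pair is passed first. One common way to get this order invariance is to combine the two embeddings only through symmetric operations, such as the element-wise sum, absolute difference and product. This is an illustrative choice, not necessarily the authors' exact module. The toy `similarity_head`, a logistic layer with hypothetical weights, stands in for the trained head.

```python
import math
from typing import List


def symmetric_fusion(a: List[float], b: List[float]) -> List[float]:
    """Order-invariant combination: symmetric_fusion(a, b) == symmetric_fusion(b, a)."""
    total = [x + y for x, y in zip(a, b)]
    abs_diff = [abs(x - y) for x, y in zip(a, b)]
    product = [x * y for x, y in zip(a, b)]
    return total + abs_diff + product


def similarity_head(fused: List[float], weights: List[float], bias: float) -> float:
    """Hypothetical logistic head: probability that the pair is an (altered) copy."""
    logit = sum(w * f for w, f in zip(weights, fused)) + bias
    return 1.0 / (1.0 + math.exp(-logit))


emb_original = [0.2, -0.1, 0.7]   # embeddings from the shared encoder (made-up values)
emb_mirrored = [0.25, -0.05, 0.65]
weights = [0.1] * 9               # hypothetical head weights
p_ab = similarity_head(symmetric_fusion(emb_original, emb_mirrored), weights, 0.0)
p_ba = similarity_head(symmetric_fusion(emb_mirrored, emb_original), weights, 0.0)
```

Because every fused feature is symmetric in its two inputs, `p_ab` and `p_ba` are identical by construction. Any pair score therefore cannot depend on argument order.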

In a different but equally demanding visual setting, the paper Enhancing fMRI data decoding with spatiotemporal characteristics in limited dataset by our student Daniil Dorin and his colleagues investigates decoding functional MRI signals under severe data limitations. They propose an algorithm for extracting subject-specific activity masks to reduce spatial dimensionality and pair it with an encoder based on Riemannian geometry to capture spatiotemporal structure. The resulting classifier outperforms neural baselines on single-subject fMRI tasks and illustrates how careful architectural design can compensate for small, noisy datasets.

Optimization

Variational Inequalities

In optimisation, several works develop and analyse algorithms for variational inequalities — a unifying framework behind many equilibrium and game-theoretic problems.

In the paper Shuffling heuristic in variational inequalities: Establishing new convergence guarantees by our student Egor Petrov and his colleagues, the authors study the popular shuffling strategy, where data is permuted and processed sequentially instead of being sampled independently. They provide the first convergence guarantees for this heuristic in the VI setting and show experimentally that shuffling can lead to faster convergence than independent sampling on a range of benchmark problems.
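The two sampling schemes differ only in the order in which the n component operators are visited. The sketch below runs a stochastic forward method on a toy finite-sum variational inequality F(z) = (1/n) Σ F_i(z).

The components are hypothetical and strongly monotone: F_i(z) = μ(z - c_i) + Jz, where J is a 90-degree rotation. The rotation makes the problem game-like rather than a plain minimisation. The sketch illustrates random reshuffling only, not the paper's algorithms or step-size rules.

```python
import random
from typing import List, Tuple

Vec = Tuple[float, float]


def component_operator(z: Vec, c: Vec, mu: float) -> Vec:
    """F_i(z) = mu * (z - c_i) + J z with J = [[0, 1], [-1, 0]]."""
    return (mu * (z[0] - c[0]) + z[1], mu * (z[1] - c[1]) - z[0])


def exact_solution(centers: List[Vec], mu: float) -> Vec:
    """Closed-form zero of the averaged operator: (mu * I + J) z = mu * c_bar."""
    cx = sum(c[0] for c in centers) / len(centers)
    cy = sum(c[1] for c in centers) / len(centers)
    denom = mu * mu + 1.0
    return ((mu * mu * cx - mu * cy) / denom, (mu * mu * cy + mu * cx) / denom)


def stochastic_forward(centers: List[Vec], mu: float, step: float, epochs: int,
                       shuffle: bool, seed: int = 0) -> Vec:
    rng = random.Random(seed)
    n = len(centers)
    z = (5.0, 5.0)
    for _ in range(epochs):
        if shuffle:
            order = rng.sample(range(n), n)               # random reshuffling: each index once per epoch
        else:
            order = [rng.randrange(n) for _ in range(n)]  # independent sampling with replacement
        for i in order:
            g = component_operator(z, centers[i], mu)
            z = (z[0] - step * g[0], z[1] - step * g[1])
    return z


centers = [(1.0, 0.0), (-1.0, 2.0), (3.0, 1.0), (0.0, -3.0)]
z_star = exact_solution(centers, mu=1.0)
z_shuffled = stochastic_forward(centers, mu=1.0, step=0.01, epochs=500, shuffle=True)
z_iid = stochastic_forward(centers, mu=1.0, step=0.01, epochs=500, shuffle=False)
```

With a constant step, both runs end in a neighbourhood of `z_star`. With reshuffling, every component is used exactly once per epoch, so the per-epoch sampling errors cancel to first order. That cancellation is the intuition behind the faster convergence guarantees for shuffling.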

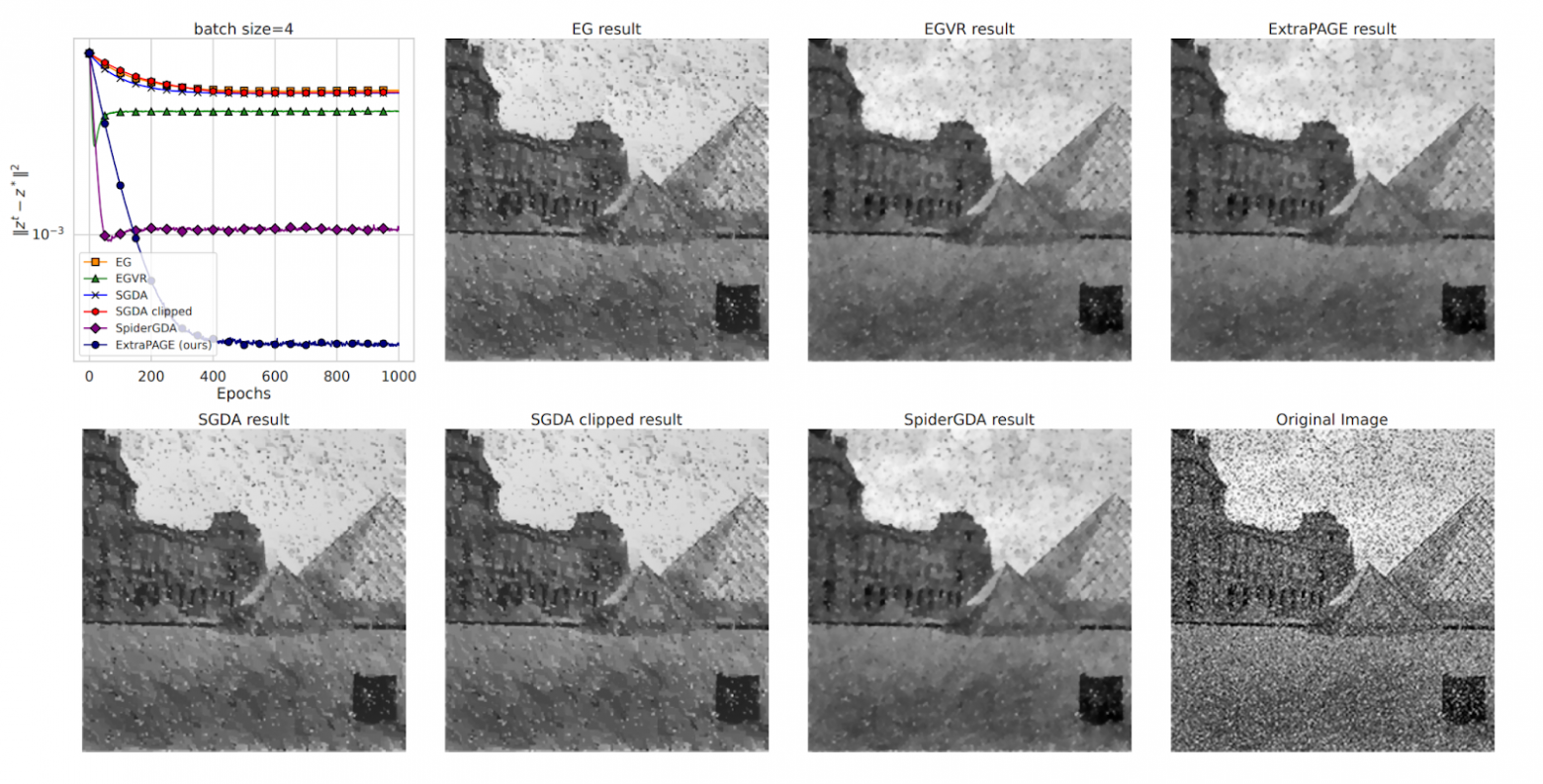

The companion paper When Extragradient Meets PAGE: Bridging Two Giants to Boost Variational Inequalities by our student Egor Petrov and his colleagues tackles stochastic VIs from a variance-reduction perspective. Combining the extragradient method with PAGE, they obtain a new stochastic algorithm with provable convergence under general assumptions and demonstrate its empirical advantages on a range of benchmarks, including denoising tasks—pushing the state of the art in both theory and practice.
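Why extragradient? Consider the bilinear game min_x max_y x·y, whose operator is F(x, y) = (y, -x). On it, simultaneous gradient descent-ascent spirals outward, while extragradient's extra look-ahead evaluation makes it converge.

The deterministic sketch below shows only this effect. The paper additionally combines the extragradient template with PAGE variance-reduced estimates of the operator, which is not reproduced here.

```python
import math
from typing import Tuple


def bilinear_operator(x: float, y: float) -> Tuple[float, float]:
    """Operator of min_x max_y x*y: (d/dx, -d/dy) of the payoff."""
    return y, -x


def gda(x: float, y: float, step: float, iters: int) -> Tuple[float, float]:
    """Simultaneous gradient descent-ascent."""
    for _ in range(iters):
        gx, gy = bilinear_operator(x, y)
        x, y = x - step * gx, y - step * gy
    return x, y


def extragradient(x: float, y: float, step: float, iters: int) -> Tuple[float, float]:
    """Take a look-ahead step, then update using the operator at the look-ahead point."""
    for _ in range(iters):
        gx, gy = bilinear_operator(x, y)
        xh, yh = x - step * gx, y - step * gy
        gx, gy = bilinear_operator(xh, yh)
        x, y = x - step * gx, y - step * gy
    return x, y


gda_norm = math.hypot(*gda(1.0, 1.0, 0.1, 1000))           # grows: each step scales the norm by sqrt(1.01)
eg_norm = math.hypot(*extragradient(1.0, 1.0, 0.1, 1000))  # shrinks towards the equilibrium (0, 0)
```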

The paper Variance Reduction Methods Do Not Need to Compute Full Gradients: Improved Efficiency through Shuffling by Alexey Rebrikov and his colleagues explores No Full Gradient SARAH, a variance-reduction method for stochastic optimization that avoids computing full gradients. The authors analyze its theoretical properties in both convex and non-convex settings, proving convergence under standard smoothness assumptions. They also evaluate its empirical performance in extensive image classification experiments. The results show that the method achieves competitive accuracy while reducing computational overhead, adding to the toolbox of efficient optimization techniques for large-scale machine learning. The paper also formed the basis of Alexey's Bachelor's thesis, which he successfully defended in summer 2025.

Sign-Based and Gradient Compression Methods

Another line of work investigates sign-based methods that are especially relevant for large-scale and distributed training.

In the paper Sign-SGD is the Golden Gate between Multi-Node to Single-Node Learning: Significant Boost via Parameter-Free Optimization by our student Egor Petrov and his colleagues, Sign‑SGD is treated as a unifying tool for memory‑efficient single‑node training and gradient compression in distributed setups. The authors design several practical, parameter‑free variants with momentum and demonstrate on real ML tasks that these methods can substantially reduce resource requirements while maintaining competitive accuracy.
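Two ideas underlie sign-based training:

- **Sign updates:** each coordinate moves by a fixed step in the direction of the sign of a momentum buffer.
- **Sign compression:** in the distributed case, workers send only signs, and the server combines them by majority vote (as in signSGD with majority vote).

The sketch below illustrates both ideas on a toy quadratic with a hand-picked decaying step size. The paper's parameter-free step-size rules are not reproduced.

```python
from typing import List


def sign(v: float) -> int:
    return (v > 0) - (v < 0)


def signum_step(x: List[float], m: List[float], grad: List[float], lr: float, beta: float = 0.9):
    """One sign-SGD-with-momentum step: update the momentum, then move each coordinate by +-lr."""
    m = [beta * mi + (1.0 - beta) * gi for mi, gi in zip(m, grad)]
    x = [xi - lr * sign(mi) for xi, mi in zip(x, m)]
    return x, m


def majority_vote(worker_grads: List[List[float]]) -> List[int]:
    """Server aggregation: each worker sends sign(grad); the server returns the sign of the vote sum."""
    dim = len(worker_grads[0])
    return [sign(sum(sign(g[j]) for g in worker_grads)) for j in range(dim)]


# Toy problem: f(x) = x0^2 + 3 * x1^2 with gradient (2 * x0, 6 * x1).
x, m = [3.0, -2.0], [0.0, 0.0]
for t in range(5000):
    grad = [2.0 * x[0], 6.0 * x[1]]
    x, m = signum_step(x, m, grad, lr=0.1 / (t + 1) ** 0.5)
final_loss = x[0] ** 2 + 3.0 * x[1] ** 2
```

Only one bit per coordinate leaves each worker in `majority_vote`, which is where the communication savings come from. The update size is set entirely by `lr`, not by the gradient's magnitude.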

From a robustness standpoint, the paper Sign Operator for Coping with Heavy-Tailed Noise in Non-Convex Optimization: High Probability Bounds Under (L0,L1)-Smoothness by our student Nikita Kornilov and his colleagues studies sign-based updates under generalized smoothness and heavy-tailed noise. They establish the first high-probability convergence guarantees for such methods in this setting and show, both in theory and in large language model experiments, that sign-based optimisation can outperform popular alternatives like gradient clipping and normalisation when data is severely corrupted.

Matrix-Oriented Optimization

Several other optimization papers explore matrix-aware algorithms that treat neural network weights as matrices rather than flat vectors.

In the paper Sampling of semi-orthogonal matrices for the Muon algorithm by our students Egor Petrov and Andrey Veprikov and their colleagues, the authors study how to efficiently sample semi‑orthogonal matrices in the Muon optimiser, especially in a zero‑order setting where gradients are approximated via forward passes. They compare several sampling strategies for fine‑tuning large language models and show how these choices affect convergence speed, memory usage and final quality.
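Muon replaces the momentum matrix with (an approximation of) its semi-orthogonal polar factor U Vᵀ from the SVD. It computes this factor with a Newton-Schulz iteration rather than an explicit SVD.

The sketch below uses the classic cubic iteration X ← 1.5 X - 0.5 X Xᵀ X on a Frobenius-normalised matrix. The widely used Muon implementation relies on a tuned quintic polynomial instead, and the paper's zero-order sampling strategies are not shown. The hypothetical helper `random_semi_orthogonal` orthogonalises a Gaussian matrix, which is just one simple way to produce a random semi-orthogonal direction.

```python
import math
import random
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(a: Matrix) -> Matrix:
    return [list(col) for col in zip(*a)]


def newton_schulz(g: Matrix, steps: int = 40) -> Matrix:
    """Approximate the polar factor U V^T of a full-rank matrix g with cubic Newton-Schulz."""
    norm = math.sqrt(sum(v * v for row in g for v in row))
    x = [[v / norm for v in row] for row in g]  # singular values now lie in (0, 1]
    for _ in range(steps):
        # Each singular value s is mapped to 1.5 s - 0.5 s^3, which drives it to 1.
        xxtx = matmul(matmul(x, transpose(x)), x)
        x = [[1.5 * a - 0.5 * b for a, b in zip(ra, rb)] for ra, rb in zip(x, xxtx)]
    return x


def random_semi_orthogonal(rows: int, cols: int, rng: random.Random) -> Matrix:
    """Hypothetical helper: orthogonalise a Gaussian matrix to get orthonormal rows (rows <= cols)."""
    g = [[rng.gauss(0.0, 1.0) for _ in range(cols)] for _ in range(rows)]
    return newton_schulz(g)


ortho = newton_schulz([[1.0, 2.0, 0.0], [0.0, 1.0, 3.0]])
```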

Building on the Muon paradigm, the paper The Ky Fan Norms and Beyond: Dual Norms and Combinations for Matrix Optimization by our student Alexey Kravatskiy and his colleagues explores duals of Ky Fan norms and their convex combinations as a basis for new Muon-like algorithms, called Fanions. Their F-Muon and S-Muon variants consistently match Muon’s performance and even outperform it on some synthetic problems, showing that richer matrix norms can lead to equally efficient but more flexible optimisers.

Two further works by our student Andrey Veprikov and his colleagues deepen this matrix-aware view. DyKAF: Dynamical Kronecker Approximation of the Fisher Information Matrix for Gradient Preconditioning proposes a projector-splitting approach for dynamically approximating the Fisher matrix in a Kronecker-factorised form, yielding better preconditioners and improved performance in large language model pre-training and fine-tuning. Preconditioned Norms: A Unified Framework for Steepest Descent, Quasi-Newton and Adaptive Methods introduces the notion of preconditioned matrix norms, showing that many popular optimisers—from SGD and Adam to Muon, KL-Shampoo and hybrid methods—fit into a single framework with clear conditions for affine and scale invariance.

LoRA, Robust Optimization, and Parameter-Efficient Fine-Tuning

Finally, a group of papers addresses parameter-efficient fine-tuning and robustness in deep learning.

In the paper Faster Than SVD, Smarter Than SGD: The OPLoRA Alternating Update by our student Andrey Veprikov and his colleagues, LoRA optimisation is reformulated as a low‑rank least‑squares subproblem that can be solved efficiently via a few alternating updates. Their OPLoRA optimiser closes much of the gap between standard LoRA and full low‑rank training (SVDLoRA), while keeping memory costs comparable to Adam.
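The alternating idea can be illustrated on the basic low-rank least-squares problem min over B, A of ‖M - BA‖_F. Fixing one factor turns it into an ordinary least-squares problem in the other.

The rank-1 sketch below alternates those two closed-form solves on hypothetical target matrices. It is a generic alternating least-squares illustration, not the OPLoRA update itself.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def rank1_als(m: Matrix, iters: int = 50) -> Tuple[List[float], List[float]]:
    """Alternating least squares for min over (b, a) of ||M - b a^T||_F."""
    rows, cols = len(m), len(m[0])
    a = [1.0] * cols  # initial right factor
    b = [0.0] * rows
    for _ in range(iters):
        aa = sum(v * v for v in a)
        b = [sum(m[i][j] * a[j] for j in range(cols)) / aa for i in range(rows)]  # best b for fixed a
        bb = sum(v * v for v in b)
        a = [sum(m[i][j] * b[i] for i in range(rows)) / bb for j in range(cols)]  # best a for fixed b
    return b, a


def residual(m: Matrix, b: List[float], a: List[float]) -> float:
    """Frobenius norm of M - b a^T."""
    return sum((m[i][j] - b[i] * a[j]) ** 2 for i in range(len(m)) for j in range(len(m[0]))) ** 0.5


exact_rank1 = [[3.0, 0.0, 1.0], [6.0, 0.0, 2.0]]  # outer product of [1, 2] and [3, 0, 1]
full_rank = [[3.0, 1.0], [1.0, 3.0]]              # singular values 4 and 2
b1, a1 = rank1_als(exact_rank1)
b2, a2 = rank1_als(full_rank)
```

For the full-rank example, the best rank-1 residual equals the discarded singular value, 2 (Eckart-Young). Each alternating step costs only a few matrix-vector products, which is the kind of saving over an SVD the paper's title alludes to.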

The paper WeightLoRA: Keep Only Necessary Adapters by our student Andrey Veprikov and his colleagues tackles another limitation of LoRA-based fine-tuning: the need to decide where to place adapters. Their WeightLoRA method adaptively selects the most important LoRA heads during training. This substantially reduces the number of trainable parameters while maintaining, or even improving, performance across a range of benchmarks and architectures.

Robustness to distribution shift is addressed in Aligning distributionally robust optimization with practical deep learning needs, where Andrey Veprikov and his collaborators propose ALSO, an adaptive loss-scaling optimiser for a modified distributionally robust objective. ALSO supports assigning weights not only to individual samples but also to groups (e.g. classes), comes with convergence guarantees for non-convex objectives, and empirically outperforms both traditional optimisers and existing DRO methods across diverse deep learning tasks.
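A common way to turn group-level DRO into per-step weights is to up-weight groups with higher loss through a softmax over losses with temperature τ. This is our illustration; ALSO's actual adaptive loss-scaling rule may differ. These weights are exactly the maximiser of Σ q_g L_g - τ KL(q ‖ uniform), a KL-regularised worst case over group mixtures.

```python
import math
from typing import Dict


def dro_group_weights(group_losses: Dict[str, float], temperature: float) -> Dict[str, float]:
    """q_g proportional to exp(loss_g / temperature)."""
    top = max(group_losses.values())  # subtract the max for numerical stability
    scores = {g: math.exp((loss - top) / temperature) for g, loss in group_losses.items()}
    total = sum(scores.values())
    return {g: s / total for g, s in scores.items()}


def weighted_objective(group_losses: Dict[str, float], weights: Dict[str, float]) -> float:
    """Training objective: the loss averaged under the adversarial group weights."""
    return sum(weights[g] * group_losses[g] for g in group_losses)


losses = {"cats": 0.3, "dogs": 0.5, "rare_birds": 1.4}  # hypothetical per-group losses
weights = dro_group_weights(losses, temperature=0.5)
objective = weighted_objective(losses, weights)
```

The temperature interpolates between ordinary averaging (τ → ∞ gives uniform weights) and worst-group training (τ → 0 puts all weight on the hardest group).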

Traffic and Network Optimization

Two applied optimization papers by our student Igor Ignashin focus on traffic and flow problems in networks. In the paper Stochastic Origin Frank-Wolfe for traffic assignment, the authors present the Stochastic Origin Frank-Wolfe (SOFW) method, a block-coordinate Frank-Wolfe variant for computing equilibrium traffic flows. By drastically reducing the number of shortest-path computations, SOFW achieves significant speedups over classical Frank-Wolfe methods on large-scale transportation networks, while retaining solid theoretical guarantees, including a new convergence proof for a batched variant.
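For context, here is the classical Frank-Wolfe baseline on the smallest possible traffic-assignment instance. One origin-destination pair is joined by two parallel routes with hypothetical linear travel times t₁(x) = 1 + x and t₂(x) = 2 + 0.5x, and the demand is 4.

Each iteration performs two steps:

1. An all-or-nothing assignment sends the whole demand to the currently cheapest route. This is the shortest-path step that SOFW economises on by sampling origins.
2. The new assignment is mixed in with the open-loop step 2/(k+2).

The Wardrop equilibrium of this instance is x₁ = x₂ = 2, where both routes take 3 time units.

```python
from typing import Callable, List


def frank_wolfe_parallel_routes(costs: List[Callable[[float], float]], demand: float,
                                iters: int) -> List[float]:
    """Classical Frank-Wolfe for traffic assignment on parallel routes between one OD pair."""
    n = len(costs)

    def all_or_nothing(flows: List[float]) -> List[float]:
        # Shortest-path step: send the entire demand along the currently cheapest route.
        times = [c(f) for c, f in zip(costs, flows)]
        best = min(range(n), key=lambda r: times[r])
        return [demand if r == best else 0.0 for r in range(n)]

    flows = all_or_nothing([0.0] * n)  # start from the free-flow assignment
    for k in range(iters):
        target = all_or_nothing(flows)
        step = 2.0 / (k + 2.0)
        flows = [f + step * (t - f) for f, t in zip(flows, target)]
    return flows


route_costs = [lambda x: 1.0 + x, lambda x: 2.0 + 0.5 * x]
flows = frank_wolfe_parallel_routes(route_costs, demand=4.0, iters=1000)
```

On a real network, `all_or_nothing` becomes one shortest-path computation per origin at every iteration. That per-iteration cost dominates on large networks, which is why reducing it pays off.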

The follow-up paper Modeling skiers flows via Wardrope equilibrium in closed capacitated networks applies similar equilibrium ideas to ski resorts. Here, users are assigned to cycles in a closed capacitated network, and queues form on lifts with limited capacity. The authors formulate the resulting equilibrium as a variational inequality and show that standard algorithms can efficiently compute waiting times and flows, illustrating how the same optimisation toolkit can address diverse real-world networks.

Stochastic Dynamics, Gradient Methods, and Expert Learning

The final group of optimisation results is devoted to stochastic dynamics, bandit-style decision making and learning with multiple experts. In the paper Functional multi-armed bandit and the best function identification problems by our student Ilgam Latypov and his colleagues, the authors introduce two new problem classes—functional multi-armed bandits and best function identification—where each arm is an unknown black-box function. They propose a reduction scheme that turns algorithms for nonlinear optimization into UCB-type bandit methods with provable regret bounds, and demonstrate that this framework is well suited to scenarios such as competitive LLM training.
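Both bandit papers in this group build on upper-confidence-bound ideas. As a reminder of the basic template they extend, here is classical UCB1 for Bernoulli arms: play each arm once, then pick the arm maximising its empirical mean plus √(2 ln t / nᵢ). The arm means are hypothetical. Neither the functional-bandit reduction nor the budget-constrained M-LCB algorithm is reproduced.

```python
import math
import random
from typing import List


def ucb1(arm_means: List[float], rounds: int, seed: int = 0) -> List[int]:
    """Run UCB1 on Bernoulli arms and return how many times each arm was pulled."""
    rng = random.Random(seed)
    k = len(arm_means)
    counts = [0] * k
    sums = [0.0] * k
    for t in range(1, rounds + 1):
        if t <= k:
            arm = t - 1  # initialisation: pull every arm once
        else:
            arm = max(range(k), key=lambda i: sums[i] / counts[i]
                      + math.sqrt(2.0 * math.log(t) / counts[i]))
        reward = 1.0 if rng.random() < arm_means[arm] else 0.0
        counts[arm] += 1
        sums[arm] += reward
    return counts


counts = ucb1([0.2, 0.5, 0.8], rounds=2000)
```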

At the level of optimisation dynamics themselves, the paper Why SGD is not Brownian Motion: A New Perspective on Stochastic Dynamics by our student Igor Ignashin challenges the common intuition that SGD behaves like Brownian motion. Instead, he derives a master equation and Fokker–Planck description showing that SGD follows deterministic dynamics in a fluctuating loss landscape, with non-stationary behaviour in valley-like regions. Experiments on vision and NLP tasks confirm these theoretical predictions and provide a more nuanced picture of how stochastic gradients explore complex loss surfaces.

The paper Intermediate Gradient Methods with Relative Inexactness by our student Nikita Kornilov and his colleagues then asks how gradient methods behave when the gradients themselves are noisy or inexact. Focusing on relative rather than absolute inexactness, they sharpen admissible error bounds for accelerated methods under strong convexity and show, via performance estimation, that these bounds are tight up to constants. Building on this, they design an adaptive “intermediate” gradient method whose convergence rate smoothly interpolates between robust non-accelerated and faster accelerated regimes, even when gradients are imperfect.
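To make relative inexactness concrete: the oracle may return any g̃ with ‖g̃ - ∇f(x)‖ ≤ α‖∇f(x)‖, so the error shrinks as the iterates approach a stationary point. For α = 0.2, the descent lemma still guarantees that a step of 1/L decreases f by at least 0.08‖∇f‖²/L.

The sketch below runs plain gradient descent with step 1/L on a hypothetical strongly convex quadratic, with the error pointing in a random direction. It illustrates only the oracle model, not the paper's accelerated or intermediate methods.

```python
import math
import random
from typing import List

CURVATURES = [1.0, 2.0, 4.0]  # f(x) = 0.5 * sum(c_i * x_i^2), so mu = 1 and L = 4


def f(x: List[float]) -> float:
    return 0.5 * sum(c * v * v for c, v in zip(CURVATURES, x))


def grad(x: List[float]) -> List[float]:
    return [c * v for c, v in zip(CURVATURES, x)]


def inexact_grad(x: List[float], alpha: float, rng: random.Random) -> List[float]:
    """Return g + e with ||e|| = alpha * ||g|| in a random direction (relative inexactness)."""
    g = grad(x)
    g_norm = math.sqrt(sum(v * v for v in g))
    d = [rng.gauss(0.0, 1.0) for _ in g]
    d_norm = math.sqrt(sum(v * v for v in d))
    return [gi + alpha * g_norm * di / d_norm for gi, di in zip(g, d)]


def gd_relative_inexact(x0: List[float], alpha: float = 0.2, iters: int = 500,
                        seed: int = 0) -> List[float]:
    rng = random.Random(seed)
    lipschitz = max(CURVATURES)
    x = list(x0)
    for _ in range(iters):
        g = inexact_grad(x, alpha, rng)
        x = [xi - gi / lipschitz for xi, gi in zip(x, g)]
    return x


x0 = [1.0, -1.0, 2.0]
x_final = gd_relative_inexact(x0)
```

The guaranteed per-step decrease shrinks as α grows and disappears entirely above roughly α ≈ 0.24 for this step size. Admissible error levels like this are what the paper sharpens for accelerated methods.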

Finally, in UCB-type Algorithm for Budget-Constrained Expert Learning, Ilgam Latypov and his collaborators consider systems that must choose between several adaptive learning algorithms under a per-round training budget. Their M-LCB meta-algorithm allocates updates across experts while providing anytime regret guarantees, covering settings where each expert is itself a contextual bandit or online learner. Together, these works connect stochastic optimisation theory with practical schemes for choosing and combining learning algorithms in dynamic environments.

Scientific activities

MIPT Conference

Presenting results to the research community is an important part of scientific work, and the MIPT Conference is one of the main venues where our students do so. This year, our students gave 22 talks in the section on Problems of Intelligent Data Analysis, Recognition, and Forecasting.

Thesis Defense

This year, several of our alumni successfully defended their PhD and DSc theses in artificial intelligence and optimisation:

Radoslav Neychev (PhD), supervised by Vadim Strijov: Prior information in semi-supervised learning.

Nikita Pletnev (PhD), supervised by Alexander Gasnikov: Gradient optimisation methods for solving inverse problems in mathematical physics.

Innokentiy Shibaev (PhD), supervised by Alexander Gasnikov: Derivative-free optimisation methods for functions with Hölder-continuous gradients.

Aleksandr Beznosikov (DSc), supervised by Alexander Gasnikov: Advanced topics in the complexity theory of numerical optimisation methods: distributed and stochastic settings and extensions to broader problem classes.

My First Scientific Paper

This year, we continued our traditional third-year course My First Scientific Paper, where every third-year student completes an individual research project under the supervision of our faculty. In total, 22 students finished their projects, covering topics from diffusion models and optimization with differential privacy to fMRI decoding, recommender systems, and robust detection of AI-generated images.

Most projects were written up as full research papers: at least 20 of them are already available as PDF preprints in our GitHub organization, typically accompanied by slide decks and open-source code. Together, these projects provide students with their first experience of conducting end-to-end research: formulating a problem, developing and implementing methods, and presenting results in the format of a scientific paper. Some of these projects have already evolved into published papers, which are included in the Research section above.

Creation Of Intelligent Systems

The Creation Of Intelligent Systems course is a continuation of My First Scientific Paper for fifth-year students, where they conduct scientific research in groups. This year, students worked on three projects.

Fidelity-Generalization Multi-teacher Knowledge Distillation. Multi-teacher knowledge distillation is widely used, yet it remains unclear how to balance two goals: keeping a student close to several teachers and improving its generalization on the target task. We address this setting and propose a fidelity–generalization framework. A simple decomposition shows that the average teacher–student discrepancy splits into a teacher-only diversity term and a term that depends only on the student and the ensemble centroid. Motivated by this, we formulate training as: improve task performance under explicit upper bounds on divergences to each teacher, using a practical objective that combines supervised learning with centroid-based distillation. This viewpoint explains why centroid distillation is central in the multi-teacher setting, unifies several existing objectives, and suggests diagnostics based on teacher diversity and fidelity profiles. This project was carried out by our students Altay Eynullayev, Sergey Firsov, Muhammadsharif Nabiev, and Denis Rubtsov.
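The decomposition above is easiest to see with squared Euclidean distance between the student output s and teacher outputs t_k. We use this distance as an assumption; the project may use a different divergence.

Expanding s - t_k = (s - t̄) - (t_k - t̄) makes the cross term vanish, because the deviations t_k - t̄ from the centroid t̄ sum to zero:

$$
\frac{1}{K}\sum_{k=1}^{K}\lVert s - t_k\rVert^2
= \underbrace{\frac{1}{K}\sum_{k=1}^{K}\lVert t_k - \bar{t}\rVert^2}_{\text{teacher diversity}}
+ \underbrace{\lVert s - \bar{t}\rVert^2}_{\text{student--centroid gap}},
\qquad \bar{t} = \frac{1}{K}\sum_{k=1}^{K} t_k .
$$

Only the second term depends on the student. When all teachers are weighted equally, distilling towards the centroid is therefore the natural target, while the first term sets a floor that no student can go below.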

Testing the Intermediate Layer Hypothesis in the Hidden Subspaces of Large Language Models. This paper investigates the geometric properties of hidden state representations in Large Language Models (LLMs), focusing on the intrinsic dimensionality of hidden subspaces. We conduct extensive experiments across multiple LLM architectures and layers, analyzing the relationship between geometric characteristics and model performance. Our findings reveal several consistent patterns in how intrinsic dimensionality and layer informativeness evolve across different models and prompting conditions. We demonstrate that semantic information is organized in low-dimensional subspaces. The geometric properties of hidden states exhibit strong correlations with model performance on various tasks. These results provide new insights into the internal mechanisms of LLMs and offer geometric interpretability tools for model analysis. This project was carried out by our students Vladislav Meshkov, Ivan Papay, Fedor Sobolevsky, and Vladislav Minashkin.

Jacobian Analysis of a Recurrent Transformer Block. This work studies looped transformers — architectures in which the same transformer block is applied iteratively to the state, forming a discrete dynamical system. This approach strengthens the model's inductive biases and improves its reasoning capabilities. We analyze the stability of the iterative transformer block via its Jacobian, generalizing recent results on the dynamics of recurrent self-attention. In particular, we derive an upper bound on the spectral norm of the Jacobian of the full block (MSA + FFN + RMSNorm) and show that RMS normalization plays a key role in suppressing gradient growth as the update step increases. Moreover, we prove that for large values of the update-step parameter, the spectral norm of the Jacobian remains bounded, which ensures stability of the iterative dynamics — an effect arising from a compensating interaction between activation scaling and the structure of RMSNorm. We also conduct numerical experiments on synthetic data and on CIFAR-10 that confirm the Jacobian-based conclusions, investigate convergence of the iterative process, and compare theoretical bounds with empirical spectral norms. The results provide a deeper understanding of the stability mechanisms of looped transformers and pave the way for designing more robust and well-founded architectures for reasoning tasks. This project was carried out by our students Ilya Stepanov, Vadim Kasiuk, Dmitriy Vasilenko, and Gleb Karpeev.
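One elementary fact behind the role of RMSNorm: without the learnable gain and ignoring the small ε, RMSNorm(x) = x / √(mean(x²)) is invariant to rescaling x by any c > 0. Differentiating RMSNorm(cx) = RMSNorm(x) gives J(cx) = J(x)/c, so the Jacobian shrinks as the input grows.

The finite-difference check below illustrates this property only. It is not the project's bound on the full MSA + FFN + RMSNorm block.

```python
import math
from typing import Callable, List


def rms_norm(x: List[float], eps: float = 1e-12) -> List[float]:
    """RMSNorm without a learnable gain: x / sqrt(mean(x^2) + eps)."""
    rms = math.sqrt(sum(v * v for v in x) / len(x) + eps)
    return [v / rms for v in x]


def jacobian_fro_norm(fn: Callable[[List[float]], List[float]], x: List[float],
                      h: float = 1e-6) -> float:
    """Frobenius norm of the Jacobian of fn at x, estimated with central differences."""
    total = 0.0
    for j in range(len(x)):
        x_plus = list(x)
        x_plus[j] += h
        x_minus = list(x)
        x_minus[j] -= h
        column = [(a - b) / (2 * h) for a, b in zip(fn(x_plus), fn(x_minus))]
        total += sum(c * c for c in column)
    return math.sqrt(total)


x = [0.5, -1.0, 2.0, 0.25]
ratio = jacobian_fro_norm(rms_norm, x) / jacobian_fro_norm(rms_norm, [10.0 * v for v in x])
```

A tenfold larger input gives a tenfold smaller Jacobian. This is one way to see why a normalised update can stay stable even as the pre-normalisation activations grow.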

While the students have just completed their research on these topics, we are very much looking forward to seeing these works reach publication and appear in our next year-in-review post for 2026!

Research code implementations

Beyond published papers, our students actively contribute to open-source research software that makes cutting-edge methods accessible to the broader community.

A team of our students, Ivan Papay, Vladislav Meshkov, Ilya Stepanov, and Vladislav Minashkin, extended the PyTorch library relaxit and released version 1.2.0, which implements new methods for relaxing discrete variables: Rebar Relaxation, Decoupled Straight-Through Gumbel-Softmax, and Generalized Gumbel-Softmax. These methods are useful in generative models such as VAEs. The team also implemented the RELAX algorithm for reinforcement learning tasks, extending the project's scope from generative modelling to RL.
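The relaxit API is not reproduced here. As a hedged illustration of the basic idea the new methods build on, the NumPy sketch below draws standard Gumbel-Softmax samples and takes the hard one-hot forward value used by straight-through estimators. It is not the decoupled or generalized variant from relaxit, and the function names are hypothetical.

```python
import numpy as np


def softmax(z, axis=-1):
    z = z - np.max(z, axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(z)
    return e / np.sum(e, axis=axis, keepdims=True)


def gumbel_softmax_sample(logits, tau, rng):
    """Relaxed one-hot sample: softmax((logits + Gumbel(0, 1) noise) / tau)."""
    u = rng.uniform(low=np.finfo(float).tiny, high=1.0, size=logits.shape)
    gumbel = -np.log(-np.log(u))  # inverse-CDF sampling of standard Gumbel noise
    return softmax((logits + gumbel) / tau)


def hard_one_hot(y):
    """Forward value used by straight-through estimators: one-hot of argmax."""
    out = np.zeros_like(y)
    np.put_along_axis(out, np.argmax(y, axis=-1)[..., None], 1.0, axis=-1)
    return out


rng = np.random.default_rng(0)
probs = np.array([0.5, 0.3, 0.2])
logits = np.tile(np.log(probs), (20000, 1))  # 20000 independent draws
y = gumbel_softmax_sample(logits, tau=0.5, rng=rng)
print(y[0])                           # a relaxed sample on the simplex
print(hard_one_hot(y).mean(axis=0))   # empirical class frequencies, close to probs
```

In an autodiff framework the straight-through estimator uses `y_hard + y - stop_gradient(y)` (in PyTorch, `y.detach()` plays the role of `stop_gradient`), so the forward pass is discrete while gradients flow through the relaxed sample. Since softmax preserves the argmax, the hard samples follow the categorical distribution softmax(logits) exactly, which the empirical frequencies reflect.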

Kalman Filter and Extensions is a library implementing Kalman filters and their modifications in PyTorch, which makes them easy to combine with PyTorch-based deep learning models. The library is implemented by Matvei Kreinin, Maria Nikitina, Petr Babkin, and Anastasia Voznyuk, and includes the classic Kalman filter, the Extended Kalman Filter (EKF), the Unscented Kalman Filter (UKF), the Variational Kalman Filter, and the Deep Kalman Filter. These algorithms estimate the hidden states of dynamic systems. They rely on a Bayesian approach to probabilistic state-space modelling: in the classic filter, the system is described by linear state-transition and observation equations with Gaussian noise, while EKF and UKF extend the approach to nonlinear dynamics. Kalman filters are widely used in navigation, robotics, signal processing, and financial forecasting, and are closely related to more complex models such as Gaussian processes, hidden Markov models, and state space models.
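For readers new to the classic filter, here is a minimal NumPy sketch of the linear Kalman filter's predict/update cycle. It is our own illustration and does not use the library's API; the names F (transition), H (observation), Q and R (process and observation noise covariances) follow common textbook notation, and the toy scenario is hypothetical.

```python
import numpy as np


def kf_predict(x, P, F, Q):
    """Propagate the state mean and covariance through x' = F x + w, w ~ N(0, Q)."""
    return F @ x, F @ P @ F.T + Q


def kf_update(x, P, z, H, R):
    """Condition on an observation z = H x + v, v ~ N(0, R)."""
    innovation = z - H @ x
    S = H @ P @ H.T + R                  # innovation covariance
    K = np.linalg.solve(S, H @ P).T      # Kalman gain P H^T S^{-1} (P, S symmetric)
    x_new = x + K @ innovation
    P_new = (np.eye(P.shape[0]) - K @ H) @ P
    return x_new, P_new


def track_constant(true_value=5.0, n_steps=200, seed=0):
    """Toy example: estimate a constant scalar from noisy measurements."""
    rng = np.random.default_rng(seed)
    F = H = np.eye(1)
    Q = np.zeros((1, 1))                   # the state does not drift
    R = np.eye(1)                          # unit observation noise variance
    x, P = np.zeros(1), 100.0 * np.eye(1)  # vague prior
    for _ in range(n_steps):
        z = np.array([true_value]) + rng.normal(size=1)
        x, P = kf_predict(x, P, F, Q)
        x, P = kf_update(x, P, z, H, R)
    return x, P


x_est, P_est = track_constant()
print(x_est, P_est)
```

With Q = 0 the posterior variance follows 1/P_new = 1/P + 1/R after every measurement, so the estimate behaves like a running average of the observations that starts from the prior.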

Bensemble is a PyTorch-based library for Bayesian deep learning that brings ensemble and uncertainty-quantification methods under a unified training/inference interface. The library is implemented by Vadim Kasiuk, Muhammadsharif Nabiev, Fedor Sobolevsky, and Dmitrii Vasilenko. It includes several canonical approaches, e.g. variational inference, the Laplace approximation, variational Rényi inference, and probabilistic backpropagation, and ships with documentation, tests, and example notebooks for reproducible benchmarking.
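Whatever the inference method, predictions from several ensemble members or posterior samples are commonly summarised with the law of total variance. The sketch below is a hypothetical helper that does not use Bensemble's interface; it assumes each member outputs a Gaussian mean and variance per input and splits the predictive variance into an aleatoric part (average predicted noise) and an epistemic part (disagreement between members).

```python
import numpy as np


def ensemble_predictive(means, variances):
    """Moments of an equally weighted mixture of Gaussian predictions.

    means, variances: arrays of shape (M, N), one row per ensemble member or
    posterior sample and one column per test input.
    Returns (mean, total, aleatoric, epistemic), each of shape (N,).
    """
    mean = means.mean(axis=0)
    aleatoric = variances.mean(axis=0)  # average noise the members predict
    epistemic = means.var(axis=0)       # spread of member means (ddof=0)
    return mean, aleatoric + epistemic, aleatoric, epistemic


# Two hypothetical members that agree on the noise level but disagree on the mean.
means = np.array([[1.0, 0.0], [3.0, 0.0]])
variances = np.array([[1.0, 0.5], [1.0, 0.5]])
print(ensemble_predictive(means, variances))
```

For the first input the members disagree, so half of the total variance is epistemic; for the second they agree and all of it is aleatoric.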

Yandex ML Prize

This year, several members of our department received the Yandex ML Prize, which honors outstanding contributions to machine learning education in Russia.

Konstantin Vorontsov, head of our department, was inducted into the Hall of Fame, a special category for scientists and mentors who have fundamentally shaped the ML community in Russia and continue to inspire new generations.

Radoslav Neychev, a graduate of our department, became a laureate in the Instructors category for his work on open ML courses and long-term teaching activity.

Aleksandr Bogdanov, also a graduate of our department and now at MIPT, received the prize in the Young Instructors category for his early-career teaching achievements in optimization and machine learning.

Other awards

Denis Rubtsov actively participated in student olympiads. He took second place in Russia in the "Mathematical Modeling" category of the "I Am a Professional" Olympiad, earning a silver medal. He also won a prize in the Skoltech Anti-Olympiad, in which students create machine learning problems rather than solve them.

Alexey Kravatskiy received the Best Paper Award at the ICOMP conference for his work The Ky Fan Norms and Beyond: Dual Norms and Combinations for Matrix Optimization.