In Part 1, we became familiar with the concept of entropy.

In this part, I'm going to talk about Mutual Information - a concept that opens the doors to error-resistant coding, compression algorithms, and also provides a fresh perspective on regression and Machine Learning tasks.

This is a necessary component to transition to ML tasks as we move into the next part, treating them as challenges in extracting mutual information between features and the predicted variable. One way to explain the success of ML models is that they create a natural bottleneck, limited by a self-adjusting value of information bits, through which information about the input data is passed (distilled). But that's a topic for the next part.

Here, there will be three important visuals:

The first one is about visualizing the entropies of two random variables and their mutual information.

The second one is about understanding the concept of the dependency between two random variables and the fact that zero correlation doesn't necessarily mean independence.

And the third one is about how the capacity of an information channel has a straightforward geometric interpretation through the convexity measure of the entropy function.

We will also prove a simplified version of Shannon-Hartley's theorem regarding the maximum bandwidth of a noisy channel.

The material is quite complex, presented concisely, and is more like lecture notes. It is assumed that you will independently explore unclear points or ask me questions to clarify them in a more understandable and detailed manner.

2. Mutual Information

When you have two dependent variables, you can talk about how much information about one is contained in the other. The last tasks in Part 1 essentially revolved around this concept - Mutual Information between two random variables.

For example, let's consider a pair = (person's_weight, person's_height). For simplicity, let's assume these are integers measured in kilograms and centimeters, with a finite number of possible values. In theory, we could gather data from 7 billion people and build a two-dimensional distribution for the pair

- the distribution of two dependent random variables. We can separately construct the distribution only for weight

(ignoring height) and the distribution for height

(ignoring weight). These two distributions are called marginal distributions for the joint distribution on the plane

In this context, these marginal distributions are naturally referred to as prior distributions - they correspond to our knowledge of weight and height when we know nothing else about the person.

It's clear that information about a person's height will cause us to reconsider the distribution of weight. For example, the message "height = 2 meters 10 centimeters" will shift the weight distribution towards higher values. The new weight distribution after receiving this message is naturally called posterior. Accordingly, you can express the information gained in this message as the difference between the entropies of the prior and posterior distributions:

Here, outside the curly braces, I indicate the index to iterate within the curly braces to obtain a list, and if there are two indices, it's a matrix.

It's important to note that there's no guarantee that this value will be positive. It's possible to have a joint distribution where the conditional distribution

has higher entropy (uncertainty) than the marginal distribution

. However, on average, for dependent random variables, the value of

is positive, namely, the expected value of this quantity is positive:

This quantity is naturally referred to as information about in

. Interestingly, it turns out to be symmetric with respect to the permutation of the pair

.

Definition 2.1: Mutual information of two random variables is

or

or

These are three equivalent definitions. is the entropy of a discrete distribution where the values are not individual numbers but pairs of numbers

. We will prove the equivalence below.

There is a visualization of the MI value:

The entropies of random variables correspond to circles – green and reddish, with areas equal to and

respectively, while the brown area of their intersection is precisely

Entropy as a measure

This visualization, on one hand, is merely an illustration emphasizing that entropy is a non-negative quantity and that is also a non-negative quantity, which is less than or equal to both

and

. However, on the other hand, there are interesting results that allow us to construct a measure space in which a random variable corresponds to a subset, the union of subsets corresponds to the direct product of random variables (i.e., the union forms a pair), and the measure of subsets is precisely the entropy of the corresponding random variables.

By the way, in the context of machine learning, the image with green and red circles looks like this.

We are given some features, and need to predict the signal (target). For example, we need to forecast the air temperature tomorrow at 12:00 PM in the center of London with an accuracy of 0.5°C. Features can include data on the temperature in London and its surroundings for the past 10 years, the current date, current values of temperature, pressure, humidity, wind, as well as the position of the Moon and other data. It's a typical situation where the amount of information in the features is vast, but the target variable is low in entropy. The entire set of data in the features can be called a random variable "w," and the target variable is "h." These random variables have mutual information, and the essence of the prediction task is precisely to find this information within the features.

Lets describe the first expression in more detail:

The notation is simply a shorthand for

and is called conditional entropy.

For independent random variables,

because, by the definition of independence, for any

Therefore, for independent random variables, the mutual information is equal to 0.

It turns out that the reverse is also true, meaning the statement is equivalent to the independence of random variables. However, a similar statement would not hold true for the correlation of two random variables.

To see the equivalence of the MI definitions, it's convenient to introduce the following notations:

— probabilities that height and weight are equal to

.

— probabilities that height equals

(marginal distribution of height).

— probabilities that weight equals

(marginal distribution of weight).

Let's assume that all these numbers are non-zero.

Firstly, notice that

Then, by making substitutions and simple transformations, we obtain the equivalence between the first and third definitions:

The consideration of cases where some of the probabilities are equal to zero lets leave for textbooks.

Task 2.1: Provide an example of random variables for which the correlation is zero, but MI is not zero.

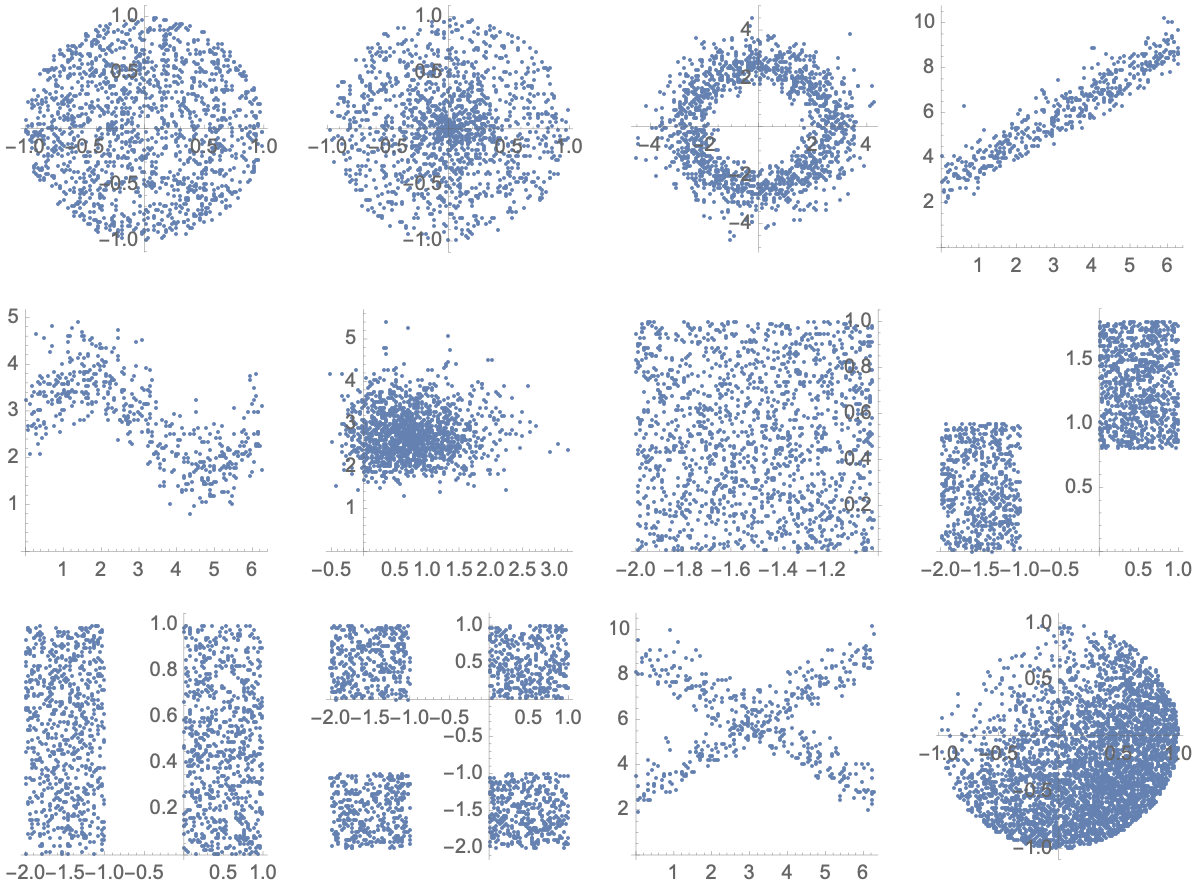

Task 2.2: Two random variables were measured multiple times, and points were plotted on a plane. Which pictures correspond to dependent random variables, and which ones correspond to independent random variables? For which of them is the correlation between x and y equal to 0?

On which ones of the 12 pictures are these two variables dependent?

Answers

Dependent: 3rd, 4th, 5th, 8th, 11th, 12th.

Correlation is zero for all except the 4th, 5th, 8th, and 12th.

Task 2.3: Calculate MI(w="number is divisible by a=6", h="number is divisible by b=15"). It is assumed that we randomly select one of the natural numbers, and all numbers are equally likely. To avoid dealing with the concept of a uniform distribution over natural numbers, consider that we are randomly selecting a number from the set {1, ..., 30}. Prove that if you take coprime numbers a and b, then MI=0.

Answer

We know the marginal distributions: R = {5/6, 1/6} and Q = {14/15, 1/15}. Furthermore, we know that the probability of being divisible by both 6 and 15 is 1/30. From this, we can derive the joint probability matrix:

We use the formula and get MI = 0.0311278.

Task 2.4: Prove that there is another equivalent definition

which means that MI measures how much less efficient the Huffman code for the stream of pairs , constructed under the assumption of independence of random variables (i.e., assuming that the joint distribution is equal to the product of marginals), is compared to the Huffman code constructed based on the actual joint distribution. It is measured in saved bits per symbol (symbol being an observation of the pair

).

We have been operating in the realm of discrete distributions so far. Transitioning to continuous variables is straightforward.

Let's recall task 1.7. Suppose we have real random variables , but we discretize them - the first one into bins of size

, and the second one into bins of size

. Substituting into the expression

an approximate formula instead of H (see task 1.7)

for the entropy of discretized continuous distribution, we will get that the first term contributes the second term contributes

and the third term (the subtracted one) contributes

and these three pieces will cancel each other out.

Thus, mutual information for continuous variables can be naturally defined as the limit of MI for discretized random variables as the bin sizes approach zero.

Definition 2.2: The mutual information of two continuous random variables is given by

Here, we use the H function from the definition 1.6 in part 1.

Task 2.5: Estimate for any forecasting task you have. Calculate

, and how close is this ratio to 1?

The estimation of MI between two random variables from a set of their measurements essentially boils down to calculating the joint distribution matrixfor discretized values of these random variables. The more bins in the discretization, the less inaccuracy associated with discretization, but the less statistics available for an accurate estimation of

.

The estimation of MI from observed measurements is a separate extensive topic, and we will return to it in problem 3.1.

Problem 2.6: Prove that for any strictly monotonic function, it holds true that

Answer

Let . Consider two formulas:

The first terms in both formulas are identical, but subtracted terms are equal since their physical interpretation is the average value of , where

are different random variables gotten from

when fixing the value of

or, equivalently, when fixing the value of

. The averaging is done over the distribution of values of

or

, which is not important.

Now, look at the integrals. The values and

are just identical, and in both formulas, you are averaging this quantity over the same probability measure

, which is just parameterized differently:

.

Definition 2.6: An information channel is a Markov chain of length 2, that defines the dependency between two related random variables, one of which is called the input, and the other is called the output. Often, when referring to an information channel, only the transition probability matrix is considered: , without specifying the input distribution. We will denote the input distribution as a vector

, and the distribution of output values as a vector

For discrete distributions, for each of the M possible inputs, we have a distribution over N possible output values. In essence, we have a matrix with M columns and N rows, where all numbers are non-negative, and the sum of numbers in each column equals 1.

Such matrices are called stochastic matrices, more precisely, left stochastic matrices (left stochastic matrix).

About left and right stochastic matrices:

In left stochastic matrices, the sum of numbers in each column equals 1, while in right stochastic matrices, it's the sum of numbers in each row that equals 1. There are also doubly stochastic matrices, where both columns and rows sum to 1. I have chosen the variant where the vector of input probabilities is considered as a column vector, and to obtain the vector of output probabilities, you perform the standard matrix multiplication: .

In English-language literature, it's common to consider the probability vector as a row vector, transpose the transition matrix (making it a right stochastic matrix), and perform matrix multiplication from the left: . I'm aware that this might be unfamiliar to many, so I've opted for the column vectors and left stochastic transition matrices.

Thus, an information channel is essentially a left stochastic matrix, denoted as T . By specifying the distribution at the input, you obtain the distribution

at the output simply by multiplying the matrix

by the vector

:

The capacityof an information channel

is defined as the maximum value of

achievable for some input distribution

at the input.

An information channel for transmitting bits, where 1 turns into 0 with a probability of and 0 turns into 1 with a probability of

, is defined by the matrix:

Task 2.7: Let's consider an information channel with noise where 1 transforms into 0 with a probability of and 0 transforms into 1 with a probability of

What is the value of

when the input is a random bit with distribution

? What input distribution maximizes

, and what is its value? In other words, what is the capacity of this channel?

Answer:

Here, it is convenient to use the formula:

We have:

Input distribution:

Output distribution:

Joint distribution, i.e., distribution over pairs (input, output):

And two conditional distributions:

Using the formula for MI, we get:

This formula is nothing but a measure of the convexity of the function (aka JSD, see below) on the interval

. In other words, it's the value of this function on the convex combination of the abscissas of the endpoints of this interval minus the convex combination of the values of this function at the endpoints (ordinates of the interval).

The figure shows the function . The KL interval is divided by point A in the ratio of

. The length of segment AB is the value of MI.

The maximum length of the segment is reached at a point where the derivativeequals the slope of the interval.

Generalizing problem 2.7 to the multidimensional case, we get:

Statement 2.1: The channel capacity is determined by the convexity measure of the function within a simplex. This simplex is the image of a stochastic transformation defined by the information channel. In particular, it's the maximum value of the difference between

from an affine (also known as convex) combination of the vertices of the simplex, the vertices of which are defined by the columns of the stochastic matrix, and the affine combination of the values of the function

at the vertices of the simplex.The maximum is taken over all possible weights that define the affine sum. In the definition below, the weights are denoted as

, and the vertices of the simplex are denoted as

.

Interestingly, the channel capacity can be viewed as a measure of the volume enclosed within the simplex, defined by the set of conditional distributions as its vertices.

Definition 2.4: Let there are several distributionsover the same set of values. The Jensen–Shannon Divergence (JSD) of this set with weights

is computed as

for:

so

The more the distributions differ from each other, the greater the JSD. The maximum

is the channel capacity of channel

Problem 2.8: Let there are two dependent normal random variableswith variances

and

, respectively. And let the first one is obtained from the second by adding independent normal noise with variance

:

Then

What is the mutual information

?

Answer

The entropies of and

are as follows:

The entropy of the pair of real random variables is:

The last expression is obtained by summing the entropies of the two-dimensional distribution of the pair(the entropy of independent pairs is the sum of their entropies). The entropy of the pair

is equal to the entropy of the pair

because a linear transformation of a vector of random variables using the matrix

results in a vector with the same entropy plus

(you can prove this!). The determinant of our linear transformation is equal to 1.

Explanation about the matrix: here, matrix A corresponds to the array {{1,0},{1,1}}:

and its determinant is 1. The value of the determinant is the factor by which the volume of a cube in

-dimensional space changes (in our case,

). The term

arises here for the same reason that the term

arises in the entropy formula in task 1.7 for discretized real random variables. End of explanation.

Finally, using the formula , we get:

Note 1: When the variance (power) of is equal to the variance (power) of the signal

, each measurement of

contains 0.5 bits of information about the value of

.

Note 2: In essence, this result is Shannon-Hartley theorem in a simplified form.

Task 2.9: Suppose there are two dependent random variables , where

follows an exponential distribution with parameter

, and

. What is the mutual information

? In other words, how much information is preserved when rounding an exponential random variable to a certain decimal place? Compare

and

Answer

Understanding task: The second random variable is uniquely determined by the first one. This means that the mutual information (MI) is simply equal to the entropy of the second variable. The distribution of the second random variable is a geometric progression with , and its entropy is

(see task 1.4).

Task 2.10: Let's consider two dependent random variables, where

follows a beta distribution with parameters

, and

is sampled from a binomial distribution with parameters

. What is the mutual information

? In other words, how many bits of information about the true click-through rate (CTR) of an advertisement can be extracted on average from the click statistics over k impressions?

Task 2.11: Two dependent random variablesare generated as follows – first, a random variable

is sampled from an exponential distribution with mean

, and then two Poisson random variables

are sampled with a parameter

. What is the mutual information

? One of the possible interpretations of this problem is as follows: what is the mutual information between the number of sales in one week and the number of sales in another week for a certain unknown product?

The last tasks are an example of how dependencies between random variables can be modeled using graphical probabilistic models, particularly Bayesian networks.

Task 2.12: The random variable is gotten from independent random variables

with a distribution of

using the formula:

The constant weightsare unknown to you, but your prior knowledge about them is that they were independently sampled from

. You decide to estimate the weights

using classical regression methods and make a prediction:

How will the mean squared error and the value of

change with an increase in the size of the training dataset? Analyze the answer for the case where

. .

Part 3 – ML & Mutual Information: Basics of ML in the context of information theory.