Using Xray as a VPN

Since I'm a fan of self-hosting, I have a home infrastructure:

Orange Pi - a media server;

Synology - a file dump;

Neptune 4 - a 3D printer with a web interface and a camera feed.

I'd like secure access to all of this from outside, from my phone and PC, along with internet access through a server outside the RF. I used to use OpenVPN for this, but it's no longer reliable. So I started studying the documentation for an excellent tool from our Chinese comrades - Xray!

What you'll need:

A server with an external IP that fronts the infrastructure. In my case, it's the Orange Pi. Hereinafter - Bridge

The server you want to access. Hereinafter - Server

A server outside the RF for internet access. Hereinafter - Proxy

A client of your choice. Hereinafter - Client

Client and servers on Linux - Xray-core, which can be installed with the official Xray installation script

Client for Android - v2rayNG

More clients can be found in the Xray-core repository

Let's take the VLESS-TCP-XTLS-Vision-REALITY configuration file as a base and work through the Xray documentation.

Routing is done on the Client. For example, if the Client accesses the xray.com domain, the traffic is routed to the Bridge; all other connections go to the Proxy. The Bridge, in turn, forwards the traffic to the Server if the Client requested server.xray.com.
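To make the two-hop decision concrete, here is a minimal Python sketch of the logic described above. It is only a model of the idea, not Xray's actual matcher or configuration. The function names are hypothetical, and two things are assumed: that a domain rule also covers subdomains, and that the Bridge handles anything other than server.xray.com itself (labeled `"bridge-local"` here).

```python
def matches_domain(host: str, domain: str) -> bool:
    """Return True if host is the domain itself or one of its subdomains (assumed rule)."""
    host = host.lower().rstrip(".")
    domain = domain.lower()
    return host == domain or host.endswith("." + domain)


def client_route(host: str) -> str:
    """First hop, decided on the Client: xray.com goes to the Bridge, the rest to the Proxy."""
    return "bridge" if matches_domain(host, "xray.com") else "proxy"


def bridge_route(host: str) -> str:
    """Second hop, decided on the Bridge.

    "bridge-local" is a placeholder for whatever the Bridge does with
    traffic that is not meant for the Server (an assumption of this sketch).
    """
    return "server" if matches_domain(host, "server.xray.com") else "bridge-local"


def path(host: str) -> list[str]:
    """List the hops a connection to host would take in this model."""
    hops = ["client", client_route(host)]
    if hops[-1] == "bridge":
        hops.append(bridge_route(host))
    return hops


if __name__ == "__main__":
    for name in ("server.xray.com", "xray.com", "example.org"):
        print(name, "->", " -> ".join(path(name)))
```

Note that a plain suffix check without the leading dot would also match unrelated hosts such as notxray.com, which is why the sketch compares against `"." + domain`.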

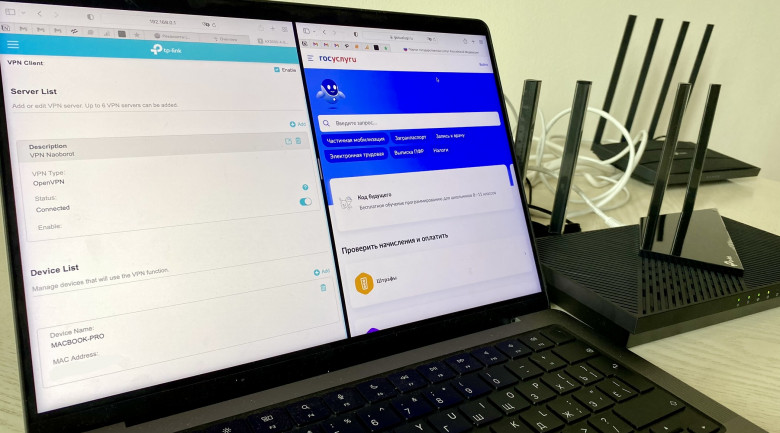

It looks like this: