Introduction

Network architecture at hyperscalers is subject to constant innovation and keeps evolving to meet demand. Network operators constantly experiment with solutions and find new ways to keep their networks reliable and cost effective. Hyperscalers periodically publish their findings and innovations in a variety of scientific and technical groups.

The purpose of this article is to summarize the information about how hyperscalers design and manage networks. The goal here is to help connect the dots and to dissect and digest the data from a variety of sources, including my personal experience working with hyperscalers.

DISCLAIMER: All information in this article is acquired from public resources. Due to the constant change in architecture at pretty much any hyperscaler, it is expected that some of the data in this document no longer reflects the current state at the mentioned network operators as of June 2021. As an example, here is the evolution of Google’s network components and projects as it has been outlined:

2006 - B2

2008 - Watchtower, Freedome

2010 - Onix, BwE

2011 - B4

2012 - Open Optical Line System, Jupiter

2014 - OpenConfig, Andromeda

2017 - BBR

2018 - Espresso

2019 - Private Sub-sea Cables

Places in Network: Data Center

The design space for large, multipath datacenter networks is vast and complex, and no design fits all purposes. Network architects must trade off many criteria to design cost effective, reliable, and maintainable networks. Let’s take a look at a common approach taken by several large scale network operators and see what can be easily adopted.

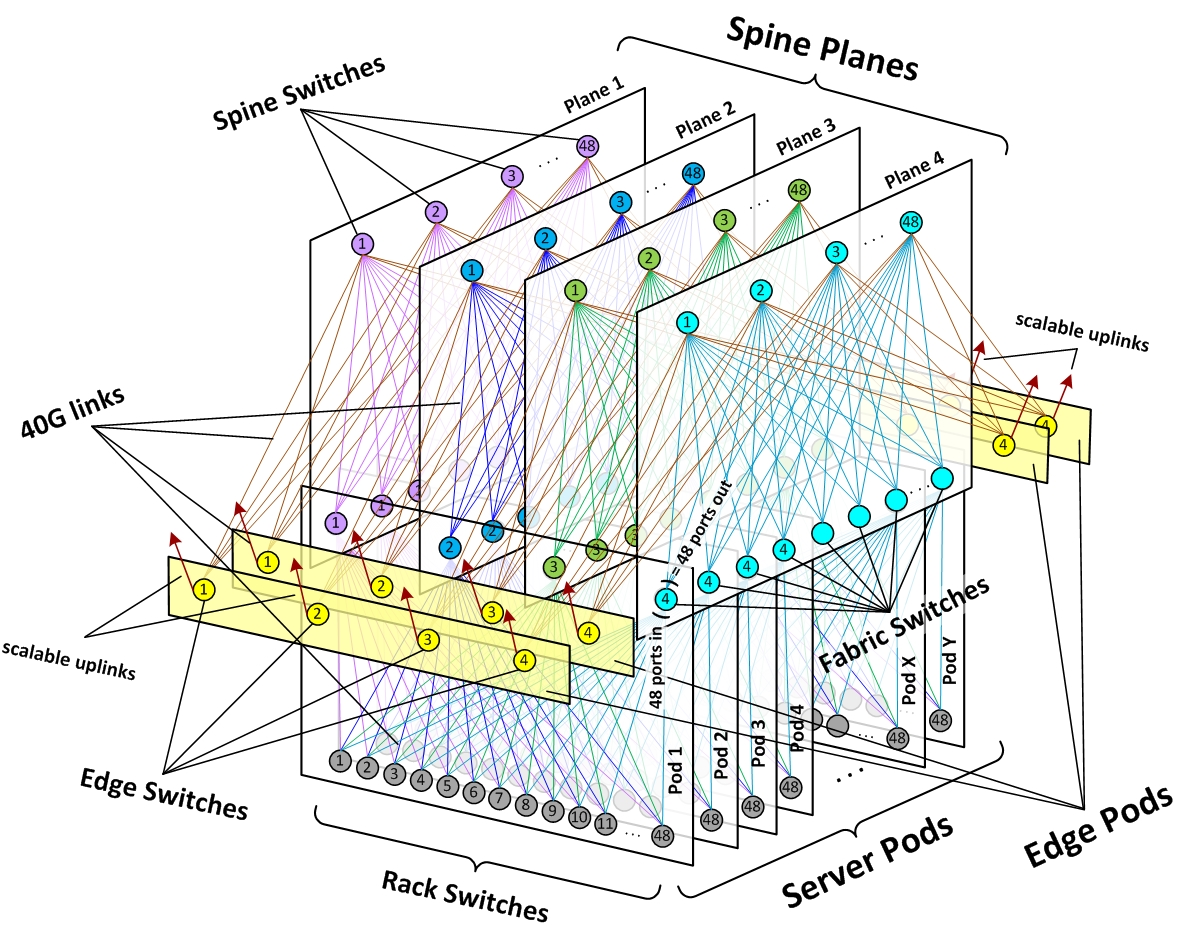

Topology

Clos has been in the field for quite some time. It is now used in hyperscale DCs, but it comes from TDM networks for classic phone services. Other topologies such as Xpander, FatClique, Jellyfish and Dragonfly are the subject of various research efforts and even have some advantages over Clos, but do not seem to be widely deployed. In Clos, the size of a cluster is limited by the port density of the cluster switch. Each transition to the next interface speed does not reach the same very high port densities as quickly, so inter-cluster connectivity is oversubscribed, with much less bandwidth available between the clusters than within them.
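To make the port-density limit concrete, here is a minimal sizing sketch. It assumes a two-tier leaf-spine (folded Clos) built from identical switches with equal port speeds; the function name, the `radix` parameter and the example numbers are illustrative assumptions and do not come from any operator mentioned here.

```python
def leaf_spine_capacity(radix: int, uplinks_per_leaf: int) -> dict:
    """Size a two-tier leaf-spine fabric built from identical switches.

    radix            -- ports per switch (assumed equal for leaves and spines)
    uplinks_per_leaf -- leaf ports facing the spines, one link per spine
    """
    if not 0 < uplinks_per_leaf < radix:
        raise ValueError("uplinks_per_leaf must be between 1 and radix - 1")
    downlinks_per_leaf = radix - uplinks_per_leaf
    return {
        "spines": uplinks_per_leaf,      # one uplink to every spine
        "max_leaves": radix,             # each spine port connects one leaf
        "max_hosts": radix * downlinks_per_leaf,
        # Host-facing vs. spine-facing bandwidth, assuming equal port speeds.
        "oversubscription": downlinks_per_leaf / uplinks_per_leaf,
    }


non_blocking = leaf_spine_capacity(radix=64, uplinks_per_leaf=32)
oversubscribed = leaf_spine_capacity(radix=64, uplinks_per_leaf=16)
print(non_blocking)
print(oversubscribed)
```

With the same switch, trading uplinks for downlinks raises the host count but also the oversubscription ratio, which is the trade-off described above.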

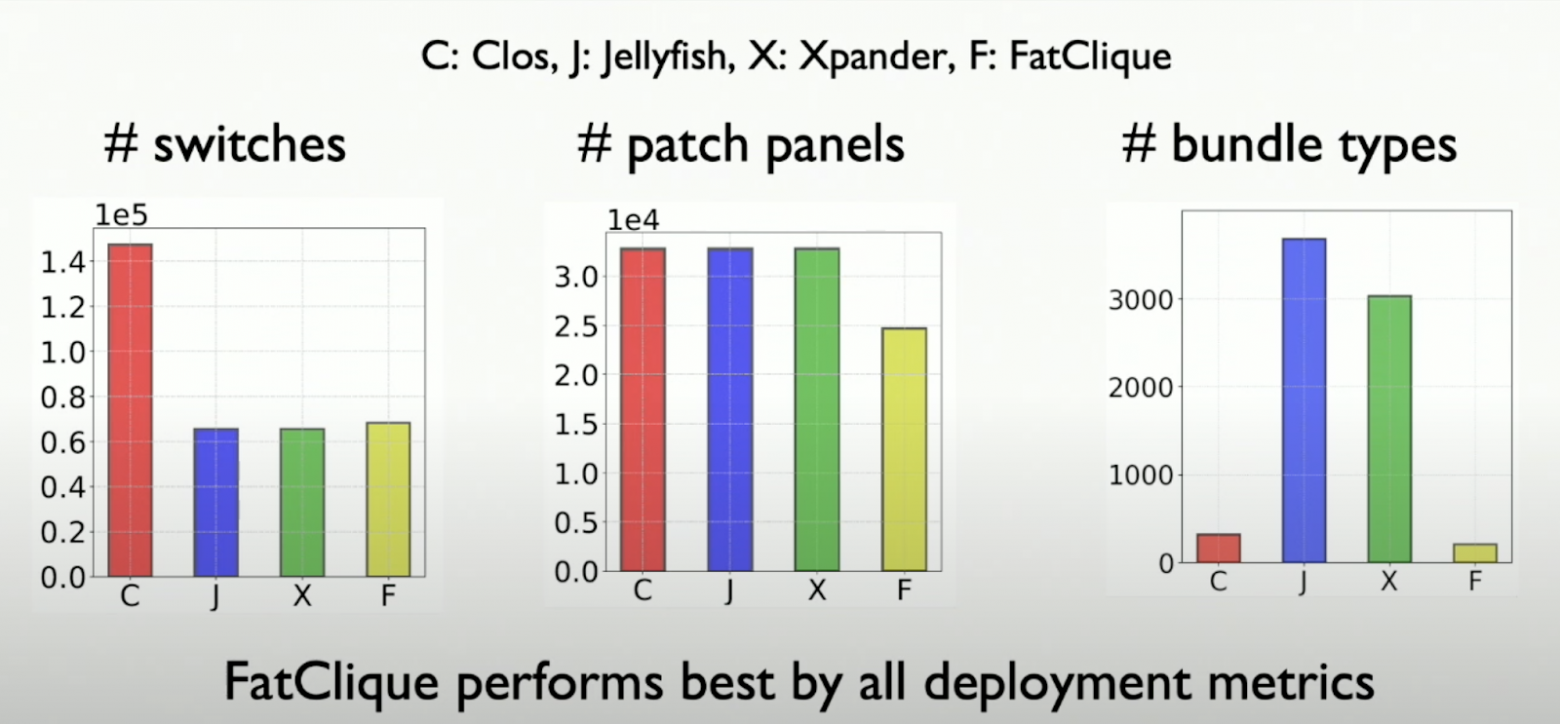

Based on the examples, it seems that Clos is used by at least a few hyperscalers, but why Clos, and what about the alternatives? To truly explore alternative topologies, the reader is invited to evaluate them with the Condor framework: https://research.google/pubs/pub43839/ and to take a look at a paper explaining the lifecycle and management complexity of DC topologies: https://par.nsf.gov/servlets/purl/10095405

Efficient topologies do exist at small scales. Both RRG and DRing provide significant improvements over leaf-spine in many scenarios. Flat networks (beyond expander graphs) such as DRing are worth considering for small- and moderate-scale DCs. These topologies might not work well at large scale but can be efficient at small scale. Some details of running a spineless DC are described in this document.

To summarise the paper, what does deployment complexity look like for each topology type? Packing switches into racks and interconnecting them within and between racks is complex, and inter-rack links are the main contributor to that complexity. Expansion depends on switch form factor and spare capacity: it is hard to move cable bundles, so links are rewired on patch panels, and here Jellyfish is better. Clos, on the other hand, is better in terms of cable bundle types (by capacity and length) and patch panels. Hence, for deployment, Clos is better.

Routing complexity caused by the irregularities of a topology also factors in: Jellyfish is a random graph and highly irregular. Intra-rack links are cheap, but Jellyfish does not have this property - it uses more patch panels, hence it is complex.

Jellyfish has an advantage during expansion, when the network is required to maintain at least 75% of its capacity, because it is usually over-provisioned.

All in all - the complexity of the following remains an open topic:

Control plane

Network Debuggability

Practical routing (FatClique requires non-shortest path routing)

Network latency

Blast Radius in case of failure

Routing Protocol

Since the initial publication of RFC7938, BGP has been the default option for large data center fabrics and is the de facto standard. It leverages the performance and scalability of a distributed control plane for convergence, while offering tight and granular routing propagation management.

There is an argument that with a proper design, scale is not the reason to use BGP over OSPF.

If you are running a small Clos network and don’t have IPv6 or EVPN, OSPF might work for you as more straightforward and generally faster, as was the common practice before the advent of BGP in the data center. No matter what, you need to understand the choices that you are making and understand the tradeoffs.

If an IGP is used, it is presumed that IGP flooding is taken care of. But how can it be done?

The rise of Cloud computing has changed the architecture, design and scaling requirements of IP and MPLS networks - from flat, partial mesh topologies to hierarchical CLOS networks.

As mentioned earlier, IGP scaling limitations led to the adoption of BGP as the de facto protocol. Some famous quotes:

John Moy OSPF Scaling Recommendations:

50 routers per area

Dave Katz – in “IS-IS OSPF Comparative Anatomy”: https://www.youtube.com/watch?v=qbNW-cndt70&ab_channel=TeamNANOG

”Don’t put zillions of routes in your IGP”

IGP scaling limitations and comparison to BGP as a routing protocol adoption in massive scale DC: http://www.columbia.edu/~ta2510/pubs/hotnets2019bgpPerformance.pdf

In other words, in a dense topology, the flooding algorithm that is the heart of conventional link state routing protocols causes a great deal of redundant messaging. This is exacerbated by scale. While the protocol can survive this combination, the redundant messaging is unnecessary overhead and delays convergence. Thus, the problem is to provide routing in dense, scalable topologies with rapid convergence. Unnecessary, redundant flooding is the biggest scale inhibitor in IGPs. However, BGP's loss of topology detail reduces the value of IGP-based forwarding mechanisms (e.g. LFA).

BGP adoption in hyperscale DCs is expanding through the development of BGP applications for policy control with tooling such as BIRD, Quagga and GoBGP. Besides, BGP can be configured simply from templates when it is automated and scripted. BGP scale is well known, and usage in a symmetric topology makes it almost trivial. An ECMP leaf-spine design reduces the convergence wavefront to the Clos stage diameter. However, BGP cannot really be used as an IGP in an arbitrary topology without an almost impossible amount of configuration; it is un-scriptable with irregular topologies.
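The point about templates can be illustrated with a small sketch that renders a leaf's EBGP configuration from its index. The ASN scheme (one private ASN per leaf, one shared by the spines) follows the general idea of RFC7938; the concrete ASN values, the documentation-range addresses, the helper name `render_leaf` and the FRR/Quagga-like syntax are all assumptions made for illustration, not a recommended configuration.

```python
from string import Template

# Illustrative, FRR/Quagga-like syntax; adapt to the actual network OS.
LEAF_TEMPLATE = Template(
    "router bgp $asn\n"
    " bgp router-id $router_id\n"
    "$neighbors"
)


def render_leaf(index: int, spine_ips: list[str],
                spine_asn: int = 64512, leaf_asn_base: int = 65000) -> str:
    """Render the BGP stanza for leaf number `index` (hypothetical scheme)."""
    neighbors = "".join(
        f" neighbor {ip} remote-as {spine_asn}\n" for ip in spine_ips
    )
    return LEAF_TEMPLATE.substitute(
        asn=leaf_asn_base + index,        # unique private ASN per leaf
        router_id=f"192.0.2.{index}",     # documentation range, assumed
        neighbors=neighbors,
    )


config = render_leaf(1, ["198.51.100.1", "198.51.100.2"])
print(config)
```

Because a symmetric fabric differs between leaves only by index, the whole configuration reduces to a loop over this function; an irregular topology would need per-device neighbor data instead.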

Alternatives to BGP

RIFT is described in https://datatracker.ietf.org/doc/draft-przygienda-rift/ and is being promoted at the IETF, NANOG and other meetings. The main idea of RIFT is to distribute link state information in the South-North direction, but only distance vector information in the North-South direction. It is positioned against BGP as used per RFC7938, which relies on a number of modifications and hacks:

AS Numbering hacks

EBGP everywhere

ADD_PATH

Timer hacks

4byte ASN

Allow_AS

RIFT merges the benefits of BGP and of IGPs (distance vector and link state), doing auto-discovery and assisting in config management. At this point it’s very hard to predict how well RIFT will scale since it’s such a new protocol, but presumably well. Just recently this book was published explaining some aspects of RIFT: https://www.juniper.net/documentation/en_US/day-one-books/DO_RIFT.pdf

BGP in the DC is valid and right now there’s no better production-ready alternative. That said, no solution is perfect. The industry as a whole has embarked on a search for better alternatives.

We can certainly expect existing Leaf-Spine DC deployments to continue with BGP indefinitely. At the same time, we are trying to evolve technology and modify the link state IGPs so that we can provide a better alternative in the future.

LSVR and OpenFabric can leverage ISIS (link state) protocols to route in the underlay. Link state protocols give us topology information and enable functions such as traffic engineering which are simply not possible with BGP. LSVR tries to preserve that link state capability but leverages BGP route reflectors to address the flooding issues.

Lastly, if your networking gear runs under an OS that allows it, you can install any routing agent and integrate it with the data plane via an SDK. A number of operators use this approach; some public examples:

FB OpenR: https://code.fb.com/connectivity/open-r-open-routing-for-modern-networks/

Qrator-ExaBGP: https://www.arista.com/assets/data/pdf/CaseStudies/Qrator_CaseStudy.pdf

Another approach is to remove the routing protocol altogether, as is done in ONF Trellis: classic SDN control with ONOS directly programs the ASIC forwarding tables in bare metal switches with merchant silicon. Trellis is currently deployed in production networks by at least one Tier-1 operator.

The topic is complex, and it is advisable to research it and make the decision based on the particular use case. But if you are still undecided - BGP and ISIS are 30 years old and have been used in a lot of use cases. So why not use them?

If the goal is to fix the Link State info distribution - let’s just fix LS protocols, so they can flood better. The IS-IS spine-leaf extension https://tools.ietf.org/html/draft-shen-isis-spine-leaf-ext-06 allows the spine nodes to have all the topology and routing information, while keeping the leaf nodes free of topology information other than the default gateway routing information. Leaf nodes do not even need to run a Shortest Path First (SPF) calculation since they have no topology information. More about the advantages of IS-IS will be discussed later in this document.

Another key to enabling the solution is the additional scalability in the IGP. Specifically:

Reduce the IGP flooding by an order of magnitude - flood on a small subset of the full data center, elect an area leader which computes the flooding topology for the area, distribute flooding topology as part of link state database

Improve the abstraction capability of IS-IS, so that areas are more opaque, improving overall scalability. This flooding topology is encoded into and distributed as part of the normal link state database. Nodes within the dense topology would only flood on the flooding topology. On links outside of the normal flooding topology, normal database synchronization mechanisms (i.e., OSPF database exchange, IS-IS CSNPs) would apply, but flooding would not

Increase IS-IS abstraction levels from two levels to eight. PDU/Hello Header has reserved bits for 6 more levels of abstraction – allowing tremendous scalability

The details are described in draft-ietf-lsr-dynamic-flooding & draft-li-lsr-isis-area-proxy.
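The effect of flooding on a reduced topology can be sketched with a small simulation. It assumes a full leaf-spine graph, a simplified flooding model (each node forwards the first copy it receives on every link except the one it arrived on), and an arbitrary reduction rule where each leaf floods towards only two spines. The drafts leave the choice of algorithm to the area leader, so the rule and all names below are assumptions for illustration.

```python
from collections import deque


def build(leaves: int, spines: int, spines_per_leaf: int) -> dict:
    """Leaf-spine adjacency where each leaf uses `spines_per_leaf` spines."""
    adj = {f"leaf{i}": set() for i in range(leaves)}
    adj.update({f"spine{j}": set() for j in range(spines)})
    for i in range(leaves):
        for k in range(spines_per_leaf):
            spine = f"spine{(i + k) % spines}"
            adj[f"leaf{i}"].add(spine)
            adj[spine].add(f"leaf{i}")
    return adj


def flood(adj: dict, origin: str) -> tuple:
    """Return (copies_sent, nodes_reached) for one flooded update."""
    seen = {origin}
    queue = deque([(origin, None)])
    sent = 0
    while queue:
        node, came_from = queue.popleft()
        for neighbor in adj[node]:
            if neighbor == came_from:
                continue              # never send back to the sender
            sent += 1
            if neighbor not in seen:  # only the first copy is re-flooded
                seen.add(neighbor)
                queue.append((neighbor, node))
    return sent, len(seen)


full = build(leaves=8, spines=4, spines_per_leaf=4)    # every physical link
sparse = build(leaves=8, spines=4, spines_per_leaf=2)  # flooding topology
print("full  :", flood(full, "leaf0"))
print("sparse:", flood(sparse, "leaf0"))
```

Both runs reach all 12 nodes, but the reduced flooding topology sends far fewer copies while still giving every leaf two flooding neighbors for redundancy.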

Supernode

Naturally, the latest advances in IGP lead to some interesting concepts such as Supernode.

In this paper Google discusses its approach to building supernodes for their B4 network: https://www2.cs.duke.edu/courses/fall18/compsci514/readings/b4after-sigcomm18.pdf

Routing “supernodes” are built from leaf/spine switches. The key is for scaling “inside” the supernode to have minimal impact on total network scaling. The benefit extends beyond avoiding multichassis system design – complete asset fungibility and best of breed “line card” selection with rolling upgradeability.

To put it in two sentences, a supernode is a two-layer topology abstraction: at the bottom layer, we introduce a “supernode” fabric, a standard two-layer Clos network built from merchant silicon switch chips. At the top layer, we loosely connect multiple supernodes into a full mesh topology.

IPv6

How many of us said before “we will never run out of 10/8 network”? Save yourself time dealing with DHCP and ARP issues by making a step forward and not a step sideways - switch to IPv6 underlay infra from the start.

Multitenancy is usually a requirement, i.e. virtualisation or segmentation of the network. There is no requirement to do that on the level of network fabric as it is a task for hosts. This approach simplifies the goal of scalability. Thanks to IPv6 and the huge address space, there is no need to use duplicate addresses in the internal infra - each address is unique.

Filtering and segmentation are done on the hosts, so there is no need to create virtual networking entities in the DC network. How to do this: allocate a /24 (IPv4) and a /64 (IPv6) per ToR. Why /64? For routing table scalability and ECMPv6.
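A per-ToR addressing plan of this kind is easy to generate with Python's standard `ipaddress` module. The sketch below is only an illustration: the parent blocks are private and documentation ranges, and the `tor<N>` naming is a hypothetical convention.

```python
import ipaddress
from itertools import islice


def tor_prefixes(v4_block: str, v6_block: str, tor_count: int) -> list:
    """Carve one IPv4 /24 and one IPv6 /64 per ToR out of parent blocks."""
    v4_subnets = ipaddress.ip_network(v4_block).subnets(new_prefix=24)
    v6_subnets = ipaddress.ip_network(v6_block).subnets(new_prefix=64)
    pairs = list(islice(zip(v4_subnets, v6_subnets), tor_count))
    if len(pairs) < tor_count:
        raise ValueError("parent blocks are too small for this many ToRs")
    return [(f"tor{i}", v4, v6) for i, (v4, v6) in enumerate(pairs)]


plan = tor_prefixes("10.0.0.0/16", "2001:db8::/48", tor_count=3)
for name, v4, v6 in plan:
    print(name, v4, v6)
```

Each ToR then advertises exactly two aggregate prefixes, which keeps the fabric routing tables proportional to the number of racks rather than the number of hosts.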

RFC5549 for v4 over v6: advertising IPv4 unicast NLRI with IPv6 next-hops over IPv6 peering sessions, supported for IPv4 unicast, IPv4 Labeled Unicast, and IPv4 VPN address and sub-address families (1/1, 1/4, 1/128 respectively).

Getting lightweight

It is very important to get rid of anything which is not needed in the network. This makes life easier and significantly expands the choice of networking equipment vendors. If the stack of applications is controlled and can scale horizontally, this usually implies that there is service discovery and machine monitoring in a given cluster. This means that if one machine is down, the replacement does not have to start with the same IP address. As a consequence, L2 is not needed, neither real nor emulated.

There is also no such concept as IP-address migration, which is usually required for VM mobility. If this is a requirement - it can be supported without network infra change, but rather via overlay networks. This way there are not that many dynamic changes in routing tables for underlay.

Multicast in DC fabric

Another thing to consider is how multicast fits in a DC Leaf-Spine fabric. Traditional DC fabric chips, such as Tomahawk3, are specifically built for traditional DC functionality, hence we get very attractive pricing, port capacity and density. This makes vendors prioritise the supported features and scale, leaving some functionality seemingly unsuited to the scope of a given box. One such example is the TH3 128x100G, a de facto standard for a modern DC, which is not suitable for ST2110 or ST2022-6 heavy duty multicast use cases.

The limitations should be specified by every individual vendor, but things worth considering are the scale of the outgoing interface set per (S,G), which limits how many replication scenarios can be described as the number of leafs grows. Another is the multicast throughput and the L2/L3 multicast tables. All in all, it would be wise to consult with an SE from your favorite vendor about the correct choice of the chip. Please don’t try to put all possible functionality on a purpose-built system and complain later about the performance.

Multitenancy

Sometimes there is a need to provision VPN services within a DC. There are also needs to keep different data on diverse paths for end to end service provisioning. For such purposes EVPN VXLAN and EVPN MPLS come into play. The complexity lies in the requirement to provide a handoff between traditional inter-DC MPLS VPN and VXLAN environments within a DC. Inter-DC VPN is defined in RFC4364 Section 10: VRF-to-VRF connections at the ASBR, EBGP redistribution of VPN-IPv4 routes from AS to AS, and multi-hop EBGP VPNv4 peers (RRs). EVPN VXLAN to MPLS handoff is possible either as L3 EVPN VXLAN to L3 EVPN MPLS or as L3 EVPN VXLAN to RFC4364 VPN MPLS.

When the conversation turns to VXLAN, it’s important to consider whether you need support for features like suppressing flooding of unknown unicast packets, limiting broadcast to ARP, and storm control within the VXLAN L2 domain on the ingress VTEP. Check out this post on VXLAN security considerations: https://eos.arista.com/vxlan-security/

Cost

A big chunk of the cost is the interconnect. In order to minimise it, optimise the distance between the networking components by designing the racks and cable management accordingly. Result - a switch from SR optics to 3-meter DAC cables.

Automation

Automation is simply a must: managing this number of devices and interconnections manually is not physically possible.

Challenges

Building a redundant network is expensive by itself, but redundancy reduces the cost of a component failure, so cost is not a main argument against Clos for large scale deployments.

How to ingest traffic into a CLOS topology?

Three major approaches are seen across the industry:

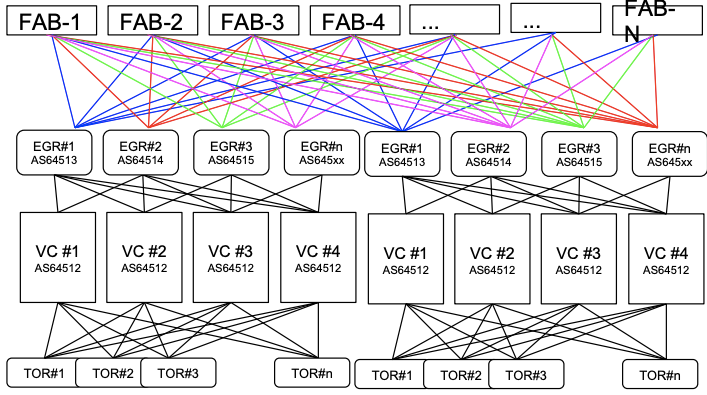

Data center routers connected directly to the top of the Fabric. This works good on small and medium scale but requires connections to all top nodes in the top layer of Fabric. As this approach is pretty old, this implied a challenge due to the cost of a port of feature reach Data Center Edge router. Today this is not really a problem with second and third generations of merchant silicon such as Broadcom’s Jericho2 and Jericho2C

Second approach - connect to CLOS from the bottom via Edge POD. This approach gives some advantage due to the nature of the CLOS itself - traffic is distributed across all Fabric layers in just two steps

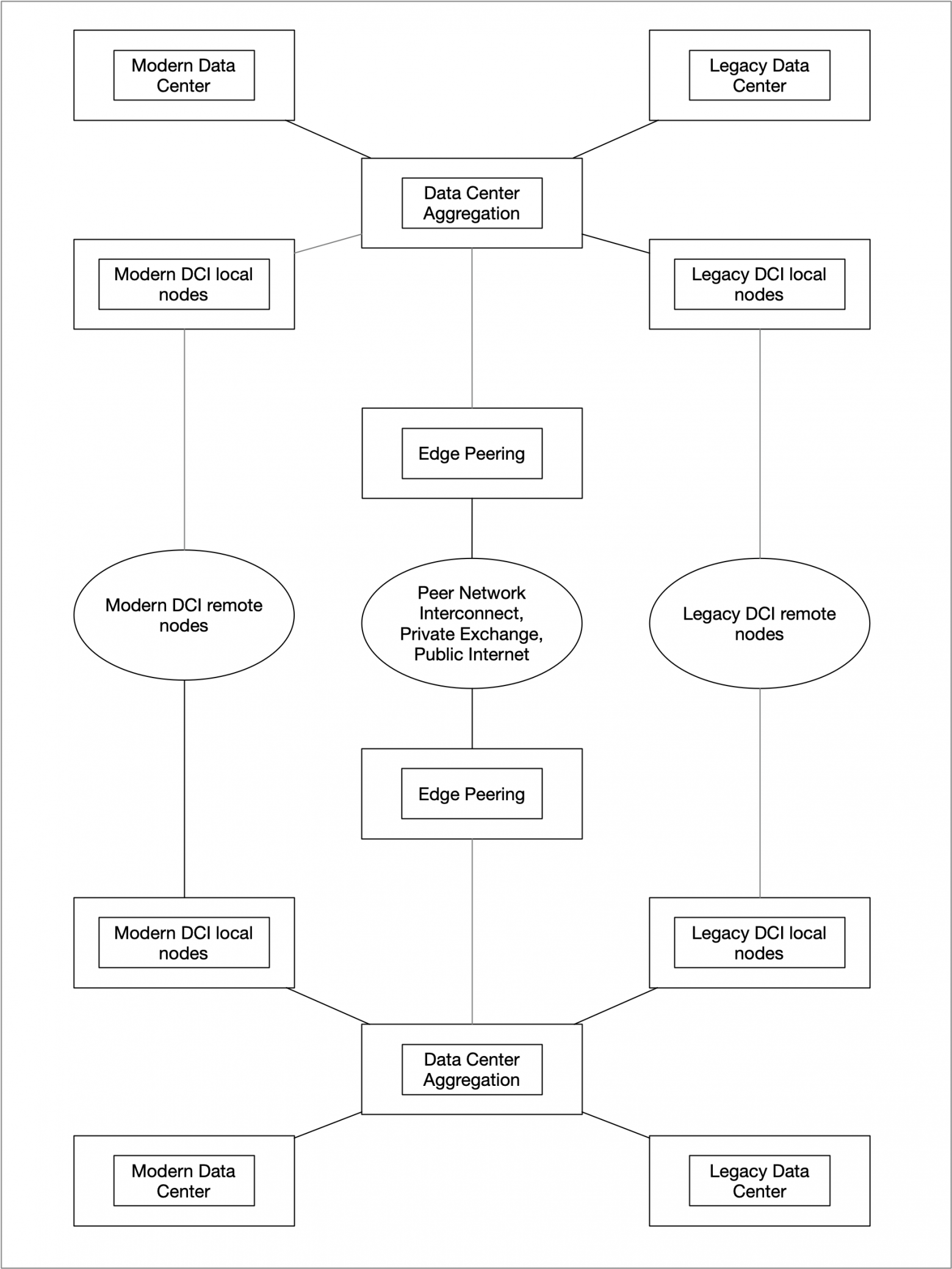

Some extremely large network operators went even further and build a distributed topology interconnecting multiple datacenters in a given region

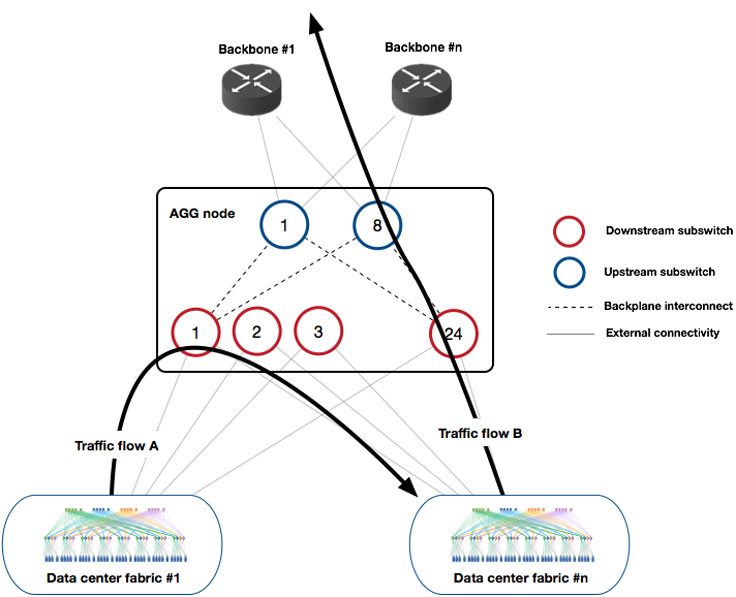

Each Fabric Aggregator node implements a 2-layer cross-connect architecture. Each layer serves a different purpose:

The downstream layer is responsible for switching regional traffic (fabric to fabric inside the same region, designated as east/west).

The upstream layer is responsible for switching traffic to and from other regions (north/south direction). The main purpose of this layer is to compress the interconnects with Facebook’s backbone network.

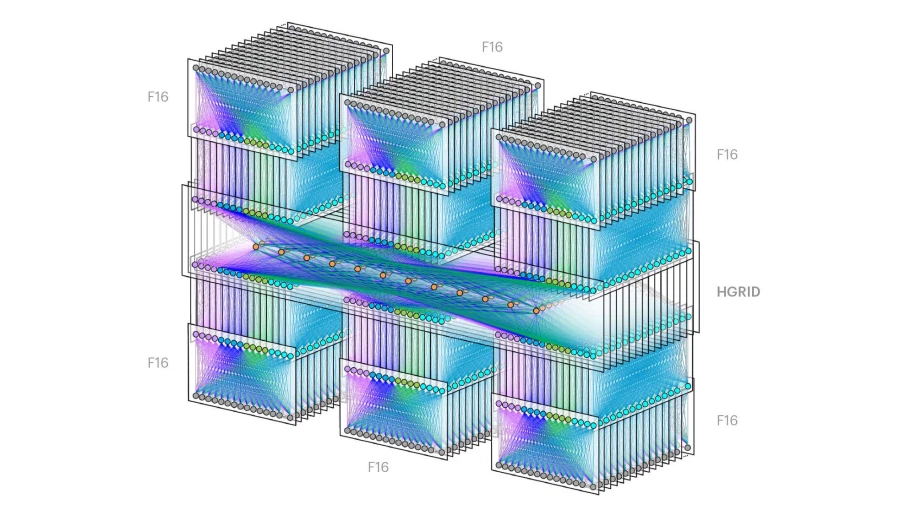

The downstream and upstream layers can contain a quasi-arbitrary number of downstream sub-switches and upstream sub switches. Separating the solution into two distinct layers allows the East-West and North-South capacities to grow independently by adding more sub switches as traffic demands change. Fabric edge PODs are replaced with the direct connectivity between fabric Spine switches and HGRID. This flattens out the regional network for E-W traffic and scales the uplink bandwidth.

Places in Network: Data Center Interconnect

Traditional WANs are designed as sparse, uniplanar, polygonal lattices. This is dictated by the cost of long-haul links and router ports, and by concerns about IGP scale. Routers are scaled vertically, which allows for a single control and management point. RSVP-TE scaling concerns are also there due to soft, dense midpoint state and O(n^2) tunnel scale. Overall, the same design pattern has been in use for 20+ years.

Private WANs are increasingly important to the operation of enterprises, telecoms, and cloud providers. In some cases, private WANs are larger and growing faster than connectivity to the public Internet. DCI/WAN networks have common characteristics:
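The quadratic tunnel scale is simple arithmetic: RSVP-TE LSPs are unidirectional, so a full mesh between n edge routers needs n(n-1) of them. The sketch below only illustrates that growth; the `paths_per_pair` parameter is a hypothetical knob for deployments that signal several LSPs per router pair.

```python
def full_mesh_tunnels(routers: int, paths_per_pair: int = 1) -> int:
    """Unidirectional LSPs needed for a full mesh between `routers` nodes."""
    return routers * (routers - 1) * paths_per_pair


for n in (10, 50, 200):
    print(f"{n:>4} routers -> {full_mesh_tunnels(n):>6} LSPs")
```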

massive bandwidth requirements deployed to a modest number of sites,

elastic traffic demand that seeks to maximize average bandwidth

full control over the edge servers and network, which enables rate limiting and demand measurement at the edge

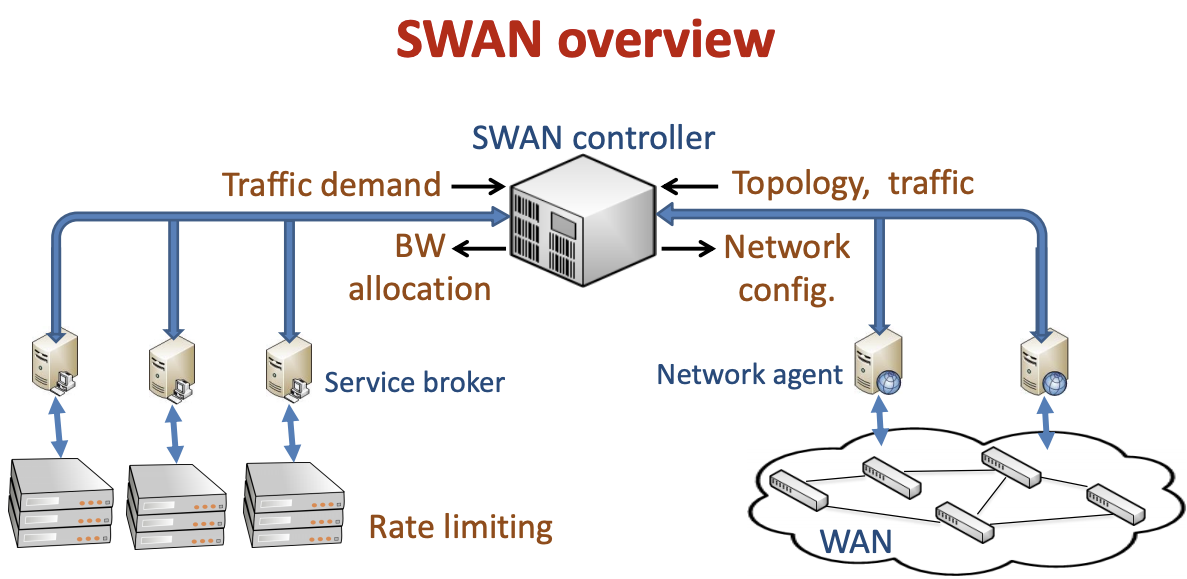

Many existing production traffic engineering solutions use MPLS-TE: MPLS for the data plane, OSPF/IS-IS/iBGP to distribute the state and RSVP-TE to establish the paths. Since each site independently establishes paths with no central coordination, in practice, the resulting traffic distribution is both suboptimal and non-deterministic. Full-mesh operational complexity and the non-ECMP nature of a circuit do not play in favour of RSVP-TE either.

An alternative solution for TE is OpenFlow, but the fear is that, among other things, it would take way too much time for the centralized control plane to send updates to the switches and have them installed in HW.

What do we see being reported as a technology stack used in the field by hyperscalers? It appears this has been working in production for some time now; let's see how it evolves. The things to improve: minimize state change in the network, and get rid of RSVP-TE and LDP. Introduce automatic local repair with LFA, and focus on Segment Routing. Run some form of IGP for setting up connectivity. Utilise BGP-LU, defined in IETF’s RFC 3107 and described with examples in (https://eos.arista.com/carrying-label-information-in-bgp-4/), for traffic steering, and BGP-LS with SR extensions to feed topology information to bandwidth aggregators. More SR-based integration is expected in the future, such as BGP peering SID for Egress TE: https://tools.ietf.org/html/draft-ietf-idr-bgpls-segment-routing-epe-19

The way people do routing is with at least a two-layer route hierarchy. Traffic steering routes identify the flows for engineering; they are plain BGP unicast routes that resolve to host addresses (/32 and /128) from a reserved block which is not routed. Tunnel routes resolve those host addresses and are programmed via BGP-LU. The assumption behind such a design is that there will be many more traffic steering routes than tunnel routes, and that tunnel routes will change much more often than steering routes. Traffic steering is achieved by changing a few BGP-LU routes, and TE evaluation happens on a regular cadence.

Traffic with a particular destination is routed via a static routing entry which points that traffic to a Next Hop Group. Based on the traffic class, the packet is encapsulated in MPLS, assigned a label, and given a next-hop IP address resolvable via a specific interface, either directly connected to the node or leading to the next-hop device on a multi-hop path to the ultimate switch on the path. For a direct path, label Null0 is used. For a TE path, the Segment Routing approach is used - a stack of labels is utilised along the whole path with consecutive PUSH, SWAP and POP actions accordingly.

Depending on link utilisation, a different number of port-channels could be utilised between sites; a specific label will always correspond to a specific interface.
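The two-layer hierarchy can be sketched as a recursive lookup: a steering route resolves to a reserved host address, and that address resolves to a label stack learned via BGP-LU. All prefixes, the reserved address and the label values below are made-up examples (documentation ranges), and the dictionaries merely stand in for real RIB structures.

```python
import ipaddress

# Layer 1: steering routes (plain BGP unicast) -> reserved, non-routed host address.
STEERING = {
    ipaddress.ip_network("203.0.113.0/24"): ipaddress.ip_address("192.0.2.1"),
    ipaddress.ip_network("198.51.100.0/24"): ipaddress.ip_address("192.0.2.1"),
}

# Layer 2: tunnel routes (BGP-LU) -> label stack for the reserved host address.
TUNNELS = {
    ipaddress.ip_address("192.0.2.1"): [16005, 16009],
}


def resolve(destination: str, steering=STEERING, tunnels=TUNNELS):
    """Longest-prefix match on steering routes, then resolve the tunnel."""
    addr = ipaddress.ip_address(destination)
    matches = [net for net in steering if addr in net]
    if not matches:
        return None                      # not steered, follow normal routing
    best = max(matches, key=lambda net: net.prefixlen)
    return tunnels.get(steering[best])   # None if the tunnel route is gone


print(resolve("203.0.113.7"))
# Re-steering: one tunnel route update moves every dependent steering route.
TUNNELS[ipaddress.ip_address("192.0.2.1")] = [16007]
print(resolve("198.51.100.9"))
```

The second call shows why the split pays off: a single tunnel route update changes the path of all steering routes that point at it.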

Pieces and parts of TE solution

TE controller - essentially a customized BGP route reflector which speaks BGP-LU. It programs weighted paths using BGP multipath and the Link Bandwidth extended community. It also performs tunnel OAM - we need to know when a tunnel goes down, because local repair can fail and we can’t wait for the next TE refresh cycle. Probe packets are sent down the tunnel path; if the tunnel is down, the BGP-LU route is withdrawn. If all the tunnels for a given set of steering routes are down, the steering route is withdrawn and traffic falls back to the IGP shortest path.

Topology service - speaks BGP-LS to the switches and reports labels, topology and bandwidth changes to the TE controller. Flow collector - pulls sFlow data. Prefix map generator - identifies which traffic to steer. Bandwidth broker - adjusts dynamic admission control.
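The withdraw logic described for the TE controller can be sketched as a pure function from probe results to the set of routes that stay advertised. The data shapes and names (`steering_routes`, `tunnel_is_up`) are assumptions for illustration; a real controller would feed this from its OAM probes and emit BGP updates.

```python
def routes_to_advertise(steering_routes: dict, tunnel_is_up: dict) -> tuple:
    """Decide which BGP-LU tunnel routes and steering routes remain advertised.

    steering_routes -- {prefix: [tunnel names the prefix may use]}
    tunnel_is_up    -- {tunnel name: result of the latest OAM probe}
    """
    live_tunnels = {name for name, up in tunnel_is_up.items() if up}
    steering = {}
    for prefix, tunnels in steering_routes.items():
        usable = [t for t in tunnels if t in live_tunnels]
        if usable:
            steering[prefix] = usable
        # No usable tunnel: the steering route is withdrawn, so traffic
        # falls back to the IGP shortest path.
    return sorted(live_tunnels), steering


tunnels, steering = routes_to_advertise(
    steering_routes={
        "203.0.113.0/24": ["t1", "t2"],
        "198.51.100.0/24": ["t3"],
    },
    tunnel_is_up={"t1": True, "t2": False, "t3": False},
)
print(tunnels)
print(steering)
```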

Common challenges

Distributed control planes for TE lead to uncoordinated efforts, and the bursty nature of the traffic leads to inefficient usage of high cost WAN links. A sophisticated centralised control plane for TE with granular policies may expose some limitations on how many labels can be pushed and successfully hashed on a node, for example to do link bundling. To fix this, some optimisation in path computation is required - compressing adjacency-SIDs wherever the shortest path would take us where we want to go.

There is a BGP SR TE policy draft - a new BGP SAFI with a new NLRI in order to advertise a candidate path of an SR Policy. RFC8491, Signaling Maximum SID Depth (MSD) Using IS-IS, defines a way for an IS-IS router to advertise multiple types of supported Maximum SID Depths at node and link granularity. It allows controllers to determine whether a particular Segment ID stack can be supported in a given network. It is important to remember that entropy labeling comes in pairs - Entropy Label and Entropy Label Indicator, which is effectively two additional labels. An ingress Label Switching Router (LSR) cannot insert ELs for packets going into a given Label Switched Path (LSP) unless an egress LSR has indicated via signaling that it has the capability to process ELs.
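One possible way to compress adjacency-SIDs is a greedy pass over the explicit path: from the current node, jump with a single node SID to the farthest hop that the IGP would reach over exactly this sub-path, and fall back to an adjacency SID otherwise. The sketch below assumes positive link costs and, conservatively, only uses a node SID when the shortest path is unique (no ECMP). The graph, node names and the greedy strategy are illustrative assumptions, not a description of any operator's path computation.

```python
import heapq


def shortest(graph: dict, source: str) -> tuple:
    """Dijkstra returning (distance, number of shortest paths) per node."""
    dist, count = {source: 0}, {source: 1}
    heap, done = [(0, source)], set()
    while heap:
        d, node = heapq.heappop(heap)
        if node in done:
            continue
        done.add(node)
        for neighbor, cost in graph[node].items():
            nd = d + cost
            if neighbor not in dist or nd < dist[neighbor]:
                dist[neighbor], count[neighbor] = nd, count[node]
                heapq.heappush(heap, (nd, neighbor))
            elif nd == dist[neighbor]:
                count[neighbor] += count[node]
    return dist, count


def compress(graph: dict, path: list) -> list:
    """Replace runs of adjacency SIDs with node SIDs where the IGP agrees."""
    sids, i = [], 0
    while i < len(path) - 1:
        dist, count = shortest(graph, path[i])
        best, cost = None, 0
        for j in range(i + 1, len(path)):
            cost += graph[path[j - 1]][path[j]]
            # The sub-path is the unique IGP shortest path to path[j].
            if dist[path[j]] == cost and count[path[j]] == 1:
                best = j
        if best is None:
            sids.append(("adj", path[i], path[i + 1]))
            i += 1
        else:
            sids.append(("node", path[best]))
            i = best
    return sids


GRAPH = {
    "A": {"B": 10, "C": 30},
    "B": {"A": 10, "C": 10},
    "C": {"A": 30, "B": 10, "D": 10},
    "D": {"C": 10},
}
print(compress(GRAPH, ["A", "B", "C", "D"]))
print(compress(GRAPH, ["A", "C", "D"]))
```

The first path follows the IGP shortest path end to end and collapses to one node SID; the second deliberately uses the expensive A-C link, so it needs one adjacency SID followed by a node SID.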

In addition, it would be useful for ingress LSRs to know each LSR's capability for reading the maximum label stack depth and performing EL-based load-balancing. Draft-ietf-isis-mpls-elc-11 addresses this by defining a mechanism to signal these capabilities in ISIS.
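A controller-side check against the advertised MSD can be as small as the sketch below. It assumes the controller already knows the ingress node's imposition MSD (for example from IS-IS per RFC8491) and counts the ELI/EL pair as two extra labels, as noted above; the function names and the `service_labels` parameter are hypothetical.

```python
def required_depth(sid_stack: list, use_entropy_label: bool = False,
                   service_labels: int = 0) -> int:
    """Labels the ingress must impose: SIDs + service labels + ELI/EL pair."""
    return len(sid_stack) + service_labels + (2 if use_entropy_label else 0)


def path_is_supported(sid_stack: list, ingress_msd: int,
                      use_entropy_label: bool = False,
                      service_labels: int = 0) -> bool:
    """True if the ingress node can impose the whole stack."""
    depth = required_depth(sid_stack, use_entropy_label, service_labels)
    return depth <= ingress_msd


stack = [16001, 16002, 16003]   # made-up SID values
print(path_is_supported(stack, ingress_msd=5, use_entropy_label=True))
print(path_is_supported(stack + [16004], ingress_msd=5, use_entropy_label=True))
```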

RSVP-TE and SR-TE coexistence might be a challenge to solve during the transition to SR.

ECMP and HW limitations

One aspect which makes the DCI network more expensive per port is the cost of the long-haul links. Obviously it is important to utilise the available bandwidth in full, hence the ECMP or even UCMP approach is preferred across all available paths. In order to accommodate the complexity of an SR based approach, some level of indirection in path resolution is required. Hence there are some HW limitations which must be considered.

LFA and FRR in ECMP

Equal-cost paths are installed in routing and forwarding tables as ECMP entries. They might be implemented as independent forwarding entries, or as a single Forwarding entry pointing to a Next Hop Group. Once a link to a next hop fails, the corresponding entry is removed from the routing and forwarding table or the next-hop group is adjusted. If you have LFA or Fast Reroute in place, the failed next hop could be replaced with another next hop without involving a routing protocol. Without LFA or Fast Reroute, you just have fewer equal-cost next hops.

IP FRR relies on a pre-computed, loop-free backup next hop, which could itself be ECMP. It is a control plane function and could take some additional data into consideration - SRLGs, interface load, etc.

ECMP NHG autosize is a forwarding construct, where the NHG is an array of entries. If one of them becomes unavailable, for example after an interface down event, it is simply removed from the array and the hashing is updated. Without support for such a construct, HW would be programmed with a Drop entry for the missing NHG entry. An important part of this is the ability to shrink the NHG with an in-place adjacency replace - a virtually hitless update, since only the traffic already in flight on the interface which went down is lost.

In order to continue to forward traffic resiliently via ECMP, it’s crucial that load balancing is not impacted significantly; otherwise some of the remaining next hops could be oversubscribed while others are underutilized. Details of this are described in the following article.
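The shrinking behaviour of a next-hop group can be modelled with a list and a hash. The sketch below is a software analogy only: it uses CRC32 over a made-up flow key and plain modulo selection, which is an assumption and not how any particular ASIC hashes. It also counts how many flows on healthy links get remapped when the group shrinks, which is the load-balancing side effect mentioned above.

```python
import zlib


class NextHopGroup:
    """Array of next hops that shrinks when a member goes down."""

    def __init__(self, next_hops):
        self.entries = list(next_hops)

    def pick(self, flow_key: str):
        if not self.entries:
            return None                   # nothing left: traffic is dropped
        index = zlib.crc32(flow_key.encode()) % len(self.entries)
        return self.entries[index]

    def remove(self, next_hop: str) -> None:
        # Shrink the array instead of leaving a drop entry in its place.
        self.entries = [e for e in self.entries if e != next_hop]


flows = [f"10.0.0.{i}:443" for i in range(200)]   # hypothetical flow keys
nhg = NextHopGroup(["eth1", "eth2", "eth3", "eth4"])
before = {flow: nhg.pick(flow) for flow in flows}
nhg.remove("eth2")                                 # interface down event
after = {flow: nhg.pick(flow) for flow in flows}

moved = sum(1 for f in flows if before[f] != "eth2" and before[f] != after[f])
print("flows remapped although their link stayed up:", moved)
```

With plain modulo hashing, flows whose link never failed can still move to another next hop; resilient or consistent hashing schemes aim to limit exactly that.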

HFEC

Hierarchical Forwarding Equivalence Class (HFEC) changes a FEC from a single flat level to a multi-level FEC resolution. Two level HFEC could be supported depending on the chip:

1st level FEC points to tunnel next hops

2nd level FEC points to direct next hops for each of the tunnel’s next hops.

HFEC has the following benefits:

Supports larger ECMP, since only the 1st level tunnel FEC is exposed to the RIB while the 2nd level interface FEC is hidden from the RIB.

Achieves faster convergence since tunnel FEC and interface FEC at each level can be independently updated.

The tunnel type could be an MPLS tunnel, which can be signaled by IS-IS Segment Routing, BGP-SR, BGP-LU, Ethernet VPN (EVPN), MPLS VPN or MPLS LDP; or just an IPv4 or IPv6 tunnel.

Hierarchical FEC gives a good level of flexibility in configuring next-hop resolution and path computation with constructs like Policy Based Routing (PBR). For example, it is possible to support complicated use cases where the ECMP size exceeds HW limitations. In such a case HFEC could be used to create another level of indirection between a prefix and its next hops: an equivalent FEC points to a set of FECs, each containing a lower number of NH entries.

But one has to be careful with creating such constructs: even though they provide workarounds for HW limitations as described above, there are still cases where it is simply not possible to get around them. E.g. consider a use case where a prefix is advertised from multiple nodes in a site to a remote node. The expectation is that the remote node receiving the prefix would PBR traffic based on the DSCP. The remote node by default will have a static route pointing to the NHG for the BGP next hop of all advertising nodes. The problem is that PBR can match only on the top-level FEC. For non-ECMP cases, the top-level FEC is the NHG FEC. In the case of ECMP, the NHGs are second-level FECs and PBR cannot match on them. The options are to either break the ECMP, i.e. use only 1 NHG, or create a separate NHG which has the contents of the other two. Not great, not terrible.
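The two-level resolution can be modelled with three small tables. The sketch is a data-structure analogy, not vendor code: the class, the FEC identifiers and the interface names are all hypothetical. It shows the convergence benefit listed above, where one write at the second level repairs every prefix that shares the first-level FEC.

```python
class HierarchicalFec:
    """Prefix -> level-1 tunnel FEC -> level-2 interface FEC."""

    def __init__(self):
        self.prefix_to_fec = {}   # prefix -> level-1 FEC id (seen by the RIB)
        self.tunnel_fec = {}      # level-1 FEC id -> list of tunnels
        self.interface_fec = {}   # tunnel -> list of direct next hops

    def resolve(self, prefix: str) -> list:
        """Flatten both levels into the final set of egress next hops."""
        hops = []
        for tunnel in self.tunnel_fec[self.prefix_to_fec[prefix]]:
            hops.extend(self.interface_fec[tunnel])
        return hops


fib = HierarchicalFec()
fib.tunnel_fec["fec-1"] = ["tunnel-a", "tunnel-b"]
fib.interface_fec["tunnel-a"] = ["eth1", "eth2"]
fib.interface_fec["tunnel-b"] = ["eth3"]
for i in range(1000):
    fib.prefix_to_fec[f"prefix-{i}"] = "fec-1"   # 1000 prefixes share one FEC

# eth2 fails: a single level-2 update fixes all 1000 prefixes at once,
# and neither the prefixes nor the level-1 FEC are touched.
fib.interface_fec["tunnel-a"] = ["eth1"]
print(fib.resolve("prefix-0"), fib.resolve("prefix-999"))
```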

UCMP

UCMP is another construct which is highly utilized in the DC; it is driven by the BGP link-bandwidth extended community (extcommunity lbw). Several things are worth sharing: the maximum set size for the UCMP next hops can be set, as well as an optional % deviation constraint from the optimal traffic load sharing ratio for every next hop in the UCMP. The deviation is the amount of error we are willing to tolerate in the computed weight of a next hop with respect to its optimal weight.

The most important thing to keep in mind while UCMP is being utilised is the total number of ECMP entries consumed due to the structure of advertisements. Therefore it is highly advised to estimate the number of required FECs when sets of prefixes are being advertised. The advertised link-bandwidth is capped by the physical link speed if we regenerated the link-bandwidth. If we are merely passing along the received link-bandwidth to a peer, or if we set the link-bandwidth explicitly in an outbound route-map, then we do not do any capping. In that case the switch will accept link-bandwidth values greater than the inbound link speed.
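The interplay between the maximum set size and the deviation constraint can be sketched as a search for the smallest integer weights that stay within the allowed error. This is an assumed algorithm written for illustration, with deviation defined here as the relative error of each next hop's share against its ideal share; actual implementations may compute weights differently.

```python
def ucmp_weights(bandwidths: dict, max_set_size: int, max_deviation_pct: float):
    """Smallest integer weights within the deviation limit, or the best found.

    bandwidths -- {next hop: advertised link bandwidth}
    """
    total_bw = sum(bandwidths.values())
    best = None
    for size in range(len(bandwidths), max_set_size + 1):
        weights = {nh: max(1, round(bw / total_bw * size))
                   for nh, bw in bandwidths.items()}
        total_w = sum(weights.values())
        if total_w > max_set_size:
            continue                      # would not fit in the hardware set
        worst = max(
            abs(weights[nh] / total_w - bw / total_bw) / (bw / total_bw) * 100
            for nh, bw in bandwidths.items()
        )
        if best is None or worst < best[0]:
            best = (worst, weights)
        if worst <= max_deviation_pct:
            return weights                # fewest entries that are good enough
    return best[1] if best else None


print(ucmp_weights({"a": 100, "b": 50}, max_set_size=16, max_deviation_pct=10))
print(ucmp_weights({"a": 100, "b": 40, "c": 10}, max_set_size=16,
                   max_deviation_pct=10))
```

A tighter deviation limit pushes the weights, and therefore the number of hardware entries consumed per group, upwards, which is why the set size needs to be budgeted.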

Details and use cases are described in https://tools.ietf.org/html/draft-ietf-idr-link-bandwidth-07

DCI optics

The rapid growth of cloud computing is driving the upgrade cycle from 100G to 400G transmission in DCIs, and spurring the demand for innovative optical line systems that are more compact, power-efficient, and easier to install, configure, and operate. One of the important topics of recent years is the arrival of 400G-ZR and OSFP-LS optics with DP-16QAM modulation as a replacement for proprietary DWDM transponders. They can cover a distance of up to 120km with the same port density in a switch as with OSFP/QSFP-DD optics or traditional 10/40/100Gbps interfaces. The OSFP-LS module has 4 connectors (2xLC for the 120km DCI line and 2xSC for the client connection) and contains Erbium Doped Fiber Amplifiers.

Multiplexing eight 400G-ZR modules with a fiber-based mux/demux provides 3.2Tbps of DCI bandwidth over a single fiber pair. There is no need to match a specific port to a specific lambda, which significantly eases the connections - connect any port of the fiber mux to a 400G-ZR module, and set the wavelength channel through the switch OS.

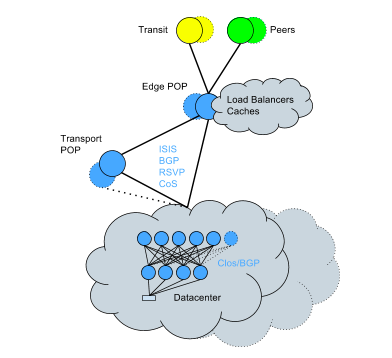

Places in Network: Edge

Large content providers build points of presence around the world, each connected to tens or hundreds of networks. A company serves its dynamic content, e.g. HTML and JSON, from its data centers, while utilizing CDNs for serving the static assets such as CSS, JS, images etc.

The tier of infrastructure closest to users - PoPs (Points of Presence) - is used to improve the download time of the dynamic content.

PoPs are small scale data centers with mostly network equipment and proxy servers that act as end-points for users' TCP connection requests. A PoP establishes and holds that connection while fetching the user-requested content from the data center. PoPs are where we connect a network to the rest of the internet via peering.

Traffic management systems direct user requests to an edge node that provides the best experience. How does the PoP selection mechanism work? It’s based on DNS. Third parties are used as authoritative name servers for a given site address. These providers let you configure their name servers to give different DNS answers based on the user's geographic location.

Given this ability and the expanding PoP footprint, the obvious next question is - how do we make sure that users are connecting to the optimal PoP? This could be decided based on:

Geographic distance: But geographical proximity does not guarantee network proximity.

Network connectivity: the Internet is constantly changing, and a manual approach like this would never keep up with it.

Synthetic measurements: Some companies have a set of monitoring agents distributed across the world that can test your website. But the agents' geographic and network distribution may not represent the user base, and agents usually have very good connectivity to the internet backbone, which real users may not have.

Another approach is Real User Monitoring. It consists of JavaScript code, e.g. the boomerang library, that runs in the browser when the page is loaded and collects performance data. It does so mostly by reading the Navigation Timing API object exposed by the browser, and it sends the performance data to beacon servers after page load.

To identify the optimal PoP per geography, it is necessary to know the latency from users to all the PoPs. This is done by downloading a tiny object from each PoP after the page is loaded and measuring how long the download takes. By aggregating this data, a recommendation of the optimal PoP per geography can be made. Once that is done, the DNS providers are configured to change the PoP per geography.
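The aggregation step can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the sample format `(geo, pop, latency_ms)`, the function name `best_pop_per_geo`, and the use of the median as the score are all hypothetical choices, not something the sources prescribe.

```python
from collections import defaultdict
from statistics import median


def best_pop_per_geo(samples):
    """Pick the PoP with the lowest median latency for every geography.

    samples: iterable of (geo, pop, latency_ms) tuples, as they might
    arrive from RUM beacons (hypothetical format).
    """
    by_geo_pop = defaultdict(list)
    for geo, pop, latency_ms in samples:
        by_geo_pop[(geo, pop)].append(latency_ms)

    best = {}  # geo -> (pop, median latency)
    for (geo, pop), latencies in by_geo_pop.items():
        score = median(latencies)
        if geo not in best or score < best[geo][1]:
            best[geo] = (pop, score)
    return {geo: pop for geo, (pop, _) in best.items()}


# Made-up measurements: two geographies, two candidate PoPs each.
samples = [
    ("DE", "fra", 18.0), ("DE", "fra", 22.0),
    ("DE", "ams", 25.0), ("DE", "ams", 27.0),
    ("BR", "gru", 30.0), ("BR", "mia", 120.0),
]
recommendation = best_pop_per_geo(samples)
```

The resulting mapping is what would then be pushed to the DNS providers as the per-geography answer.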

Edge Fabrics

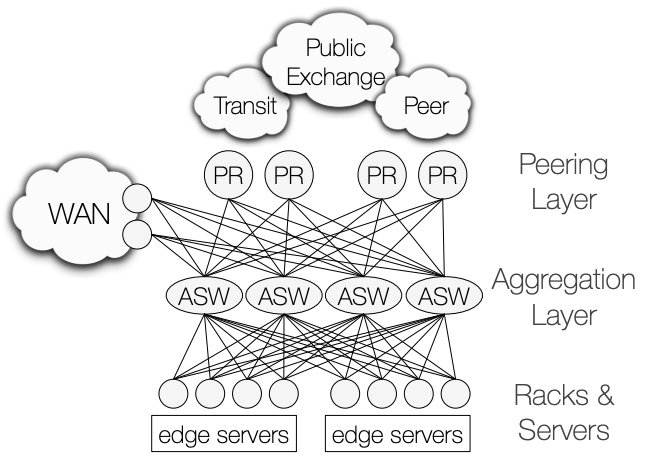

Large content providers built Edge Fabrics, a Traffic Engineering based system consisting of dozens of PoPs. In a nutshell Edge Fabric receives BGP routes from peering routers, monitors capacities and the demand for outgoing traffic, and determines how to assign traffic to routes. Edge Fabric enacts its route selections by injecting them by means of BGP into the peering routers, overriding the router’s normal BGP selection.

Topology

A PoP serves users from racks of servers, which connect via intermediate aggregation switches to multiple peering routers. BGP sessions are maintained between the components of the edge fabric, including rack switches. Each PoP includes multiple peering routers (PRs), which exchange BGP routes and traffic with multiple other ASes.

Peering

Transit providers provide routes to all prefixes via a private network interconnect (PNI) with dedicated capacity just for traffic between the Edge Fabric and the provider. Peers provide routes to the peer's prefixes and to prefixes in its customer cone. Private peers are connected via a dedicated PNI. Public peers' connections traverse the shared fabric of an Internet exchange point (IXP). Route server peers advertise their routes indirectly via a route server and exchange traffic across the IXP fabric.

The following is how Facebook describes it in the paper https://research.fb.com/wp-content/uploads/2017/08/sigcomm17-final177-2billion.pdf. A PoP may maintain multiple BGP peering sessions with the same AS (e.g., a private peering and a public peering, or at multiple PRs). Only a single PoP announces each most-specific Facebook prefix, and so the PoP at which traffic ingresses into Facebook's network depends only on the destination IP address. In general, a flow only egresses at the PoP at which it enters. Isolating traffic within a PoP reduces backbone utilization and simplifies routing decisions.

PRs prefer peer routes to transit routes (via local_pref), with AS path length as a tiebreaker. When paths remain tied, PRs prefer paths from the following sources in order: private peers over public peers over route servers.

PRs encode the peer type in MEDs and strip the MEDs set by the peer. Peer-set MEDs normally express the peer's preference of peering points, but they are irrelevant given that Facebook egresses a flow at the PoP where it ingresses. PRs and aggregation switches (ASWs) run BGP with multipath enabled. When a PR or an ASW has multiple equivalent BGP best paths for the same destination prefix, as determined by the BGP best path selection algorithm, it distributes traffic across the equivalent routes using ECMP.
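The preference order described above can be expressed as a sort key. The sketch below is illustrative only: the `Route` class, its field names and the `SOURCE_RANK` table are hypothetical, and real routers implement this through local_pref, AS path length and MED rather than Python tuples.

```python
from dataclasses import dataclass

# Lower rank = more preferred source when everything else is tied.
SOURCE_RANK = {"private": 0, "public": 1, "route_server": 2, "transit": 3}


@dataclass(frozen=True)
class Route:
    next_hop: str
    relation: str      # "peer" or "transit" (what local_pref would encode)
    source: str        # key of SOURCE_RANK (what the rewritten MED would encode)
    as_path_len: int


def preference_key(route):
    """Tuples compare left to right, so the smallest tuple is the best route."""
    return (
        0 if route.relation == "peer" else 1,  # peer routes before transit routes
        route.as_path_len,                     # shorter AS path breaks the tie
        SOURCE_RANK[route.source],             # private > public > route server
    )


def best_route(routes):
    return min(routes, key=preference_key)


candidates = [
    Route("transit-1", "transit", "transit", 2),
    Route("ixp-rs", "peer", "route_server", 3),
    Route("ixp-public", "peer", "public", 3),
    Route("pni-private", "peer", "private", 3),
]
chosen = best_route(candidates)
```

Note that the transit route loses even though its AS path is shorter, because the peer/transit distinction is evaluated first.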

Capacity awareness

Ideally, this connectivity lets providers better serve users, but providers cannot obtain enough capacity on some preferred peering paths to handle peak traffic demands. There are two main challenges. First, BGP is not capacity-aware by default, while interconnections and paths have limited capacity. Performance measurements of a large content provider network show that it is unable to obtain enough capacity at many interconnections to route all traffic along the paths preferred by its BGP policy. Second, BGP is not performance-aware: AS path length and multi-exit discriminators (MEDs) do not always correlate with performance.

To tackle these challenges, routing decisions must be aware of capacity, utilization, and demand. Decisions should also consider performance information and changes, while simultaneously respecting policies. To accommodate this, a fairly sophisticated solution is used, a sort of predictor-corrector that consists of three parts. The BMP collector gets all available routes per prefix. The sFlow collector gets traffic samples and calculates the average traffic rate. The allocator retrieves the list of interfaces, projects their utilisation, and assigns load at per-prefix granularity.

Because the controller is stateless and uses projected interface utilization instead of actual utilization, a shadow controller runs inside a sandbox and queries the same network state as the live controller. This provides a way to verify the controller and to identify misprojections in interface utilization.
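A toy version of the allocator can make the idea more concrete. This is a sketch under several assumptions that are not taken from the paper: the function name `allocate`, the dictionary inputs, the 95% threshold, and the "move the largest prefix first" heuristic are all hypothetical simplifications.

```python
def allocate(capacity, demand, routes, threshold=0.95):
    """Return {prefix: interface} overrides that relieve overloaded interfaces.

    capacity: {interface: capacity}, e.g. in Gbps
    demand:   {prefix: average rate}, as computed from traffic samples
    routes:   {prefix: [interfaces in BGP preference order]}
    """
    # Projection: assume every prefix follows its BGP-preferred route.
    load = {ifc: 0.0 for ifc in capacity}
    for prefix, rate in demand.items():
        load[routes[prefix][0]] += rate

    overrides = {}
    for ifc in capacity:
        limit = capacity[ifc] * threshold
        # Assumption: try to move the heaviest prefixes first.
        candidates = sorted(
            (p for p in demand if routes[p][0] == ifc),
            key=demand.get,
            reverse=True,
        )
        for prefix in candidates:
            if load[ifc] <= limit:
                break  # interface is no longer projected to be overloaded
            rate = demand[prefix]
            for alt in routes[prefix][1:]:
                # Only detour if the alternate stays under its own limit.
                if load[alt] + rate <= capacity[alt] * threshold:
                    load[ifc] -= rate
                    load[alt] += rate
                    overrides[prefix] = alt
                    break
    return overrides


capacity = {"pni_a": 100, "ixp": 100, "transit": 1000}
demand = {"p1": 60, "p2": 50, "p3": 10}
routes = {
    "p1": ["pni_a", "transit"],
    "p2": ["pni_a", "ixp", "transit"],
    "p3": ["pni_a", "transit"],
}
overrides = allocate(capacity, demand, routes)
```

In a real deployment the overrides would be injected into the peering routers via BGP, and the whole computation would be repeated periodically from fresh state.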

The described solution helps with destination-based routing, but what should be done with prioritization of the traffic based on the content, in other words - prioritise specific flows? Servers set the DSCP field in the IP packets of selected flows to one of a set of predefined values. The controller injects routes into the alternate routing tables per each DSCP value at PRs to control how marked flows are routed.
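On the server side, marking a flow comes down to setting the DSCP bits of the IP header on the socket. A minimal sketch using the standard `socket` module is shown below; the helper names and the DSCP value used in the example are hypothetical, and which values are reserved for which purpose is a deployment decision.

```python
import socket


def dscp_to_tos(dscp):
    """DSCP occupies the upper 6 bits of the former IPv4 TOS byte."""
    if not 0 <= dscp <= 63:
        raise ValueError("DSCP is a 6-bit value (0-63)")
    return dscp << 2


def open_marked_socket(dscp):
    """Create a TCP socket whose outgoing IPv4 packets carry the given DSCP."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_TOS, dscp_to_tos(dscp))
    return sock


# Example: DSCP 46 corresponds to a TOS byte of 184.
tos_value = dscp_to_tos(46)
```

The peering routers can then match on the DSCP value and look the packet up in the corresponding alternate routing table.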

Measuring Performance

End-user TCP connections terminate at front-end servers at the edge of the network, which in turn proxy HTTP requests to backend servers. eBPF randomly selects a subset of connections and routes them via an alternate path by setting the DSCP field in all of the flow's IP packets to a DSCP value reserved for alternate path measurements. This allows measurements to be collected with the existing infrastructure that logs the performance of client connections observed by the front-end servers.

Alternative approach

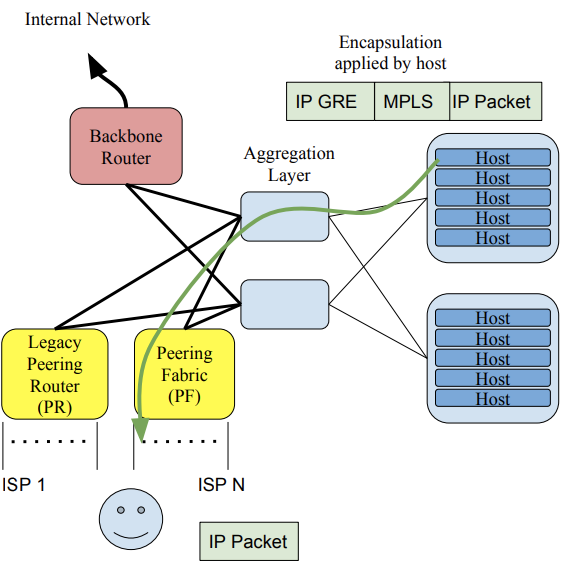

Google's Espresso Peering Fabric (PF) https://www.cs.princeton.edu/courses/archive/fall17/cos561/papers/espresso17.pdf does not run BGP locally and does not have the capacity to store an Internet-scale FIB. Instead, Espresso stores Internet-scale FIBs on the servers. It directs ingress packets to a host using IP-GRE, and the host applies ACLs. The hosts also encapsulate all outbound packets with a mapping to the PF's output port.

Espresso uses IP-GRE and MPLS encapsulation, where IP-GRE targets the correct router and the MPLS label identifies the PF’s peering port. The PF simply decapsulates the IP-GRE header and forwards the packet to the correct next-hop according to its MPLS label after popping the label. An L3 aggregation network using standard BGP forwards the IP-GRE encapsulated packet from the host to the PF.
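The host-side lookup can be pictured as a longest-prefix match that returns the two pieces of encapsulation information: which PF to target with IP-GRE, and which MPLS label selects the peering port. The sketch below is purely illustrative; the `HostFib` class, its linear search and the addresses and labels are assumptions, not Espresso's actual data structures.

```python
import ipaddress


class HostFib:
    """Toy host-resident FIB: prefix -> (PF address, MPLS label)."""

    def __init__(self):
        self._entries = []  # list of (network, pf_address, mpls_label)

    def add(self, prefix, pf_address, mpls_label):
        self._entries.append((ipaddress.ip_network(prefix), pf_address, mpls_label))

    def lookup(self, destination):
        """Longest-prefix match; a linear scan is enough for an illustration."""
        addr = ipaddress.ip_address(destination)
        matches = [entry for entry in self._entries if addr in entry[0]]
        if not matches:
            return None
        _, pf_address, mpls_label = max(matches, key=lambda entry: entry[0].prefixlen)
        # The GRE destination selects the router, the label selects its peering port.
        return {"gre_destination": pf_address, "mpls_label": mpls_label}


fib = HostFib()
fib.add("203.0.113.0/24", "10.0.0.1", 100)
fib.add("203.0.113.128/25", "10.0.0.2", 200)
encap = fib.lookup("203.0.113.200")
```

Because the PF only has to decapsulate GRE and pop a label, it never needs the Internet-scale table itself.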

Comparison

Facebook's Edge Fabric has traffic engineering goals similar to Espresso's, but it has independent local and global optimization and relies on BGP to steer traffic. The key difference lies in Espresso's use of commodity MPLS switches, in contrast with Edge Fabric, which relies on peering routers with Internet-scale forwarding tables.

Security on the Edge

The fundamentals of securing any network apply to the edge as well. But the edge is a particular place where you would expect to see the implementation of such features as BGP origin validation (BGP-OV) to secure your system against DoS attacks. The combination of BGP-OV and the feasible path uRPF techniques is a strong defense against address spoofing and DDoS. For this to work, the target prefix owner should create a ROA for the prefix and all ASes on the path should be performing BGP-OV in addition to employing uRPF.

Currently, RPKI only provides origin validation. While BGPsec path validation is a desirable characteristic and is standardised in https://tools.ietf.org/html/rfc8205, real-world deployment may remain limited for the foreseeable future due to limited vendor support. So for now we are stuck with ROA information retrieved from an RPKI cache server to mitigate the risk of prefix hijacks and some forms of route leaks in advertised routes. A BGP router would typically receive a list of (prefix, maxlength, origin AS) tuples derived from valid ROAs from RPKI cache servers. The router uses the list in the BGP-OV process to determine the validation state of an advertised route https://tools.ietf.org/html/rfc6811.

To dig deeper or refresh the knowledge base, I suggest watching this presentation.
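The origin validation procedure of RFC 6811 is compact enough to sketch directly. The function below is a simplified illustration: the name `validate_origin` and the tuple layout of the validated ROA payloads are assumptions, and a production router would use a prefix trie rather than a linear scan.

```python
import ipaddress


def validate_origin(route_prefix, origin_as, vrps):
    """Return "valid", "invalid" or "not-found" for an advertised route.

    vrps: iterable of (prefix, max_length, asn) tuples derived from valid ROAs.
    """
    route = ipaddress.ip_network(route_prefix)
    covered = False
    for vrp_prefix, max_length, asn in vrps:
        vrp = ipaddress.ip_network(vrp_prefix)
        # A VRP covers the route if the route is equal to or more specific than it.
        if route.version != vrp.version or not route.subnet_of(vrp):
            continue
        covered = True
        # A match needs the right origin AS and a length within maxlength.
        # AS 0 is never allowed to match.
        if asn != 0 and asn == origin_as and route.prefixlen <= max_length:
            return "valid"
    # Covered by at least one VRP but matched by none: invalid.
    return "invalid" if covered else "not-found"


vrps = [("192.0.2.0/24", 24, 64500)]
state = validate_origin("192.0.2.0/24", 64500, vrps)
```

A common policy is then to drop "invalid" routes while still accepting "not-found" ones, since many prefixes have no ROA at all.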

Security within a Data Center

Data center network security products can be divided into three groups: the data center firewall (DCFW), the data center intrusion prevention system (DCIPS), and a group that combines the capabilities of the DCFW and DCIPS and is referred to as a data center security gateway (DCSG).

Considerations for deployment include answering the following questions:

What server operating systems and applications are to be protected?

What are peak performance requirements?

Can the security product be bypassed using common evasion techniques?

How reliable and stable is the device?

Applications deployed in the data centers have become multi-tiered and distributed. This has resulted in an increase in the amount of east-west traffic seen in the data centers. This includes traffic from physical-to-physical, virtual-to-virtual, and between physical and virtual workload.

Legacy security architecture consists of perimeter firewalls, that primarily enforce security for north-south traffic. As the applications within the datacenter become increasingly distributed, it results in a rise in the number of workloads both physical and virtual deployed in the datacenter which in turn present many new possible entry points for security breaches.

Micro-segmentation solves part of the problem by providing security for east-west flows between virtual workloads. In comparison Macro-Segmentation Service (MSS) addresses the remaining gap in security deployment models by securing the east-west traffic between physical-to-physical and physical-to-virtual workloads. Following are just a few examples of the integration options:

The first option is MSS with a 3rd party firewall. Design elements: Trident3 based VTEPs as leafs and service leaf, and CVX as the VXLAN control plane. The design can coexist with non-VXLAN VLANs, as the MSS firewall acts as the gateway. All L3 subnets use the MSS redirect policy. Offload rules can be used to debug any problematic host.

Another approach is to use Group based MSS (MSS-G). Segmentation uses a combination of the routing tables and TCAMs, and segments are identified using a segment identifier from the routing tables. Route lookup involves both source and destination IP addresses. Because of this dual lookup, which is performed at line rate, routing table scale is reduced to almost half, but no other functionality that uses routing tables is impacted. Policy enforcement is done in TCAM: the match is based on the segment identifier, and the enforcement action is to drop or allow the traffic at line rate. In MSS-G there is no control plane involvement once segment policies are programmed in the hardware. Feature interaction is similar to security ACLs.

Load balancing between firewall clusters

This is a big and non-trivial topic, and the answer depends on a variety of constraints, but it is easy to come across a question along the following lines:

What tricks to use to ensure the correct hashing for traffic traversing firewall clusters on both sides when passing from DC1 to DC2 and backwards? What if firewall clusters located in each DC can't sync sessions state between each other, as it is proposed by some firewall vendors? How do you make sure the network is providing the correct result of hashing on both sides?

We have to make the assumption that an operator can run all sorts of traffic: TCP, UDP, QUIC and does not have the capacity to run something like Maglev - a large distributed software system for load balancing on commodity Linux servers, so this question here is solely about ECMP knobs from real life deployments and any other tricks.

Option1: Ensure symmetric reverse hashing for ECMP on both sides. Example:

https://www.arista.com/assets/data/pdf/Whitepapers/PANW_Symmetric_Scale.pdf

FW as a L2 vwire, hashing based on fixed fields (fixing what IP gets sent to which FW as long as the I/F is up)

Solution consists of Trident based OpenFlow Direct Mode switches (Arista 7050 series), each switch interconnects with each FW unit

An event handler tracks interface status changes and triggers a script

The script is fed by event handler variables and creates hash buckets based on the number of devices to be included in the hash

A priority value is used to ensure that if a link or firewall fails, the next device in the hash takes over

Flows from one side are hashed based on source IP while the return traffic is hashed based on destination IP to ensure the response traffic traverses the same firewall.
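The core trick of Option1, hashing on the source IP in one direction and on the destination IP in the other, can be shown in a few lines. This is a conceptual sketch only: the function name, the use of CRC32 and the modulo bucket selection are assumptions for illustration and do not reproduce how the switch hardware or the referenced script builds its buckets.

```python
import ipaddress
import zlib


def pick_firewall(packet_src, packet_dst, from_inside, firewalls):
    """Choose a firewall so that both directions of a flow hit the same unit.

    Traffic from the inside is hashed on its source IP, return traffic on its
    destination IP, so the hash input is the same inside address both times.
    """
    key_ip = packet_src if from_inside else packet_dst
    key = ipaddress.ip_address(key_ip).packed
    bucket = zlib.crc32(key) % len(firewalls)
    return firewalls[bucket]


firewalls = ["fw1", "fw2", "fw3", "fw4"]
forward = pick_firewall("10.1.1.10", "10.2.2.20", True, firewalls)
reverse = pick_firewall("10.2.2.20", "10.1.1.10", False, firewalls)
```

Here `forward` and `reverse` always name the same firewall, whatever that firewall is. A plain modulo reshuffles most flows when the number of firewalls changes, which is one reason the real designs rely on fixed buckets with priorities or on resilient ECMP.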

Option2: Stateless hashing:

Similar approach as with Option1, but in this case no OpenFlow involved and hence no active session tracking

The operation relies on a NHG configuration and resilient ECMP functionality

no ip load-sharing sand fields ingress-interface

Proven to be operational in multiple cases, e.g. load balancing for CGNAT appliances

Option3: Reduce the mode of operation of FW to stateless.

Option4: Arista CCF (formerly Big Switch BCF) + Checkpoint Maestro. This option no longer seems to be supported, but it still looks interesting. Traffic is forwarded to Maestro by means of service chaining, and Maestro distributes the sessions among the FWs. CCF has multiple VRFs, which are called E-VPCs (Enterprise Virtual Private Clouds).

Service Chaining is an architecture to insert a service (FW, IPS, LB) between two VRFs. A policy is created in CCF to redirect the traffic to an external device before forwarding it to another VRF. If the external device is not the default gateway and the traffic needs to be inspected by it before hitting VRF B, the policy will enforce that traffic coming from VRF A goes to the external device (FW). The FW has a route to send the traffic back to VRF B. If the customer has multiple active FWs, CCF will load balance the sessions between the FWs and keep track of each session to make sure that packets from the same session are forwarded to the same FW.

In the other case, CCF supports ECMP and keeps the track of the session, so we configured ECMP with Next Hop to send the traffic to multiple Palo Alto FWs.

Security in Hyper Scale environment

Because of the enormous scale, security is pushed to the nodes: networking functionality is moved out of the kernel and into userspace modules through a common framework. For example, the Snap architecture has similarities with a microkernel approach, where traditional operating system functionality is hoisted into ordinary userspace processes.

Unlike prior microkernel systems, Snap benefits from multi core hardware for fast IPC and does not require the entire system to adopt the approach wholesale, as it runs as a userspace process alongside our standard Linux distribution and kernel. Snap engines may handle sensitive application data, doing work on behalf of potentially multiple applications with differing levels of trust simultaneously.

Snap runs as a special non-root user with reduced privilege, although care must still be taken to ensure that packets and payloads are not mis-delivered. One benefit of the Snap userspace approach is that software development can leverage a broad range of internal tools, such as memory access sanitizers and fuzz testers, developed to improve the security and robustness of general user-level software.

The CPU scheduling modes also provide options to mitigate Spectre-class vulnerabilities by cleanly separating cores running engines for certain applications from those running engines for different applications.

Snap is described by Google in the paper "Snap: a Microkernel Approach to Host Networking".

Link Layer Security

MACsec is an IEEE standard for security in wired Ethernet LANs described in https://1.ieee802.org/security/802-1ae/. For switches and routers capable of supporting multiple terabits of throughput, MACsec can provide line rate encryption for secure connections, regardless of packet size, and it scales linearly as it is distributed throughout the device. The MACsec Key Agreement protocol (MKA) mutually authenticates MACsec peers and elects one of them as a Key Server that distributes the symmetric Secure Association Keys (SAKs) used by MACsec to protect frames. MACsec adds 24 bytes (SecTAG + ICV) to every encrypted frame, or 32 bytes when the optional SCI is included in the SecTAG.

Considering this, MACsec becomes a pretty interesting option to secure point-to-point connections, for example WAN links. A great deal of caution should be taken, though, when interoperability between vendors is required. For example, some vendors still do not support fallback keys, and on some platforms a device that is not the key server does not install the Tx SAK after a link flap. There is no point in listing all the findings here, but it is worth mentioning that interop testing is crucial for success.

Monitoring and control

One of the main goals of network monitoring is to locate the failed part in an appropriate period of time. Sooner or later everybody realises that good, efficient monitoring is as important as good network design. The cheapest way to monitor a network and alert the network ops team is by means of customer complaints. In reality this is not cheap at all: it can be expensive, and it does not work well in large networking environments. If you are lucky enough to have a mono-vendor network without any plans to introduce diversity, your vendor will likely provide you with some proprietary means of monitoring. However, not everyone is so lucky.

A common approach to monitoring via OpenConfig

Use standardised, vendor neutral data models for streaming telemetry via OpenConfig. This makes it possible to stream all the raw state off a box in a commonly understood language. Streaming happens over gNMI or gRPC. This brings you to a whole streaming telemetry world where you can stream all the data and/or subscribe to status changes. The data is structured in vendor neutral YANG models, which represent the state tree of a device and also state across devices.

Data models are structured and published like MIBs for SNMP. However, in OpenConfig they are open sourced, so you can contribute to them and access them in a much faster and simpler way. The OpenConfig state tree defines all sorts of familiar concepts like MAC and ARP tables, BGP router ID, RD etc. It also contains less familiar concepts like a key-value store of network instances, which provides a mechanism for effectively communicating data not just for one box but for all devices. Check this refresher on OpenConfig: https://www.youtube.com/watch?v=3T0j8AzsGBo&t=637s

Another way to approach monitoring is by means of end-hosts. Pretty much every hyperscaler has publicly reported at least one variation of this approach. It works via agents installed on end-hosts. Each agent sends some traffic, usually TCP, UDP and ICMP marked with different DSCP values, to a variety of destinations. The data is aggregated, stored and analysed, and as a result you get an idea of what reachability between different hosts looks like. Examples: https://presentations.clickhouse.tech/meetup7/netmon.pdf and https://engineering.fb.com/core-data/netnorad-troubleshooting-networks-via-end-to-end-probing/

Some approaches were even published as IETF drafts, such as active data plane probes https://tools.ietf.org/html/draft-lapukhov-dataplane-probe. These don't carry application traffic. They are separate INT packets, sort of like ping or traceroute, except that they are handled in the data plane of the transit nodes. The advantage of this tactic is that you get a picture as granular as two hosts in one rack and as scaled out as reachability between two datacenters.
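The aggregation part of such a system can be as simple as computing a loss ratio for every source and destination pair. The sketch below is a hypothetical illustration: the record layout `(src, dst, sent, received)` and the function name are assumptions and are not taken from the tools linked above.

```python
from collections import defaultdict


def loss_matrix(probes):
    """Aggregate probe results into {(src, dst): loss ratio}.

    probes: iterable of (src, dst, sent, received) records reported by agents.
    """
    totals = defaultdict(lambda: [0, 0])  # (src, dst) -> [sent, received]
    for src, dst, sent, received in probes:
        totals[(src, dst)][0] += sent
        totals[(src, dst)][1] += received
    return {
        pair: (sent - received) / sent
        for pair, (sent, received) in totals.items()
        if sent  # skip pairs that never sent anything
    }


probes = [
    ("dc1", "dc2", 100, 100),
    ("dc1", "dc2", 100, 90),
    ("dc1", "dc3", 50, 50),
]
matrix = loss_matrix(probes)
```

The same aggregation can be run at rack, cluster or datacenter granularity simply by changing what `src` and `dst` refer to.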

The disadvantage is that there is no visibility into where exactly packets are lost. There are ways to improve this, for example by marking TCP SYN retransmits and matching them via ACLs on the devices. The idea is great, but it also requires fine tuning: the threshold will depend on the number of packets transmitted via a device, so it is not straightforward but doable. The marking is done via eBPF, which can be used for many things: network performance, firewalls, security, tracing, and device drivers. The tcpretrans utility displays details about TCP retransmissions, such as the local and remote IP address and port number, as well as the TCP state at the time of the retransmissions. The utility uses eBPF features and, therefore, has a very low overhead.

What else will help you to drill down such a problem?

Traceroute, good but not good enough if you have to deal with encapsulation, as you need to tunnel ICMP TTL-exceeded messages.

How about a device telling you that it is experiencing an issue? This is possible by means of a large variety of self probes available via Health Tracker (https://eos.arista.com/eos-4-21-0f/health-tracker/). The feature uses the event-handler infrastructure to set up monitoring conditions that detect bad events and associate them with various metrics like voltage, temperature, cooling, power, hardware-drops, etc.

Queue utilisation and congestion monitoring is possible via Latency Analyzer (LANZ).

What else - it would be nice to see what’s going on in the data plane at the moment of the issue by intercepting traffic, there are three options:

Intercept on an end-host - usually burdens the device, so likely not applicable to all scenarios

Intercept on a switch by mirroring to CPU, usually works great for control plane debug, but data plane can exhaust CoPP allowance and not show important packets in a stream

Intercept via TapAgg https://www.arista.com/assets/data/pdf/Whitepapers/TAP_Aggregation_Product_Brief-2.pdf

Tap aggregation is the accumulation of data streams and subsequent dispersal of these streams to devices and applications that analyze, test, verify, parse, detect, or store data.

LANZ Mirroring

LANZ Mirroring (https://eos.arista.com/eos-4-21-0f/lanz-mirroring/) is an active data plane monitoring feature. It allows users to automatically mirror traffic queued as a result of congestion to either the CPU or a different interface. Other current telemetry mechanisms only support exporting counters at various stages of the pipeline at regular intervals.

INT

Inband Telemetry (INT) allows for collecting per packet telemetry information as the packet traverses a network across multiple nodes. Every node appends telemetry information as the packet passes through the switch in the data plane. It gives fine-grained information for specific flows, microbursts, network misconfiguration etc. INT information can be carried over mirrored and truncated copies of sampled packets. Currently there are two specs, IFA 1.0 and IFA 2.0, so interoperability testing with other vendors is crucial. (https://tools.ietf.org/html/draft-kumar-ippm-ifa)

Mirror on drop

Mirror on drop - when an unexpected flow drop occurs in the ingress pipeline, a set of TCAM rules is installed that captures the first 80 bytes of the first dropped packet and mirrors it, together with a timestamp and the drop reason, to pre-configured external collectors in a UDP datagram. As a result you get a START event: timestamp + drop reason + first 80B of the packet. Subsequent drops are not mirrored. If there are no flow drops for a configurable duration of N seconds, a STOP event is reported.
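The START/STOP behaviour is essentially a small per-flow state machine. The sketch below models it in software purely for illustration; the class name, the event dictionaries and the explicit `expire` call are hypothetical, and on a real switch this logic lives in hardware and the switch OS.

```python
class DropReporter:
    """Emit one START per dropping flow and a STOP after a quiet period."""

    def __init__(self, quiet_period_s):
        self.quiet_period_s = quiet_period_s
        self._last_drop = {}  # flow -> timestamp of the most recent drop

    def on_drop(self, flow, now, reason, packet):
        first = flow not in self._last_drop
        self._last_drop[flow] = now
        if not first:
            return None  # subsequent drops are not mirrored
        return {
            "event": "START",
            "flow": flow,
            "timestamp": now,
            "reason": reason,
            "payload": packet[:80],  # only the first 80 bytes are exported
        }

    def expire(self, now):
        """Report STOP for flows with no drops during the quiet period."""
        stopped = [
            flow for flow, last in self._last_drop.items()
            if now - last >= self.quiet_period_s
        ]
        for flow in stopped:
            del self._last_drop[flow]
        return [{"event": "STOP", "flow": flow, "timestamp": now} for flow in stopped]


reporter = DropReporter(quiet_period_s=5)
start = reporter.on_drop("flow-1", 0.0, "acl-deny", b"x" * 200)
repeat = reporter.on_drop("flow-1", 1.0, "acl-deny", b"x" * 200)
stops = reporter.expire(6.0)
```

Exporting only the first packet keeps the collector load bounded even when a flow is dropping at line rate.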

Postcard based telemetry

To gain detailed data plane visibility to support effective network OAM, it is essential to be able to examine the trace of user packets along their forwarding paths. Such on-path flow data reflect the state and status of each user packet's real-time experience and provide valuable information for network monitoring, measurement, and diagnosis. Such a mechanism is described in https://tools.ietf.org/html/draft-song-ippm-postcard-based-telemetry-08

Postcard-based telemetry operates by selecting packets of a flow at each node and sending copies to the collector via a GRE tunnel along with basic telemetry information. This approach differs from INT (Inband Network Telemetry) by sampling packets out-of-band. A few things to consider: IFA (Inband Flow Analyser) is not yet standardized in the IETF and needs sophisticated support from the underlying hardware, which may not be available across the variety of chips. Packet copy can cause some inconsistencies in packet counters and QoS configurations. In comparison, INT exports the telemetry data at the end-nodes. If packets are dropped somewhere in the path, the INT data is lost and the drop location cannot be pinpointed. INT packets traversing tunnels within the INT domain also require special handling during encap and decap, which is more involved.

Comparison with GREENSPAN

Compared with GREENSPAN (https://www.arista.com/assets/data/pdf/Whitepapers/Arista_Enabling_Advanced_Monitoring_TechBulletin_0213.pdf), one can notice that in the case of Postcard, samples from different switches are coordinated so that the same packet(s) are sampled on all the switches in the path. In the case of GREENSPAN, those packets are mirrored independently. The timestamp is one of the important attributes of Postcard: it is obtained by punting the packets to the CPU and computing the PTP timestamp there.

All in all, there is no silver bullet, but a couple of ideas put together in a mix could be interesting.

Extensibility of network OS

Why does extensibility matter? It saves time, gives flexibility, and works around the clock: automate everything which you might otherwise have to do manually via CLI. Rely less on the vendor: build your own features and control the release cycle. You own the switch, so you should be able to do whatever you need on it. Even if a customer doesn't know how to program, there are places like github/gitlab where other customers share nice things. The only requirement is to have a Linux-based OS and high quality APIs.

API options

SNMP - well documented, ubiquitous, well integrated, common types of config and notification, but very slow to get new features

Linux Bash - many great tools exist for Linux, and if your Network OS is Linux you can just drop them in and go. Standard utilities like ping, traceroute, mtr, cronjobs etc. are available. Many commands may even be integrated with the CLI, so you don't need to switch between CLI and bash to execute them

Example of bash application - Spotify’s SIR - BGP controller https://github.com/dbarrosop/sir

eAPI - eAPI uses JSON-RPC over HTTPS. What this means in simpler terms is that the communication to and from the switch consists of structured data (JSON) wrapped in an HTTPS session. Furthermore, this structured data has a particular nature–RPC. RPC, which stands for remote procedure call, makes remote code execution look similar to a local function call. Use any programming language (C++, Python, Go, Java, Bash):

Python library https://pyeapi.readthedocs.io/en/latest/

Node.js, Go, Ruby libraries are also available on github: https://github.com/thwi/node-eAPI, https://github.com/fredhsu/eapigo, https://github.com/imbriaco/arista-eapi
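To make the JSON-RPC part tangible, here is a small sketch that only builds the request body, without talking to a switch. The helper name `build_eapi_request` is hypothetical. The `runCmds` method name and the `/command-api` URL path reflect eAPI as I understand it, so treat them as assumptions to verify against the documentation of your EOS release.

```python
import json


def build_eapi_request(commands, request_id="1", output_format="json"):
    """Build a JSON-RPC 2.0 request body for a list of CLI commands."""
    return {
        "jsonrpc": "2.0",
        "method": "runCmds",  # assumed eAPI method name
        "params": {
            "version": 1,
            "cmds": list(commands),
            "format": output_format,
        },
        "id": request_id,
    }


# Serialise the request; this string would be sent in an HTTPS POST,
# e.g. to https://<switch>/command-api (assumed path), with authentication.
body = json.dumps(build_eapi_request(["show version"]))
```

The libraries listed above wrap exactly this kind of exchange, so in practice there is rarely a need to build the payload by hand.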

Create custom extensions, custom CLI show/config commands, integration with eAPI, using EosSdk wrappers to look at the data within the CLI context. Examples:

The Arista ML2 Mechanism Driver implements the ML2 Plugin Mechanism Driver API. This driver can manage all types of Arista switches. For further details, please refer to the Arista OpenStack Deployment Guide: https://wiki.openstack.org/wiki/Arista-neutron-ml2-driver

FlowSpec for real-time control and sFlow telemetry for real-time visibility is a powerful combination that can be used to automate DDoS mitigation and traffic engineering. Here is an example using the sFlow-RT analytics software. The sFlow-RT REST API Explorer is a simple way to exercise the FlowSpec functionality. The article demonstrates how to push a rule that blocks traffic from UDP port 53 targeted at host 10.0.0.1. This type of rule is typically used to block a DNS amplification attack.

Understand ECMP using sFlow and eAPI: https://blog.sflow.com/2019/07/arista-bgp-flowspec.html

SDK

Full programmability on top of network devices is available through an SDK. It serves several purposes: customisation (write agents optimised to your requirements), performance (share state with other agents, react to events), integration (agents can interact with other software agents and services externally), convenience (documentation, extensive APIs and lots of examples), and portability (agents can run on different switches).

An agent written with the Arista EOS SDK, for example, is like the other agents (MLAG, LED agent, ProcMgr) layered on top of SysDB. It is possible to write agents, hook them into the SDK bindings, and have them write and read their data directly in SysDB. This gives a custom agent the option to behave like a native, full power agent, which is great for resolving some of the limitations of eAPI: performance and efficiency.

Another advantage of the SDK over eAPI is that you get a notification when an event happens, as any other native agent would. You can do all sorts of custom routing and provisioning actions, such as provisioning next hops, tunneling, programming QoS, PBR etc.

The way the SDK works is that you build your own agent locally against the C++ headers that your vendor provides. Alternatively, you can just use Python and not use any headers at all.

On the switch, the agent dynamically links to the libeos.so library. The library has to be strongly versioned, and the vendor has to follow a very strict versioning system to make sure that a customer knows when to recompile, when APIs have changed entirely and pre-existing code won't work anymore, etc.

Examples of custom agents could be Open/R which is being used in Facebook’s backbone and data center networks: https://engineering.fb.com/2017/11/15/connectivity/open-r-open-routing-for-modern-networks/

The platform supports different network topologies such as WANs, data center fabrics, and wireless meshes, as well as multiple underlying hardware and software systems (FBOSS, Arista EOS, Juniper JunOS, Linux routing). It supports features like automatic IP prefix allocation, RTT-based cost metrics, graceful restart, fast convergence, and drain/undrain operations.

Extensibility of CLI

For operational purposes it is extremely useful to be able to customize existing CLI commands or even to create custom ones: CLI plugins and custom CLI show/config commands which integrate with eAPI and use SDK wrappers to look at the data within the CLI context. An example based on the Arista EOS CLI is reviewed below.

Example of changes to existing native CLI commands: on the switch, drop down to bash, obtain root, edit /usr/lib/python2.7/site-packages/CliPlugin/IraIpCli.py, and copy the modified script into place via rc.eos before the agents even start. Event handlers can trigger based on any criteria and launch any script, command or program.

It has also proven to be very useful to create CLI command aliases as shortcuts for frequently used show or configuration commands:

alias bgpFA6 show ipv6 bgp summary | egrep -v "64502|65000"

Stream your switch packet capture directly to your workstation:

ssh root@<switch> "bash sudo tcpdump -s 0 -Un -w - -i <linux-interface-name>" | wireshark -k -i -

Add perfectly legit alias to print interface description along with ‘show ip int brief’

alias showipintdesc sho ip interface | json | python -c $'import sys,json;data=json.load(sys.stdin)\nfor i in data["interfaces"]: int=data["interfaces"][i]; print "{0:12} {1:>15}/{2:2d} {3:60} {4:<16}".format(int["name"],int["interfaceAddress"]["primaryIp"]["address"],int["interfaceAddress"]["primaryIp"]["maskLen"],int["description"],int["interfaceStatus"])'
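The one-liner above is hard to read, so here is the same formatting logic as a standalone Python 3 sketch. The JSON keys are the ones the alias itself reads; the sample data and the function name are made up for illustration, and real output from the switch carries more fields.

```python
import json

# Hypothetical sample containing only the fields the alias reads.
SAMPLE = json.loads("""
{
  "interfaces": {
    "Ethernet1": {
      "name": "Ethernet1",
      "description": "uplink to spine1",
      "interfaceStatus": "connected",
      "interfaceAddress": {"primaryIp": {"address": "10.0.0.1", "maskLen": 31}}
    },
    "Loopback0": {
      "name": "Loopback0",
      "description": "router id",
      "interfaceStatus": "connected",
      "interfaceAddress": {"primaryIp": {"address": "192.0.2.1", "maskLen": 32}}
    }
  }
}
""")


def format_interfaces(data):
    """Return one line per interface: name, address/mask, description, status."""
    lines = []
    for intf in data["interfaces"].values():
        primary = intf["interfaceAddress"]["primaryIp"]
        lines.append("{0:12} {1:>15}/{2:2d} {3:60} {4:<16}".format(
            intf["name"], primary["address"], primary["maskLen"],
            intf["description"], intf["interfaceStatus"]))
    return lines


if __name__ == "__main__":
    for line in format_interfaces(SAMPLE):
        print(line)
```

Keeping the logic in a function like this makes it easy to test off the box before it is squeezed into an alias.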

Combine event handlers, Python, and the OS APIs, and you have pretty much everything you need to automate any complex operation on your network; just make sure your vendor supports and tests this. A side-by-side comparison of the APIs looks something like this:

API | Audience | Notification | Ease of Use | Use Case |

CLI Scraping | Traditional Network Operators | No | Hard | Scripting and Automation, the painful way |

eAPI | Traditional Network Operators | No | Easy | Scripting and Automation |

SNMP | Traditional Network Operators | Traps | Medium | Monitoring, limited programmability |

OpenConfig | Network Software Engineers | Yes | Medium | Control plane extension (controller), management plane, monitoring |

SDK | Network Software Engineers | Yes | Hard | Control plane extension on box |

Linux APIs | Tech savvy Network Operators | Netlink (Meh) | Medium | Control plane extension on box |

CVP | Traditional Network Operators | Yes | Easy | Network-wide apps, management plane, monitoring |
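To make the eAPI row more concrete: eAPI accepts JSON-RPC requests over HTTP(S) and returns structured JSON, which is what removes the need for CLI scraping. The sketch below only builds the request body and unpacks a reply; the helper names and the canned reply are assumptions for illustration, and transport, authentication, and detailed error handling are left out.

```python
import json


def build_run_cmds(commands, request_id="1"):
    """Build the JSON-RPC body for an eAPI runCmds call."""
    return json.dumps({
        "jsonrpc": "2.0",
        "method": "runCmds",
        "params": {"version": 1, "cmds": list(commands), "format": "json"},
        "id": request_id,
    })


def extract_results(reply_text):
    """Return the per-command result list, or raise if the reply carries an error."""
    reply = json.loads(reply_text)
    if "error" in reply:
        raise RuntimeError(reply["error"].get("message", "eAPI error"))
    return reply["result"]


if __name__ == "__main__":
    print(build_run_cmds(["show ip interface"]))
    # Canned reply standing in for what a switch would send back.
    canned = '{"jsonrpc": "2.0", "id": "1", "result": [{"interfaces": {}}]}'
    print(extract_results(canned))
```

The body would be sent with an HTTP POST to the switch's command API endpoint; each entry in the result list corresponds to one command in `cmds`, in order.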

Conclusion

In conclusion, I’d like to add that there are many similarities in how hyperscalers design their networks. The major principles are the same: IPv6 is key, and scaling out is preferred over scaling up, with leaf-spine designs and CLOS topologies. The amount of tooling built around standard control management, CLI, provisioning, and monitoring is huge, and since most of it is open sourced, the ideas can be reused by others. Automation is crucial, starting from ZTP and traffic draining and covering every possible aspect up to rebuilds and tech-support collection and upload. Given the size of the network device fleet, there is no way the number of NOC engineers can grow proportionally. Understanding this from a support perspective is important.

An important topic that often goes under the radar, in my opinion, is testing. It is crucial to test thoroughly. This means testing with high state churn at the scale a customer will deploy. In other words, we can’t assume that something will simply work, not even the simplest functionality.

Why? Because simple functionality is only simple when looked at under the microscope in the lab. In production it will be part of a sophisticated tool chain, and this is when things start to get real. Learning from industry leaders makes it easier to avoid mistakes. For example, carefully choose and qualify the next release for your equipment based on detailed bug scrubs, which is why up-to-date bug information is very important. Plan the release lifecycle in advance, so you have time to address any issues and recompile your custom tools in case there are major changes in libraries or APIs.

Due to the constant change in architecture at pretty much any hyperscaler, some of the data in this document is expected to no longer reflect the current state at the network operators mentioned as of June 2021.
