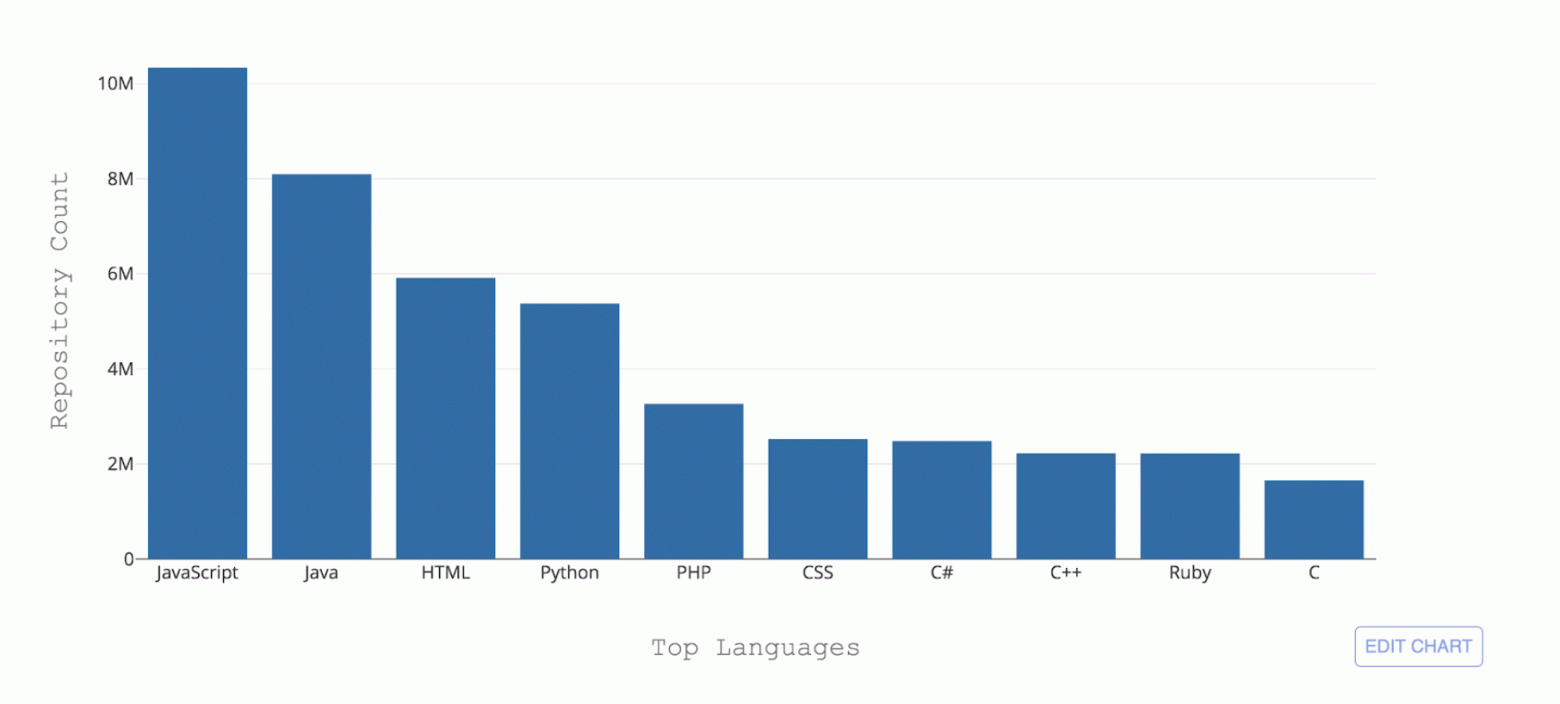

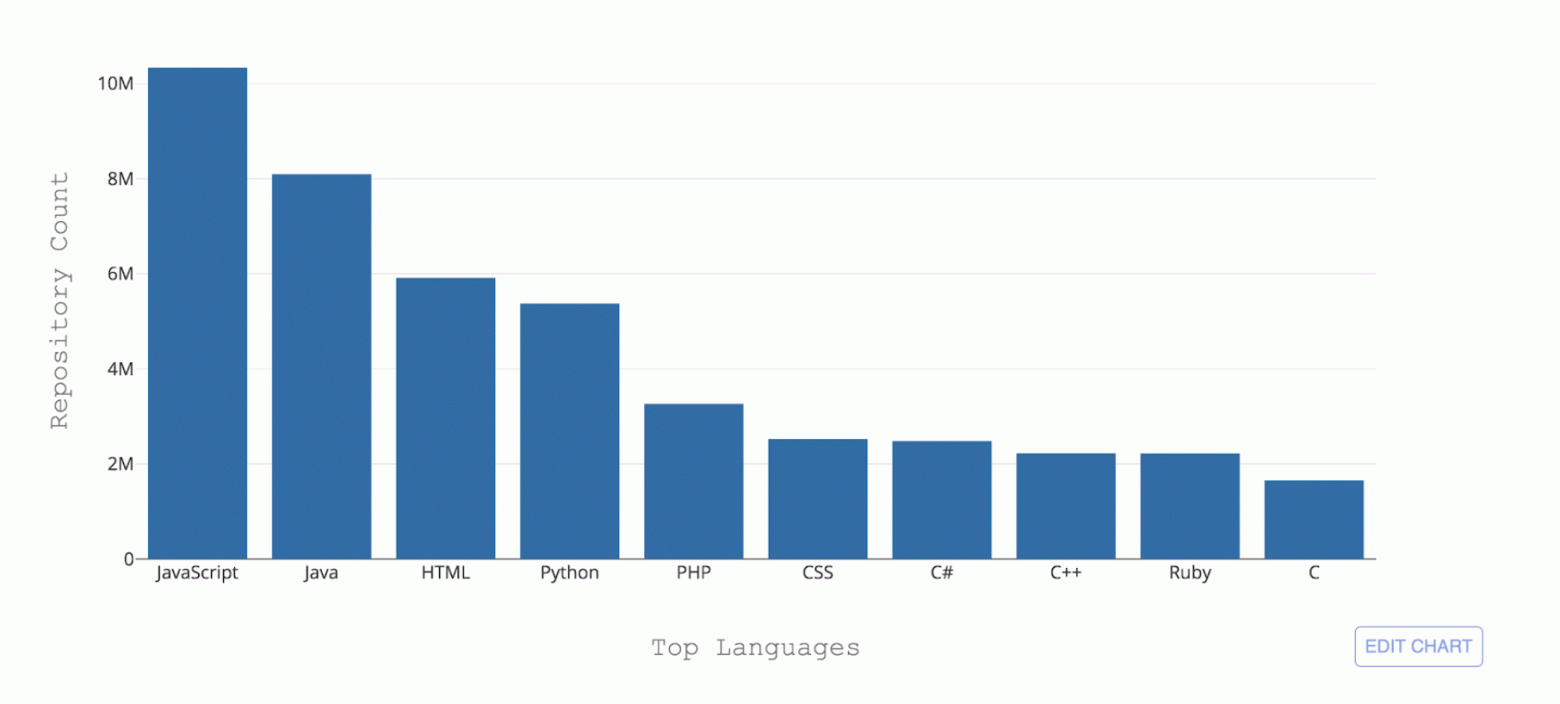

GitHub hosts over 300 programming languages, from commonly used languages such as Python, Java, and JavaScript to esoteric languages such as Befunge, known only to very small communities.

Figure 1: Top 10 programming languages hosted by GitHub by repository count

One of the necessary challenges that GitHub faces is to be able to recognize these different languages. When some code is pushed to a repository, it’s important to recognize the type of code that was added for the purposes of search, security vulnerability alerting, and syntax highlighting—and to show the repository’s content distribution to users.

Linguist is the tool we currently use to detect coding languages at GitHub. Linguist a Ruby-based application that uses various strategies for language detection, leveraging naming conventions and file extensions and also taking into account Vim or Emacs modelines, as well as the content at the top of the file (shebang). Linguist handles language disambiguation via heuristics and, failing that, via a Naive Bayes classifier trained on a small sample of data.

Although Linguist does a good job making file-level language predictions (84% accuracy), its performance declines considerably when files use unexpected naming conventions and, crucially, when a file extension is not provided. This renders Linguist unsuitable for content such as GitHub Gists or code snippets within README’s, issues, and pull requests.

In order to make language detection more robust and maintainable in the long run, we developed a machine learning classifier named OctoLingua based on an Artificial Neural Network (ANN) architecture which can handle language predictions in tricky scenarios. The current version of the model is able to make predictions for the top 50 languages hosted by GitHub and surpasses Linguist in accuracy and performance.

OctoLingua was built from scratch using Python, Keras with TensorFlow backend—and is built to be accurate, robust, and easy to maintain. In this section, we describe our data sources, model architecture, and performance benchmark for OctoLingua. We also describe what it takes to add support for a new language.

The current version of OctoLingua was trained on files retrieved from Rosetta Code and from a set of quality repositories internally crowdsourced. We limited our language set to the top 50 languages hosted on GitHub.

Rosetta Code was an excellent starter dataset as it contained source code for the same task expressed in different programming languages. For example, the task of generating a Fibonacci sequence is expressed in C, C++, CoffeeScript, D, Java, Julia, and more. However, the coverage across languages was not uniform where some languages only have a handful of files and some files were just too sparsely populated. Augmenting our training set with some additional sources was therefore necessary and substantially improved language coverage and performance.

Our process for adding a new language is now fully automated. We programmatically collect source code from public repositories on GitHub. We choose repositories that meet a minimum qualifying criteria such as having a minimum number of forks, covering the target language and covering specific file extensions. For this stage of data collection, we determine the primary language of a repository using the classification from Linguist.

Traditionally, for text classification problems with Neural Networks, memory-based architectures such as Recurrent Neural Networks (RNN) and Long Short Term Memory Networks (LSTM) are often employed. However, given that programming languages have differences in vocabulary, commenting style, file extensions, structure, libraries import style and other minor differences, we opted for a simpler approach that leverages all this information by extracting some relevant features in tabular form to be fed to our classifier. The features currently extracted are as follows:

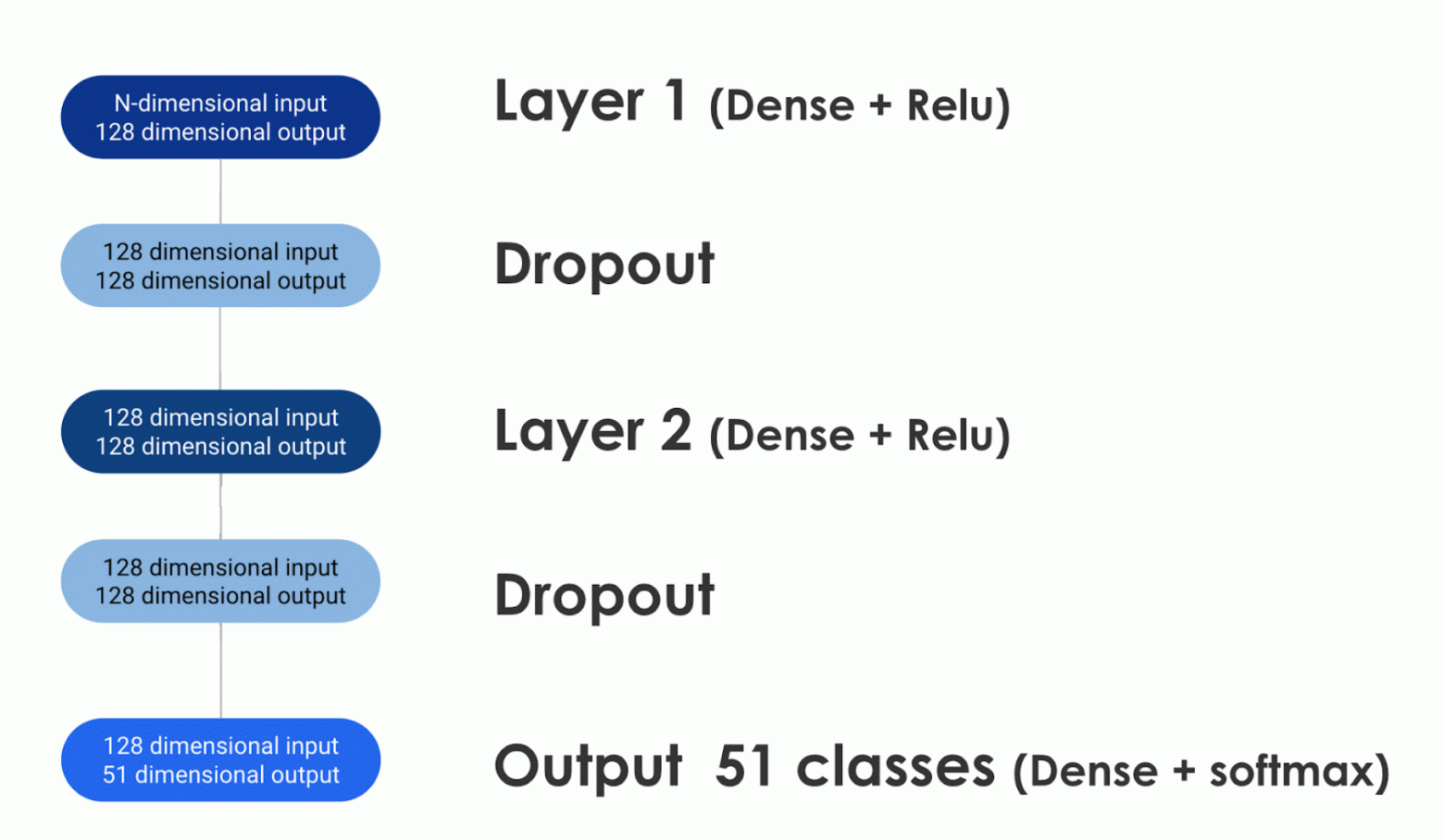

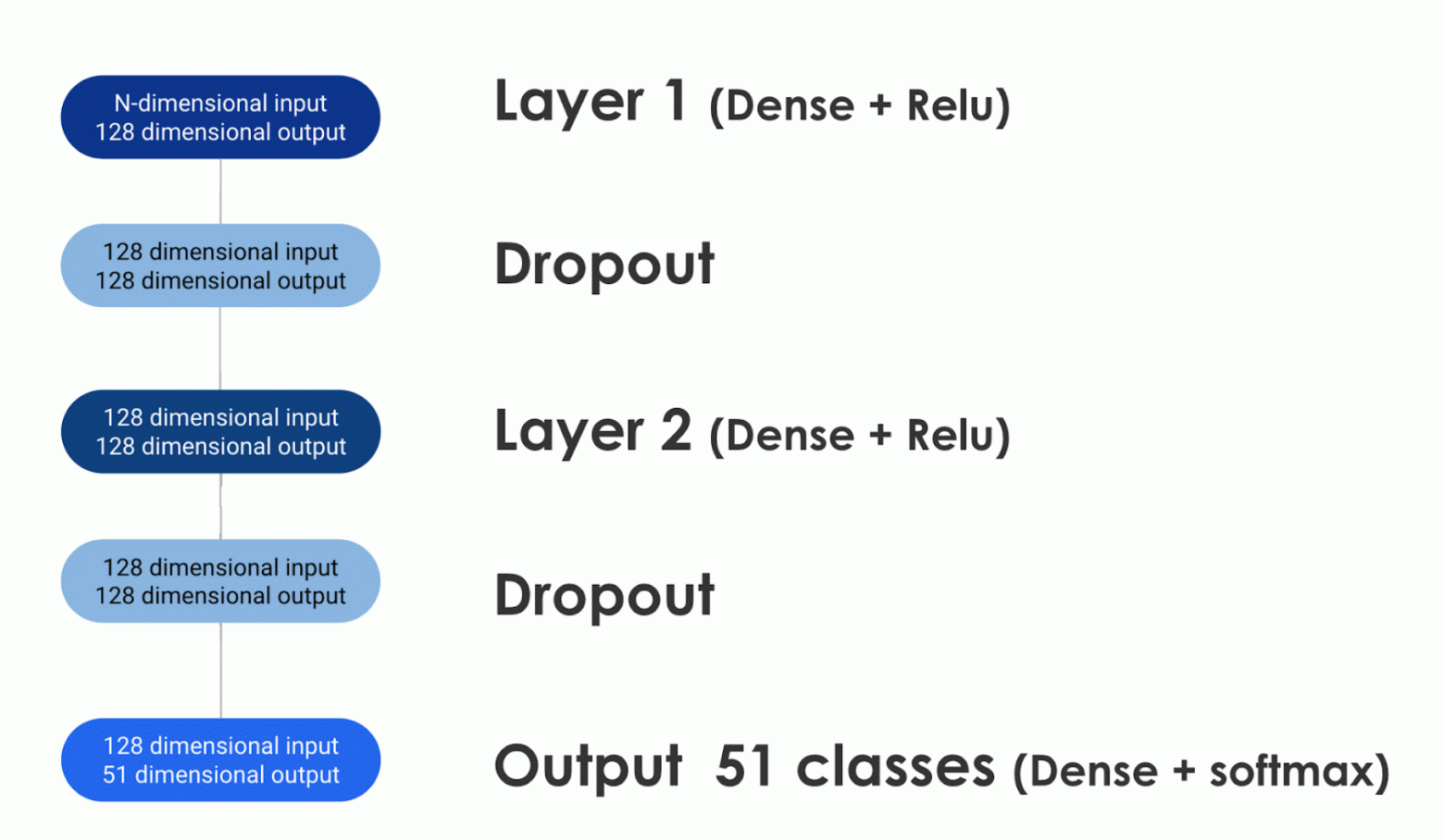

We use the above features as input to a two-layer Artificial Neural Network built using Keras with Tensorflow backend.

The diagram below shows that the feature extraction step produces an n-dimensional tabular input for our classifier. As the information moves along the layers of our network, it is regularized by dropout and ultimately produces a 51-dimensional output which represents the predicted probability that the given code is written in each of the top 50 GitHub languages plus the probability that it is not written in any of those.

Figure 2: The ANN Structure of our initial model (50 languages + 1 for «other»)

We used 90% of our dataset for training over approximately eight epochs. Additionally, we removed a percentage of file extensions from our training data at the training step, to encourage the model to learn from the vocabulary of the files, and not overfit on the file extension feature, which is highly predictive.

OctoLingua vs. Linguist

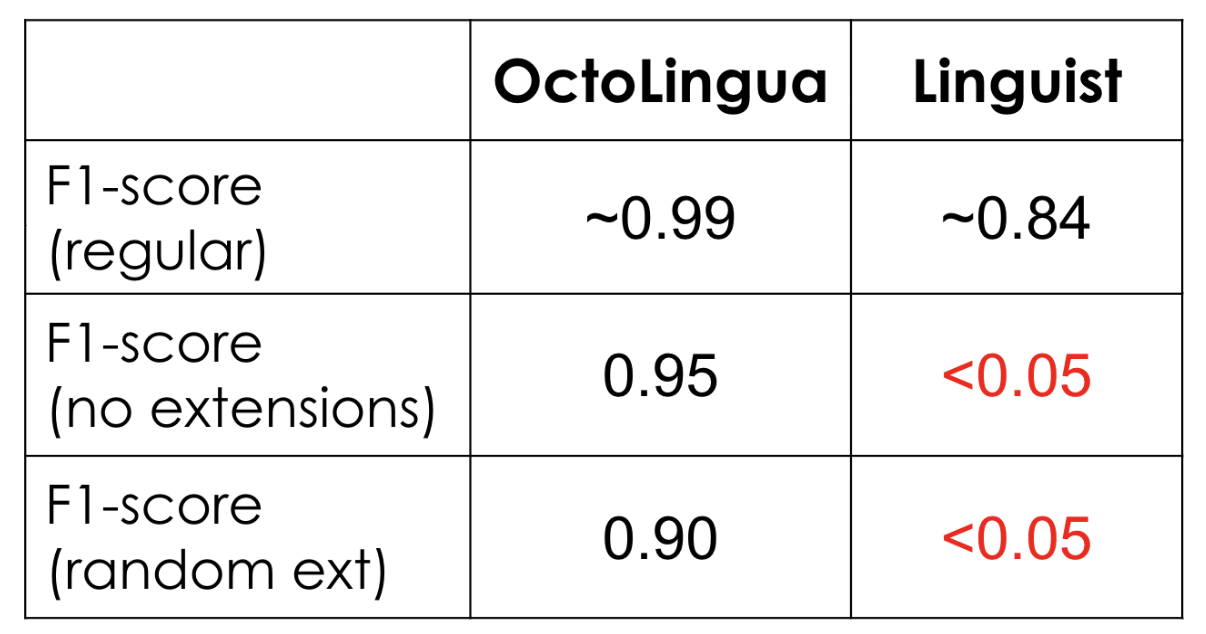

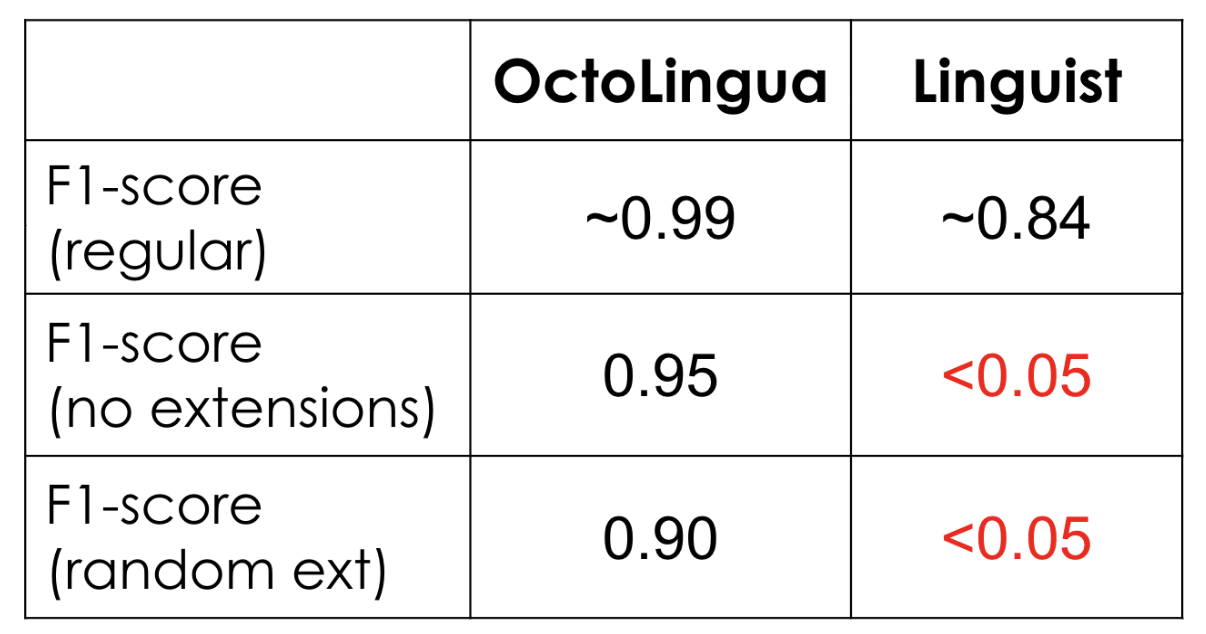

In Figure 3, we show the F1 Score (harmonic mean between precision and recall) of OctoLingua and Linguist calculated on the same test set (10% from our initial data source).

Here we show three tests. The first test is with the test set untouched in any way. The second test uses the same set of test files with file extension information removed and the third test also uses the same set of files but this time with file extensions scrambled so as to confuse the classifiers (e.g., a Java file may have a ".txt" extension and a Python file may have a ".java") extension.

The intuition behind scrambling or removing the file extensions in our test set is to assess the robustness of OctoLingua in classifying files when a key feature is removed or is misleading. A classifier that does not rely heavily on extension would be extremely useful to classify gists and snippets, since in those cases it is common for people not to provide accurate extension information (e.g., many code-related gists have a .txt extension).

The table below shows how OctoLingua maintains a good performance under various conditions, suggesting that the model learns primarily from the vocabulary of the code, rather than from meta information (i.e. file extension), whereas Linguist fails as soon as the information on file extensions is altered.

Figure 3: Performance of OctoLingua vs. Linguist on the same test set

Figure 3: Performance of OctoLingua vs. Linguist on the same test set

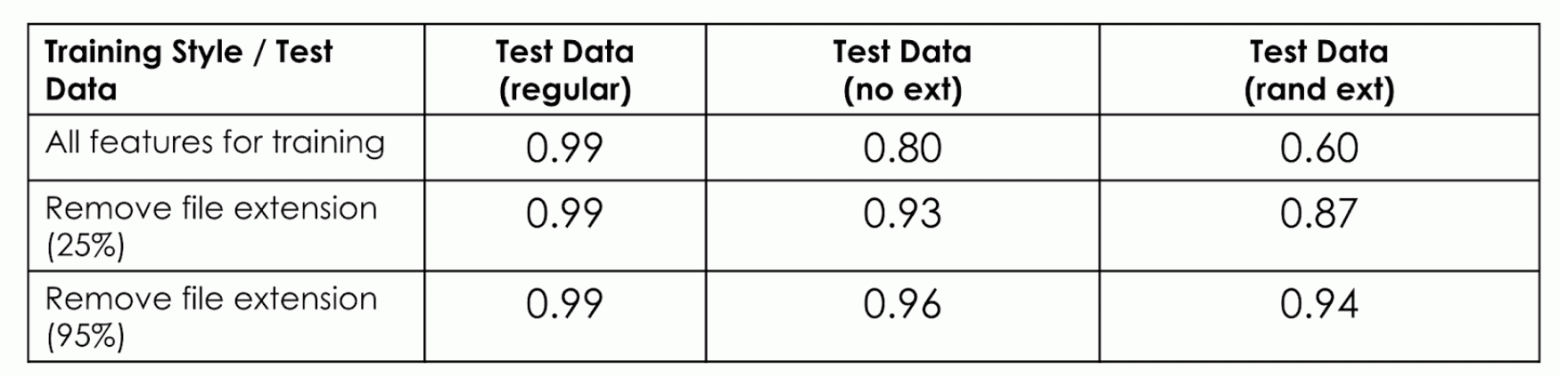

Effect of removing file extension during training time

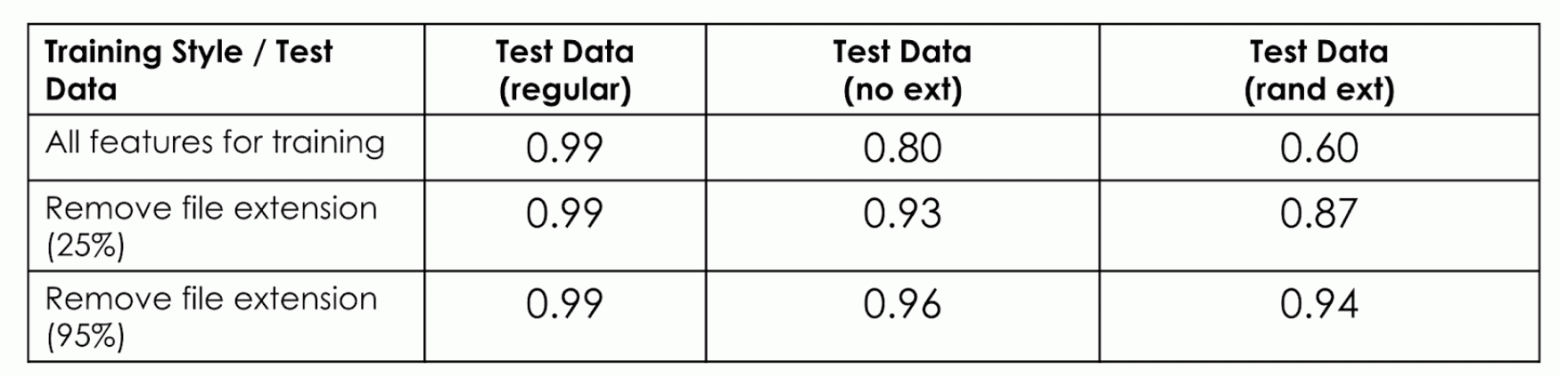

As mentioned earlier, during training time we removed a percentage of file extensions from our training data to encourage the model to learn from the vocabulary of the files. The table below shows the performance of our model with different fractions of file extensions removed during training time.

Figure 4: Performance of OctoLingua with different percentage of file extensions removed on our three test variations

Notice that with no file extension removed during training time, the performance of OctoLingua on test files with no extensions and randomized extensions decreases considerably from that on the regular test data. On the other hand, when the model is trained on a dataset where some file extensions are removed, the model performance does not decline much on the modified test set. This confirms that removing the file extension from a fraction of files at training time induces our classifier to learn more from the vocabulary. It also shows that the file extension feature, while highly predictive, had a tendency to dominate and prevented more weights from being assigned to the content features.

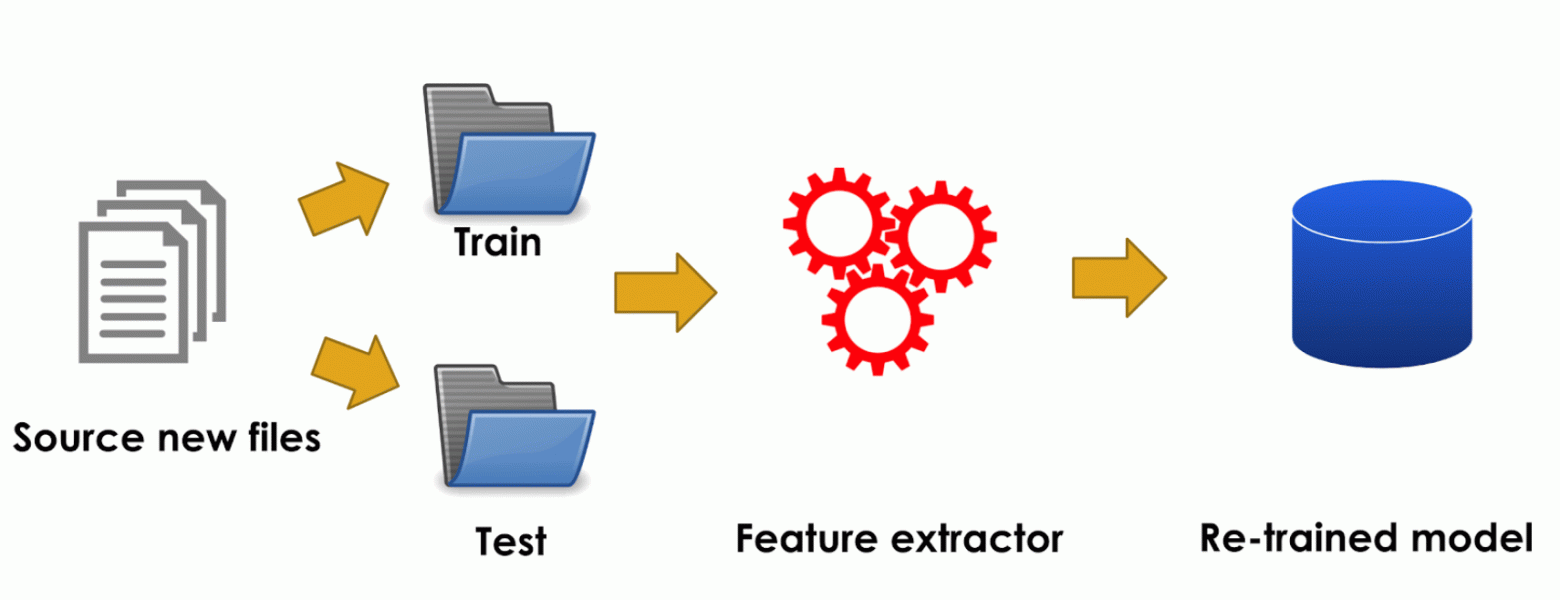

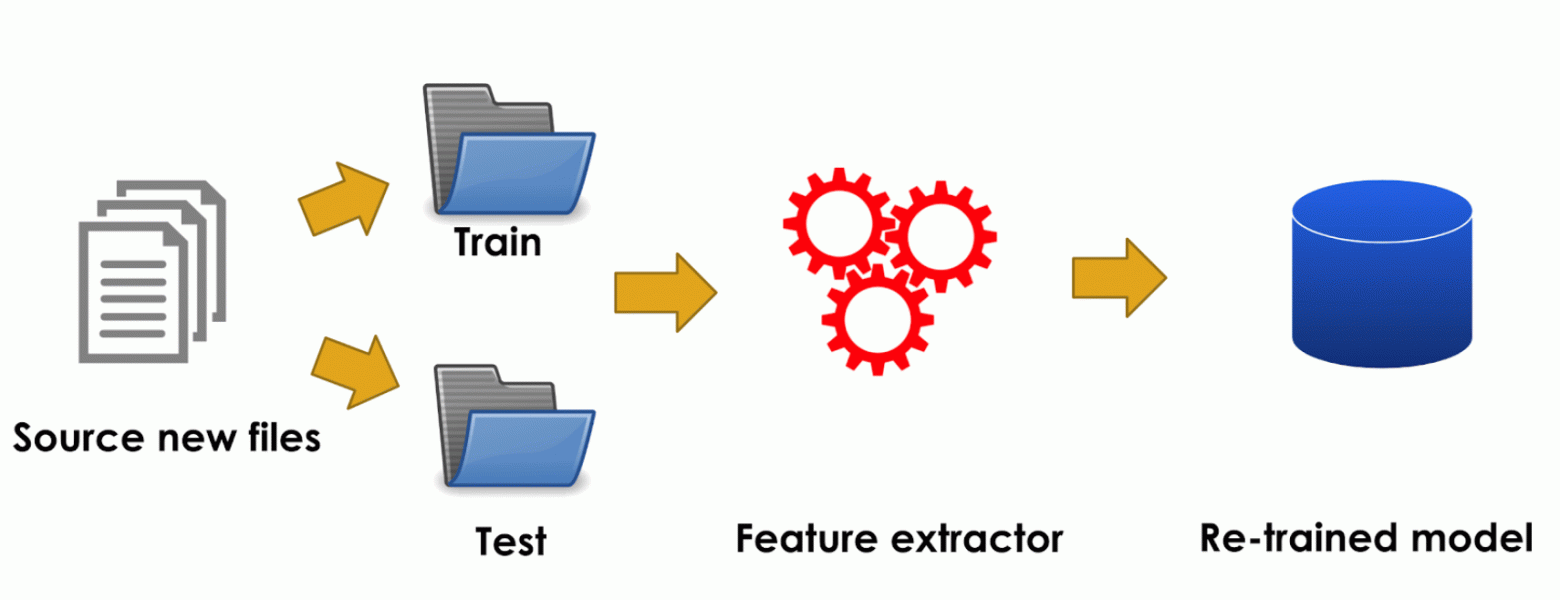

Adding a new language in OctoLingua is fairly straightforward. It starts with obtaining a bulk of files in the new language (we can do this programmatically as described in data sources). These files are split into a training and a test set and then run through our preprocessor and feature extractor. This new train and test set is added to our existing pool of training and testing data. The new testing set allows us to verify that the accuracy of our model remains acceptable.

Figure 5: Adding a new language with OctoLingua

Figure 5: Adding a new language with OctoLingua

As of now, OctoLingua is at the «advanced prototyping stage». Our language classification engine is already robust and reliable, but does not yet support all coding languages on our platform. Aside from broadening language support—which would be rather straightforward—we aim to enable language detection at various levels of granularity. Our current implementation already allows us, with a small modification to our machine learning engine, to classify code snippets. It wouldn’t be too far fetched to take the model to the stage where it can reliably detect and classify embedded languages.

We are also contemplating the possibility of open sourcing our model and would love to hear from the community if you’re interested.

With OctoLingua, our goal is to provide a service that enables robust and reliable source code language detection at multiple levels of granularity, from file level or snippet level to potentially line-level language detection and classification. Eventually, this service can support, among others, code searchability, code sharing, language highlighting, and diff rendering—all of this aimed at supporting developers in their day to day development work in addition to helping them write quality code. If you are interested in leveraging or contributing to our work, please feel free to get in touch on Twitter @github!

Figure 1: Top 10 programming languages hosted by GitHub by repository count

One challenge GitHub faces is recognizing these different languages. When code is pushed to a repository, it’s important to recognize the type of code that was added for the purposes of search, security vulnerability alerting, and syntax highlighting, and to show the repository’s content distribution to users.

Linguist is the tool we currently use to detect coding languages at GitHub. Linguist is a Ruby-based application that uses various strategies for language detection: it leverages naming conventions and file extensions, and also takes into account Vim or Emacs modelines as well as the content at the top of the file (shebang). Linguist handles language disambiguation via heuristics and, failing that, via a Naive Bayes classifier trained on a small sample of data.

Although Linguist does a good job making file-level language predictions (84% accuracy), its performance declines considerably when files use unexpected naming conventions and, crucially, when a file extension is not provided. This renders Linguist unsuitable for content such as GitHub Gists or code snippets within READMEs, issues, and pull requests.

To make language detection more robust and maintainable in the long run, we developed OctoLingua, a machine learning classifier based on an Artificial Neural Network (ANN) architecture that can handle language predictions in tricky scenarios. The current version of the model can make predictions for the top 50 languages hosted by GitHub and surpasses Linguist in accuracy and performance.

The Nuts and Bolts Behind OctoLingua

OctoLingua was built from scratch using Python and Keras with a TensorFlow backend, and is designed to be accurate, robust, and easy to maintain. In this section, we describe our data sources, model architecture, and performance benchmark for OctoLingua. We also describe what it takes to add support for a new language.

Data sources

The current version of OctoLingua was trained on files retrieved from Rosetta Code and from a set of quality repositories crowdsourced internally. We limited our language set to the top 50 languages hosted on GitHub.

Rosetta Code was an excellent starter dataset because it contains source code for the same task expressed in different programming languages. For example, the task of generating a Fibonacci sequence is expressed in C, C++, CoffeeScript, D, Java, Julia, and more. However, coverage across languages was not uniform: some languages had only a handful of files, and some files were too sparsely populated. Augmenting our training set with additional sources was therefore necessary, and it substantially improved language coverage and performance.

Our process for adding a new language is now fully automated. We programmatically collect source code from public repositories on GitHub, choosing repositories that meet minimum qualifying criteria such as having a minimum number of forks, covering the target language, and covering specific file extensions. For this stage of data collection, we determine the primary language of a repository using the classification from Linguist.

Features: leveraging prior knowledge

Traditionally, text classification problems with neural networks often employ memory-based architectures such as Recurrent Neural Networks (RNN) and Long Short-Term Memory networks (LSTM). However, given that programming languages differ in vocabulary, commenting style, file extensions, structure, library import style, and other minor ways, we opted for a simpler approach that leverages all this information by extracting relevant features in tabular form to be fed to our classifier. The features currently extracted are as follows:

- Top five special characters per file

- Top 20 tokens per file

- File extension

- Presence of certain special characters commonly used in source code files such as colons, curly braces, and semicolons
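As a rough illustration of the feature list above, here is a minimal sketch of a per-file feature extractor. It is not OctoLingua's actual code: the function name `extract_features`, the tokenization regexes, and the `FLAG_CHARS` set are all assumptions made for this example.

```python
import re
from collections import Counter
from pathlib import PurePath

# Hypothetical set of characters whose presence is recorded as a boolean flag.
FLAG_CHARS = (":", "{", "}", ";")


def extract_features(filename: str, source: str) -> dict:
    """Return one tabular-style feature row for a single file."""
    # Tokens: identifier-like runs (a letter or underscore, then letters, digits, underscores).
    tokens = re.findall(r"[A-Za-z_][A-Za-z0-9_]*", source)
    # Special characters: anything that is not a word character or whitespace.
    specials = re.findall(r"[^\w\s]", source)

    features = {
        "extension": PurePath(filename).suffix.lower(),  # "" when there is none
        "top_tokens": [t for t, _ in Counter(tokens).most_common(20)],
        "top_specials": [c for c, _ in Counter(specials).most_common(5)],
    }
    for ch in FLAG_CHARS:
        features[f"has_{ch}"] = ch in source
    return features


row = extract_features("hello.py", "def f(x):\n    return x + x\n")
```

In a real pipeline the token and character lists would still need to be encoded numerically (for example against a fixed vocabulary) before being fed to a classifier; that step is left out here.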

The Artificial Neural Network (ANN) model

We use the above features as input to a two-layer Artificial Neural Network built using Keras with a TensorFlow backend.

The diagram below shows that the feature extraction step produces an n-dimensional tabular input for our classifier. As the information moves along the layers of our network, it is regularized by dropout and ultimately produces a 51-dimensional output which represents the predicted probability that the given code is written in each of the top 50 GitHub languages plus the probability that it is not written in any of those.

Figure 2: The ANN structure of our initial model (50 languages + 1 for "other")
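To make the shape of the network concrete, the following framework-free sketch runs a forward pass through one hidden layer and one output layer with dropout and a softmax over 51 classes. It is only an illustration: the real model is built with Keras, and the layer sizes, ReLU activation, dropout rate, and random weights here are assumptions rather than OctoLingua's actual configuration.

```python
import math
import random


def dense(x, weights, biases):
    """Fully connected layer: one output per row of `weights`."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, biases)]


def relu(x):
    return [max(0.0, v) for v in x]


def dropout(x, rate, rng, training):
    """Inverted dropout: zero units with probability `rate`, during training only."""
    if not training or rate == 0.0:
        return list(x)
    keep = 1.0 - rate
    return [v / keep if rng.random() < keep else 0.0 for v in x]


def softmax(x):
    m = max(x)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in x]
    total = sum(exps)
    return [e / total for e in exps]


def init_layer(n_in, n_out, rng):
    weights = [[rng.gauss(0.0, 0.1) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


def forward(x, hidden, output, rng, training=False, rate=0.5):
    h = dropout(relu(dense(x, *hidden)), rate, rng, training)
    return softmax(dense(h, *output))


rng = random.Random(0)
N_FEATURES, N_HIDDEN, N_CLASSES = 100, 32, 51  # assumed sizes; 51 = 50 languages + "other"
hidden = init_layer(N_FEATURES, N_HIDDEN, rng)
output = init_layer(N_HIDDEN, N_CLASSES, rng)
probs = forward([rng.random() for _ in range(N_FEATURES)], hidden, output, rng)
```

The softmax output sums to one, so each of the 51 entries can be read as the predicted probability of one class, with the last slot reserved for "none of the 50 languages".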

We used 90% of our dataset for training over approximately eight epochs. Additionally, we removed a percentage of file extensions from our training data at the training step, to encourage the model to learn from the vocabulary of the files, and not overfit on the file extension feature, which is highly predictive.
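The split and the extension removal described above could be sketched as follows. The helper names `split_dataset` and `mask_extensions`, the `(filename, source, label)` tuple layout, and the seeds are assumptions for illustration; the post does not state which fraction of extensions was removed in the final model.

```python
import random
from pathlib import PurePath


def split_dataset(samples, train_fraction=0.9, seed=0):
    """Shuffle (filename, source, label) samples and split them into train and test lists."""
    rng = random.Random(seed)
    shuffled = list(samples)
    rng.shuffle(shuffled)
    cut = int(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]


def mask_extensions(samples, fraction, seed=0):
    """Strip the file extension from roughly `fraction` of the samples."""
    rng = random.Random(seed)
    masked = []
    for filename, source, label in samples:
        if rng.random() < fraction:
            filename = str(PurePath(filename).with_suffix(""))
        masked.append((filename, source, label))
    return masked


samples = [(f"file{i}.py", "print('hi')", "Python") for i in range(100)]
train, test = split_dataset(samples)
train = mask_extensions(train, fraction=0.5)  # 0.5 is an arbitrary example value
```

Masking is applied only to the training split here, so the untouched test split can still be used to build the evaluation variants.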

Performance benchmark

OctoLingua vs. Linguist

In Figure 3, we show the F1 score (the harmonic mean of precision and recall) of OctoLingua and Linguist calculated on the same test set (10% of our initial data source).
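For readers who want the metric spelled out, here is a small sketch that computes per-language F1 from true and predicted labels. The macro average across languages is an assumption made for this example; the post does not say how scores were aggregated across classes.

```python
def f1_per_class(y_true, y_pred):
    """Return {label: F1}, where F1 = 2 * precision * recall / (precision + recall)."""
    labels = sorted(set(y_true) | set(y_pred))
    scores = {}
    for label in labels:
        tp = sum(t == label and p == label for t, p in zip(y_true, y_pred))
        fp = sum(t != label and p == label for t, p in zip(y_true, y_pred))
        fn = sum(t == label and p != label for t, p in zip(y_true, y_pred))
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        denom = precision + recall
        scores[label] = 2 * precision * recall / denom if denom else 0.0
    return scores


def macro_f1(y_true, y_pred):
    """Unweighted mean of the per-class F1 scores."""
    scores = f1_per_class(y_true, y_pred)
    return sum(scores.values()) / len(scores)


y_true = ["Python", "Python", "Java", "Java"]
y_pred = ["Python", "Java", "Java", "Java"]
per_class = f1_per_class(y_true, y_pred)
overall = macro_f1(y_true, y_pred)
```

Because F1 is a harmonic mean, a classifier scores well only when both precision and recall are high for a language.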

We ran three tests on the same set of files. The first uses the test set untouched. The second removes all file extension information. The third scrambles the file extensions so as to confuse the classifiers (e.g., a Java file may have a ".txt" extension and a Python file may have a ".java" extension).

The intuition behind scrambling or removing the file extensions in our test set is to assess the robustness of OctoLingua in classifying files when a key feature is removed or is misleading. A classifier that does not rely heavily on extension would be extremely useful to classify gists and snippets, since in those cases it is common for people not to provide accurate extension information (e.g., many code-related gists have a .txt extension).
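The two modified test sets can be derived from the original file names along these lines. This is a sketch under assumptions: the helper names, the extra extensions added to the pool, and the seeded random choice are illustrative, not the procedure actually used for the benchmark.

```python
import random
from pathlib import PurePath


def remove_extensions(filenames):
    """Test variant 2: drop the extension from every file name."""
    return [str(PurePath(name).with_suffix("")) for name in filenames]


def scramble_extensions(filenames, extra=(".txt", ".dat"), seed=0):
    """Test variant 3: give every file an extension that differs from its own.

    The pool is every extension seen in `filenames` plus `extra`; keeping at
    least two entries in `extra` guarantees a different choice always exists.
    """
    rng = random.Random(seed)
    seen = {PurePath(n).suffix for n in filenames if PurePath(n).suffix}
    pool = sorted(seen | set(extra))
    scrambled = []
    for name in filenames:
        path = PurePath(name)
        choices = [ext for ext in pool if ext != path.suffix]
        scrambled.append(str(path.with_suffix(rng.choice(choices))))
    return scrambled


files = ["Main.java", "app.py", "lib.rs"]
no_ext = remove_extensions(files)       # ["Main", "app", "lib"]
misleading = scramble_extensions(files)
```

File contents stay identical across the three variants, so any change in score can be attributed to the extension feature alone.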

Figure 3 shows that OctoLingua maintains good performance under all three conditions, suggesting that the model learns primarily from the vocabulary of the code rather than from meta information (i.e., the file extension), whereas Linguist fails as soon as the file extension information is altered.

Figure 3: Performance of OctoLingua vs. Linguist on the same test set

Effect of removing file extension during training time

As mentioned earlier, during training time we removed a percentage of file extensions from our training data to encourage the model to learn from the vocabulary of the files. The table below shows the performance of our model with different fractions of file extensions removed during training time.

Figure 4: Performance of OctoLingua with different percentages of file extensions removed on our three test variations

Notice that when no file extensions are removed during training, the performance of OctoLingua on test files with no extensions and with randomized extensions drops considerably compared with the regular test data. On the other hand, when the model is trained on a dataset where some file extensions are removed, its performance does not decline much on the modified test sets. This confirms that removing the file extension from a fraction of files at training time induces our classifier to learn more from the vocabulary. It also shows that the file extension feature, while highly predictive, had a tendency to dominate and prevented more weight from being assigned to the content features.

Supporting a new language

Adding a new language to OctoLingua is fairly straightforward. It starts with obtaining a bulk of files in the new language (we can do this programmatically, as described in Data sources). These files are split into a training set and a test set and then run through our preprocessor and feature extractor. The new training and test sets are added to our existing pool of training and testing data. The new test set allows us to verify that the accuracy of our model remains acceptable.

Figure 5: Adding a new language with OctoLingua
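The workflow for a new language can be sketched roughly as below. Everything named here is hypothetical: `add_language`, `accuracy_by_label`, the 10% test fraction, and the `predict` callable stand in for the real preprocessor, feature extractor, and retrained model, which the post does not show.

```python
import random


def add_language(train_pool, test_pool, new_files, label, test_fraction=0.1, seed=0):
    """Split files for a new language and append them to the existing pools."""
    rng = random.Random(seed)
    files = list(new_files)
    rng.shuffle(files)
    n_test = max(1, int(len(files) * test_fraction))
    new_test = [(f, label) for f in files[:n_test]]
    new_train = [(f, label) for f in files[n_test:]]
    return train_pool + new_train, test_pool + new_test


def accuracy_by_label(test_pool, predict):
    """Per-language accuracy, to check that existing languages did not regress."""
    totals, hits = {}, {}
    for item, label in test_pool:
        totals[label] = totals.get(label, 0) + 1
        hits[label] = hits.get(label, 0) + (predict(item) == label)
    return {label: hits[label] / totals[label] for label in totals}


train, test = add_language(
    [("a.py", "Python")], [("b.py", "Python")],
    [f"f{i}.zig" for i in range(20)], "Zig",
)
```

After retraining on the enlarged training pool, comparing `accuracy_by_label` before and after would show whether the new language is recognized and whether accuracy on existing languages remains acceptable.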

Our plans

As of now, OctoLingua is at the "advanced prototyping stage". Our language classification engine is already robust and reliable, but does not yet support all coding languages on our platform. Aside from broadening language support, which would be rather straightforward, we aim to enable language detection at various levels of granularity. Our current implementation already allows us, with a small modification to our machine learning engine, to classify code snippets. It wouldn’t be too far-fetched to take the model to the stage where it can reliably detect and classify embedded languages.

We are also contemplating the possibility of open sourcing our model and would love to hear from the community if you’re interested.

Summary

With OctoLingua, our goal is to provide a service that enables robust and reliable source code language detection at multiple levels of granularity, from file level or snippet level to potentially line-level language detection and classification. Eventually, this service can support, among others, code searchability, code sharing, language highlighting, and diff rendering, all aimed at supporting developers in their day-to-day development work and helping them write quality code. If you are interested in leveraging or contributing to our work, please feel free to get in touch on Twitter @github!