Hello Habr! My name is Nikita Letov. I am a backend tech lead for remote banking services for individuals (the retail department) at Rosbank. This post is part of my series about backend microservice development with Java and Spring, and a text version of my presentation at JPoint 2023.

First, a disclaimer. This post is not a cookbook, and it doesn't provide a perfect solution to any business problem. It is a review of a single technology, which can help you solve a real problem when used correctly. Or it might not help; this depends on the exact problem you are solving.

If you can't wait to try everything by yourself, here you can find projects with all the source code, infrastructure, Gatling tests, and Grafana metrics:

If you need help with the project described here, feel free to comment or send a private message; I'll try to answer and help everyone. This time, we have a wonderful monitoring setup, completely based on Grafana OSS (Grafana, Loki, Tempo). I recommend it to everyone. Take a closer look: it may be a nice change from ELK/Graylog and Zipkin/Jaeger.

In this post, I will describe what an application entry point is, when it becomes necessary, and what tasks the API Gateway pattern solves. We'll review a classic blocking approach, using the Netflix Zuul 1.x gateway as an example, along with its problems. Then we'll dive into the reactive Spring Cloud Gateway and what makes switching to it complicated. In conclusion, we'll compare both approaches.

Let's start with the problem itself.

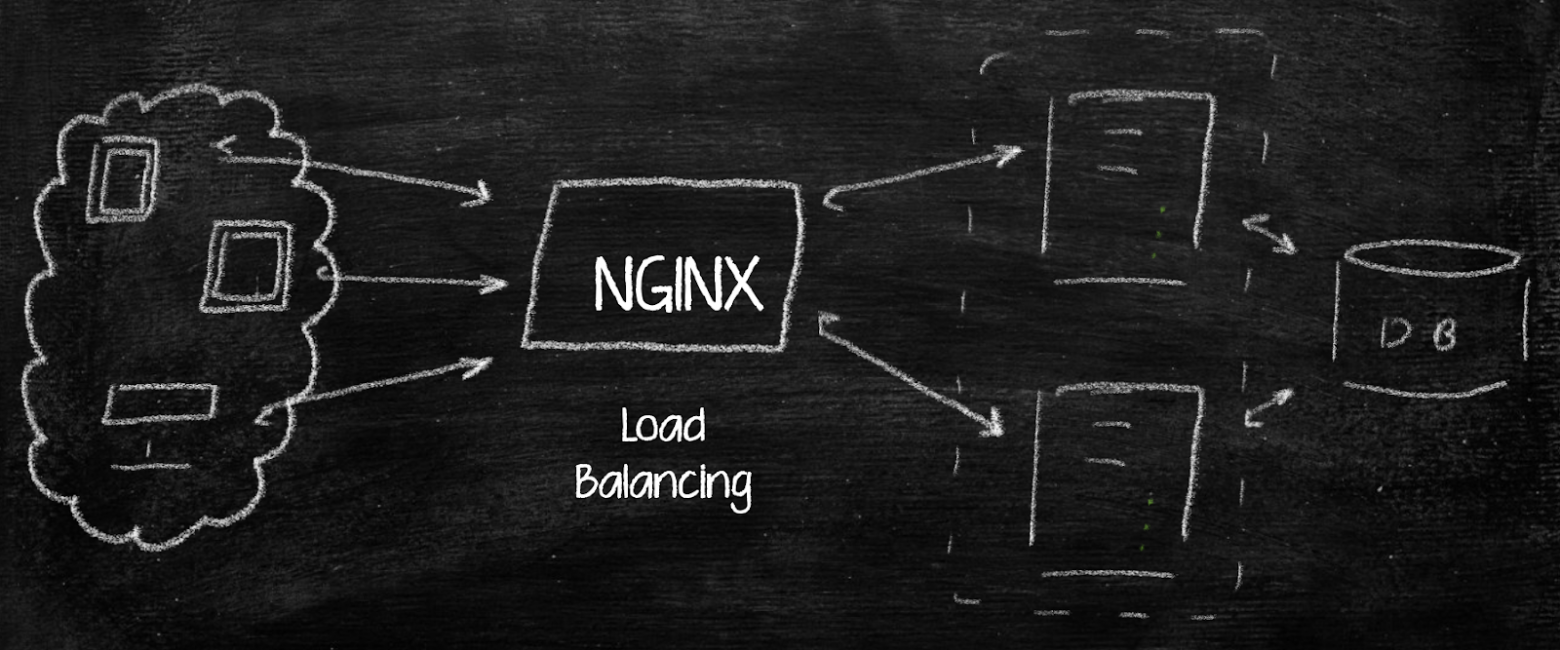

Once upon a time, our beloved bank began to develop remote banking services as a monolithic application. For load balancing and client distribution, nginx was used. Back then, the architecture was as simple as possible:

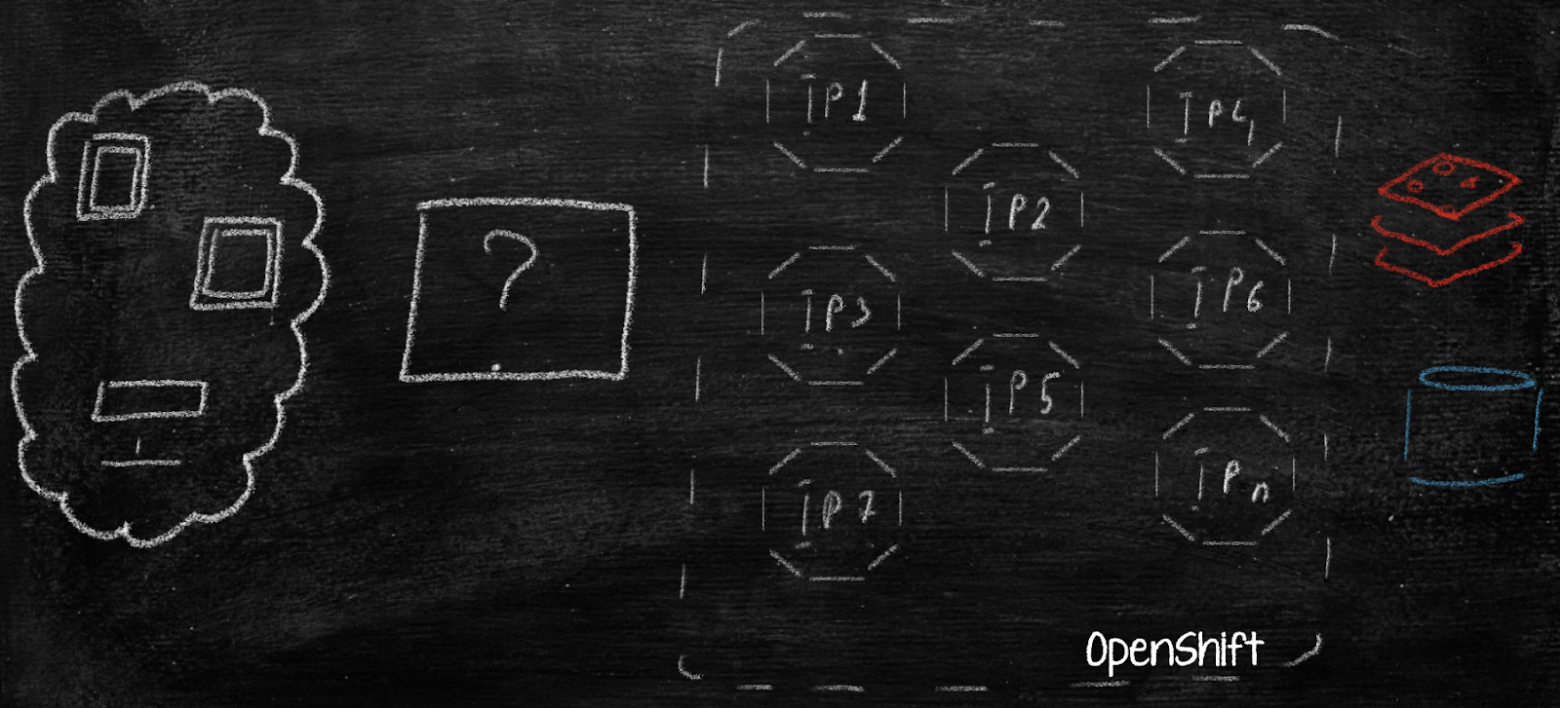

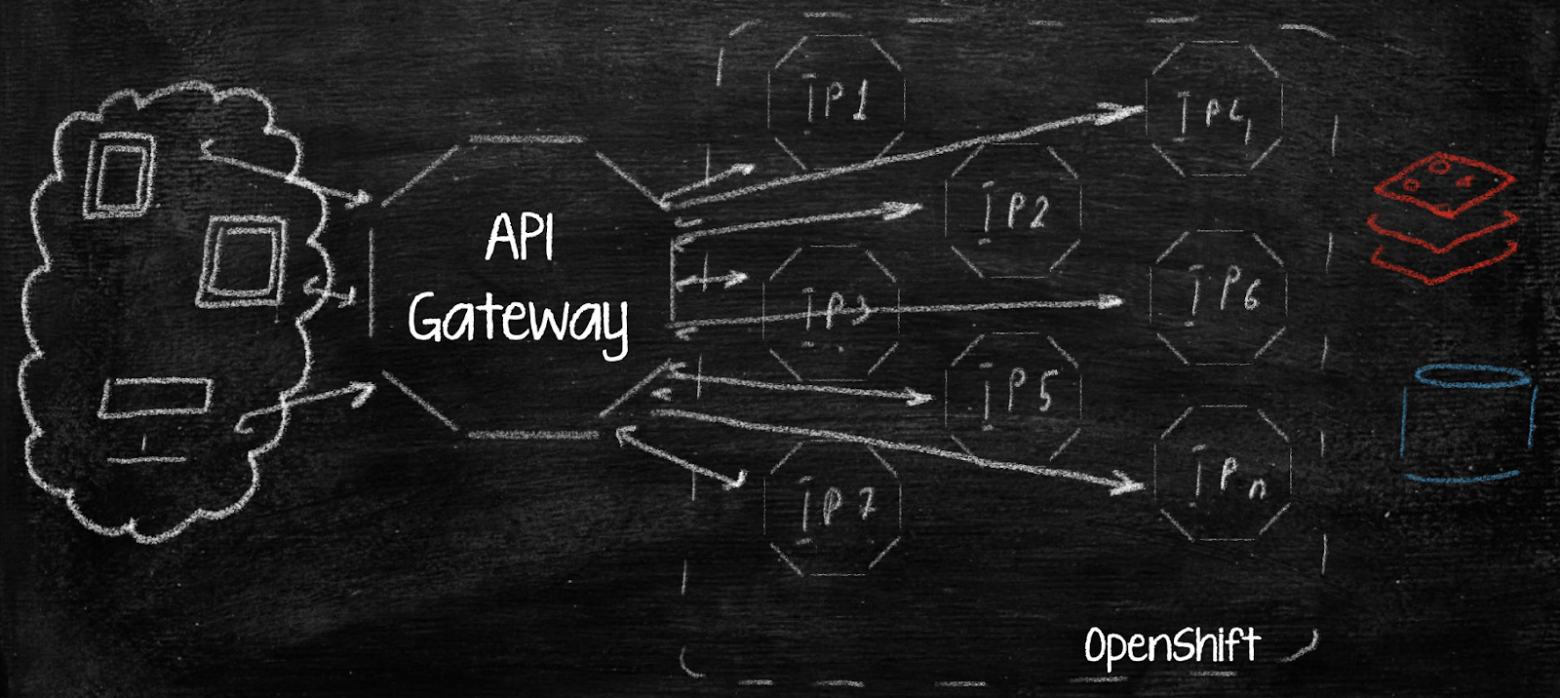

Later, the bank moved its banking application to trendy microservices. Seems ok, but how should we route clients here? Now we have a bunch of Deployments (DC) in OpenShift, each DC has a number of application instances (Pod), and each instance has its own IP. How can the client know the exact DC and pod to connect to?

In theory, we could create numerous configurations for nginx or another load balancer. Imagine how complex they would be and how hard to maintain! Or maybe we could place each microservice on its own subdomain? How would we balance the pods then? These options don't seem applicable, which is a relief.

The best answer here is already discovered: an edge microservice to handle client routing and more :) Here we’re approaching the API gateway pattern.

What is API gateway

To understand the concept, imagine an international airport terminal. For a flight to another country we don't just arrive and board the first plane we see. First, we need to complete check-in and get a boarding pass. Then we go through customs and passport control, where we get a stamp indicating our departure from the country. Then we head to our gate, go through passport and ticket checks, and finally, we board the plane.

The idea of an international airport terminal perfectly resembles the essence of API gateway:

Unification: a single interface for all services. You can fly to any country from the terminal.

Routing — redirecting requests to target services. You find out your gate and plane after checking in for your flight.

Validation — checking mandatory parameters: visa, passport control, etc.

Authorization and authentication. Your identity is verified to check if you have a right to fly away.

Traceability of requests/responses. We get stamps on departure from one country and arrival in another.

Standardization: removing prohibited items from the luggage. If we carry something that is not allowed for import, it gets confiscated by the authorities.

Enhancement of requests/responses — adding headers or cookies. We may buy something in duty-free shops to get prepared for our arrival.

Moreover, API gateway can do some extra tasks like providing metrics, managing throttling, and organizing fault tolerance.

Now let's list the tasks of API gateway in our bank:

Routing client requests to services. The gateway routes requests to different versions of services depending on the version of the client application.

Checking client authorization. The gateway acts as an OAuth 2.0 (OpenID) Resource Server, and the API services receive user information. This makes it easier to develop backend applications.

Tracing requests/responses: the gateway tags each request/response with a unique identifier. This enables fast tracing of a request, if a client has any problems with it.

Logging request and response parameters to understand potential problems more effectively. This really helps our support team.

Providing metrics to assess load and performance.

Enhancing requests with extra cookies, headers, or even replacing values in the request-response body.
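To make the first task in this list more concrete, here is a minimal plain-Java sketch of version-based routing. The header value format is borrowed from the `x-client-version` header used in the Gatling script later in this post; the `VersionRouter` class, the version thresholds, and the target URLs are assumptions for illustration only, not our production code.

```java
import java.util.Map;
import java.util.TreeMap;

// Hypothetical helper: picks a backend URL from the client application version.
final class VersionRouter {
    // Minimal supported major version -> backend base URL (illustrative values).
    private final TreeMap<Integer, String> backends = new TreeMap<>();

    VersionRouter(Map<Integer, String> backendsByMinMajorVersion) {
        if (backendsByMinMajorVersion.isEmpty()) {
            throw new IllegalArgumentException("at least one backend is required");
        }
        backends.putAll(backendsByMinMajorVersion);
    }

    // Resolves the backend for a header value such as "1.4.2".
    String resolve(String clientVersionHeader) {
        int major = parseMajor(clientVersionHeader);
        Map.Entry<Integer, String> entry = backends.floorEntry(major);
        // Clients older than every known threshold go to the oldest backend.
        return entry != null ? entry.getValue() : backends.firstEntry().getValue();
    }

    private static int parseMajor(String version) {
        if (version == null || version.isBlank()) {
            return 0; // missing header: treat as the oldest client
        }
        try {
            return Integer.parseInt(version.trim().split("\\.")[0]);
        } catch (NumberFormatException e) {
            return 0; // malformed header: treat as the oldest client
        }
    }
}
```

In a real gateway this decision would live inside a filter that rewrites the target URL; the sketch only isolates the lookup itself.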

The objectives and the approach are clear, but what about the solution? Should we choose something ready-made or develop it ourselves? The architects were inspired by their great new ideas, and the developers were ready to make it a success, so we decided to do it ourselves.

What to use as a basis wasn't much of a question. In 2017-2018, the leading solution for API gateways was Netflix Zuul, a part of the Spring Cloud Netflix project that provides dynamic routing, monitoring, security, and other cross-cutting capabilities for microservice applications. Hence, Zuul was the choice.

Zuul has excellent integration with Spring, a vast community, and a large knowledge base. It uses a traditional approach to development, and it can be found in many companies today. Zuul supports filters at different stages of a request: pre filters, post filters, and error filters. Most importantly, Zuul is a classic gateway based on the servlet stack; it uses Apache Tomcat and relies entirely on the thread-per-request paradigm (one request is one thread).

What is servlet context?

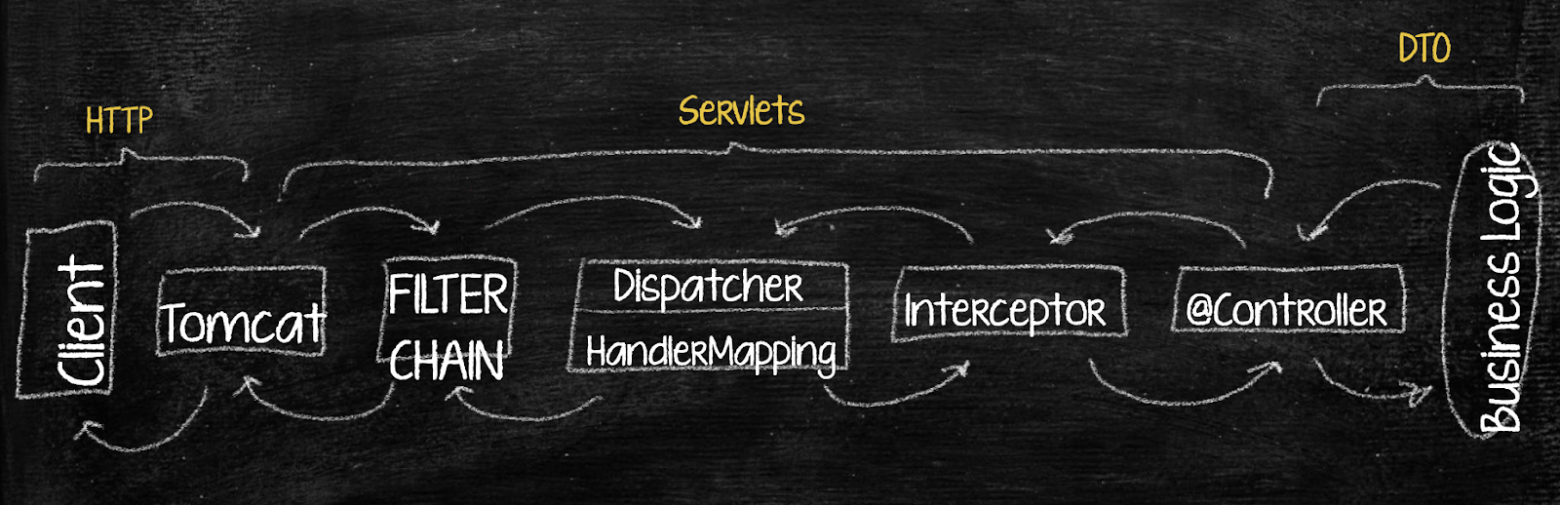

Since we're talking about servlets, let's recall what they are for those who have forgotten and explain them to those who are not familiar with them. The servlet context provides an environment for executing servlets within a servlet container, such as Apache Tomcat, as well as access to shared resources. When developing a Spring MVC application, we usually don't use ServletContext directly but work through Spring abstractions: controllers and annotations such as @RestController, @GetMapping, and @RequestParam. The fact that we are not working with an explicitly declared servlet doesn't mean it doesn't exist. The servlet request reaches every REST controller, and we can access it easily.

Essentially, servlets are objects created by a servlet container, Apache Tomcat. They match the HTTP request and the response from the server. For us, as Java Spring developers, the main objects are HttpServletRequest and HttpServletResponse.

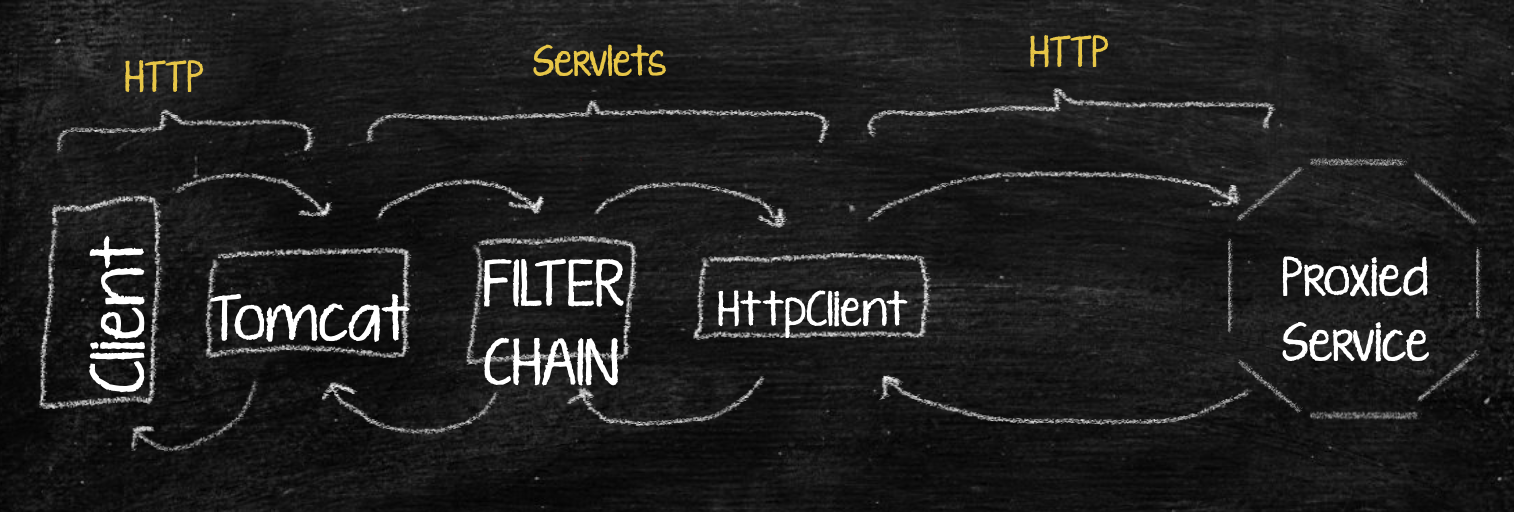

Here's how it works: the client sends a request to Tomcat. Tomcat creates the servlet request and response objects, which go down the entire chain of blocks in our Spring application: through a chain of filters and the dispatcher servlet, where the target controller is determined. An interceptor makes the last modifications and, finally, the request reaches the controller, where we extract the DTO declared in the method signature. Then the response goes back in reverse order.

Since we're discussing gateways, we are only interested in the first half of this route. The client sends a request to our Tomcat gateway, the request passes a chain of filters, and then it doesn't go to the dispatcher, but rather to the gateway's HttpClient, in order to be sent to the request destination service.

Zuul uses a thread-safe RequestContext to exchange information between filters. RequestContext is not directly linked to Zuul but is filled with data from the HttpServletRequest, HttpServletResponse, and ServletContext created by Tomcat. RequestContext lives within a single thread, and this is its top advantage! We can access it at any point, extract the entire request or response, and do whatever we want with them in the most transparent and understandable way.
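Why a context that "lives within a single thread" is reachable from any filter can be illustrated with a plain `ThreadLocal`. The class below is a simplified stand-in written for this post, not Zuul's actual RequestContext; the name `ThreadBoundContext` and the `traceId` key are hypothetical.

```java
import java.util.HashMap;
import java.util.Map;

// Simplified stand-in for a per-request context shared by filters on one thread.
final class ThreadBoundContext {
    private static final ThreadLocal<Map<String, Object>> CURRENT =
            ThreadLocal.withInitial(HashMap::new);

    static void set(String key, Object value) {
        CURRENT.get().put(key, value);
    }

    static Object get(String key) {
        return CURRENT.get().get(key);
    }

    // Must be called when the request is finished, because pooled threads are reused.
    static void clear() {
        CURRENT.remove();
    }

    // Writes and reads on the current thread, then reads from a different thread.
    static String[] demo() {
        set("traceId", "abc-123");                    // a first "filter" writes
        String sameThread = (String) get("traceId");  // a second "filter" reads
        String[] otherThread = new String[1];
        Thread t = new Thread(() -> otherThread[0] = (String) get("traceId"));
        t.start();
        try {
            t.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        clear();
        return new String[] {sameThread, otherThread[0]};
    }
}
```

The second thread sees nothing, which is exactly why this style of context only works while one request stays on one thread.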

How to organize filters

Let's dive deeper into filters. A filter is a component that processes incoming HTTP requests and outgoing responses before they reach the controllers or return to the client. Zuul is integrated with Spring Boot, so for processing requests we can use not only ZuulFilter, the basis of Zuul, but also Spring filters, javax.servlet.Filter, and the classes of the o.s.web.filter package, including GenericFilterBean, OncePerRequestFilter, and others.

Have a look at the following implementation of a ZuulFilter class. It has a type, an order, an enabling flag, and the logic that we need to define in the run method, for example, to access RequestContext, extract HttpServletResponse, and change something in it:

    // Assumes an SLF4J logger named `log` (for example, via Lombok's @Slf4j).
    public class SampleZuulFilter extends ZuulFilter {

        @Override
        public String filterType() {
            return FilterConstants.PRE_TYPE;
        }

        @Override
        public int filterOrder() {
            return FilterConstants.PRE_DECORATION_FILTER_ORDER + 1;
        }

        @Override
        public boolean shouldFilter() {
            return true;
        }

        @Override
        public Object run() {
            log.info("Logic executed here");
            return null;
        }
    }

This filter is somewhat different from a standard one: nothing is passed into a ZuulFilter. Spring and javax filters take ServletRequest and ServletResponse as inputs (objects with the necessary request and response data), as well as a FilterChain, the object required for passing the request and response on to the other filters.

    public class SampleFilter implements Filter {

        @Override
        public void doFilter(ServletRequest request, ServletResponse response, FilterChain chain)
                throws IOException, ServletException {
            try {
                log.info("Pre. filter logic executed!");
                chain.doFilter(request, response);
                log.info("Post. filter logic executed!");
            } catch (Exception ex) {
                log.warn("Filter will be skipped due to the exception", ex);
                chain.doFilter(request, response);
            }
        }
    }

In this example, the filter only logs. Pre-processing logic always goes before the call to chain.doFilter, the method that sends the request further along the chain. Everything after that call is post-processing. A common mistake in try/catch filter implementations is forgetting to call chain.doFilter in the catch block. In that case, the request stops at this filter and does not proceed further along the chain.

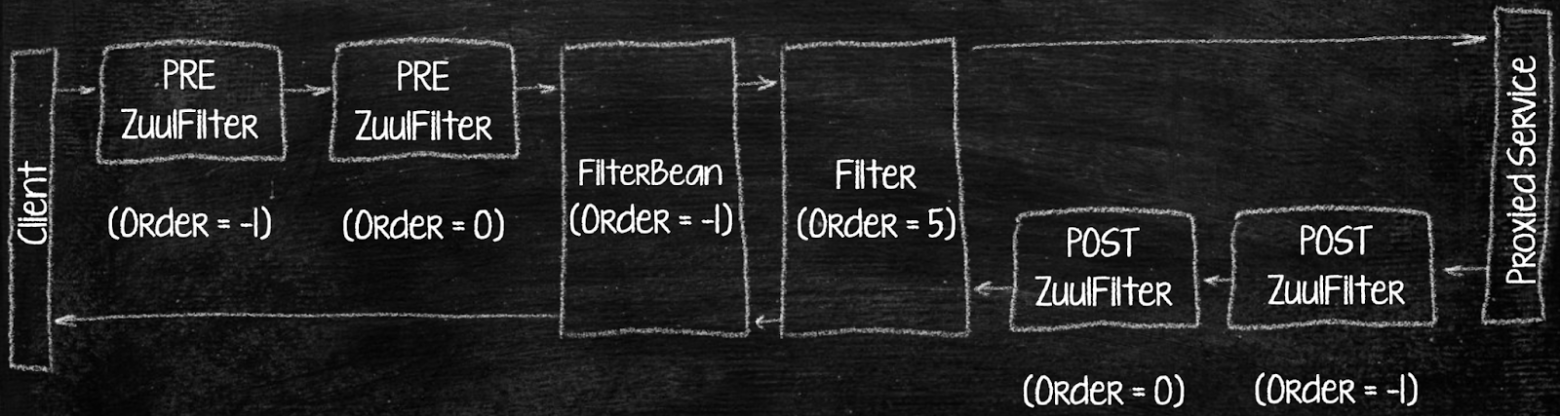

Filter run order (@Order)

Order is one of the most critical conditions for your filters! By changing or not specifying the order in one of your application's filters, you can significantly alter the logic of your service's operation, without even knowing it sometimes.

The most important property of the filter chain is its order. Pre-filters always run in ascending order, from the smallest value to the largest, from negative to positive. Post-filters in Zuul run after the proxied service has processed the request, and they also run from negative to positive, because each Zuul filter contains only one piece of logic.

With Spring and Javax/Jakarta filters, the situation is different. When a request goes to the proxied service, the order is standard, from negative to positive. But after the proxied service, when we already have the HttpServletResponse filled, the order gets reversed. As illustrated, the filter to process the request first will be the last to process the response.

If we have two filters of the same type with the same order, their filter execution order becomes unpredictable. This happens when the order of filters is not defined or defined incorrectly, for example, in logging.
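The "first in, last out" behavior of servlet-style filters can be reproduced with a toy chain in plain Java. This is a model of the idea only, not the javax/jakarta Filter API; the class and filter names are made up for the example.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

// Toy model of a servlet-style filter chain; not the javax/jakarta API itself.
final class OrderedChainDemo {
    interface Chain {
        void proceed();
    }

    static final class NamedFilter {
        final String name;
        final int order;

        NamedFilter(String name, int order) {
            this.name = name;
            this.order = order;
        }

        void doFilter(List<String> trace, Chain chain) {
            trace.add("pre " + name);   // request path
            chain.proceed();            // hand over to the next filter or the target
            trace.add("post " + name);  // response path
        }
    }

    // Sorts the filters by order (ascending) and records what runs when.
    static List<String> run(List<NamedFilter> filters) {
        List<NamedFilter> sorted = new ArrayList<>(filters);
        sorted.sort(Comparator.comparingInt(f -> f.order));
        List<String> trace = new ArrayList<>();
        invoke(sorted, 0, trace);
        return trace;
    }

    private static void invoke(List<NamedFilter> sorted, int index, List<String> trace) {
        if (index == sorted.size()) {
            trace.add("target"); // the proxied service would be called here
            return;
        }
        sorted.get(index).doFilter(trace, () -> invoke(sorted, index + 1, trace));
    }
}
```

With a filter of order -5 and another of order 10, the trace is: pre (-5), pre (10), target, post (10), post (-5).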

Service Discovery

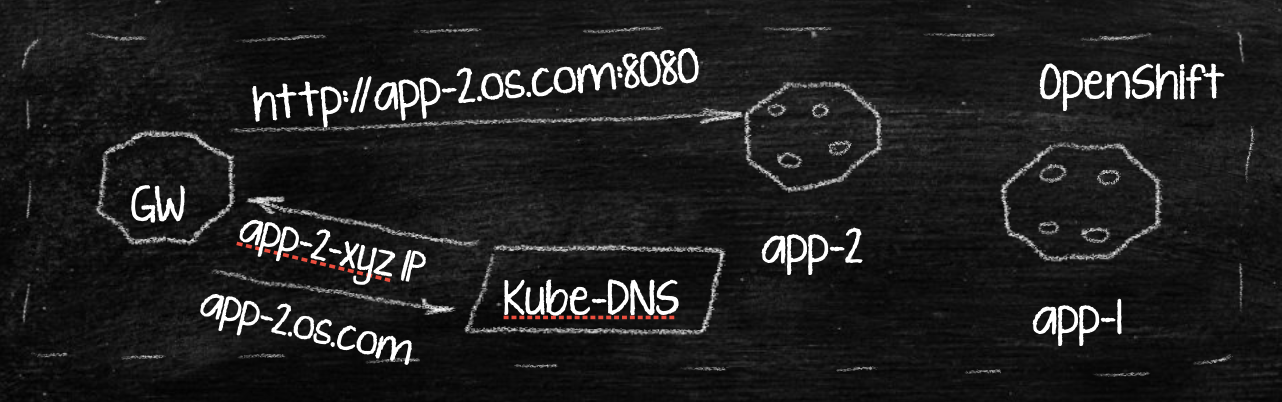

Our gateway must have Service Discovery for discovering services to send requests to. Netflix Eureka is often used for this. However, we decided not to use it and rely on the standard OpenShift tools based on Kube-DNS. Kube-DNS is a Kubernetes service for mapping the Deployment Config Name and the IP addresses of pods within a Deployment Config (DC). This approach allows us to achieve service discovery without using Netflix Eureka.

We access the service by its Deployment Config (DC) name, and then Kube-DNS returns the required pods, handles load balancing, and performs other essential tasks. There is no need to include Eureka or other dependencies.

Setup description

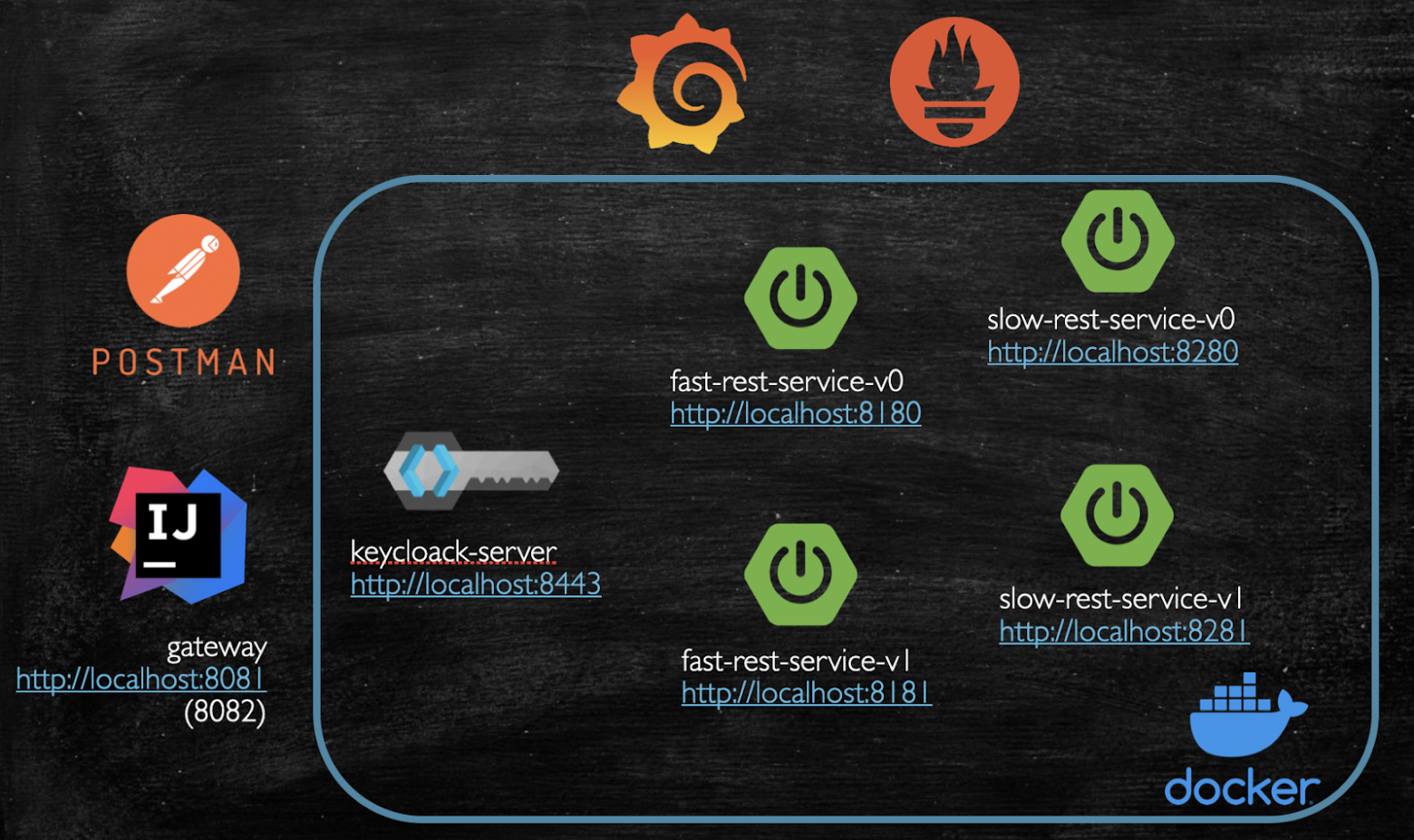

Before the demonstrations, let me describe our setup. We have four services running in Docker - two are fast, and two are slow (v0 with a 3s delay, v1 with a 6s delay). Additionally, there is an authentication service. We run the gateway itself from IDEA, and individual requests are processed by Postman. Metrics will be collected in Grafana with Prometheus. For load testing, we’ll use the Gatling toolkit.

Netflix Zuul

Zuul has some filters:

@Override

public int filterOrder() {

return 0;

}

@Override

public boolean shouldFilter() {

return true;

}

@Override

public Object run() throws ZuulException {

RequestContext ctx = RequestContext.getCurrentContext();

List<Pair<String, String>> zuulResponseHeaders = ctx.getZuulResponseHeaders();

if (zuulResponseHeaders != null) {

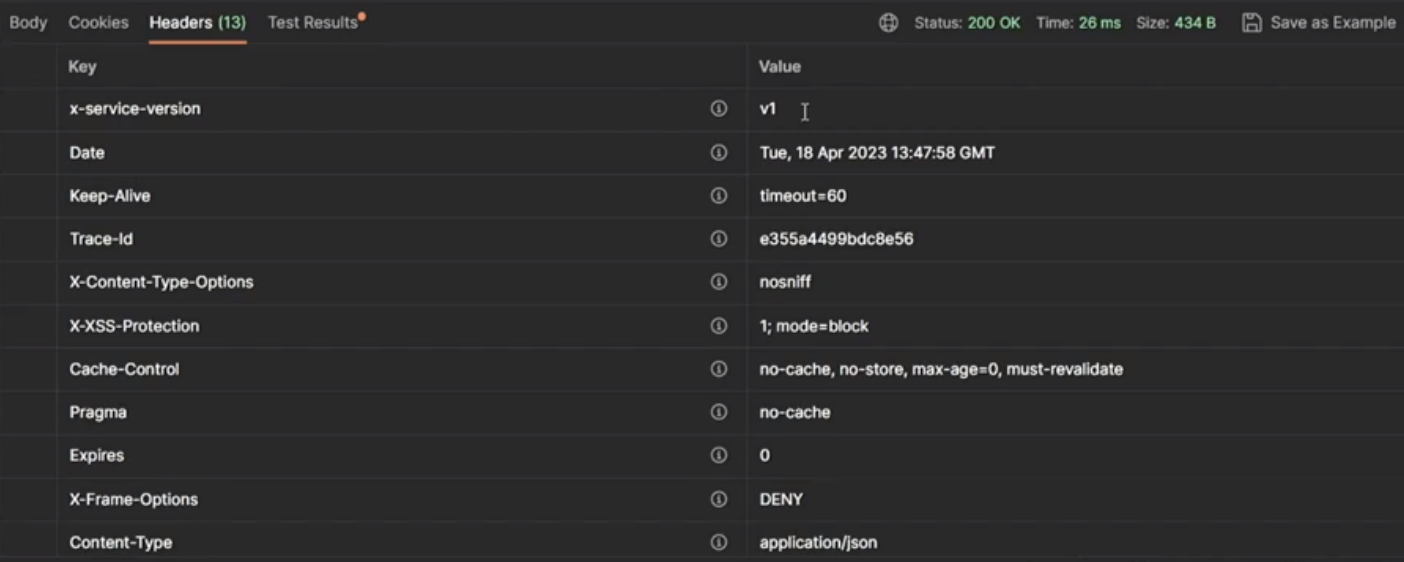

String traceId = tracer.currentSpan().context().traceIdString();

zuulResponseHeaders.add(new Pair<>("Trace-Id", traceId));

}

return null;

}

}And here are the routes:

zuul:

host:

connect-timeout-millis: 10000

socket-timeout-millis: 30000

routes:

auth:

path: /auth/**

stripPrefix: true

url: http://localhost:8443/realms/bank_realm/protocol/openid-connect/token

fast-rest-service:

path: /fast-service/**

stripPrefix: true

url: http://localhost

slow-rest-service-v0:

path: /slow-service/v0/**

stripPrefix: true

url: http://localhost:8280

slow-rest-service-v1:

path: /slow-service/v1/**

stripPrefix: true

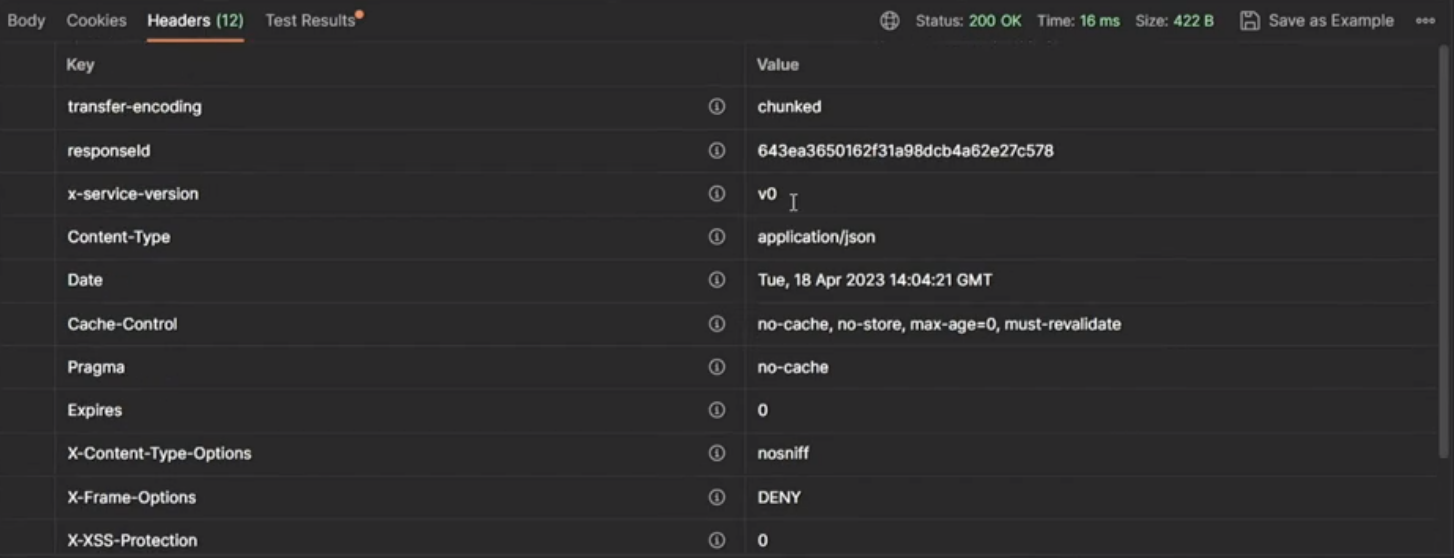

url: http://localhost:8281Let's start with individual test requests to see how our tracing filter and the filter for setting the version of the proxied service work:

Using the trace-id in Grafana, you can immediately trace the request and view its route. Logs for the request are also available.

The same will happen when accessing the slow services, but with the corresponding delay.

Now let's see how the gateway performs under load. Below is an example of a request in Gatling with authentication and a simple request to the service.

public class GatewayThrottle extends Simulation {

HttpProtocolBuilder httpProtocol =

http

// Here is the root for all relative URLs

.baseUrl("http://localhost:8081")

// Here are the common headers

.acceptHeader("*/*")

.acceptEncodingHeader("gzip, deflate, br")

.userAgentHeader(

"Mozilla/5.0 (Macintosh; Intel Mac OS X 10.8; rv:16.0) Gecko/20100101 Firefox/16.0");

// A scenario is a chain of requests and pauses

ScenarioBuilder scn = scenario("Scenario Name")

.exec(http("fastRequest")

.get("/fast-service/")

.header("x-client-version", "0.0.1")

.header("Authorization","Bearer eyJhbGciOiJSUzI1NiIsInR5cCIgOiAiSldUIiwia2lkIiA6ICJvTXlRTHB6TXJmWlJ2SkxXbGxZdXRuS1NHbFlHNE5fZ0J0SkIweHpLdHBBIn0.eyJleHAiOjE2ODE4NDY3MDYsImlhdCI6MTY4MTc2MzkwNiwianRpIjoiNGRhZDY3N2EtNWZhZi00MGRkLTljMzUtMTU2NTdkZDg4ZTNlIiwiaXNzIjoiaHR0cDovL2xvY2FsaG9zdDo4NDQzL3JlYWxtcy9iYW5rX3JlYWxtIiwiYXVkIjpbIm9hdXRoMi1yZXNvdXJjZSIsImFjY291bnQiXSwic3ViIjoiNzY5NzE3MDQtYjdkZC00OGY2LTkyNTEtY2U0ODI1NzAzODg2IiwidHlwIjoiQmVhcmVyIiwiYXpwIjoiamhvbl9kb2UiLCJzZXNzaW9uX3N0YXRlIjoiNWIwMmQ2ODgtMjRiMi00MDlkLWFlOTUtNTRiZTgwNmJhOTNhIiwiYWNyIjoiMSIsImFsbG93ZWQtb3JpZ2lucyI6WyIvKiJdLCJyZWFsbV9hY2Nlc3MiOnsicm9sZXMiOlsiZGVmYXVsdC1yb2xlcy1iYW5rX3JlYWxtIiwib2ZmbGluZV9hY2Nlc3MiLCJ1bWFfYXV0aG9yaXphdGlvbiJdfSwicmVzb3VyY2VfYWNjZXNzIjp7ImFjY291bnQiOnsicm9sZXMiOlsibWFuYWdlLWFjY291bnQiLCJtYW5hZ2UtYWNjb3VudC1saW5rcyIsInZpZXctcHJvZmlsZSJdfX0sInNjb3BlIjoicHJvZmlsZSByZWdpc3RyYXRpb24gYmFzaWMgYmFzaWNfcmVhZCBlbWFpbCIsInNpZCI6IjViMDJkNjg4LTI0YjItNDA5ZC1hZTk1LTU0YmU4MDZiYTkzYSIsImVtYWlsX3ZlcmlmaWVkIjpmYWxzZSwibmFtZSI6IkpvaG4gRG9lIiwicHJlZmVycmVkX3VzZXJuYW1lIjoiam9obmRvZSIsImdpdmVuX25hbWUiOiJKb2huIiwiZmFtaWx5X25hbWUiOiJEb2UifQ.nUqK2rXNvMCveGKrMylTsbp3aoh_8Q4IV-qa5KZIxvPVxZdlP82WR0ujx6nvbwS1Jx_zZL9afxOhxdeNt-1WDGS7oaVGHFfpIYCtnW0pcHX-_MHsRZlraVzKf56GHKfqIAC4S9S5oP-4kT-V2ryOMisQEBz4g2RefsvlK1pr4MbwY5OjxuppwAQiMepVkrj45NJpOuJ752dWmsR09HKv0Ti7-25gMoekoYU-YMLoz2yd-2kcO-mknbS_FA1skSw6d2NS3L93OpZJJkDOgRORDjkjOy04QmEe7rsiVjAb_jFlHj-B6fsr5kPQF-dDvvFQjUbZJYgl6-Wdi-DYjNPQZA"))

.exec(http("slowRequest")

.get("/slow-service/v0")

.header("Authorization","Bearer eyJhbGciOiJSUzI1NiIsInR5cCIgOiAiSldUIiwia2lkIiA6ICJvTXlRTHB6TXJmWlJ2SkxXbGxZdXRuS1NHbFlHNE5fZ0J0SkIweHpLdHBBIn0.eyJleHAiOjE2ODE4NDY3MDYsImlhdCI6MTY4MTc2MzkwNiwianRpIjoiNGRhZDY3N2EtNWZhZi00MGRkLTljMzUtMTU2NTdkZDg4ZTNlIiwiaXNzIjoiaHR0cDovL2xvY2FsaG9zdDo4NDQzL3JlYWxtcy9iYW5rX3JlYWxtIiwiYXVkIjpbIm9hdXRoMi1yZXNvdXJjZSIsImFjY291bnQiXSwic3ViIjoiNzY5NzE3MDQtYjdkZC00OGY2LTkyNTEtY2U0ODI1NzAzODg2IiwidHlwIjoiQmVhcmVyIiwiYXpwIjoiamhvbl9kb2UiLCJzZXNzaW9uX3N0YXRlIjoiNWIwMmQ2ODgtMjRiMi00MDlkLWFlOTUtNTRiZTgwNmJhOTNhIiwiYWNyIjoiMSIsImFsbG93ZWQtb3JpZ2lucyI6WyIvKiJdLCJyZWFsbV9hY2Nlc3MiOnsicm9sZXMiOlsiZGVmYXVsdC1yb2xlcy1iYW5rX3JlYWxtIiwib2ZmbGluZV9hY2Nlc3MiLCJ1bWFfYXV0aG9yaXphdGlvbiJdfSwicmVzb3VyY2VfYWNjZXNzIjp7ImFjY291bnQiOnsicm9sZXMiOlsibWFuYWdlLWFjY291bnQiLCJtYW5hZ2UtYWNjb3VudC1saW5rcyIsInZpZXctcHJvZmlsZSJdfX0sInNjb3BlIjoicHJvZmlsZSByZWdpc3RyYXRpb24gYmFzaWMgYmFzaWNfcmVhZCBlbWFpbCIsInNpZCI6IjViMDJkNjg4LTI0YjItNDA5ZC1hZTk1LTU0YmU4MDZiYTkzYSIsImVtYWlsX3ZlcmlmaWVkIjpmYWxzZSwibmFtZSI6IkpvaG4gRG9lIiwicHJlZmVycmVkX3VzZXJuYW1lIjoiam9obmRvZSIsImdpdmVuX25hbWUiOiJKb2huIiwiZmFtaWx5X25hbWUiOiJEb2UifQ.nUqK2rXNvMCveGKrMylTsbp3aoh_8Q4IV-qa5KZIxvPVxZdlP82WR0ujx6nvbwS1Jx_zZL9afxOhxdeNt-1WDGS7oaVGHFfpIYCtnW0pcHX-_MHsRZlraVzKf56GHKfqIAC4S9S5oP-4kT-V2ryOMisQEBz4g2RefsvlK1pr4MbwY5OjxuppwAQiMepVkrj45NJpOuJ752dWmsR09HKv0Ti7-25gMoekoYU-YMLoz2yd-2kcO-mknbS_FA1skSw6d2NS3L93OpZJJkDOgRORDjkjOy04QmEe7rsiVjAb_jFlHj-B6fsr5kPQF-dDvvFQjUbZJYgl6-Wdi-DYjNPQZA"));

{

setUp(scn.injectOpen(constantUsersPerSec(80).during(10)).protocols(httpProtocol));

}

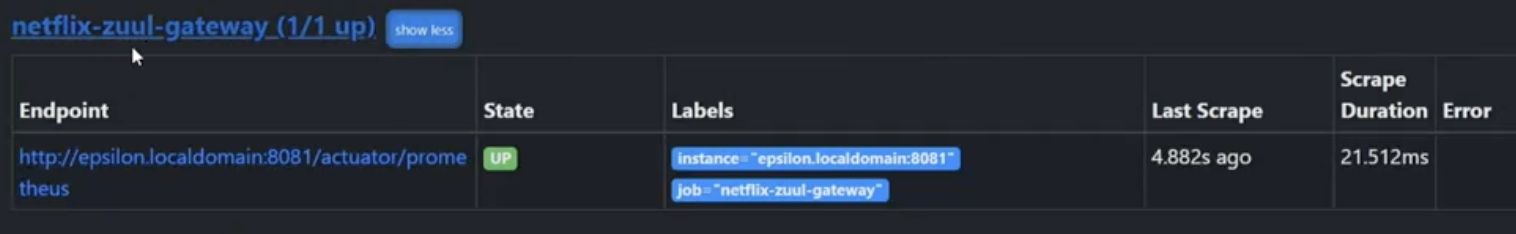

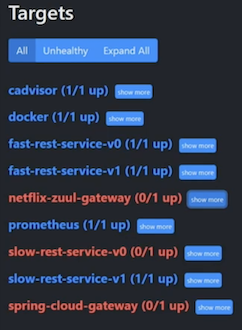

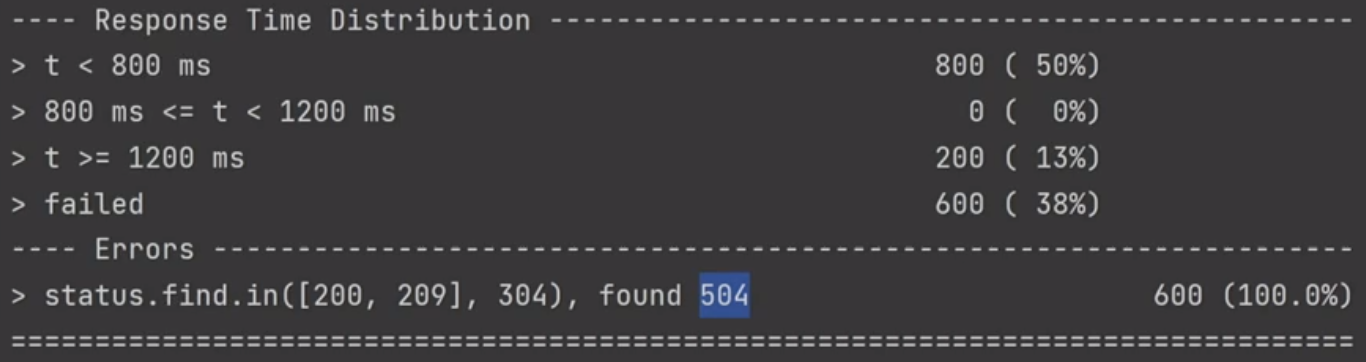

}Above, the client makes 80 requests per second to both the slow and fast services for 10 seconds. The requests are sent; let's check in Prometheus to see what's happening:

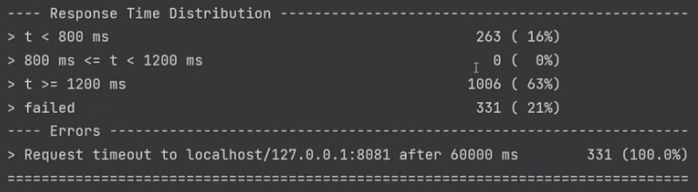

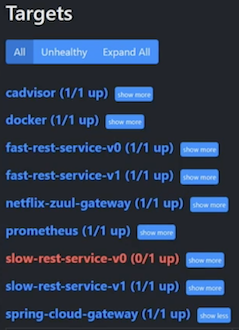

It's interesting that both the slow service and the gateway the requests go through went down. This means that the slow-responding service caused our gateway to fail. At the end of the test, which lasted much longer than the 10 seconds we expected, 331 requests failed. All the others were successful:

Why did the gateway fail?

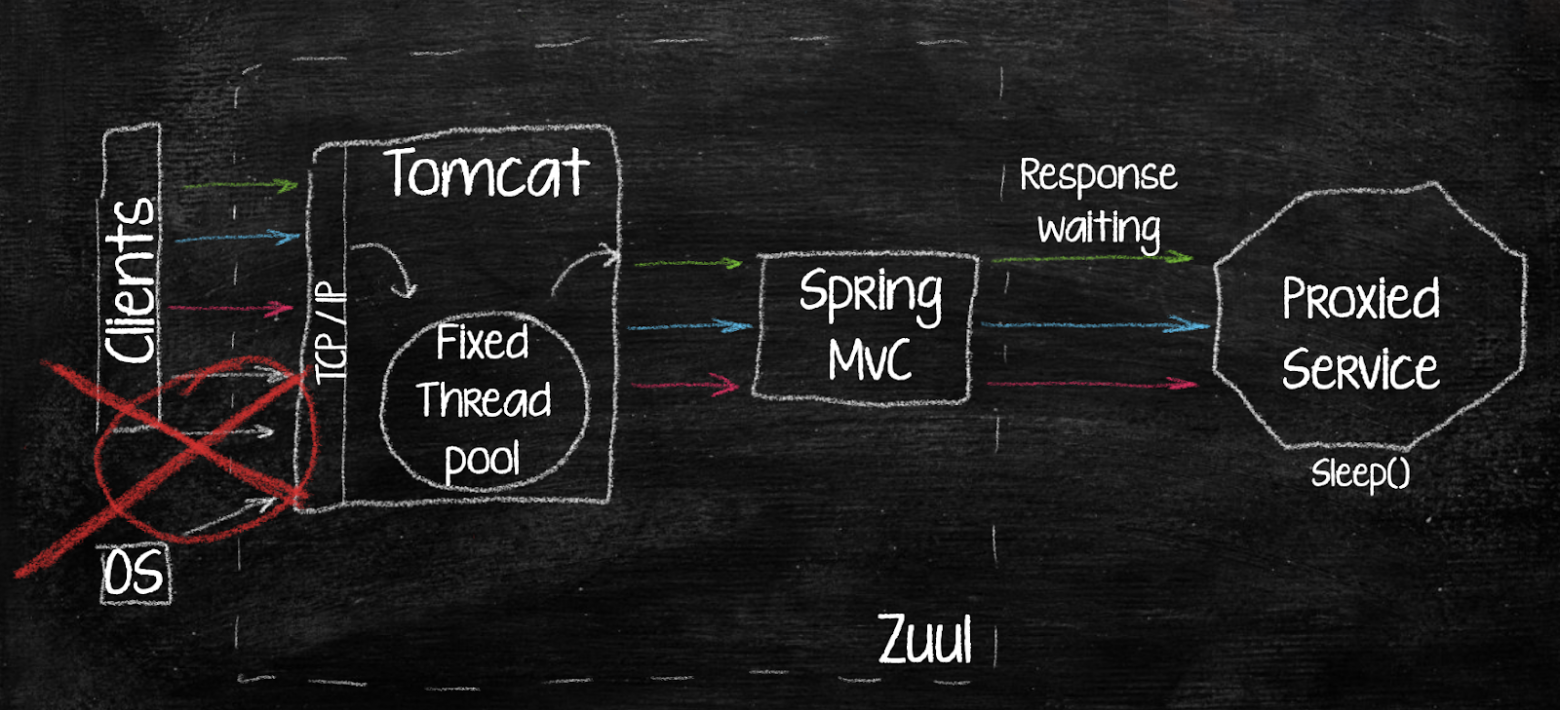

Tomcat, as a servlet container, operates with a bounded pool of worker threads. The pool size is set in advance (200 threads by default), and it's usually not recommended to go beyond that.

Let's analyze what happened:

A request arrives from client 1.

Tomcat allocates a thread for it, sends it to the gateway, and then to the proxied service via an HTTP client.

The gateway waits for a response from the proxied service for client 1.

Requests from client 2 and subsequently from client 3 (4, 5, 6, 7, and so on) arrive. In case of a slow-responding REST service, the same thing happens to them as with client 1—they all start waiting.

One by one, all Tomcat threads become occupied, and the remaining clients are left waiting at the TCP stack level. Whether they will get a thread or not depends on the connection timeout of the clients and the response time of the proxied service.
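The steps above can be reproduced in miniature with a fixed thread pool from the JDK. This is a toy model under stated assumptions: the pool stands in for Tomcat's worker threads, a latch stands in for a slow proxied service, and the class name and the pool size used below are illustrative.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;

// Toy model of a servlet container with a fixed worker pool.
final class PoolExhaustionDemo {

    // Returns true if a fast request was stuck while slow requests held all workers.
    static boolean fastRequestStarves(int workers) {
        ExecutorService pool = Executors.newFixedThreadPool(workers);
        CountDownLatch allBusy = new CountDownLatch(workers);
        CountDownLatch slowBackendAnswers = new CountDownLatch(1);
        try {
            for (int i = 0; i < workers; i++) {
                pool.submit(() -> {
                    allBusy.countDown();
                    slowBackendAnswers.await(); // worker blocked on a slow proxied call
                    return null;
                });
            }
            allBusy.await(); // every worker thread is now occupied

            Future<String> fast = pool.submit(() -> "fast response");
            boolean starved;
            try {
                fast.get(200, TimeUnit.MILLISECONDS);
                starved = false;
            } catch (TimeoutException e) {
                starved = true; // the fast request is still waiting in the queue
            }

            slowBackendAnswers.countDown(); // the slow backend finally responds
            fast.get(5, TimeUnit.SECONDS);  // only now can the fast request complete
            return starved;
        } catch (Exception e) {
            throw new IllegalStateException(e);
        } finally {
            pool.shutdownNow();
        }
    }
}
```

Calling `PoolExhaustionDemo.fastRequestStarves(4)` shows the effect: a request to a perfectly healthy fast service cannot even start, because every worker is parked waiting for the slow one.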

So, why did the gateway stop responding? In fact, it didn't crash but simply stopped answering Prometheus metric requests. If it had been running in OpenShift, the liveness probe would have failed (you do use liveness probes, right?), and the service would have been restarted. This would repeat again and again because of a single slow service under high load.

How do we solve the problem? The first option is horizontal scaling: increasing the number of gateway instances. By the way, this is how the problem was handled with Zuul before its second version. The second option is the Hystrix circuit breaker. However, it doesn't solve the whole problem, because it also runs on a blocking API. Moreover, Hystrix is marked as deprecated.

Optimization and reducing connection/response timeouts is the third and more reasonable option. Trimming the timeouts prevents the service crash even though some requests may fail.
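The two kinds of timeout mentioned here (connection and response) are separate settings in most HTTP clients. As a neutral illustration, this is how they look in the JDK's own `java.net.http` client; the helper class and the concrete durations are assumptions, and Zuul itself is configured through the `zuul.host.*` properties shown earlier rather than through this API.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.time.Duration;

// Illustrative helper showing where each timeout is set in the JDK HTTP client.
final class TimeoutConfigSketch {

    // Connection timeout: how long to wait for the TCP connection to be established.
    static HttpClient client(Duration connectTimeout) {
        return HttpClient.newBuilder()
                .connectTimeout(connectTimeout)
                .build();
    }

    // Response timeout: how long to wait for the response to this particular request.
    static HttpRequest request(String url, Duration responseTimeout) {
        return HttpRequest.newBuilder(URI.create(url))
                .timeout(responseTimeout)
                .GET()
                .build();
    }
}
```

A response timeout slightly above the slowest acceptable backend latency frees the worker thread early instead of letting it wait for the full default.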

These solutions might work, but in reality, they're just delaying a more significant problem. The number of clients will continue to grow, and you'll have to address the issue repeatedly. A more reliable approach is transitioning to a non-blocking API gateway.

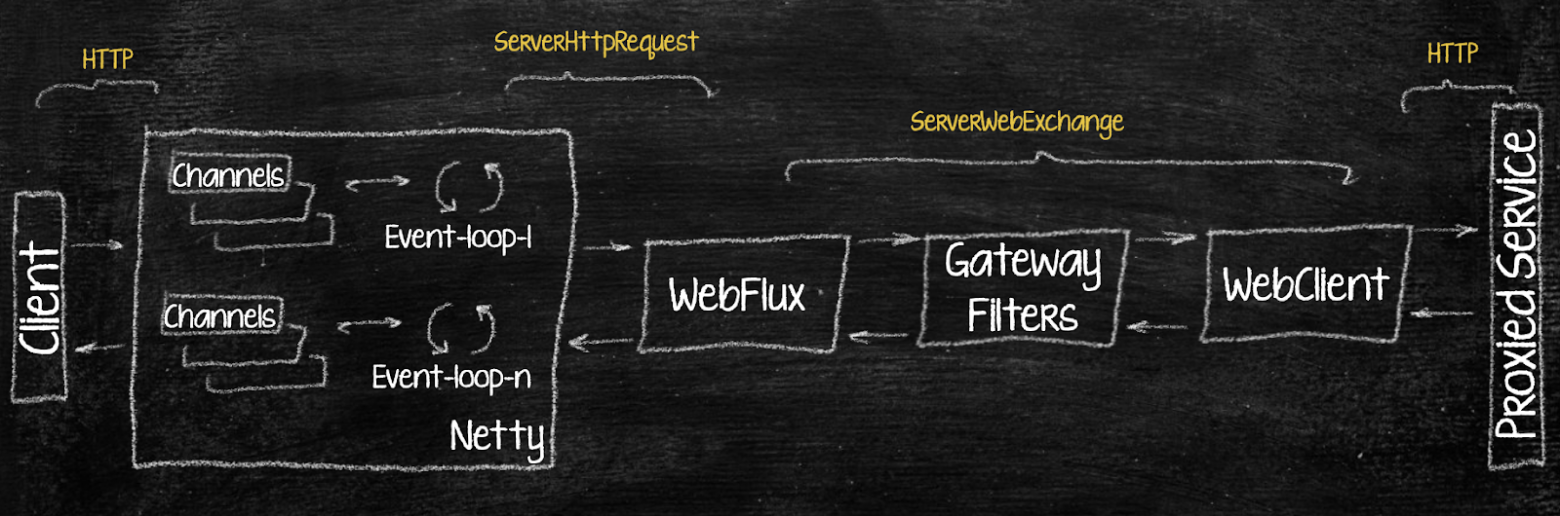

How a non-blocking API gateway works: an example on Spring Cloud Gateway

The non-blocking architecture operates outside the servlet context and runs on Netty, which Spring uses through Reactor Netty. Netty is an asynchronous framework that relies on event loops rather than on a thread per request. There may be just one thread here. One processor thread manages one event loop, which contains a bunch of channels that accept incoming TCP connections, decode HTTP requests (this process is fast), and pass them further.

Via HTTP, a request comes into a channel, from the channel into an event loop, and then into a WebFlux application (WebFlux is the reactive approach to writing Spring applications, based on Project Reactor) in the form of a ServerHttpRequest, a new object that replaces HttpServletRequest. This happens in a non-blocking manner: the event loop receives an event from the channel, processes it, creates a 'waiter' flag indicating that a response is expected, passes the request further down the chain, and is then ready to accept new requests again. WebFlux wraps the request into a ServerWebExchange, which contains both the request and the response, and passes it down the chain to WebClient, which plays the same role as HttpClient but is capable of asynchronous operation.

As a result, we have an equivalent to the servlet context, but it works in a non-blocking manner through reactive operators. Additionally, everything proceeds asynchronously, so it can all work on a single processor thread. Well, it sounds like a plan.

Reactive gateway and blocking APIs: is it legal after all?

We plan to use microservices with MVC and a gateway with Flux. Will there be any conflicts?

In reality, this won't limit us because Spring Cloud Gateway uses WebFlux WebClient, which provides asynchronous, non-blocking, and efficient response waiting with timeout support. When sending a request, WebClient creates a reactive stream that doesn't wait for a response from the proxied service. While we wait for a response, WebClient is released and doesn't block threads. The only potential load we might encounter is the number of open sockets on the gateway itself.
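The claim that waiting for a slow service does not hold a thread can be illustrated without Netty or WebClient at all. In the sketch below, a single JDK scheduler thread plays the role of the event loop, and a scheduled completion plays the role of the proxied service's delayed response; all names and numbers are assumptions made for the example.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;

// Toy model of a non-blocking proxy call; it does not use Netty or WebClient.
final class NonBlockingDemo {

    // Simulates a proxied service that answers after delayMillis without holding a thread.
    static CompletableFuture<String> callBackend(
            ScheduledExecutorService loop, int id, long delayMillis) {
        CompletableFuture<String> response = new CompletableFuture<>();
        loop.schedule(() -> response.complete("response-" + id),
                delayMillis, TimeUnit.MILLISECONDS);
        return response; // returned immediately; the thread is free for other requests
    }

    // Serves `requests` concurrent slow calls on ONE thread and returns elapsed millis.
    static long serveAll(int requests, long delayMillis) {
        ScheduledExecutorService loop = Executors.newSingleThreadScheduledExecutor();
        long start = System.nanoTime();
        try {
            List<CompletableFuture<String>> pending = new ArrayList<>();
            for (int i = 0; i < requests; i++) {
                pending.add(callBackend(loop, i, delayMillis));
            }
            CompletableFuture.allOf(pending.toArray(new CompletableFuture[0])).join();
            return TimeUnit.NANOSECONDS.toMillis(System.nanoTime() - start);
        } finally {
            loop.shutdownNow();
        }
    }
}
```

A hundred calls with a 200 ms simulated delay finish in roughly the time of one call, whereas a single blocking thread would need about 100 × 200 ms = 20 s.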

Spring Cloud Gateway

Spring Cloud Gateway is a project within the Spring Cloud ecosystem that provides an API Gateway solution for microservice architectures. It replaced Zuul at the end of 2018 but has not yet gained big popularity. Don't believe it? Check out StackOverflow, where people are still discussing good old Zuul 1.0 :)

Spring Cloud Gateway uses a reactive stack based on Project Reactor and Netty, ensuring high-performance, asynchronous, and non-blocking request processing. It supports global and route filters. "Out of the box" it includes 36 built-in filters that cover most of the business requirements for a gateway. You can activate these filters without writing any new code!

Transitioning to Spring Cloud Gateway is not as simple as it may seem. The development approach is different from the classic one. You'll need to rewrite almost all filter logic, not just update dependencies. Moreover, most dependencies from the Netflix Zuul era are now outdated, and you'll need to update them. For example, you'll need to replace o.s.cloud:spring-cloud-starter-sleuth with io.micrometer:micrometer-tracing, and o.springframework.security.oauth:* with o.s.boot:spring-boot-starter-security. After these changes, you'll also need to address new issues: tracing, logging, formats, and more.

To replace servlets, WebFlux is introduced, built on top of the reactive stack based on Project Reactor and Netty. Instead of working with RequestContext or ServletRequest/ServletResponse, you now interact with the WebFlux interface ServerWebExchange, which contains ServerHttpRequest, ServerHttpResponse, attributes, the session, and more.

Global Filters

There are global filters always used for all routes and route filters that can be applied to specific routes or all routes (via application.yml or Java config). You don't need to specify additional path details in the filters. You can simply attach the filter to a route and activate it. As I said above, there are already 36 filters at your disposal. All filters also have an order, and the illustration with the filter chain above is still applicable.

Here's an example of a global filter with basic logic, simple logging:

@Component

public class ExampleGlobalFilter implements GlobalFilter, Ordered {

@Override

public Mono<Void> filter(ServerWebExchange exchange, GatewayFilterChain chain) {

log.info("PRE Logic executed!");

return chain.filter(exchange)

.then(Mono.fromRunnable(() -> {

log.info("POST logic executed!");

}));

}

@Override

public int getOrder() {

return -1;

}

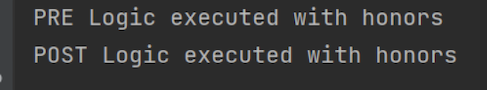

}Now we write filters in a reactive-functional style. We receive a ServerWebExchange with a chain as input. We write pre-logic and return chain.filter(exchange). This is already a reactive stream to which we can apply the reactive operator then. Therefore, to implement post-logic, we write then, fromRunnable or fromCallable, and the logic itself. It's also important to specify the order for a global filter at the end.
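The key difference from a servlet filter is that the post-logic is not "the code after the call" but a continuation attached to the result. The plain-Java analogy below uses `CompletableFuture` as a stand-in for `Mono<Void>` and `thenRun` as a stand-in for `then(Mono.fromRunnable(...))`; it is an analogy only, and the interfaces in it are hypothetical, not the Spring Cloud Gateway API.

```java
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.CopyOnWriteArrayList;

// Plain-Java analogy of a reactive filter; CompletableFuture stands in for Mono<Void>.
final class AsyncFilterSketch {
    interface Chain {
        CompletableFuture<Void> filter(List<String> log);
    }

    interface Filter {
        CompletableFuture<Void> filter(List<String> log, Chain chain);
    }

    static final Filter LOGGING = (log, chain) -> {
        log.add("PRE");                          // runs immediately, before proxying
        return chain.filter(log)
                .thenRun(() -> log.add("POST")); // runs only when the response is ready
    };

    static List<String> demo() {
        List<String> log = new CopyOnWriteArrayList<>();
        CompletableFuture<Void> backend = new CompletableFuture<>();
        Chain chain = l -> {
            l.add("proxied");
            return backend; // the response is not available yet
        };
        CompletableFuture<Void> result = LOGGING.filter(log, chain);
        log.add("filter method returned");       // POST has not run at this point
        backend.complete(null);                  // the proxied service responds
        result.join();
        return log;
    }
}
```

The filter method returns before the post-logic runs, which is why nothing in it may rely on blocking or on thread-local state.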

Routing Filters

Route filters are implemented in a different way: they extend AbstractGatewayFilterFactory.

    @Component
    public class ExampleLoggerGatewayFilterFactory extends AbstractGatewayFilterFactory<Object> {

        @Override
        public GatewayFilter apply(Object config) {
            return (exchange, chain) -> {
                log.info("PRE Logic executed");
                return chain.filter(exchange)
                        .then(Mono.fromRunnable(() -> {
                            log.info("POST Logic executed");
                        }));
            };
        }
    }

The filter's name is determined by the words before GatewayFilterFactory, and you will specify it in the configuration. The apply method returns a GatewayFilter. It is written in a functional style, as a lambda that takes an exchange and a chain. Here, you define pre- and post-logic, with just a few differences from global filters.

Routing Filters with a configuration

These filters can accept parameters. Everything is the same here, except that you add a static Config class that holds the parameters.

    @Component
    public class ExampleLoggerGatewayFilterFactory
            extends AbstractGatewayFilterFactory<ExampleLoggerGatewayFilterFactory.Config> {

        public ExampleLoggerGatewayFilterFactory() {
            super(Config.class); // tells the gateway which class to bind arguments to
        }

        @Override
        public List<String> shortcutFieldOrder() {
            // Maps the shortcut form "ExampleLogger=value" to the "param" field
            return List.of("param");
        }

        @Override
        public GatewayFilter apply(Config config) {
            return new OrderedGatewayFilter((exchange, chain) -> {
                log.info("PRE Logic executed with {}", config.getParam());
                return chain.filter(exchange).then(Mono.fromRunnable(() -> {
                    log.info("POST Logic executed with {}", config.getParam());
                }));
            }, -1);
        }

        @Setter
        @Getter
        public static class Config {
            private String param;
        }
    }

This filter is ordered: it returns a new OrderedGatewayFilter. In our logic, we can use parameters taken from the configuration, for example, if we need to add different cookies or specify different domains for requests. You can do all of this selectively right in the configuration, with no extra code. And that's really cool! (Remember, programmers should be lazy :))

Example of a request logging filter:

    spring:
      cloud:
        gateway:
          routes:
            - id: example_route
              uri: http://example.org
              predicates:
                - Path=/example/**
              filters:
                - ExampleLogger=honors

We simply add the filter by its name and use an equals sign to pass a parameter to it in the configuration.
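The shortcut notation used in `filters:` (`Name=arg1,arg2`) and the naming convention described earlier (filter name plus the `GatewayFilterFactory` suffix) can be modeled in a few lines. This is an illustrative parser written for this post, not Spring Cloud Gateway's own code; the `FilterShortcut` class is hypothetical.

```java
import java.util.Arrays;
import java.util.List;
import java.util.stream.Collectors;

// Illustrative parser for the "Name=arg1,arg2" shortcut notation; not Spring's own code.
final class FilterShortcut {
    final String name;
    final List<String> args;

    private FilterShortcut(String name, List<String> args) {
        this.name = name;
        this.args = args;
    }

    static FilterShortcut parse(String definition) {
        int eq = definition.indexOf('=');
        if (eq < 0) {
            return new FilterShortcut(definition.trim(), List.of()); // no arguments
        }
        String name = definition.substring(0, eq).trim();
        List<String> args = Arrays.stream(definition.substring(eq + 1).split(","))
                .map(String::trim)
                .filter(s -> !s.isEmpty())
                .collect(Collectors.toList());
        return new FilterShortcut(name, args);
    }

    // By convention, the factory class is the filter name plus this suffix.
    String factoryClassName() {
        return name + "GatewayFilterFactory";
    }
}
```

For `ExampleLogger=honors` this yields the name `ExampleLogger`, one argument `honors`, and the factory class name `ExampleLoggerGatewayFilterFactory`.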

Built-in filters

Here, as an example, I'll provide a throttling filter, RequestRateLimiter. Based on the connection to Redis, it throttles the number of requests that can be sent to the service:

    spring:
      cloud:
        gateway:
          routes:
            - id: example_route
              uri: http://example.org
              predicates:
                - Path=/example/**
              filters:
                - ExampleLogger=honors
                - name: RequestRateLimiter
                  args:
                    redis-rate-limiter:
                      replenishRate: 10
                      burstCapacity: 20
                      requestedTokens: 1

If there are more requests than allowed, the gateway will return a 429 error by default. For this, you simply need to add the last six lines to the configuration and write a KeyResolver, which, in fact, defines the key in Redis:

    @Configuration
    public class RateLimiterConfig {

        @Bean
        KeyResolver keyResolver() {
            // A constant key means one shared limit for all clients
            return exchange -> Mono.just("RequestLimiterKey");
        }
    }

The filters included in Spring Cloud Gateway have proven to be very convenient. Compare this with a RateLimiter implementation in Zuul, which you would have to write yourself, not to mention the code for the Redis configuration.
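To see what `replenishRate`, `burstCapacity`, and `requestedTokens` mean, here is a single-node, in-memory token bucket in plain Java. It only illustrates the algorithm behind the parameters; the real filter keeps its state in Redis, and the `TokenBucket` class with its explicit clock argument is an assumption made to keep the example deterministic.

```java
// In-memory token bucket; a single-node illustration, not the Redis-backed filter.
final class TokenBucket {
    private final int replenishRate;   // tokens added per second
    private final int burstCapacity;   // maximum number of tokens the bucket can hold
    private final int requestedTokens; // cost of one request
    private long tokens;
    private long lastRefillSecond;

    TokenBucket(int replenishRate, int burstCapacity, int requestedTokens, long nowSecond) {
        this.replenishRate = replenishRate;
        this.burstCapacity = burstCapacity;
        this.requestedTokens = requestedTokens;
        this.tokens = burstCapacity; // the bucket starts full
        this.lastRefillSecond = nowSecond;
    }

    // Returns true if the request may pass, false if it should be answered with HTTP 429.
    synchronized boolean tryAcquire(long nowSecond) {
        long elapsed = Math.max(0, nowSecond - lastRefillSecond);
        tokens = Math.min(burstCapacity, tokens + elapsed * replenishRate);
        lastRefillSecond = Math.max(lastRefillSecond, nowSecond);
        if (tokens < requestedTokens) {
            return false;
        }
        tokens -= requestedTokens;
        return true;
    }
}
```

With the values from the configuration above (10, 20, 1), a burst of 25 simultaneous requests lets 20 through, and one second later only 10 more tokens are available.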

Let's try a similar filter in action. As I mentioned at the beginning, you can find all the projects on my GitHub. Please note that in this repository, there are several branches where you can gradually enhance the gateway with new functionality and conduct your experiments.

    spring:
      cloud:
        gateway:
          enabled: true
          httpclient:
            connect-timeout: 10000
            response-timeout: 30s
          routes:
            - id: auth
              uri: http://localhost:8443
              predicates:
                - Path=/auth/**
              filters:
                - RewritePath=/auth, /realms/bank_realm/protocol/openid-connect/token
            - id: fast-rest-service
              uri: http://epsilon.BH
              predicates:
                - Path=/fast-service/**
              filters:
                - StripPrefix=1
                - CustomUrl
            - id: slow-rest-service-v0
              uri: http://epsilon.BH:8280
              predicates:
                - Path=/slow-service/v0/**
              filters:
                - StripPrefix=2
            - id: slow-rest-service-v1
              uri: http://epsilon.BH:8281
              predicates:
                - Path=/slow-service/v1/**
              filters:
                - StripPrefix=2

We send the request. Everything works, both the fast service and the slow one:

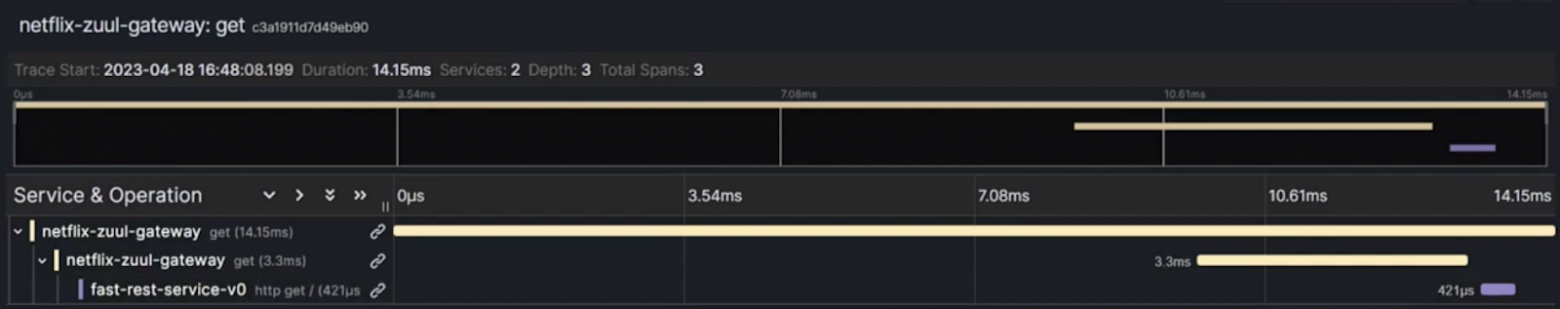

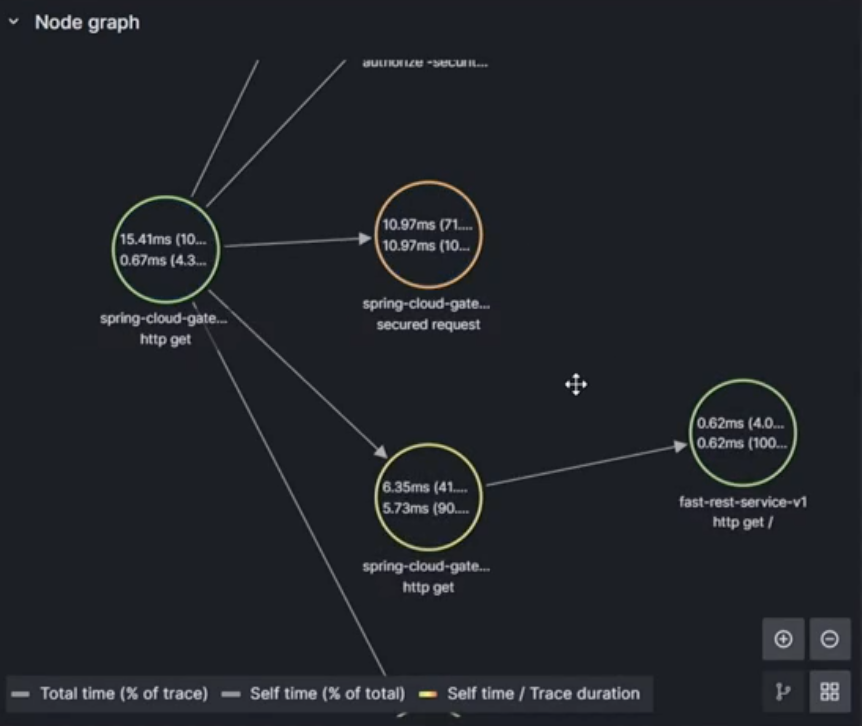

Tracing and logging are also functioning. The diagram has become more interesting, because it displays the tracing of passage through the security service now. The logs are also fine.
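A note on the `StripPrefix` values in the routes above: the number is how many leading path segments are dropped before the request is forwarded, which is why the `/slow-service/v0/**` routes use 2 and the `/fast-service/**` route uses 1. A simplified model of that behavior in plain Java (the helper class is hypothetical and ignores details such as trailing slashes and query strings):

```java
import java.util.Arrays;

// Simplified model of prefix stripping; ignores trailing slashes and query strings.
final class PathRewriteSketch {

    // Drops the first `parts` segments of the path.
    static String stripPrefix(String path, int parts) {
        String[] segments = Arrays.stream(path.split("/"))
                .filter(s -> !s.isEmpty())
                .toArray(String[]::new);
        if (parts >= segments.length) {
            return "/"; // nothing left after stripping
        }
        return "/" + String.join("/", Arrays.copyOfRange(segments, parts, segments.length));
    }
}
```

So a request to `/slow-service/v0/hello` reaches the slow service as `/hello`, and `/fast-service/ping` reaches the fast service as `/ping`.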

Let's try load testing with the same Gatling script for 1000 requests, just like we did with Zuul.

As a result, only the slow service went down, but not the gateway. So, we have solved the initial problem.

All the errors received are related to timeouts. The gateway allowed the requests through, but the services couldn't handle them. How can we fix this? We can activate the RateLimiter, so the client will receive a Status 429 for Throttling, but at least the service won't crash.

Another solution is the Resilience4J CircuitBreaker, which Spring Cloud Gateway supports out of the box, one of its undeniable advantages! You can see how to use this circuit breaker in the project on GitHub, and there's excellent monitoring available for it as well.
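For readers who have not used a circuit breaker before, the idea fits into a small state machine. The sketch below is deliberately simplified and is not Resilience4J's implementation: it counts consecutive failures instead of using a sliding window, and the class name, thresholds, and explicit clock argument are assumptions.

```java
// Minimal circuit-breaker state machine; an illustration, not Resilience4J's implementation.
final class SimpleCircuitBreaker {
    enum State { CLOSED, OPEN, HALF_OPEN }

    private final int failureThreshold; // consecutive failures that open the circuit
    private final long openMillis;      // how long calls are rejected before a retry
    private State state = State.CLOSED;
    private int failures;
    private long openedAt;

    SimpleCircuitBreaker(int failureThreshold, long openMillis) {
        this.failureThreshold = failureThreshold;
        this.openMillis = openMillis;
    }

    // Returns true if a call to the backend is allowed at time nowMillis.
    synchronized boolean allowRequest(long nowMillis) {
        if (state == State.OPEN && nowMillis - openedAt >= openMillis) {
            state = State.HALF_OPEN; // trial calls are allowed again
        }
        return state != State.OPEN;
    }

    synchronized void recordSuccess() {
        failures = 0;
        state = State.CLOSED;
    }

    synchronized void recordFailure(long nowMillis) {
        failures++;
        // A failed trial call, or too many failures in a row, opens the circuit.
        if (state == State.HALF_OPEN || failures >= failureThreshold) {
            state = State.OPEN;
            openedAt = nowMillis;
        }
    }

    synchronized State state() {
        return state;
    }
}
```

While the circuit is open, the gateway can answer immediately with a fallback instead of sending yet another request to a service that is already struggling.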

Spring Boot 3: native image support out of the box

Spring Boot 3 supports compiling applications into GraalVM native images with ahead-of-time (AOT) processing out of the box. By sacrificing annotations like @ConditionalOnProperty and @Profile, which are often not used in the Gateway, we can start services 10-30 times faster than when running the Jar! For comparison, here's my log when starting the Jar:

And launching the Native container…

3.1 seconds versus 0.15 seconds!!! It's just amazing! When problems arise, Native allows you to quickly roll back and avoid many seconds of downtime in production, which usually comes at a high cost both for the company and your nerves!

Pros and cons of Zuul

Advantages:

Filters are easy to write, since the context and the request are readily available.

Filters can be used to implement any request-response transformation logic.

Disadvantages:

The number of Requests Per Second (RPS) to the application is tightly bound to the available threads.

Limitations in the use of Circuit Breaker: Hystrix alternatives are not supported.

Only basic functionality is available out of the box; most of the rest you have to code yourself.

Lacks support for Server-Sent Events (SSE) and WebSocket, which led us to the adoption of Spring Cloud Gateway.

Pros and cons of Spring Cloud Gateway

Advantages:

Reactive stack, where requests are not bound to threads.

Greater flexibility in configuration, allowing the creation of both global and specific filters.

Support for resilience4j.

Excellent integration with Spring Boot / Spring Cloud.

Many filters (custom functionality) available out of the box.

Disadvantages:

Incompatibility with the previous solution (Spring Cloud Zuul) requires rewriting the entire functionality. However, it is not as challenging as it may seem, and we can ask for help here:

What we have in the end

A modern non-blocking API gateway built on the latest Spring Boot 3.

Reliable protection against cascading failures, where one or more services can bring down the entire application – all of this operates in a non-blocking asynchronous mode.

Support for technologies such as WebSocket and SSE.

The ability to gradually implement Flux from the client to backend services. An interesting tool that some may not be using yet.

Out-of-the-box Native support as a bonus. DevOps will have to reconfigure the pipeline, but only once.

All the code for this post is available on my GitHub. If you have any questions, feel free to reach out in the comments, via email, Twitter, or Telegram.