As a Product Manager who has worked on delivery route optimisation software for more than 10 years, I have seen how much modern technologies can improve the optimisation process and the quality of the solutions it produces. AI, machine learning, and related technologies have the potential to revolutionise how delivery routes are optimised.

As more data becomes available and AI and machine learning algorithms advance, it is becoming possible to build more sophisticated prediction models and integrate them into optimisation algorithms, so that route planning and scheduling decisions are more accurate and better informed. For example, a machine learning model can be trained on historical sales data and market trends to predict customer demand, allowing businesses to plan their delivery schedules and routes around the expected load. AI can also be used to adapt delivery schedules to customer preferences and other relevant factors.
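
To make the idea of feeding a forecast into planning more concrete, here is a minimal sketch. It assumes daily order counts per delivery zone are available, and it uses a simple least-squares trend line as a stand-in for a trained machine learning model. The function names, the zone names, and the `vehicle_capacity` parameter are all hypothetical and only serve the illustration.

```python
import math


def fit_linear_trend(history):
    """Fit a least-squares line through (day_index, orders).

    Returns (slope, intercept). With fewer than two points there is
    no trend to fit, so the slope is 0 and the intercept is the mean.
    """
    n = len(history)
    if n == 0:
        return 0.0, 0.0
    mean_x = (n - 1) / 2
    mean_y = sum(history) / n
    var_x = sum((x - mean_x) ** 2 for x in range(n))
    if var_x == 0:
        return 0.0, mean_y
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(history))
    slope = cov_xy / var_x
    return slope, mean_y - slope * mean_x


def forecast_next_day(history):
    """Extrapolate the trend one day ahead, never below zero."""
    slope, intercept = fit_linear_trend(history)
    return max(0.0, slope * len(history) + intercept)


def vehicles_needed(history_by_zone, vehicle_capacity):
    """Turn the per-zone forecast into a vehicle count per zone.

    vehicle_capacity is an assumed number of orders one vehicle
    can deliver in a day.
    """
    return {
        zone: math.ceil(forecast_next_day(history) / vehicle_capacity)
        for zone, history in history_by_zone.items()
    }


history_by_zone = {
    "north": [10, 12, 14, 16],  # steadily growing demand
    "south": [20, 20, 20],      # flat demand
}
plan = vehicles_needed(history_by_zone, vehicle_capacity=5)
```

In a real system the trend line would be replaced by a model that also accounts for seasonality, promotions, and market trends, and the vehicle count would be one input to the route optimiser rather than the final plan.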

Blockchain technology could be used to create a secure, decentralised record of deliveries, including the products being shipped, the route they are taking, and the status of each delivery. This could increase transparency and accountability in the delivery process and reduce the risk of fraud and theft.
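
The property that makes such a record hard to tamper with can be shown with a small hash-chained log. This sketch is not a blockchain: there is no decentralisation or consensus, only the hash linking that makes later changes detectable. The record fields and function names are assumptions chosen for the example.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def record_hash(payload, prev_hash):
    """Hash a delivery record together with the hash of its predecessor."""
    body = json.dumps({"payload": payload, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


def append_record(chain, payload):
    """Append a delivery event, linking it to the previous record."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS
    chain.append({
        "payload": payload,
        "prev": prev_hash,
        "hash": record_hash(payload, prev_hash),
    })


def verify_chain(chain):
    """Return True only if every record is unmodified and correctly linked."""
    prev_hash = GENESIS
    for record in chain:
        if record["prev"] != prev_hash:
            return False
        if record["hash"] != record_hash(record["payload"], record["prev"]):
            return False
        prev_hash = record["hash"]
    return True


log = []
append_record(log, {"parcel": "P-1", "status": "picked up", "stop": "depot"})
append_record(log, {"parcel": "P-1", "status": "in transit", "stop": "hub"})
append_record(log, {"parcel": "P-1", "status": "delivered", "stop": "customer"})
intact = verify_chain(log)

# Changing an earlier record breaks verification.
log[1]["payload"]["status"] = "lost"
tampered_ok = verify_chain(log)
```

Here `intact` is `True` and `tampered_ok` is `False`, because editing any record changes its hash and no longer matches what was stored.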

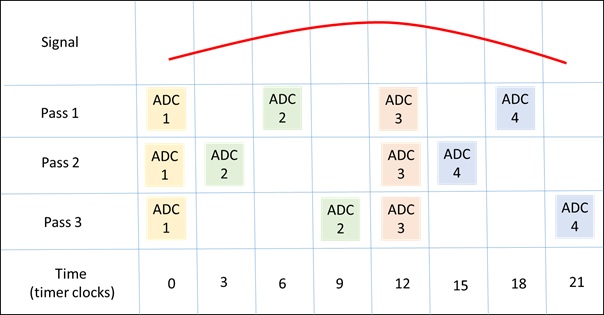

Internet of Things (IoT) devices, such as sensors and GPS trackers, can collect real-time data about delivery vehicles and their surroundings. This data could be analysed to optimise delivery routes in real time, to track the location of deliveries, and to monitor the condition of the products being shipped.
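
As a rough illustration of real-time re-planning, the sketch below reorders a vehicle's remaining stops each time a new GPS position arrives. It uses a nearest-neighbour heuristic with great-circle distance as a simple stand-in for a production optimiser, which would also consider traffic, time windows, and vehicle load. The function names and the sample coordinates are hypothetical.

```python
import math

EARTH_RADIUS_KM = 6371.0


def haversine_km(a, b):
    """Great-circle distance in km between two (lat, lon) points in degrees."""
    lat1, lon1 = map(math.radians, a)
    lat2, lon2 = map(math.radians, b)
    dlat, dlon = lat2 - lat1, lon2 - lon1
    h = (math.sin(dlat / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin(dlon / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def replan_route(current_position, remaining_stops):
    """Order the remaining stops by repeatedly visiting the nearest one.

    current_position: (lat, lon) from the latest GPS reading.
    remaining_stops: dict mapping stop name to (lat, lon).
    """
    pending = dict(remaining_stops)  # copy so the caller's dict is untouched
    position = current_position
    route = []
    while pending:
        nearest = min(pending, key=lambda s: haversine_km(position, pending[s]))
        position = pending.pop(nearest)
        route.append(nearest)
    return route


stops = {
    "A": (51.50, -0.10),
    "B": (51.52, -0.10),
    "C": (51.60, -0.10),
}
route_from_south = replan_route((51.49, -0.10), stops)
route_from_north = replan_route((51.61, -0.10), stops)
```

The same set of stops yields a different visiting order depending on where the tracker reports the vehicle to be, which is the core of re-optimising as new location data comes in.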