With the recent progress in Neural Networks in general, and in image recognition in particular, it might seem that creating an NN-based application for image recognition is a simple routine operation. Well, to some extent it is true: if you can imagine an application of image recognition, then most likely someone has already done something similar. All you need to do is Google it and repeat.

However, there are still countless little details. They are not unsolvable, no; they simply take too much of your time, especially if you are a beginner. What would help is a step-by-step project, done right in front of you, from start to finish. A project that does not contain «this part is obvious so let's skip it» statements. Well, almost :)

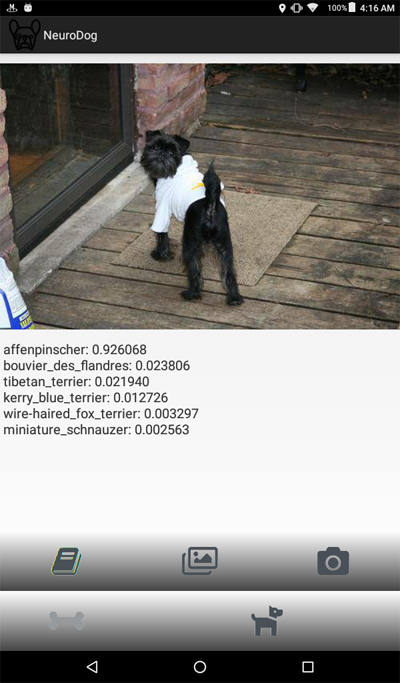

In this tutorial we are going to walk through a Dog Breed Identifier: we will create and train a Neural Network, then port it to Java for Android and publish it on Google Play.

For those of you who want to see the end result, here is the link to the NeuroDog App on Google Play.

Web site with my robotics: robotics.snowcron.com.

Web site with the NeuroDog User Guide.

Here is a screenshot of the program:

It would seem that, for modern science, the question of what color the Moon and the Sun are when seen from space is so simple that in our century the answer should pose no problem at all. We mean the colors as observed from space specifically, since the atmosphere changes them through Rayleigh scattering. «Surely an encyclopedia somewhere covered this long ago, in detail and with numbers,» you will say. Well, try searching the Internet for it. Did it work? Most likely not. The most you will find is a couple of words saying that the Moon has a brownish tint and the Sun a reddish one. You will not find out whether these tints are visible to the human eye, let alone the color values in RGB or at least the color temperatures. What you will find is a bunch of photos and videos in which the Moon from space is completely gray, mostly photos from the American Apollo program, and in which the Sun from space is shown as white or even blue.
