The biggest issue with AI is not that it is stupid, but that there is no agreed definition of intelligence and hence no formal measure of it [1a] [1b].

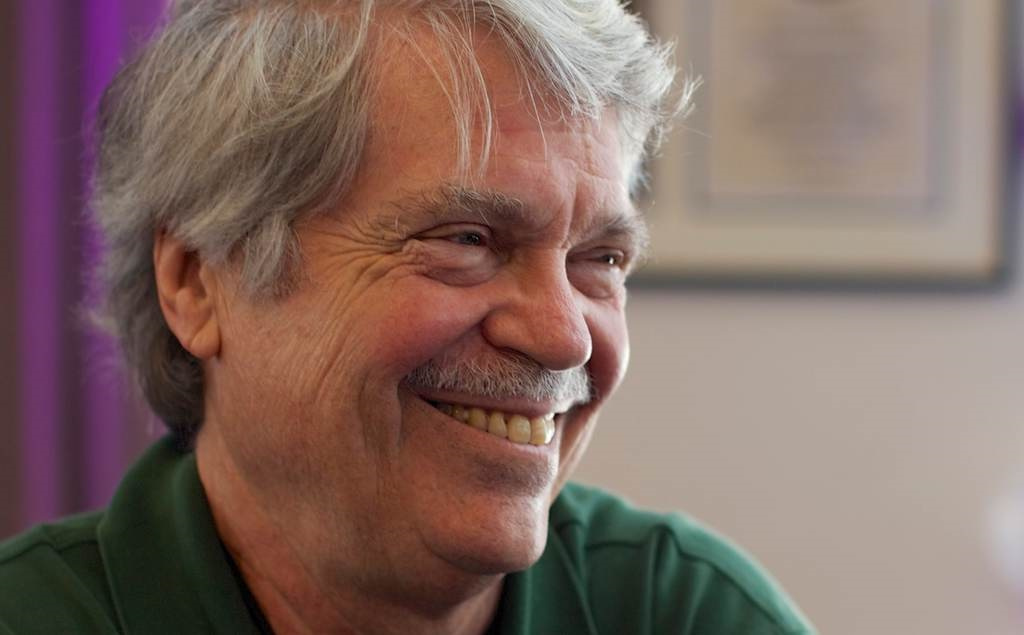

The Turing test is not a good measure, because the gorilla Koko [2a] and the bonobo Kanzi [2b] would not pass it, even though they could solve more problems than many people with disabilities.

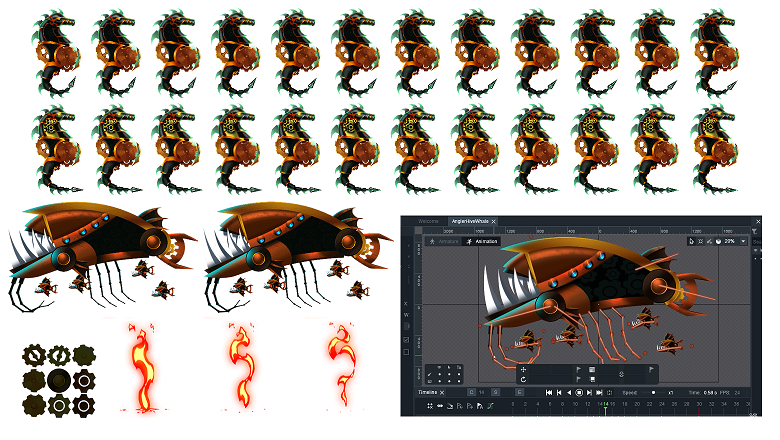

People in the future may well wonder why anyone back in 2019 thought that an agent trained to play a fixed game such as Go in a simulated environment had any intelligence [3a] [3b] [3c] [3d] [3e] [3f] [3g] [3h].

Intelligence is more about applying (transferring) old knowledge to new tasks (playing Quake Arena well enough without any training after mastering Doom) than about compressing an agent's experience into heuristics that predict the game score and choose the agent's action in each game state to maximize the final score (playing Quake Arena well enough only after a million games, despite having mastered Doom) [4].

Human intelligence is about the ability to adapt to the physical and social world. Playing Go is one particular adaptation performed by human intelligence; developing an algorithm that learns to play Go is a more performant one; and developing a mathematical theory of Go might be more performant still.