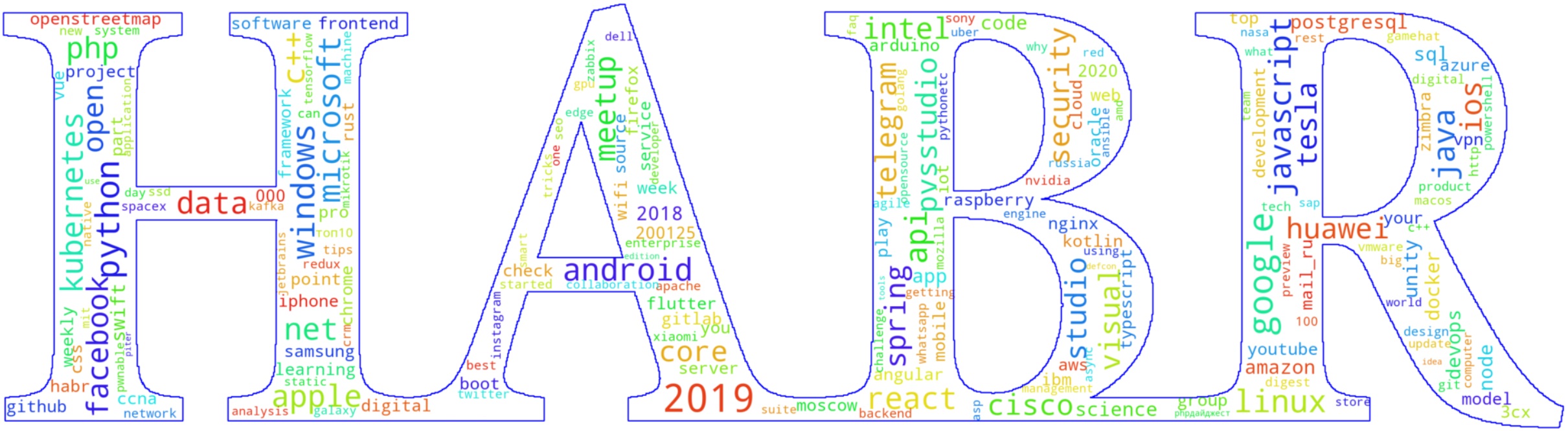

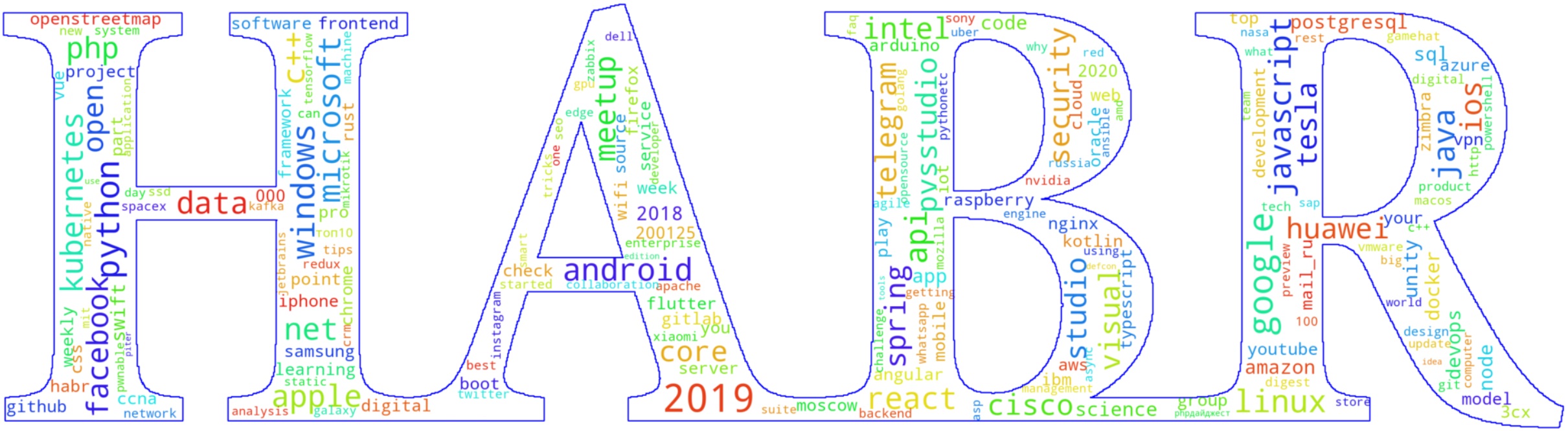

In this post the best articles and best Habr authors 2019 will be presented, I also will show some statistical graphs that I find interesting or unusual.

Let's get started.

Statistics, research, tendencies.

I've long wanted to create educational materials on the topic of Information Theory + Machine Learning. I found some old drafts and decided to polish them up here, on Habr.

Information Theory and Machine Learning seem to me like an interesting pair of fields, the deep connection between which is often unknown to ML engineers, and whose synergy has not yet been fully revealed.

Let's start with basic concepts like Entropy, Information in a message, Mutual Information, and channel capacity. Next, there will be materials on the similarity between tasks of maximizing Mutual Information and minimizing Loss in regression problems. Then there will be a section on Information Geometry: Fisher metric, geodesics, gradient methods, and their connection to Gaussian processes (moving along the gradient using SGD is moving along the geodesic with noise).

It's also necessary to touch upon AIC, Information Bottleneck, and discuss how information flows in neural networks – Mutual Information between layers (Information Theory of Deep Learning, Naftali Tishby), and much more. It's not certain that I'll be able to cover everything listed, but I'll try to get started.

In Part 1, we became familiar with the concept of entropy.

In this part, we will delve into the concept of Mutual Information, which opens doors to error-resistant coding, compression algorithms, and offers a fresh perspective on regression and Machine Learning tasks.

It is an essential component that will pave the way, in the next section, for tackling Machine Learning problems as tasks of extracting mutual information between features and the predicted variable.

Here, there will be three interesting and crucial visualizations.

The first one will visualize entropy for two random variables and their mutual information.

The second one will shed light on the very concept of dependency between two random variables, emphasizing that zero correlation does not imply independence.

The third one will demonstrate that the bandwidth of an information channel has a straightforward geometric interpretation through the convexity measure of the entropy function.

In the meantime, we will prove a simplified version of the Shannon-Hartley theorem regarding the maximum bandwidth of a noisy channel. Let's dive in!