Apache Kafka is a distributed event-streaming platform designed to handle real-time data feeds. It allows applications to publish, process, and subscribe to streams of data in a highly scalable, fault-tolerant manner.

Everything about big data

In today's digital world, where applications process increasing amounts of sensitive data, ensuring reliable user authentication is critical. Authentication is the process of verifying the identity of a user who is trying to access a system. A properly chosen authentication method protects data from unauthorized access, prevents fraud, and increases user confidence.

However, with the development of technology, new authentication methods are emerging, and choosing the optimal solution can be difficult. This article will help developers and business owners understand the variety of authentication approaches and make informed choices.

At some point, while diving deeper into automation, you face the need for data labeling, although just a couple of weeks ago you and the phrase "data labeling" were standing in different rooms at a party called "Earnings on the Internet". Or, better to say, you were standing by the pool while data labeling was on the third floor, smoking on the balcony with machine learning experts. How did we meet? Probably someone pushed it off the balcony into the pool, and I helped it out, soaking my clothes along the way.

In this article, I’m going to discuss something really important. If you’re a data analyst or you want to learn data analysis, please stay with me till the end.

Below, we will discuss user-defined aggregation functions (UDAF) built on org.apache.spark.sql.expressions.Aggregator, which aggregates a group of elements in a Dataset into a single value in any user-defined way.
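To make the idea concrete before looking at Spark itself, here is a minimal sketch of the contract in plain Python. It is not Spark code: the method names `zero`, `reduce`, `merge` and `finish` mirror those of Spark's `Aggregator`, while `AvgBuffer`, `AverageAggregator` and the `aggregate` driver are hypothetical helpers invented for illustration, and encoders are left out entirely.

```python
from dataclasses import dataclass
from functools import reduce
from typing import Iterable, List


@dataclass(frozen=True)
class AvgBuffer:
    """Intermediate state carried between elements (hypothetical helper)."""
    total: float = 0.0
    count: int = 0


class AverageAggregator:
    """Plain-Python imitation of the zero/reduce/merge/finish contract."""

    def zero(self) -> AvgBuffer:
        # Neutral starting buffer: merging it with any buffer changes nothing.
        return AvgBuffer()

    def reduce(self, buf: AvgBuffer, value: float) -> AvgBuffer:
        # Fold one input element into the buffer.
        return AvgBuffer(buf.total + value, buf.count + 1)

    def merge(self, a: AvgBuffer, b: AvgBuffer) -> AvgBuffer:
        # Combine two partial buffers, e.g. from different partitions.
        return AvgBuffer(a.total + b.total, a.count + b.count)

    def finish(self, buf: AvgBuffer) -> float:
        # Turn the final buffer into the output value.
        return buf.total / buf.count if buf.count else float("nan")


def aggregate(agg: AverageAggregator, partitions: Iterable[List[float]]) -> float:
    """Hypothetical driver: reduce each partition, then merge the partials."""
    partials = [reduce(agg.reduce, part, agg.zero()) for part in partitions]
    return agg.finish(reduce(agg.merge, partials, agg.zero()))


result = aggregate(AverageAggregator(), [[1.0, 2.0], [3.0], []])
```

Splitting the work into `reduce` and `merge` is what lets the aggregation run on partitions independently before the partial results are combined.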

Let’s start by examining an example from the official documentation that implements a simple aggregation.

Today we’re diving into an exciting feature within ChatGPT that has the potential to enhance your productivity by 10, 20, 30, or even 40%. If you’re keen on learning how to leverage this feature to your advantage, make sure to read this article until the end. This feature stands out because it allows you to analyze almost anything by uploading your data and posing various questions to ChatGPT. Whether it's business data, your resume, or any other information you wish to explore, ChatGPT is here to deliver answers based on your specific dataset.

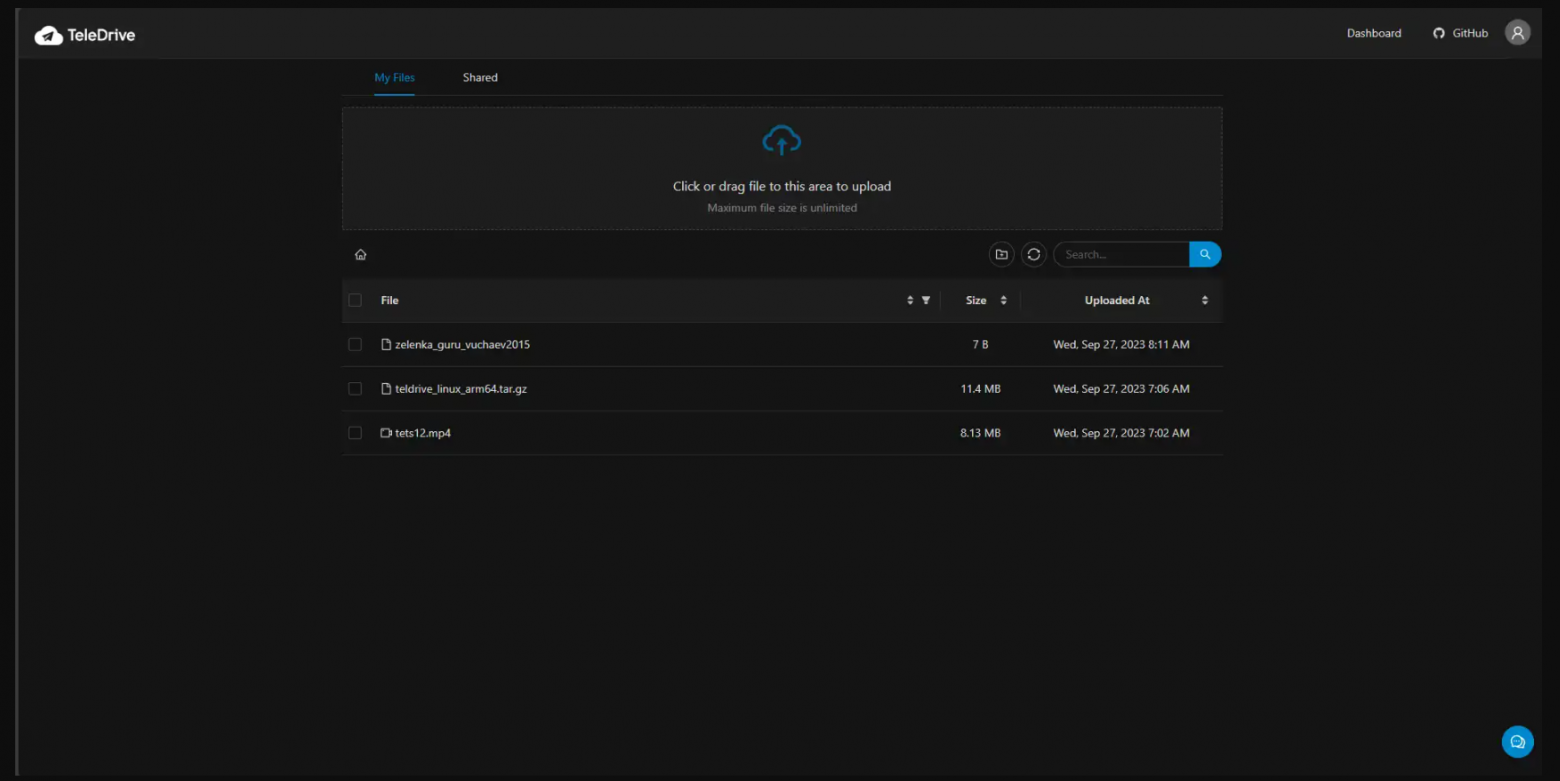

Hey everyone! Today, I'll guide you through creating a boundless cloud storage solution on Telegram using TeleDrive. This open-source project is a game-changer, offering functionality similar to Google Drive or OneDrive via the Telegram API.

I believe that every programmer has at least once heard about ChatGPT and its marvelous abilities to process, calculate and create huge amounts of data; if not, go check out this Wikipedia article - https://en.wikipedia.org/wiki/ChatGPT.

Can you imagine that some 50 years ago people could hardly believe that something artificial might surpass humans in so many areas? Nowadays, we have this marvel a few taps on a phone screen or a few keystrokes away; however, a sadly large number of people still do not fully utilize, if at all, the perks of ChatGPT in their line of work. This mostly comes down either to reluctance to learn new technologies or to the fear of losing previously gained coding skills, which does not happen when ChatGPT is used properly.

In this article I want to share some of the most useful applications of ChatGPT for your coding work. Remember, there is nothing shameful in using AI: its development and further adoption in our day-to-day life is inevitable, so we should start adapting to it as early as we can to take full advantage of this "magical" technology. Let's get started.

This article aims at explaining the mathematical sense of the Principal Component Analysis (PCA) in practice.
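As a small worked illustration of that mathematical sense, the sketch below runs PCA by hand on two-dimensional points: center the data, build the 2x2 sample covariance matrix, and take its leading eigenvector as the first principal component. It assumes the closed-form eigendecomposition of a symmetric 2x2 matrix; the function name `pca_2d` and the sample points are made up for this example.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def pca_2d(points: List[Point]):
    """PCA for 2-D points via the closed-form eigenvalues of the covariance."""
    n = len(points)
    mx = sum(x for x, _ in points) / n
    my = sum(y for _, y in points) / n

    # Sample covariance matrix [[sxx, sxy], [sxy, syy]] of the centered data.
    sxx = sum((x - mx) ** 2 for x, _ in points) / (n - 1)
    syy = sum((y - my) ** 2 for _, y in points) / (n - 1)
    sxy = sum((x - mx) * (y - my) for x, y in points) / (n - 1)

    # Angle of the eigenvector that belongs to the larger eigenvalue.
    theta = 0.5 * math.atan2(2 * sxy, sxx - syy)
    direction = (math.cos(theta), math.sin(theta))

    # Eigenvalues of a symmetric 2x2 matrix: half the trace +/- a radius.
    half_trace = (sxx + syy) / 2
    radius = math.hypot((sxx - syy) / 2, sxy)
    eigenvalues = (half_trace + radius, half_trace - radius)

    # Coordinates of each centered point along the first principal component.
    scores = [(x - mx) * direction[0] + (y - my) * direction[1] for x, y in points]

    total = eigenvalues[0] + eigenvalues[1]
    explained = eigenvalues[0] / total if total > 0 else float("nan")
    return direction, eigenvalues, scores, explained


direction, eigenvalues, scores, explained = pca_2d([(0.0, 0.0), (1.0, 1.0), (2.0, 2.0)])
```

For points lying exactly on the line y = x, the first component points along that line and carries all of the variance, which is what the eigenvalues report.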

The most important stage in the data science process is feature engineering, which entails turning raw data into useful features that might enhance the performance of machine learning models. It calls for creativity, data-driven thinking, and domain expertise. Data scientists can improve the prediction capability of their models and find hidden patterns in the data by choosing, combining, and inventing relevant features. Handling missing data, scaling features, encoding categorical variables, constructing interaction terms, and other procedures are examples of feature engineering techniques. The best practises involve investigating the data, testing and improving features iteratively, and applying domain knowledge to draw out important information. The accuracy and effectiveness of machine learning models are significantly influenced by effective feature engineering.
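The techniques listed above can be sketched in a few lines of plain Python. This is only an illustration under simple assumptions (mean imputation, min-max scaling, one-hot encoding with alphabetically sorted categories); all function names and sample values are hypothetical, and a real project would normally rely on a dedicated library.

```python
from statistics import mean
from typing import List, Optional, Sequence


def impute_mean(values: Sequence[Optional[float]]) -> List[float]:
    """Handle missing data: replace None with the mean of the known values."""
    fill = mean(v for v in values if v is not None)
    return [fill if v is None else v for v in values]


def min_max_scale(values: Sequence[float]) -> List[float]:
    """Scale a feature to the [0, 1] range."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]  # constant feature carries no spread
    return [(v - lo) / (hi - lo) for v in values]


def one_hot(values: Sequence[str]) -> List[List[int]]:
    """Encode a categorical variable, one column per sorted category."""
    categories = sorted(set(values))
    return [[1 if v == c else 0 for c in categories] for v in values]


def interaction(a: Sequence[float], b: Sequence[float]) -> List[float]:
    """Construct an interaction term as the element-wise product."""
    return [x * y for x, y in zip(a, b)]


# Made-up sample columns.
age = impute_mean([1.0, None, 3.0])
age_scaled = min_max_scale(age)
city = one_hot(["a", "b", "a"])
age_x_income = interaction([1, 2], [3, 4])
```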

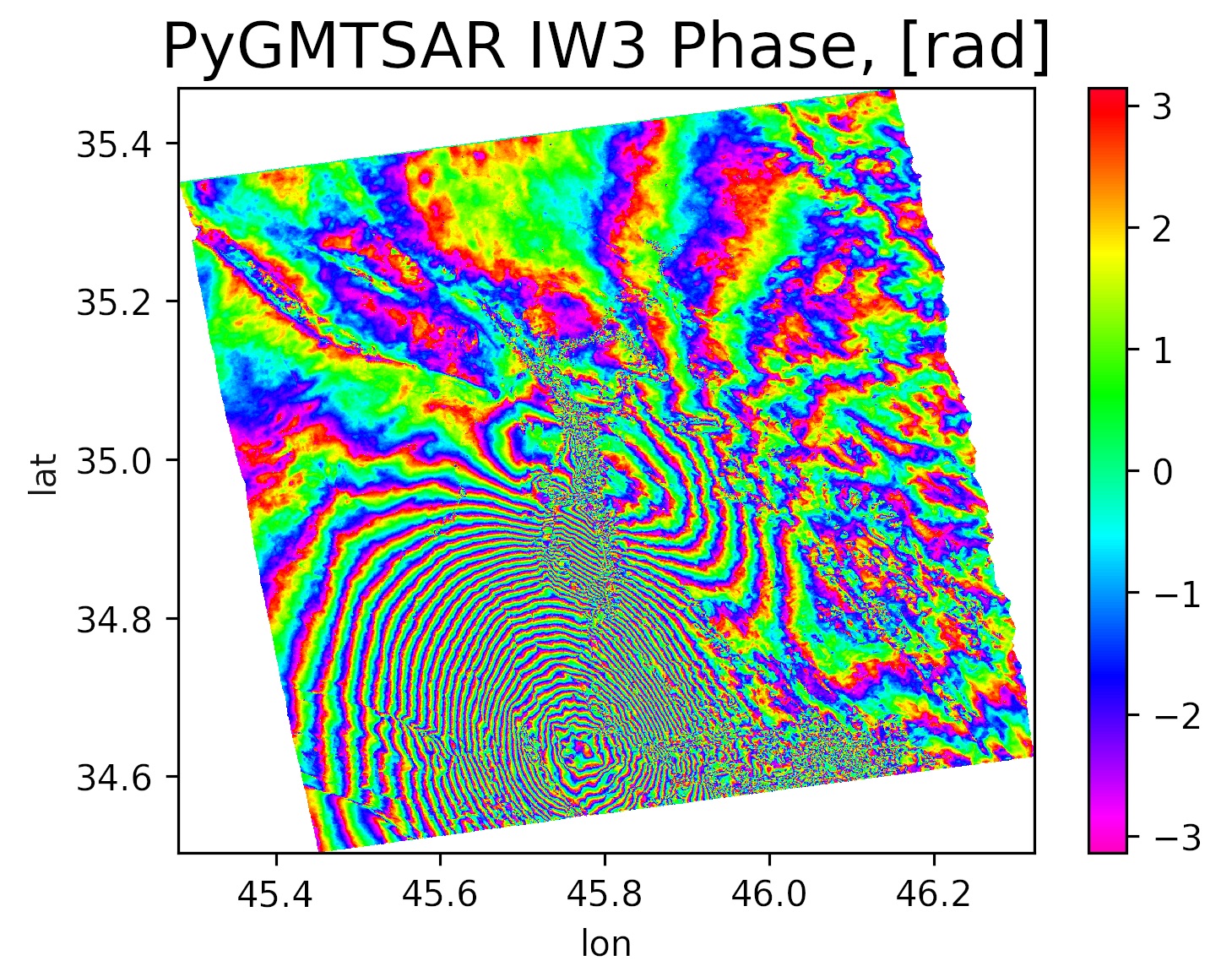

Do you need to produce satellite interferometry results for your work or study? Or do you need a way to process terabytes of radar data on an ordinary laptop? Maybe you aren't confident about installing and using the required software. Fortunately, the next generation of satellite interferometry products is available to you. Beginners can build results easily, and advanced users can work on huge datasets. The open-source software PyGMTSAR is available on GitHub for developers, on DockerHub for advanced users, and on Google Colab for everyone. It is a cloud-ready product, and it works the same whether you run it locally on your old laptop or on powerful cloud servers.

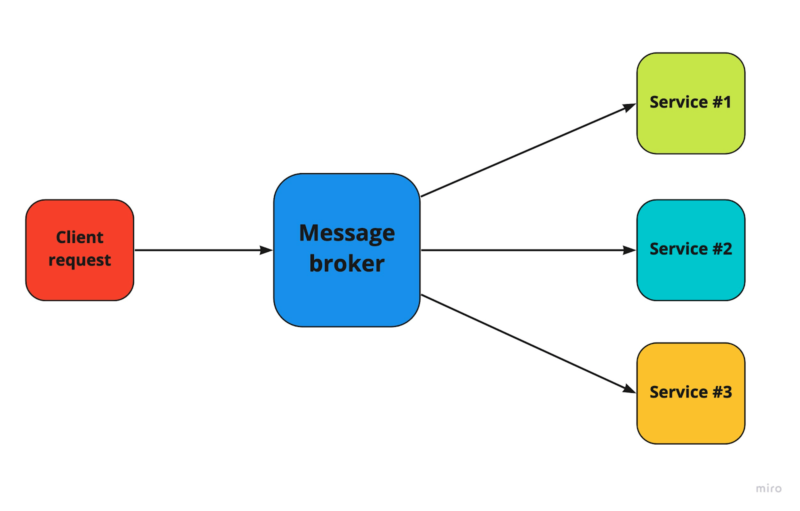

This is a series of articles dedicated to the optimal choice between different systems on a real project or an architectural interview.

At work or in a System Design interview, you often have to choose the best message broker. I dug into this question and will explain what to choose and why: which system is better in each case, what the advantages and disadvantages of each are, and how to decide, illustrated with several examples.

Video recording of our webinar about dstack and reproducible ML workflows, AVL binary tree operations, Ultralytics YOLOv8, training XGBoost, productionize ML models, introduction to forecasting ensembles, domain expansion of image generators, Muse, X-Decoder, Box2Mask, RoDynRF, AgileAvatar and more.

In 2021, we were contacted by an industrial plant that was faced with the need to create a system for analyzing processes in its production. The enterprise team studied ready-made solutions, but none of the analytics system designs fully covered the required functionality. So they turned to us with a request to develop their own analytical system that would collect data from all machines and allow it to be analyzed to see bottlenecks in production. For this project, we created a data-driven UI/UX design and also developed a web-based interface for the equipment monitoring system.

In Part 1 of this article, I built and compared two classifiers to detect trolls on Twitter. You can check it out here.

Now the time has come to look more deeply into the datasets and find some patterns using exploratory data analysis and topic modelling.

EDA

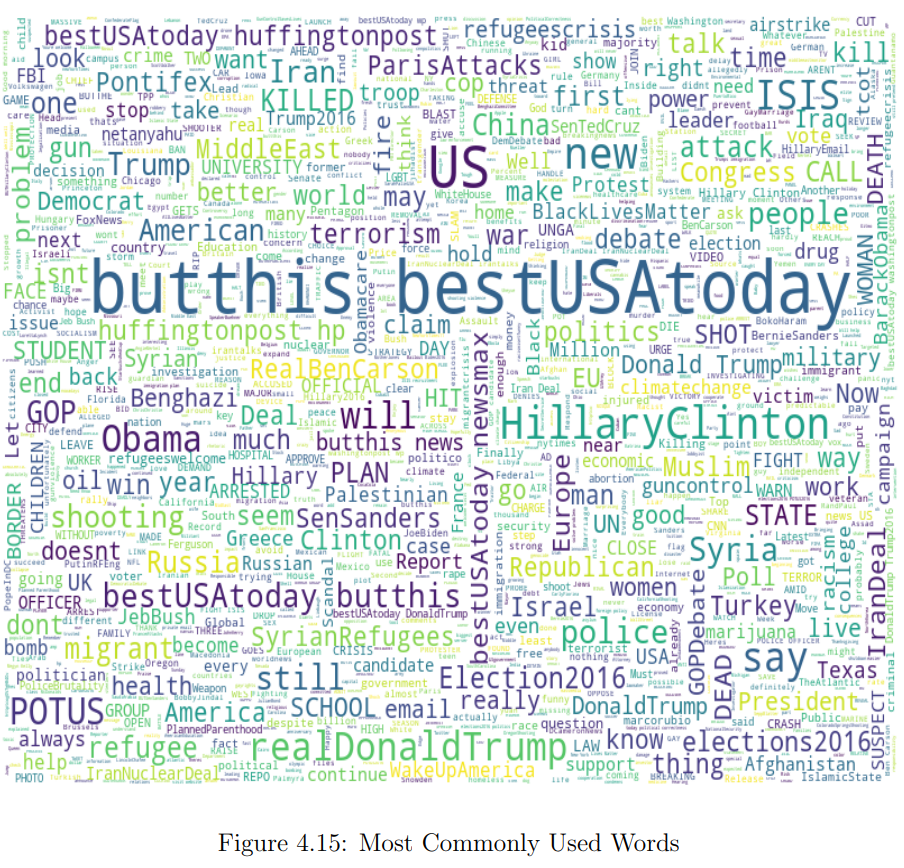

To do just that, I first created a word cloud of the most common words, which you can see below.
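The counting step behind such a word cloud can be sketched with the standard library alone. This is a rough illustration, not the code used in the article: the stop-word set, the tokenisation rule and the sample tweets are all assumptions made for the example.

```python
import re
from collections import Counter
from typing import Iterable, List, Tuple

# Hypothetical, deliberately tiny stop-word list for the example.
STOPWORDS = {"the", "a", "an", "and", "of", "to", "in", "is", "rt"}


def top_words(tweets: Iterable[str], n: int = 10) -> List[Tuple[str, int]]:
    """Return the n most common words across tweets, ignoring URLs and stop words."""
    counts: Counter = Counter()
    for text in tweets:
        # Lowercase and drop links before tokenising.
        cleaned = re.sub(r"https?://\S+", " ", text.lower())
        tokens = re.findall(r"[a-z']+", cleaned)
        counts.update(t for t in tokens if t not in STOPWORDS and len(t) > 1)
    return counts.most_common(n)


# Made-up sample tweets.
sample = ["The news is fake news", "Fake news again http://t.co/x"]
most_common = top_words(sample, n=2)
```

The resulting (word, count) pairs are the kind of input a word-cloud renderer scales font sizes by.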

Over the last decades, the world has been turning into an information society, which means that information has come to play a substantial, end-to-end role in all aspects and processes of life. In view of the growing demand for a free flow of information, social networks have become a force to be reckoned with. The ways of waging war have also changed: instead of conventional weapons, governments now use political warfare, including fake news, a type of propaganda built on deliberate disinformation or hoaxes. And the lack of content control mechanisms makes it easy to spread any information as long as people believe in it.

Based on this premise, I’ve decided to experiment with different NLP approaches and build a classifier that could be used to detect either bots or fake content generated by trolls on Twitter in order to influence people.

In this first part of the article, I will cover the data collection process, preprocessing, feature extraction, classification itself and the evaluation of the models’ performance. In Part 2, I will dive deeper into the troll problem, conduct exploratory analysis to find patterns in the trolls’ behaviour and define the topics that seemed of great interest to them back in 2016.

Features for analysis

From all the data available (such as hashtags, account language, tweet text, URLs, external links or references, tweet date and time), I settled upon English tweet text, Russian tweet text and hashtags. Tweet text is the main feature for analysis because it contains almost all the characteristics typical of trolling activity in general, such as abuse, rudeness, references to external resources, provocations and bullying. Hashtags were chosen as another source of textual information because they express the central message of a tweet in one or two words.

Once upon a time, our DBA team had a task: we had to move a ZooKeeper ensemble that we had been using for a ClickHouse cluster. Everyone is used to moving an ensemble by moving its data files. It seems easy and obvious, but our ClickHouse cluster had more than 400 TB of replicated data, and all replication information had been stored in the ZooKeeper cluster from the very beginning. We couldn’t afford to lose even a single row of data. We looked for information on the internet and found a good tutorial, but unfortunately it covered version 3.4.5 and didn’t fit our version 3.6.2. So we decided to move our ensemble by “extending” it.

Working with speech recognition models, we often encounter misconceptions among potential customers and users (mostly because people have a hard time distinguishing substance from form). People also tend to believe that punctuation marks and spaces are somehow obviously present in spoken speech, when in fact real spoken speech and written speech are entirely different beasts.

Of course you can just start each sentence with a capital letter and put a full stop at the end. But it is preferable to have some relatively simple and universal solution for "restoring" punctuation marks and capital letters in sentences that our speech recognition system generates. And it would be really nice if such a system worked with any texts in general.

For this reason, we would like to share a system that:

To reiterate — the purpose of such a system is only to improve the readability of the text. It does not add information to the text that did not originally exist.
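For contrast, the naive baseline mentioned above (capitalize the first letter and put a full stop at the end) can be sketched as follows. The function name `naive_restore` is hypothetical, and this is only the trivial baseline, not the system described here.

```python
def naive_restore(utterance: str) -> str:
    """Capitalize the first character and append a full stop if none is present."""
    text = " ".join(utterance.split())  # collapse stray whitespace
    if not text:
        return text
    text = text[0].upper() + text[1:]
    if text[-1] not in ".!?":
        text += "."
    return text


restored = naive_restore("how are you  i am fine")
```

On this input the baseline yields "How are you i am fine.": the sentence boundary inside the utterance, the question mark and the capital "I" are all missed, which is why a more general solution is preferable.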

Some time ago I worked on the development of a matching engine for a cryptocurrency exchange. It was an interesting and challenging experience: I developed the engine from scratch in pure C++. Testing it is also quite a challenging task. You need to get data for testing, run the tests, collect statistics and, finally, analyze the collected data to find weak points and bottlenecks. I want to focus on testing the C++ matching engine and show how testing can give insights for optimization even without changing the code. The matching engine I developed can handle more than 1,000,000 TPS (transactions per second) and is 10 times faster than the matching engine of the Binance cryptocurrency exchange (see one post on Binance Blog).
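The test loop described above (feed orders in, collect statistics, look at throughput) can be illustrated with a toy example. The sketch below is a deliberately simplified price-time priority order book in Python, not the author's C++ engine; `ToyOrderBook`, `measure_tps` and the integer tick prices are all assumptions, and any TPS number it produces says nothing about a real engine.

```python
import heapq
import itertools
import time
from typing import List, Tuple


class ToyOrderBook:
    """Limit-order matching with price-time priority (illustrative only)."""

    def __init__(self) -> None:
        self._bids: list = []  # heap keyed by negated price: best bid first
        self._asks: list = []  # heap keyed by price: best ask first
        self._seq = itertools.count()  # arrival order breaks price ties
        self.trades: List[Tuple[int, int]] = []  # (price, quantity) fills

    def submit(self, side: str, price: int, qty: int) -> None:
        is_buy = side == "buy"
        book, opposite = (self._bids, self._asks) if is_buy else (self._asks, self._bids)
        while qty > 0 and opposite:
            key, seq, rest_price, rest_qty = opposite[0]
            crosses = rest_price <= price if is_buy else rest_price >= price
            if not crosses:
                break
            fill = min(qty, rest_qty)
            self.trades.append((rest_price, fill))  # trade at the resting price
            qty -= fill
            if fill == rest_qty:
                heapq.heappop(opposite)
            else:
                # Partially filled resting order keeps its place in the queue.
                heapq.heapreplace(opposite, (key, seq, rest_price, rest_qty - fill))
        if qty > 0:
            # Unfilled remainder rests in the book.
            heapq.heappush(book, (-price if is_buy else price, next(self._seq), price, qty))


def measure_tps(book: ToyOrderBook, orders: List[Tuple[str, int, int]]) -> float:
    """Submit all orders and return processed orders per second."""
    start = time.perf_counter()
    for side, price, qty in orders:
        book.submit(side, price, qty)
    elapsed = max(time.perf_counter() - start, 1e-9)  # guard against zero
    return len(orders) / elapsed


book = ToyOrderBook()
tps = measure_tps(book, [("sell", 101, 5), ("sell", 100, 5), ("buy", 101, 7)])
```

Here the buy order first takes the cheaper ask at 100 and then part of the ask at 101, so the recorded trades double as a correctness check alongside the throughput figure.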