The second quarter of the year has ended and, as usual, we take a look back at the DDoS attacks we mitigated and the BGP incidents that occurred between April and June 2022.

PXE is a great solution for booting a diskless computer (or a computer without an OS installed). This method is often used for terminal stations and mass OS installation.

Stock Ubuntu (16.04) in PXE mode can mount the rootfs only from NFS. But this is not a great idea: any trouble with the network or the NFS server and the user runs into problems.

In my opinion, it's best to use other protocols, such as HTTP or FTP. Once booted, you will have an independent system

Vepp is our new panel for managing servers and websites. At first, we just wanted to transform the interface of ISPmanager 5, but at the design phase we realized that changing the interface was not enough. We had to change our approach to fit the modern user's needs and tasks. In practice, this meant that we had to create a whole new product.

In the article, we’ll explain why we couldn’t make do with only cosmetic changes to ISPmanager 5 and show the result of the global overhaul.

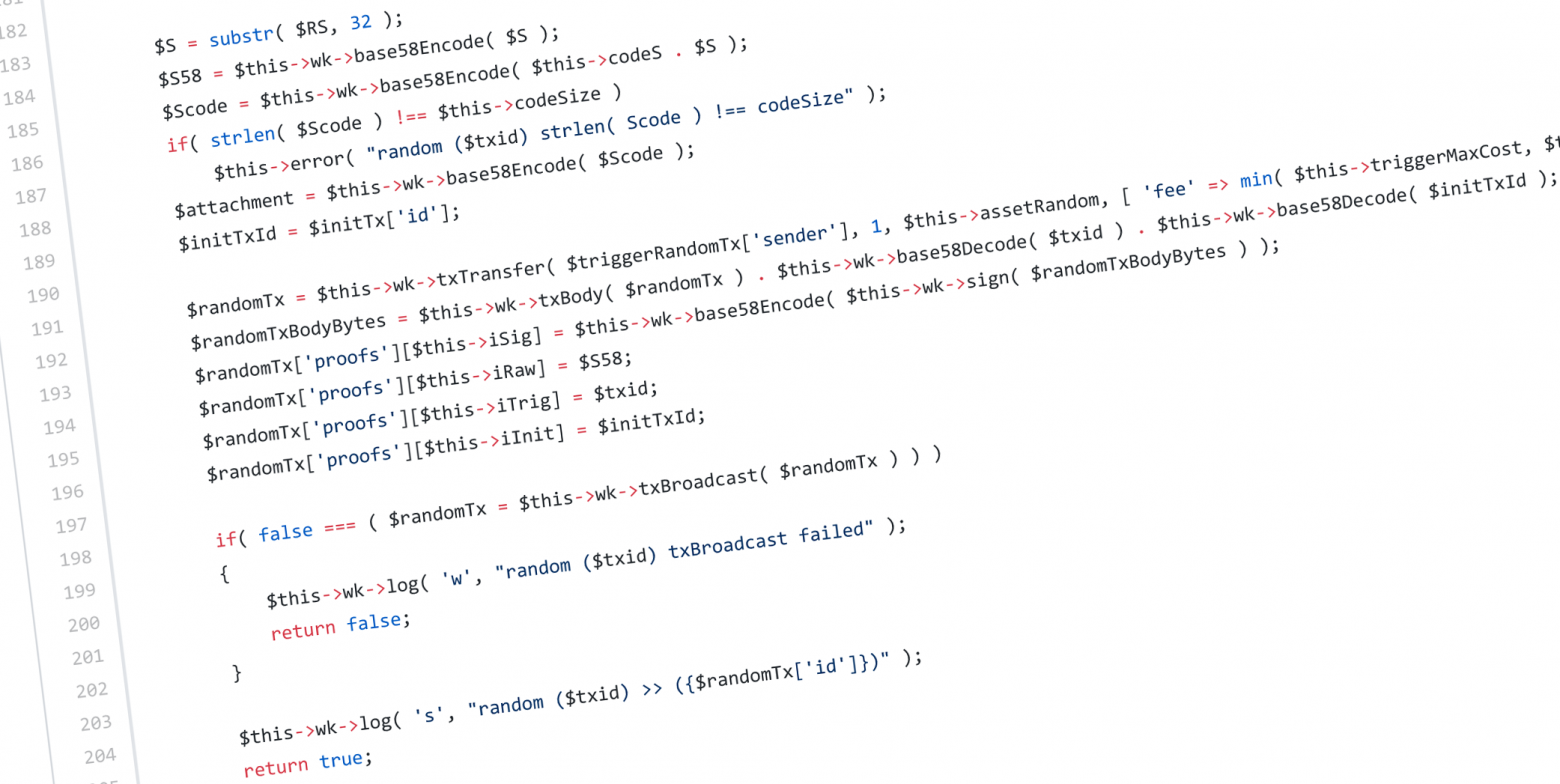

From idea to implementation: modifying the existing elliptic curve signature scheme to be deterministic and building functions on top of it to obtain pseudorandom numbers that are verifiable within the blockchain.

In this post we'd like to share an interesting way of dealing with the configuration of a distributed system.

The configuration is represented directly in the Scala language in a type-safe manner. An example implementation is described in detail. Various aspects of the proposal are discussed, including its influence on the overall development process.

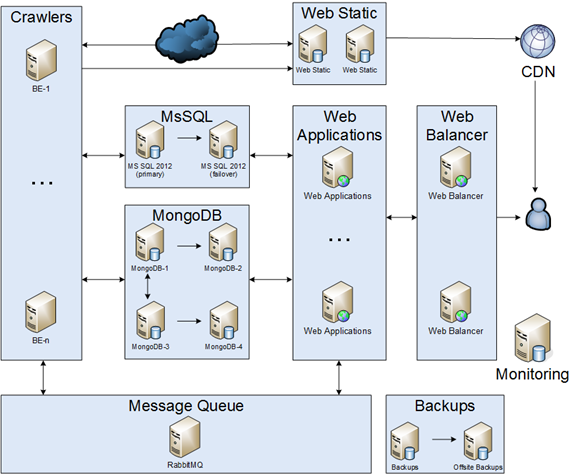

I'd like to share my story about migrating a monolithic application to microservices. Please keep in mind that this took place between 2012 and 2014. This is a transcript of my presentation at dotnetconf(RU). I'm going to share a story about changing every part of the infrastructure.

Kata Containers is now the main way to run containers in an isolated virtual machine for greater security. I'll show how to install it for use with Containerd and Docker while still being able to switch between release versions.

Secret Management and Why It’s Important

Hi! My name is Evgeny, and I work as a Lead DevOps at Exante. In this article, I will discuss the practical experience of setting up a high-availability HashiCorp Vault with a GCP storage backend and auto unseal in Kubernetes (K8s).

Our infrastructure used to consist of thousands of virtual and physical machines hosting our legacy services. Configuration files, including plain-text secrets, were distributed across these machines, both manually and with the help of Chef.

We decided to change the company’s strategy for several reasons: to accelerate code delivery processes, ensure continuous delivery, securely store secrets, and speed up the deployment of new applications and environments.

We decided to transition our product to a cloud-native model, which required us to change our approach to development and infrastructure. This involved refactoring our legacy services, adopting a microservices architecture, deploying services in cloud-based Kubernetes (K8s), and utilizing managed resources like Redis and PostgreSQL.

In our situation, everything needed to change—from applications and infrastructure to how we distribute configs and secrets. We chose Google as our cloud provider and HashiCorp Vault for secret storage. We've since made significant progress on this journey.

Why HashiCorp Vault?

There were several reasons:

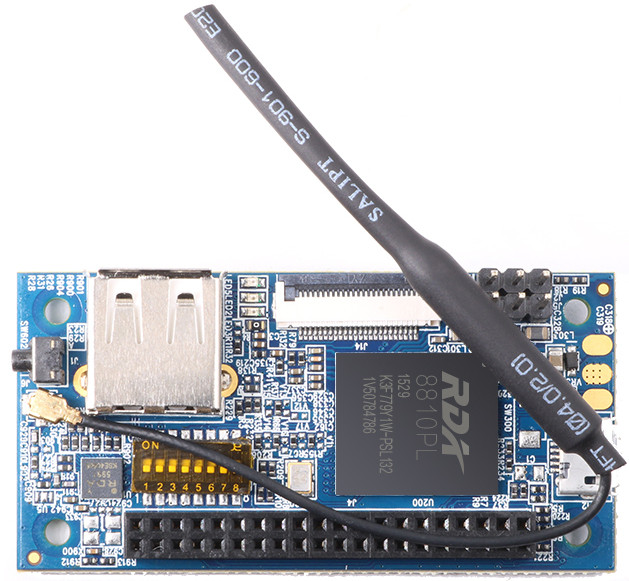

Hello, my name is Dmitry. I once bought an "Orange PI i96", but unfortunately the manufacturer has not updated its firmware for a very long time. The kernel version in the latest firmware is 3.10.62, while the current kernel at the time of writing this article (the Russian version) is 6.5.1. So I decided to build my own firmware from scratch, entirely from source.

Working in technical support came with the duty, on top of all the other tasks, of monitoring the communication channels. This was done through the Grafana service, which pulled the required metrics from Zabbix. But since the nature of the work meant that you are not always at your desk, I had the idea of automating this a little and receiving notifications on my phone or, for example, in a messenger whenever a communication channel goes down. However, I had no access to the Zabbix system and no extended access to Grafana either.

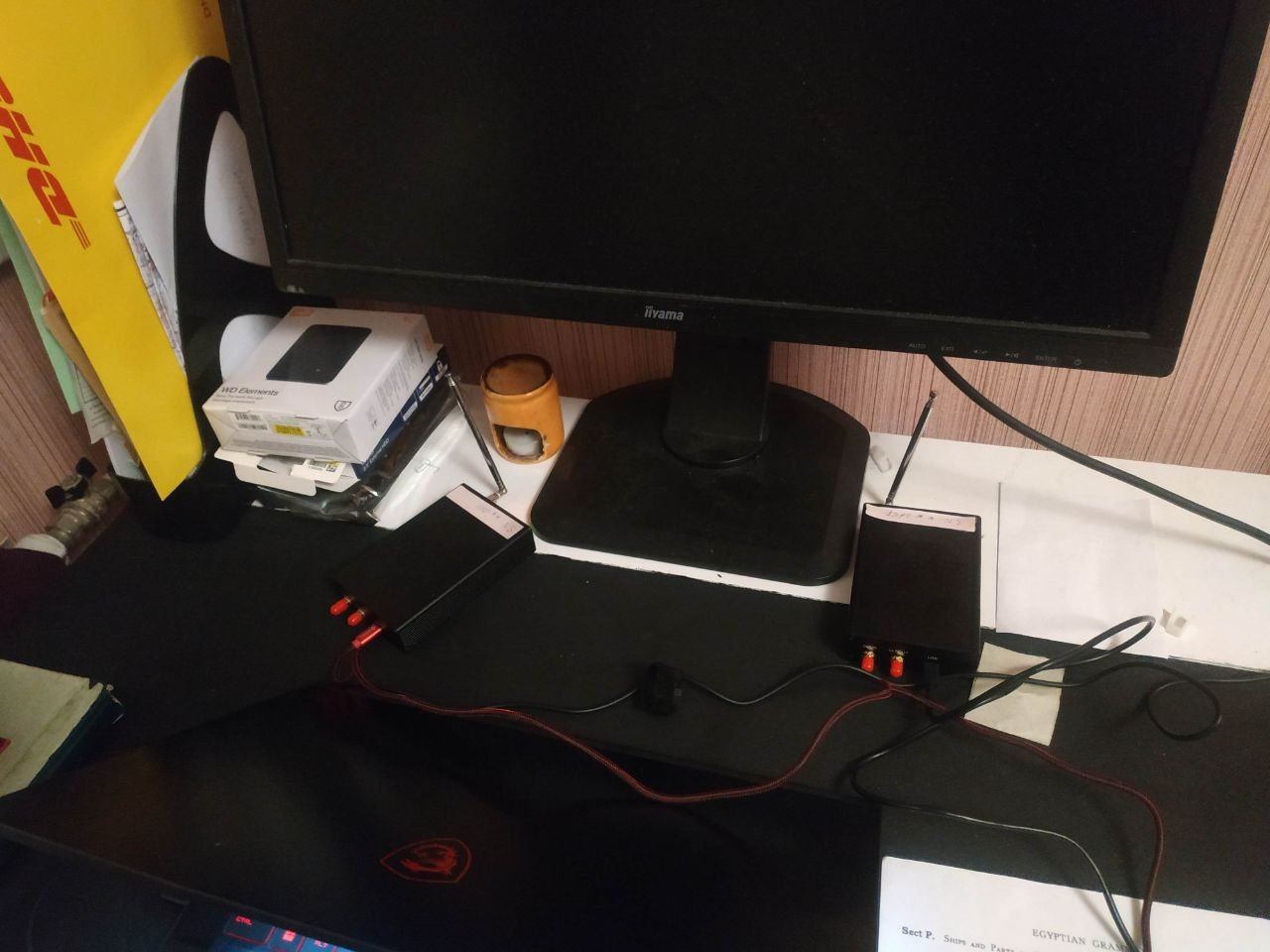

In this post I'll share my experience of tuning a WiFi physical channel. The channel was implemented on a software-defined radio (SDR) platform. WiFi looks like a very complicated thing, standardized over hundreds of pages. Could a non-expert with a PC and a couple of $100 devices (HackRFs) somehow improve it? Here I try to develop a WiFi optimization approach that is largely agnostic of protocol implementation details. There's some math and Python programming in it.

In 2021, we were contacted by an industrial plant that needed a system for analyzing its production processes. The plant's team had studied ready-made solutions, but none of the analytics systems fully covered the required functionality. So they turned to us with a request to develop a custom analytics system that would collect data from all machines and allow it to be analyzed to reveal bottlenecks in production. For this project, we created a data-driven UI/UX design and also developed a web-based interface for the equipment monitoring system.

Some time ago I worked on the development of a matching engine for a cryptocurrency exchange. That was an interesting and challenging experience. I developed it in pure C++ from scratch. Testing it is also quite a challenging task. You need to get data for testing, run the tests, collect statistics, and finally analyze the collected data to find weak points and bottlenecks. I want to focus on testing the C++ matching engine and show how testing can give insights for optimizations even without changing the code. The matching engine I developed can handle more than 1,000,000 TPS (transactions per second) and is 10 times faster than the matching engine of the Binance cryptocurrency exchange (see one post on Binance Blog).